[MM32F5270 Dev Board Trial] Part 6: Playing "星辰大海" (Stars and Sea) over I2S with the Star (星辰) core + a Chinese RTOS

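This article is part of the MM32F5270 development board evaluation campaign organized by the Aijishu (极术) community and MindMotion (灵动). For more board trial campaigns, follow the Aijishu community website. Author: Magicoe是攻城狮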

This demo and the idea behind it were inspired by an article from an expert:

https://aijishu.com/a/1060000…

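My idea was simple: echo the name of the 星辰 (Star) core. "星辰大海" (Stars and Sea) is a pretty nice song, and "our goal is the stars and the sea." Aim high, and it should be achievable.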

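First, the audio playback interface, which is usually I2S. The article above covers it in detail, so I won't repeat it here. My focus is slightly different: playing music on RT-Thread.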
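For a feel of the numbers involved, here is a small sketch of the I2S clock arithmetic for the configuration the driver below uses (44.1 kHz, 16-bit, stereo). The helper names are hypothetical, not part of the MM32 HAL, and the 256 × fs master-clock ratio is an assumption that is common for audio codecs but should be checked against the actual codec and the MM32 reference manual.

```c
#include <stdint.h>

/* Bit clock: every bit of every channel's sample takes one BCLK cycle.
 * Assumes the data width equals the channel slot width (16-bit data in a
 * 16-bit slot), matching the I2S_DataWidth_16b setup in the driver. */
uint32_t i2s_bclk_hz(uint32_t sample_rate, uint32_t channels, uint32_t bits)
{
    return sample_rate * channels * bits;
}

/* Master clock, assuming the common MCLK = 256 x fs ratio (an assumption,
 * not taken from the MM32 documentation). */
uint32_t i2s_mclk_hz(uint32_t sample_rate)
{
    return 256u * sample_rate;
}
```

At 44.1 kHz this gives a 1.4112 MHz bit clock and an 11.2896 MHz master clock, while the word-select (WS) line toggles at the sample rate itself.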

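RT-Thread provides a WAV playback package called wavplayer. I didn't feel like porting the Helix MP3 library; plenty of people have probably ported it already, so I'm not going down that road. My core focus is IoT, and I want to pull back to my main front as early as possible.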
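A WAV player has to read the sample rate, channel count and sample width from the file header before it can configure the sound device. The sketch below is not wavplayer's code; it is a minimal, hypothetical parser (`wav_parse`, `wav_matches_driver` and `struct wav_info` are illustrative names) that walks the RIFF chunks of a little-endian PCM WAV file held in memory. The compatibility check assumes the fixed 44.1 kHz / 16-bit / stereo I2S setup of the driver below.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical summary of the fields needed to configure the sound device. */
struct wav_info {
    uint16_t format;          /* 1 = PCM */
    uint16_t channels;
    uint32_t sample_rate;
    uint16_t bits_per_sample;
    uint32_t data_offset;     /* byte offset of the PCM samples */
    uint32_t data_size;       /* size of the PCM samples in bytes */
};

static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }
static uint32_t rd32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Walks the RIFF chunks in buf and fills info.
 * Returns 0 for a PCM WAV, -1 otherwise (or if the header is truncated). */
int wav_parse(const uint8_t *buf, uint32_t len, struct wav_info *info)
{
    uint32_t pos = 12;        /* first chunk follows "RIFF" <size> "WAVE" */
    int have_fmt = 0;

    if (len < 12 || memcmp(buf, "RIFF", 4) != 0 || memcmp(buf + 8, "WAVE", 4) != 0)
        return -1;

    while (pos + 8 <= len) {
        const uint8_t *chunk = buf + pos;
        uint32_t size = rd32(chunk + 4);

        if (memcmp(chunk, "data", 4) == 0) {
            /* Samples start here; they may extend past this header buffer. */
            info->data_offset = pos + 8;
            info->data_size   = size;
            return (have_fmt && info->format == 1) ? 0 : -1;
        }
        if (size > len - pos - 8)     /* non-data chunk runs past the buffer */
            return -1;
        if (memcmp(chunk, "fmt ", 4) == 0 && size >= 16) {
            info->format          = rd16(chunk + 8);
            info->channels        = rd16(chunk + 10);
            info->sample_rate     = rd32(chunk + 12);
            info->bits_per_sample = rd16(chunk + 22);
            have_fmt = 1;
        }
        pos += 8 + size + (size & 1u);  /* chunks are padded to even sizes */
    }
    return -1;
}

/* The driver hard-codes 44.1 kHz, 16-bit, stereo I2S; any other format would
 * play at the wrong pitch or as noise. */
int wav_matches_driver(const struct wav_info *info)
{
    return info->sample_rate == 44100u && info->bits_per_sample == 16u &&
           info->channels == 2u;
}
```

Walking the chunks instead of assuming a fixed 44-byte header matters because many WAV files carry extra chunks (for example `LIST` metadata) before `data`.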

The wavplayer download link (of course, if you have time to pull it in with the scripts instead, that works too):

https://github.com/RT-Thread-…

Or from Gitee, though I couldn't find it there…

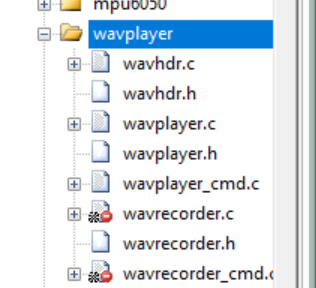

Add the wavplayer source files to the Keil project.

I don't use the record (recording) feature, so those C files are excluded from the build.

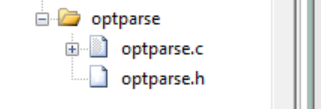

wavplayer depends on RT-Thread's optparse definitions and functions, so add optparse.c and its include path to the project.

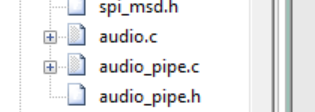

Next comes the code for RT-Thread's audio framework, in the components folder.

The main files are audio.c and audio_pipe.c.

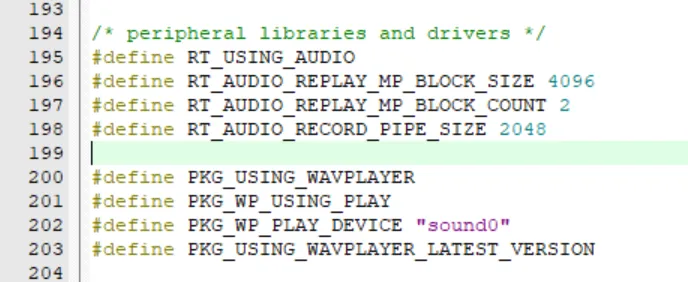

To get this working, modifying rtconfig.h is unavoidable: we need to enable the following macro definitions.

With everything in place, we reach the main part of this article, and the code I was still fighting with at 2 a.m.: drv_sound.c.

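I'll list the source file first and then explain my approach. Get it wrong and you'll hear a crackling "pop pop pop". Crackling usually comes from one of two things: the DMA transfers are not continuous, or the data itself is wrong. Searching through posts online helps a lot.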
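To put the "non-continuous DMA" cause in numbers: with the driver's `TX_FIFO_SIZE` of 8192 bytes split into two 4096-byte halves and 16-bit stereo at 44.1 kHz, each half lasts about 23.2 ms. That is the window the playback thread has to refill the half the DMA just finished before the DMA wraps back to it. The helper below is a hypothetical sketch of that arithmetic (frames divided by sample rate), not part of the driver.

```c
#include <stdint.h>

/* Time, in microseconds, the DMA needs to play one half of a ping-pong
 * buffer. The refill of the other half must complete within this window,
 * otherwise stale samples are replayed and the output crackles. */
uint32_t half_buffer_us(uint32_t half_bytes, uint32_t sample_rate,
                        uint32_t channels, uint32_t bytes_per_sample)
{
    uint32_t frames = half_bytes / (channels * bytes_per_sample);
    /* 64-bit intermediate so frames * 1e6 cannot overflow. */
    return (uint32_t)(((uint64_t)frames * 1000000u) / sample_rate);
}
```

For the driver's numbers, 4096 bytes / 4 bytes per stereo frame = 1024 frames, and 1024 / 44100 s is roughly 23.2 ms. Doubling `TX_FIFO_SIZE` doubles that margin at the cost of RAM.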

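I took shortcuts in the code: I didn't implement setting the audio sample rate, setting the volume (the board doesn't support it), or the other features I found tedious. As long as it plays, that's good enough.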
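Since the board has no hardware volume control, one option (not something implemented in this article) is to scale the samples in software, for example on the half of `g_AudioBuf` that `sound_transmit` has just filled, using the `volume` value that `sound_configure` already stores. This is a minimal sketch; the function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical software volume: scale signed 16-bit PCM samples in place
 * by volume / 100, with volume clamped to 0..100. */
void pcm16_apply_volume(int16_t *samples, size_t count, uint8_t volume)
{
    if (volume > 100u)
        volume = 100u;
    for (size_t i = 0; i < count; i++) {
        /* 32-bit intermediate; the result never exceeds the original
         * magnitude, so no clipping is needed. Division truncates toward 0. */
        int32_t scaled = (int32_t)samples[i] * volume / 100;
        samples[i] = (int16_t)scaled;
    }
}
```

A linear scale like this does not sound perceptually even (most of the audible change happens near the bottom of the range), so a real player might map the 0..100 setting through a small dB lookup table instead.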

```c
/*
 * Copyright (c) 2020-2021, Bluetrum Development Team
 *
 * SPDX-License-Identifier: Apache-2.0
 *
 * Date           Author       Notes
 * 2020-12-12     greedyhao    first implementation
 */

#include <rtthread.h>
#include "rtdevice.h"

#define DBG_TAG "drv.snd_dev"
#define DBG_LVL DBG_ERROR
#include <rtdbg.h>

#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#include "hal_common.h"
#include "hal_rcc.h"
#include "hal_i2s.h"
#include "hal_dma.h"
#include "hal_dma_request.h"
#include "hal_gpio.h"
#include "clock_init.h"

#define SAI_AUDIO_FREQUENCY_48K ((uint32_t)48000u)
#define SAI_AUDIO_FREQUENCY_44K ((uint32_t)44100u)
#define SAI_AUDIO_FREQUENCY_38K ((uint32_t)38000u)

/* Whole DMA buffer: two ping-pong halves of TX_FIFO_SIZE/2 bytes each. */
#define TX_FIFO_SIZE (4096*2)

struct sound_device
{
    struct rt_audio_device audio;
    struct rt_audio_configure replay_config;
    rt_uint8_t *tx_fifo;
    rt_uint8_t volume;
};

static struct sound_device snd_dev = {0};

#pragma pack (4)
/* Half of g_AudioBuf the next sound_transmit() may overwrite (0 = first,
 * 1 = second). Written by the DMA ISR. */
volatile uint8_t g_PlayIndex = 0;
/* DMA buffer at a fixed address (armlink ".ARM.__at_<addr>" section). */
uint8_t g_AudioBuf[TX_FIFO_SIZE] __attribute__((section(".ARM.__at_0x20000000")));
#pragma pack()

/* DMA1 channel 5 IRQ: SPI2 (I2S) TX half-transfer / transfer-complete. */
void DMA1_CH5_IRQHandler(void)
{
    rt_interrupt_enter();

    /* Whole buffer sent: DMA wraps to the first half, second half is free. */
    if (0u != (DMA_GetChannelInterruptStatus(DMA1, DMA_REQ_DMA1_SPI2_TX) & DMA_CHN_INT_XFER_DONE))
    {
        DMA_ClearChannelInterruptStatus(DMA1, DMA_REQ_DMA1_SPI2_TX, DMA_CHN_INT_XFER_DONE);
        DMA_EnableChannel(DMA1, DMA_REQ_DMA1_SPI2_TX, true);
        g_PlayIndex = 1;
        rt_audio_tx_complete(&snd_dev.audio);
    }

    /* First half sent: DMA moves on to the second half, first half is free. */
    if (0u != (DMA_CHN_INT_XFER_HALF_DONE & DMA_GetChannelInterruptStatus(DMA1, DMA_REQ_DMA1_SPI2_TX)))
    {
        DMA_ClearChannelInterruptStatus(DMA1, DMA_REQ_DMA1_SPI2_TX, DMA_CHN_INT_XFER_HALF_DONE);
        g_PlayIndex = 0;
        rt_audio_tx_complete(&snd_dev.audio);
    }

    rt_interrupt_leave();
}

/* apll = sample rate * ADPLL_DIV * 512 */
/* Audio init: SPI2/I2S pins, circular TX DMA and I2S master. out_spr is unused. */
void adpll_init(uint8_t out_spr)
{
    GPIO_Init_Type gpio_init;
    (void)out_spr;

    /* SPI2. */
    RCC_EnableAPB1Periphs(RCC_APB1_PERIPH_SPI2, true);
    RCC_ResetAPB1Periphs(RCC_APB1_PERIPH_SPI2);

    /* PD3 - I2S_CK. */
    gpio_init.Pins    = GPIO_PIN_3;
    gpio_init.PinMode = GPIO_PinMode_AF_PushPull;
    gpio_init.Speed   = GPIO_Speed_10MHz;
    GPIO_Init(GPIOD, &gpio_init);
    GPIO_PinAFConf(GPIOD, gpio_init.Pins, GPIO_AF_5);

    /* PE6 - I2S_SD. */
    gpio_init.Pins    = GPIO_PIN_6;
    gpio_init.PinMode = GPIO_PinMode_AF_PushPull;
    gpio_init.Speed   = GPIO_Speed_10MHz;
    GPIO_Init(GPIOE, &gpio_init);
    GPIO_PinAFConf(GPIOE, gpio_init.Pins, GPIO_AF_5);

    /* PE4 - I2S_WS. */
    gpio_init.Pins    = GPIO_PIN_4;
    gpio_init.PinMode = GPIO_PinMode_AF_PushPull;
    gpio_init.Speed   = GPIO_Speed_10MHz;
    GPIO_Init(GPIOE, &gpio_init);
    GPIO_PinAFConf(GPIOE, gpio_init.Pins, GPIO_AF_5);

    /* PE5 - I2S_MCK. */
    gpio_init.Pins    = GPIO_PIN_5;
    gpio_init.PinMode = GPIO_PinMode_AF_PushPull;
    gpio_init.Speed   = GPIO_Speed_10MHz;
    GPIO_Init(GPIOE, &gpio_init);
    GPIO_PinAFConf(GPIOE, gpio_init.Pins, GPIO_AF_5);

    /* Set up the DMA for I2S TX: g_AudioBuf -> SPI2 data register, auto-reload. */
    DMA_Channel_Init_Type dma_channel_init;
    dma_channel_init.MemAddr           = (uint32_t)(g_AudioBuf);
    dma_channel_init.MemAddrIncMode    = DMA_AddrIncMode_IncAfterXfer;
    dma_channel_init.PeriphAddr        = I2S_GetTxDataRegAddr(SPI2); /* use tx data register here. */
    dma_channel_init.PeriphAddrIncMode = DMA_AddrIncMode_StayAfterXfer;
    dma_channel_init.Priority          = DMA_Priority_Highest;
    dma_channel_init.XferCount         = TX_FIFO_SIZE/2; /* 16-bit items: covers all TX_FIFO_SIZE bytes */
    dma_channel_init.XferMode          = DMA_XferMode_MemoryToPeriph;
    dma_channel_init.ReloadMode        = DMA_ReloadMode_AutoReload;
    dma_channel_init.XferWidth         = DMA_XferWidth_16b;
    DMA_InitChannel(DMA1, DMA_REQ_DMA1_SPI2_TX, &dma_channel_init);

    /* Enable the transfer-done and half-transfer interrupts. */
    DMA_EnableChannelInterrupts(DMA1, DMA_REQ_DMA1_SPI2_TX, DMA_CHN_INT_XFER_DONE, true);
    DMA_EnableChannelInterrupts(DMA1, DMA_REQ_DMA1_SPI2_TX, DMA_CHN_INT_XFER_HALF_DONE, true);
    NVIC_EnableIRQ(DMA1_CH5_IRQn);

    /* Set up the I2S master: 44.1 kHz, 16-bit, Philips format, TX only. */
    I2S_Master_Init_Type i2s_master_init;
    i2s_master_init.ClockFreqHz = CLOCK_APB1_FREQ;
    i2s_master_init.SampleRate  = SAI_AUDIO_FREQUENCY_44K;
    i2s_master_init.DataWidth   = I2S_DataWidth_16b;
    i2s_master_init.Protocol    = I2S_Protocol_PHILIPS;
    i2s_master_init.EnableMCLK  = true;
    i2s_master_init.Polarity    = I2S_Polarity_1;
    i2s_master_init.XferMode    = I2S_XferMode_TxOnly;
    I2S_InitMaster(SPI2, &i2s_master_init);
    I2S_EnableDMA(SPI2, true);
    I2S_Enable(SPI2, true);
    DMA_EnableChannel(DMA1, DMA_REQ_DMA1_SPI2_TX, true);
}

static rt_err_t sound_getcaps(struct rt_audio_device *audio, struct rt_audio_caps *caps)
{
    rt_err_t result = RT_EOK;
    struct sound_device *snd_dev = RT_NULL;

    RT_ASSERT(audio != RT_NULL);
    snd_dev = (struct sound_device *)audio->parent.user_data;

    LOG_D("%s:main_type: %d, sub_type: %d", __FUNCTION__, caps->main_type, caps->sub_type);

    switch (caps->main_type)
    {
    case AUDIO_TYPE_QUERY: /* query the types of hw_codec device */
        switch (caps->sub_type)
        {
        case AUDIO_TYPE_QUERY:
            caps->udata.mask = AUDIO_TYPE_OUTPUT | AUDIO_TYPE_MIXER;
            break;
        default:
            result = -RT_ERROR;
            break;
        }
        break;

    case AUDIO_TYPE_OUTPUT: /* provide capabilities of the OUTPUT unit */
        switch (caps->sub_type)
        {
        case AUDIO_DSP_PARAM:
            caps->udata.config.samplerate = snd_dev->replay_config.samplerate;
            caps->udata.config.channels   = snd_dev->replay_config.channels;
            caps->udata.config.samplebits = snd_dev->replay_config.samplebits;
            break;
        case AUDIO_DSP_SAMPLERATE:
            caps->udata.config.samplerate = snd_dev->replay_config.samplerate;
            break;
        case AUDIO_DSP_CHANNELS:
            caps->udata.config.channels = snd_dev->replay_config.channels;
            break;
        case AUDIO_DSP_SAMPLEBITS:
            caps->udata.config.samplebits = snd_dev->replay_config.samplebits;
            break;
        default:
            result = -RT_ERROR;
            break;
        }
        break;

    case AUDIO_TYPE_MIXER: /* report the mixer units */
        switch (caps->sub_type)
        {
        case AUDIO_MIXER_QUERY:
            caps->udata.mask = AUDIO_MIXER_VOLUME;
            break;
        case AUDIO_MIXER_VOLUME:
            /* No hardware volume: report the last value stored by configure. */
            caps->udata.value = snd_dev->volume;
            break;
        default:
            result = -RT_ERROR;
            break;
        }
        break;

    default:
        result = -RT_ERROR;
        break;
    }

    return result;
}

static rt_err_t sound_configure(struct rt_audio_device *audio, struct rt_audio_caps *caps)
{
    rt_err_t result = RT_EOK;
    struct sound_device *snd_dev = RT_NULL;

    RT_ASSERT(audio != RT_NULL);
    snd_dev = (struct sound_device *)audio->parent.user_data;

    switch (caps->main_type)
    {
    case AUDIO_TYPE_MIXER:
        switch (caps->sub_type)
        {
        case AUDIO_MIXER_VOLUME:
        {
            /* The board has no volume control: only remember the value. */
            rt_uint8_t volume = caps->udata.value;
            snd_dev->volume = volume;
            LOG_D("set volume %d", volume);
            break;
        }
        case AUDIO_MIXER_EXTEND:
            break;
        default:
            result = -RT_ERROR;
            break;
        }
        break;

    case AUDIO_TYPE_OUTPUT:
        /* I2S is fixed at 44.1 kHz / 16-bit / stereo in adpll_init();
         * these cases only record what the player requested. */
        switch (caps->sub_type)
        {
        case AUDIO_DSP_PARAM:
            snd_dev->replay_config.samplerate = caps->udata.config.samplerate;
            snd_dev->replay_config.channels   = caps->udata.config.channels;
            snd_dev->replay_config.samplebits = caps->udata.config.samplebits;
            LOG_D("set samplerate %d", snd_dev->replay_config.samplerate);
            break;
        case AUDIO_DSP_SAMPLERATE:
            snd_dev->replay_config.samplerate = caps->udata.config.samplerate;
            LOG_D("set samplerate %d", snd_dev->replay_config.samplerate);
            break;
        case AUDIO_DSP_CHANNELS:
            snd_dev->replay_config.channels = caps->udata.config.channels;
            LOG_D("set channels %d", snd_dev->replay_config.channels);
            break;
        case AUDIO_DSP_SAMPLEBITS:
            /* not supported by the hardware setup, just recorded */
            snd_dev->replay_config.samplebits = caps->udata.config.samplebits;
            break;
        default:
            result = -RT_ERROR;
            break;
        }
        break;

    default:
        break;
    }

    return result;
}

static rt_err_t sound_init(struct rt_audio_device *audio)
{
    RT_ASSERT(audio != RT_NULL);

    /* Pins, DMA and I2S; sample rate, channels and volume are not applied. */
    adpll_init(0);

    return RT_EOK;
}

static rt_err_t sound_start(struct rt_audio_device *audio, int stream)
{
    RT_ASSERT(audio != RT_NULL);

    if (stream == AUDIO_STREAM_REPLAY)
    {
        LOG_D("open sound device");
        DMA_EnableChannel(DMA1, DMA_REQ_DMA1_SPI2_TX, true);
    }

    return RT_EOK;
}

static rt_err_t sound_stop(struct rt_audio_device *audio, int stream)
{
    RT_ASSERT(audio != RT_NULL);

    if (stream == AUDIO_STREAM_REPLAY)
    {
        LOG_D("close sound device");
        DMA_EnableChannel(DMA1, DMA_REQ_DMA1_SPI2_TX, false);
    }

    return RT_EOK;
}

/* Called by the audio framework with one block (TX_FIFO_SIZE/2 bytes) of PCM:
 * copy it into the half of g_AudioBuf the DMA has just finished reading. */
rt_size_t sound_transmit(struct rt_audio_device *audio, const void *writeBuf,
                         void *readBuf, rt_size_t size)
{
    (void)audio;
    (void)readBuf;

    /* Never write past the free half. */
    if (size > TX_FIFO_SIZE/2)
        size = TX_FIFO_SIZE/2;

    if (g_PlayIndex == 0)
        memcpy(&g_AudioBuf[0], writeBuf, size);
    else
        memcpy(&g_AudioBuf[TX_FIFO_SIZE/2], writeBuf, size);

    return size;
}

static void sound_buffer_info(struct rt_audio_device *audio, struct rt_audio_buf_info *info)
{
    struct sound_device *snd_dev = RT_NULL;

    RT_ASSERT(audio != RT_NULL);
    snd_dev = (struct sound_device *)audio->parent.user_data;

    /**
     *               TX_FIFO
     * +----------------+----------------+
     * |     block1     |     block2     |
     * +----------------+----------------+
     *  \  block_size  /
     */
    info->buffer      = snd_dev->tx_fifo;
    info->total_size  = TX_FIFO_SIZE;
    info->block_size  = TX_FIFO_SIZE/2;
    info->block_count = 2;
}

static struct rt_audio_ops ops =
{
    .getcaps     = sound_getcaps,
    .configure   = sound_configure,
    .init        = sound_init,
    .start       = sound_start,
    .stop        = sound_stop,
    .transmit    = sound_transmit,
    .buffer_info = sound_buffer_info,
};

static int rt_hw_sound_init(void)
{
    rt_uint8_t *tx_fifo = RT_NULL;

    tx_fifo = rt_malloc(TX_FIFO_SIZE);
    if (tx_fifo == RT_NULL)
    {
        rt_kprintf("Sound: can't alloc tx_fifo\r\n");
        return -RT_ENOMEM;
    }
    rt_memset(&g_AudioBuf[0], 0x00, TX_FIFO_SIZE);
    rt_memset(tx_fifo, 0, TX_FIFO_SIZE);
    snd_dev.tx_fifo = tx_fifo;

    /* init default configuration */
    snd_dev.replay_config.samplerate = SAI_AUDIO_FREQUENCY_44K;
    snd_dev.replay_config.channels   = 2;
    snd_dev.replay_config.samplebits = 16;
    snd_dev.volume                   = 55;

    /* register snd_dev device */
    snd_dev.audio.ops = &ops;
    return rt_audio_register(&snd_dev.audio, "sound0", RT_DEVICE_FLAG_WRONLY, &snd_dev);
}
INIT_DEVICE_EXPORT(rt_hw_sound_init);
```

Moving the WAV audio data with DMA gave me a lot of trouble. In the end I gave the DMA one large buffer and split it into two halves, enabling both the half-transfer interrupt and the transfer-complete interrupt: the DMA interrupts once when half of the buffer has been sent and again when the whole buffer has been sent.

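At the half-transfer point, the WAV decoding task is notified to read the next chunk into the first half of the buffer; at transfer complete, it is notified to read the next chunk into the second half. This ping-pong buffer scheme is what keeps WAV playback continuous.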
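The ping-pong handshake can be checked on a PC without hardware. The host-side simulation below is a sketch under simplifying assumptions (tiny halves, a refill that always finishes in time, hypothetical names such as `pingpong_run`); it is not the driver code, but it follows the same rule as the driver's ISR: after the half-transfer event the first half is refilled, after the transfer-complete event the second half is.

```c
#include <stdint.h>
#include <string.h>

#define HALF_SAMPLES 8u   /* tiny halves keep the simulation easy to follow */

/* Simulation state: one circular DMA buffer split into two halves. */
struct pingpong {
    int16_t buf[2u * HALF_SAMPLES];
    uint8_t refill_half;   /* plays the role of g_PlayIndex */
    uint32_t next_block;   /* next block number the "decoder" produces */
};

/* Decoder side: fill one half with the next block. Every sample of block n
 * is set to n so the playback order can be checked afterwards. */
static void fill_half(struct pingpong *pp, uint8_t half)
{
    for (uint32_t i = 0; i < HALF_SAMPLES; i++)
        pp->buf[half * HALF_SAMPLES + i] = (int16_t)pp->next_block;
    pp->next_block++;
}

/* Streams `blocks` halves through a simulated auto-reload DMA and records the
 * block number each half carried when it was played (all samples in a half
 * are equal, so the first one is enough). */
void pingpong_run(int16_t *played, uint32_t blocks)
{
    struct pingpong pp;
    memset(&pp, 0, sizeof pp);
    fill_half(&pp, 0);     /* prime both halves before starting the DMA */
    fill_half(&pp, 1);

    uint32_t pos = 0;      /* simulated DMA read position, in samples */
    for (uint32_t b = 0; b < blocks; b++) {
        /* The DMA streams out one whole half ... */
        played[b] = pp.buf[pos];
        pos = (pos + HALF_SAMPLES) % (2u * HALF_SAMPLES);
        /* ... then raises HALF_DONE (now reading the second half, so the
         * first is free) or XFER_DONE (wrapped, so the second is free). */
        pp.refill_half = (pos == HALF_SAMPLES) ? 0u : 1u;
        fill_half(&pp, pp.refill_half);
    }
}
```

Blocks come out strictly in order (0, 1, 2, ...). On the real board the refill runs in the playback thread rather than inside the ISR; if it misses the deadline, the DMA replays the previous block, which is exactly the crackling described earlier.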

OK, build, download and run; the result is in the video. The command to play a WAV file is `wavplayer -s <filename>`.