Certified Kubernetes Administrator (CKA) Exam Notes (Part 4)

Preface

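- I am preparing for the CKA certification. I signed up for a training course and spent quite a bit of money on it, so I am determined to pass.

- This post is a set of notes compiled after attending the course, and it is suitable for review.

- Topics covered in this post: Helm (installation, repositories, common usage, private repositories) and Kubernetes monitoring (Metrics Server, Prometheus + Grafana + NodeExporter).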

The meaning of living is learning to live truthfully; the meaning of life is searching for the meaning of living. -----山河已无恙

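Helm

Helm bundles many resource definitions, such as Services and Deployments, into a single package that is defined once and kept in a repository for unified management, which makes it easy to deploy the same application on other machines. My personal understanding is that it resembles roles in Ansible, the npm package manager in front-end projects, or build tools such as Maven in back-end projects. Just as Ansible uses roles to bundle playbooks for reuse, Helm bundles the YAML files of Kubernetes resource objects for reuse.

Helm is a project incubated and managed by the CNCF. It is used to define, install, and upgrade complex applications deployed on Kubernetes. Helm describes application software in the form of Charts, which makes it easy to create, version, share, and publish complex applications.

Key Helm concepts

Chart: a Helm package. It contains the tools and resource definitions needed to run an application, and may also contain service definitions for the Kubernetes cluster. It is similar to the rhel-system-roles package in Ansible.

Release: an instance of a Chart running on a Kubernetes cluster. The same Chart can be installed multiple times on one cluster.

Repository: a place to store and share Charts. Put simply, Helm's main job is to find the required Chart in a repository and then install it onto the Kubernetes cluster as a Release.

Helm has to be installed before use; the release package can be downloaded from GitHub.

Installing Helm

Package download: https://github.com/helm/helm/releases

Extract and install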

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$ tar zxf helm-v3.2.1-linux-amd64.tar.gz
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$ cd linux-amd64/
    ┌──[root@vms81.liruilongs.github.io]-[~/linux-amd64]
    └─$ ls
    helm  LICENSE  README.md

Then copy the helm binary to /usr/local/bin/. Once that is done, the helm command is available.

    ┌──[root@vms81.liruilongs.github.io]-[~/linux-amd64]
    └─$ cp helm /usr/local/bin/
    ┌──[root@vms81.liruilongs.github.io]-[~/linux-amd64]
    └─$ ls /usr/local/bin/
    helm

Configure command completion by adding the line source <(helm completion bash) to /etc/profile. Remember to run source /etc/profile afterwards to reload the configuration.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm --help | grep bash
      completion  generate autocompletions script for the specified shell (bash or zsh)
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ vim /etc/profile
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ source /etc/profile
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cat /etc/profile | grep -v ^# | grep source
    source <(kubectl completion bash)
    source <(helm completion bash)
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Verify the installation by checking the Helm version

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm version
    version.BuildInfo{Version:"v3.2.1", GitCommit:"fe51cd1e31e6a202cba7dead9552a6d418ded79a", GitTreeState:"clean", GoVersion:"go1.13.10"}
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Configuring Helm repositories

To use Helm you need to configure chart repositories. Common ones are the Alibaba Cloud and Microsoft Azure mirrors, as well as repositories hosted on GitHub.

- Alibaba Cloud repository: https://apphub.aliyuncs.com

- Microsoft Azure repository: http://mirror.azure.cn/kubernetes/charts/

List all repositories

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo list  # list all repositories
    Error: no repositories to show

Add the repositories

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo add azure http://mirror.azure.cn/kubernetes/charts/
    "azure" has been added to your repositories
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo add ali https://apphub.aliyuncs.com
    "ali" has been added to your repositories

List the repositories that were just added

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo list
    NAME    URL
    azure   http://mirror.azure.cn/kubernetes/charts/
    ali     https://apphub.aliyuncs.com
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Common Helm usage

Common Helm tasks include searching for Charts, installing Charts, customizing Chart configuration, upgrading or rolling back a Release, deleting a Release, creating a custom Chart, and setting up a private repository.
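As a rough orientation, the tasks listed above map onto Helm 3 subcommands roughly as in the sketch below. It only prints the commands (dry run is the default) so that it can be read without a cluster. The `run` and `helm_tour` helpers, the release name `mydb`, and the file `custom-values.yaml` are illustrative assumptions, not part of the original notes.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1 (the default here), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Walk through the usual Release lifecycle.
# $1: release name (placeholder), $2: chart reference such as azure/mysql
helm_tour() {
  local release="$1" chart="$2"
  run helm search repo "${chart##*/}"                        # search for Charts
  run helm show values "$chart"                              # inspect default configuration
  run helm install "$release" "$chart"                       # install a Chart as a Release
  run helm upgrade "$release" "$chart" -f custom-values.yaml # upgrade with custom values (hypothetical file)
  run helm rollback "$release" 1                             # roll back to revision 1
  run helm uninstall "$release"                              # delete the Release
}

helm_tour mydb azure/mysql
```

Setting `DRY_RUN=0` would execute the commands for real, which assumes a configured repository named `azure` and a reachable cluster.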

helm search: search for available Charts

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm search repo mysql
    NAME                             CHART VERSION  APP VERSION  DESCRIPTION
    ali/mysql                        6.8.0          8.0.19       Chart to create a Highly available MySQL cluster
    ali/mysqldump                    2.6.0          2.4.1        A Helm chart to help backup MySQL databases usi...
    ali/mysqlha                      1.0.0          5.7.13       MySQL cluster with a single master and zero or ...
    ali/prometheus-mysql-exporter    0.5.2          v0.11.0      A Helm chart for prometheus mysql exporter with...
    azure/mysql                      1.6.9          5.7.30       DEPRECATED - Fast, reliable, scalable, and easy...
    azure/mysqldump                  2.6.2          2.4.1        DEPRECATED! - A Helm chart to help backup MySQL...
    azure/prometheus-mysql-exporter  0.7.1          v0.11.0      DEPRECATED A Helm chart for prometheus mysql
    ......

Pulling and installing chart packages

A chart can be installed straight from a repository, or pulled to the local machine first and installed from there. Besides a repo/chart reference, helm install also accepts the following sources. In Helm 3 the release name (mydb here) comes before the chart:

- A local Chart archive (helm install mydb mysql-1.6.4.tgz)

- A Chart directory (helm install mydb mysql/)

- A full URL (helm install mydb https://example.com/charts/mysql-1.6.4.tgz)
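To make the accepted chart sources concrete, here is a small sketch that classifies a chart reference purely by its textual shape and prints the matching Helm 3 install command. This is a simplification made for illustration: Helm itself also inspects the local filesystem, and the helper names `chart_ref_kind` and `install_command` are made up for this example.

```shell
#!/usr/bin/env bash
# Classify a chart reference by how it looks (heuristic, not Helm's real logic).
chart_ref_kind() {
  case "$1" in
    http://*|https://*) echo "url" ;;        # full URL to a packaged chart
    *.tgz)              echo "archive" ;;    # local packaged chart
    */)                 echo "directory" ;;  # unpacked chart directory
    */*)                echo "repo-chart" ;; # <repo>/<chart> from a configured repo
    *)                  echo "unknown" ;;
  esac
}

# Print the Helm 3 install command: release name first, then the chart reference.
install_command() {
  local release="$1" ref="$2"
  echo "helm install $release $ref   # source: $(chart_ref_kind "$ref")"
}

install_command mydb azure/mysql
install_command mydb mysql-1.6.4.tgz
install_command mydb mysql/
install_command mydb https://example.com/charts/mysql-1.6.4.tgz
```

If no release name is wanted, Helm 3 offers `--generate-name` instead of the first positional argument.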

Pull the chart package

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm pull azure/mysql --version=1.6.4
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    mysql-1.6.4.tgz

helm install: install a Chart

Install the chart directly from the repository

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ #helm install db azure/mysql --version=1.6.4
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

To see what is inside the pulled chart package, extract it:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ tar zxf mysql-1.6.4.tgz
    .......
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    mysql  mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cd mysql/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ ls
    Chart.yaml  README.md  templates  values.yaml

| File or directory | Description |
|---|---|
| Chart.yaml | YAML file describing the Chart |
| README.md | Optional: README file |
| values.yaml | Default configuration values |
| templates | Optional: templates that, combined with values.yaml, generate Kubernetes manifest files |
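To show how these files fit together, the sketch below writes a minimal chart by hand into a temporary directory. It is an illustration, not the mysql chart from this post: the chart name `demo-chart`, the values, and the single ConfigMap template are all assumptions chosen to keep the example small. `Chart.yaml` uses `apiVersion: v2`, the format for Helm 3 charts.

```shell
#!/usr/bin/env bash
# Create a throwaway chart skeleton; every name below is illustrative.
chart_dir="$(mktemp -d)/demo-chart"
mkdir -p "$chart_dir/templates"

# Chart.yaml: chart metadata (apiVersion, name, and version are required).
cat > "$chart_dir/Chart.yaml" <<'EOF'
apiVersion: v2
name: demo-chart
description: A hand-written example chart
version: 0.1.0
appVersion: "1.0"
EOF

# values.yaml: default values that templates can reference.
cat > "$chart_dir/values.yaml" <<'EOF'
image:
  repository: busybox
  tag: "1.27"
EOF

# templates/: manifests rendered with the values and the release name.
cat > "$chart_dir/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-config
data:
  image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
EOF

find "$chart_dir" -type f | sort
```

With Helm installed, `helm template demo "$chart_dir"` should render the ConfigMap locally without touching a cluster, which is a convenient way to check a template before installing.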

After modifying the files of a downloaded chart, we can repackage it with helm package.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ rm -rf mysql-1.6.4.tgz ; helm package mysql/
    Successfully packaged chart and saved it to: /root/ansible/k8s-helm-create/mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    mysql  mysql-1.6.4.tgz

Next, change the images in the chart to the mysql and busybox images that have already been downloaded.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.82 -m shell -a "docker images | grep mysql"
    192.168.26.82 | CHANGED | rc=0 >>
    mysql                        latest  ecac195d15af  2 months ago  516MB
    mysql                        <none>  9da615fced53  3 months ago  514MB
    hub.c.163.com/library/mysql  latest  9e64176cd8a2  4 years ago   407MB
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.82 -m shell -a "docker images | grep busybox"
    192.168.26.82 | CHANGED | rc=0 >>
    busybox                latest  ffe9d497c324  5 weeks ago   1.24MB
    busybox                <none>  7138284460ff  2 months ago  1.24MB
    busybox                <none>  cabb9f684f8b  2 months ago  1.24MB
    busybox                1.27    6ad733544a63  4 years ago   1.13MB
    yauritux/busybox-curl  latest  69894251bd3c  5 years ago   21.3MB
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ fg
    vim ./k8s-helm-create/mysql/values.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Install from the modified chart. First, check which releases are currently deployed:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm ls
    NAME  NAMESPACE  REVISION  UPDATED  STATUS  CHART  APP VERSION

Run the Chart with helm install

Here we use the mysql chart from earlier to install a MySQL instance.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cd mysql/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ helm install mydb .
    NAME: mydb
    LAST DEPLOYED: Thu Jan 13 01:51:42 2022
    NAMESPACE: liruilong-network-create
    STATUS: deployed
    REVISION: 1
    NOTES:
    MySQL can be accessed via port 3306 on the following DNS name from within your cluster:
    mydb-mysql.liruilong-network-create.svc.cluster.local
    To get your root password run:
        MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace liruilong-network-create mydb-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo)
    To connect to your database:
    1. Run an Ubuntu pod that you can use as a client:
        kubectl run -i --tty ubuntu --image=ubuntu:16.04 --restart=Never -- bash -il
    2. Install the mysql client:
        $ apt-get update && apt-get install mysql-client -y
    3. Connect using the mysql cli, then provide your password:
        $ mysql -h mydb-mysql -p
    To connect to your database directly from outside the K8s cluster:
        MYSQL_HOST=127.0.0.1
        MYSQL_PORT=3306
        # Execute the following command to route the connection:
        kubectl port-forward svc/mydb-mysql 3306
        mysql -h ${MYSQL_HOST} -P${MYSQL_PORT} -u root -p${MYSQL_ROOT_PASSWORD}

Check that the Pod and Service of mydb are running

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ kubectl get pods
    NAME                         READY  STATUS   RESTARTS       AGE
    mydb-mysql-7f8c5c47bd-82cts  1/1    Running  0              55s
    pod1                         1/1    Running  2 (7d17h ago)  9d
    pod2                         1/1    Running  3 (3d3h ago)   9d
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ kubectl get svc
    NAME        TYPE          CLUSTER-IP      EXTERNAL-IP     PORT(S)       AGE
    mydb-mysql  ClusterIP     10.107.17.103   <none>          3306/TCP      62s
    svc1        LoadBalancer  10.106.61.84    192.168.26.240  80:30735/TCP  9d
    svc2        LoadBalancer  10.111.123.194  192.168.26.241  80:31034/TCP  9d
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$

Install a MySQL client and test the connection; it works.

    ┌──[root@vms82.liruilongs.github.io]-[~]
    └─$ yum install mariadb -y
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ mysql -h10.107.17.103 -uroot -ptesting
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 7
    Server version: 5.7.18 MySQL Community Server (GPL)
    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    MySQL [(none)]> use mysql
    Reading table information for completion of table and column names
    You can turn off this feature to get a quicker startup with -A
    Database changed

Delete the release

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ helm del mydb
    release "mydb" uninstalled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$ helm ls
    NAME  NAMESPACE  REVISION  UPDATED  STATUS  CHART  APP VERSION
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/mysql]
    └─$

Setting up a private Repository

Once you build your own Charts, you naturally need a private repository to host them. Below, Nginx is used to set up a simple private Chart repository.

Setting up the repository

Pick a machine and run an Nginx service on it to act as the repository. Note that a host directory must be mounted into Nginx's content directory (here /data is mounted at /usr/share/nginx/html/charts).

    ┌──[root@vms83.liruilongs.github.io]-[~]
    └─$ netstat -ntulp | grep 80
    ┌──[root@vms83.liruilongs.github.io]-[~]
    └─$ docker run -dit --name=helmrepo -p 8080:80 -v /data:/usr/share/nginx/html/charts docker.io/nginx
    7201e001b02602f087105ca6096b0816acb03db02296c35c098a3dfddcb9c8d0
    ┌──[root@vms83.liruilongs.github.io]-[~]
    └─$ docker ps | grep helmrepo
    7201e001b026  nginx  "/docker-entrypoint.…"  16 seconds ago  Up 15 seconds  0.0.0.0:8080->80/tcp, :::8080->80/tcp  helmrepo

Access test

    ┌──[root@vms83.liruilongs.github.io]-[~]
    └─$ curl 127.0.0.1:8080
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    ........

Uploading chart packages

Package the mysql chart from earlier and upload it to the private Helm repository. The index file index.yaml has to be generated locally first.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm package mysql/
    Successfully packaged chart and saved it to: /root/ansible/k8s-helm-create/mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo index . --url http://192.168.26.83:8080/charts
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    index.yaml  mysql  mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cd ..

Upload the index file together with the chart package to the private repository

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.83 -m copy -a "src=./k8s-helm-create/index.yaml dest=/data/"
    192.168.26.83 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "233a0f3837d46af8a50098f1b29aa524b751cb29",
        "dest": "/data/index.yaml",
        "gid": 0,
        "group": "root",
        "md5sum": "66953d9558e44ab2f049dc602600ffda",
        "mode": "0644",
        "owner": "root",
        "size": 843,
        "src": "/root/.ansible/tmp/ansible-tmp-1642011407.72-76313-71345316897038/source",
        "state": "file",
        "uid": 0
    }
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.83 -m copy -a "src=./k8s-helm-create/mysql-1.6.4.tgz dest=/data/"
    192.168.26.83 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "4fddb1c13c71673577570e61f68f926af7255bad",
        "dest": "/data/mysql-1.6.4.tgz",
        "gid": 0,
        "group": "root",
        "md5sum": "929267de36f9be04e0adfb2f9c9f5812",
        "mode": "0644",
        "owner": "root",
        "size": 11121,
        "src": "/root/.ansible/tmp/ansible-tmp-1642011437.58-76780-127185287864942/source",
        "state": "file",
        "uid": 0
    }
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Updating the repository index file

Whenever a new chart package is added to the private repository, the index file has to be regenerated.

helm create: create a custom chart package

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm create liruilonghelm
    Creating liruilonghelm
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    index.yaml  liruilonghelm  mysql  mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm package liruilonghelm/
    Successfully packaged chart and saved it to: /root/ansible/k8s-helm-create/liruilonghelm-0.1.0.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    index.yaml  liruilonghelm  liruilonghelm-0.1.0.tgz  mysql  mysql-1.6.4.tgz

Use the same command to update the index file

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm repo index . --url http://192.168.26.83:8080/charts
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cat index.yaml
    apiVersion: v1
    entries:
      liruilonghelm:
      - apiVersion: v2
        appVersion: 1.16.0
        created: "2022-01-13T02:22:19.442365047+08:00"
        description: A Helm chart for Kubernetes
        digest: abb491f061cccc8879659149d96c99cbc261af59d5fcf8855c5e86251fbd53c1
        name: liruilonghelm
        type: application
        urls:
        - http://192.168.26.83:8080/charts/liruilonghelm-0.1.0.tgz
        version: 0.1.0
      mysql:
      - apiVersion: v1
        appVersion: 5.7.30
        created: "2022-01-13T02:22:19.444985984+08:00"
        description: Fast, reliable, scalable, and easy to use open-source relational database system.
        digest: 29153332e509765010c7e5e240a059550d52b01b31b69f25dd27c136dffec40f
        home: https://www.mysql.com/
        icon: https://www.mysql.com/common/logos/logo-mysql-170x115.png
        keywords:
        - mysql
        - database
        - sql
        maintainers:
        - email: o.with@sportradar.com
          name: olemarkus
        - email: viglesias@google.com
          name: viglesiasce
        name: mysql
        sources:
        - https://github.com/kubernetes/charts
        - https://github.com/docker-library/mysql
        urls:
        - http://192.168.26.83:8080/charts/mysql-1.6.4.tgz
        version: 1.6.4
    generated: "2022-01-13T02:22:19.440764685+08:00"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

The index file has been updated and entries now contains two charts. Upload the new index file and the new chart package:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.83 -m copy -a "src=./k8s-helm-create/index.yaml dest=/data/"
    192.168.26.83 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "dbdc550a24159764022ede9428b9f11a09ccf291",
        "dest": "/data/index.yaml",
        "gid": 0,
        "group": "root",
        "md5sum": "b771d8e50dd49228594f8a566117f8bf",
        "mode": "0644",
        "owner": "root",
        "size": 1213,
        "src": "/root/.ansible/tmp/ansible-tmp-1642012325.1-89511-190591844764611/source",
        "state": "file",
        "uid": 0
    }
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible 192.168.26.83 -m copy -a "src=./k8s-helm-create/liruilonghelm-0.1.0.tgz dest=/data/"
    192.168.26.83 | CHANGED => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": true,
        "checksum": "f7fe8a0a7585adf23e3e23f8378e3e5a0dc13f92",
        "dest": "/data/liruilonghelm-0.1.0.tgz",
        "gid": 0,
        "group": "root",
        "md5sum": "04670f9b7e614d3bc6ba3e133bddae59",
        "mode": "0644",
        "owner": "root",
        "size": 3591,
        "src": "/root/.ansible/tmp/ansible-tmp-1642012352.54-89959-104738456182106/source",
        "state": "file",
        "uid": 0
    }

Deploying an application from a chart in the private repository

Add the private repository

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ helm repo add liruilong_repo http://192.168.26.83:8080/charts
    "liruilong_repo" has been added to your repositories
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ helm repo list
    NAME            URL
    azure           http://mirror.azure.cn/kubernetes/charts/
    ali             https://apphub.aliyuncs.com
    liruilong_repo  http://192.168.26.83:8080/charts

Search the private repository for the chart to install

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ helm search repo mysql | grep liruilong
    liruilong_repo/mysql  1.6.4  5.7.30  Fast, reliable, scalable, and easy to use open-...
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Install the chart from the private repository

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ helm list
    NAME  NAMESPACE  REVISION  UPDATED  STATUS  CHART  APP VERSION
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm install liruilongdb liruilong_repo/mysql
    NAME: liruilongdb
    LAST DEPLOYED: Thu Jan 13 02:42:41 2022
    NAMESPACE: liruilong-network-create
    STATUS: deployed
    REVISION: 1
    NOTES:
    MySQL can be accessed via port 3306 on the following DNS name from within your cluster:
    liruilongdb-mysql.liruilong-network-create.svc.cluster.local
    To get your root password run:
        MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace liruilong-network-create liruilongdb-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo)
    To connect to your database:
    1. Run an Ubuntu pod that you can use as a client:
        kubectl run -i --tty ubuntu --image=ubuntu:16.04 --restart=Never -- bash -il
    2. Install the mysql client:
        $ apt-get update && apt-get install mysql-client -y
    3. Connect using the mysql cli, then provide your password:
        $ mysql -h liruilongdb-mysql -p
    To connect to your database directly from outside the K8s cluster:
        MYSQL_HOST=127.0.0.1
        MYSQL_PORT=3306
        # Execute the following command to route the connection:
        kubectl port-forward svc/liruilongdb-mysql 3306
        mysql -h ${MYSQL_HOST} -P${MYSQL_PORT} -u root -p${MYSQL_ROOT_PASSWORD}

Verify the installation by listing the releases

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm list
    NAME         NAMESPACE                 REVISION  UPDATED                                  STATUS    CHART        APP VERSION
    liruilongdb  liruilong-network-create  1         2022-01-13 02:42:41.537928447 +0800 CST  deployed  mysql-1.6.4  5.7.30
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

While helm install is in progress, the helm status command can be used to track the state of the Release. Helm does not wait for every resource to finish being created, because the Docker images of some Charts are large and take a long time to download and start.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm status liruilongdb
    NAME: liruilongdb
    LAST DEPLOYED: Thu Jan 13 02:42:41 2022
    NAMESPACE: liruilong-network-create
    STATUS: deployed
    REVISION: 1
    NOTES:
    MySQL can be accessed via port 3306 on the following DNS name from within your cluster:
    liruilongdb-mysql.liruilong-network-create.svc.cluster.local
    To get your root password run:
        MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace liruilong-network-create liruilongdb-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo)
    To connect to your database:
    1. Run an Ubuntu pod that you can use as a client:
        kubectl run -i --tty ubuntu --image=ubuntu:16.04 --restart=Never -- bash -il
    2. Install the mysql client:
        $ apt-get update && apt-get install mysql-client -y
    3. Connect using the mysql cli, then provide your password:
        $ mysql -h liruilongdb-mysql -p
    To connect to your database directly from outside the K8s cluster:
        MYSQL_HOST=127.0.0.1
        MYSQL_PORT=3306
        # Execute the following command to route the connection:
        kubectl port-forward svc/liruilongdb-mysql 3306
        mysql -h ${MYSQL_HOST} -P${MYSQL_PORT} -u root -p${MYSQL_ROOT_PASSWORD}

After a Chart is installed successfully, Helm saves the Release data in the release's namespace. Helm 2 used a ConfigMap for this, while Helm 3 (used here) stores it in a Secret by default. The ConfigMap shown below is created by the mysql chart itself and holds its connection test script.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl get configmaps
    NAME                    DATA  AGE
    kube-root-ca.crt        1     12d
    liruilongdb-mysql-test  1     2d19h
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl describe configmaps liruilongdb-mysql-test
    Name:         liruilongdb-mysql-test
    Namespace:    liruilong-network-create
    Labels:       app=liruilongdb-mysql
                  app.kubernetes.io/managed-by=Helm
                  chart=mysql-1.6.4
                  heritage=Helm
                  release=liruilongdb
    Annotations:  meta.helm.sh/release-name: liruilongdb
                  meta.helm.sh/release-namespace: liruilong-network-create
    Data
    ====
    run.sh:
    ----
    @test "Testing MySQL Connection" {
      mysql --host=liruilongdb-mysql --port=3306 -u root -ptesting
    }
    BinaryData
    ====
    Events:  <none>
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Check the status of the created Pod, then delete the release

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl get pods
    NAME                                READY  STATUS   RESTARTS     AGE
    liruilongdb-mysql-5cbf489f65-6ff4q  1/1    Running  1 (56m ago)  26h
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm delete liruilongdb
    release "liruilongdb" uninstalled
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl get pods
    NAME                                READY  STATUS       RESTARTS     AGE
    liruilongdb-mysql-5cbf489f65-6ff4q  1/1    Terminating  1 (57m ago)  26h
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Kubernetes monitoring

Once a Kubernetes platform is up, understanding how the platform and the applications deployed on it are running, and handling major alerts and performance bottlenecks, all depend on a monitoring system.

Early versions of Kubernetes relied on Heapster for complete performance data collection and monitoring. Starting with version 1.8, performance data is exposed through a standardized interface, the Metrics API, and starting with version 1.10 Heapster was replaced by Metrics Server.

In the new Kubernetes monitoring architecture, Metrics Server provides the core metrics (Core Metrics), which cover CPU and memory usage of Nodes and Pods. Monitoring of other, custom metrics (Custom Metrics) is handled by components such as Prometheus.

With plain Docker, container resource usage on a node can be monitored with docker stats.

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$ docker stats
    CONTAINER ID  NAME  CPU %  MEM USAGE / LIMIT  MEM %  NET I/O  BLOCK I/O  PIDS
    781c898eea19  k8s_kube-scheduler_kube-scheduler-vms81.liruilongs.github.io_kube-system_5bd71ffab3a1f1d18cb589aa74fe082b_18  0.15%  23.22MiB / 3.843GiB  0.59%  0B / 0B  0B / 0B  7
    acac8b21bb57  k8s_kube-controller-manager_kube-controller-manager-vms81.liruilongs.github.io_kube-system_93d9ae7b5a4ccec4429381d493b5d475_18  1.18%  59.16MiB / 3.843GiB  1.50%  0B / 0B  0B / 0B  6
    fe97754d3dab  k8s_calico-node_calico-node-skzjp_kube-system_a211c8be-3ee1-44a0-a4ce-3573922b65b2_14  4.89%  94.25MiB / 3.843GiB  2.39%  0B / 0B  0B / 4.1kB  40

With Kubernetes, we can use Metrics Server to monitor the CPU and memory usage of Pods and Nodes.

Metrics Server: cluster performance monitoring

Once deployed, Metrics Server serves Pod and Node monitoring data through the Kubernetes core API Server under the path /apis/metrics.k8s.io/v1beta1.
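The Metrics API path can also be queried directly with `kubectl get --raw`. The sketch below only builds the request paths and prints the corresponding commands, so it can be read without a cluster; the helper name `metrics_path` and the namespace `kube-system` used in the demo are illustrative choices.

```shell
#!/usr/bin/env bash
# Build a Metrics API path for nodes or pods.
# $1: "nodes" or "pods"; $2: optional namespace (pods only)
metrics_path() {
  local kind="$1" ns="${2:-}"
  local base="/apis/metrics.k8s.io/v1beta1"
  case "$kind" in
    nodes) echo "$base/nodes" ;;
    pods)
      if [ -n "$ns" ]; then
        echo "$base/namespaces/$ns/pods"   # pods in one namespace
      else
        echo "$base/pods"                  # pods in all namespaces
      fi
      ;;
    *) echo "unsupported kind: $kind" >&2; return 1 ;;
  esac
}

# Print the commands instead of running them (no cluster is assumed here).
echo "kubectl get --raw $(metrics_path nodes)"
echo "kubectl get --raw $(metrics_path pods kube-system)"
```

On a cluster where Metrics Server is running, the printed commands return JSON documents containing per-node and per-pod CPU and memory usage.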

Installing Metrics Server

The Metrics Server source code and deployment manifests can be found in its GitHub repository:

    curl -Ls https://api.github.com/repos/kubernetes-sigs/metrics-server/tarball/v0.3.6 -o metrics-server-v0.3.6.tar.gz

Related image

    docker pull mirrorgooglecontainers/metrics-server-amd64:v0.3.6

You can download the image yourself. I have already downloaded it, so here I upload it to the nodes and import it directly.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible all -m copy -a "src=./metrics-img.tar dest=/root/metrics-img.tar"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible all -m shell -a "systemctl restart docker "
    192.168.26.82 | CHANGED | rc=0 >>
    192.168.26.83 | CHANGED | rc=0 >>
    192.168.26.81 | CHANGED | rc=0 >>

Import the image with the docker command

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible all -m shell -a "docker load -i /root/metrics-img.tar"
    192.168.26.83 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    192.168.26.81 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    192.168.26.82 | CHANGED | rc=0 >>
    Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$

Modify metrics-server-deployment.yaml

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ mv kubernetes-sigs-metrics-server-d1f4f6f/ metrics
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ cd metrics/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]
    └─$ ls
    cmd                 deploy      hack      OWNERS          README.md          version
    code-of-conduct.md  Gopkg.lock  LICENSE   OWNERS_ALIASES  SECURITY_CONTACTS
    CONTRIBUTING.md     Gopkg.toml  Makefile  pkg             vendor
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics]
    └─$ cd deploy/1.8+/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ ls
    aggregated-metrics-reader.yaml  metrics-apiservice.yaml         resource-reader.yaml
    auth-delegator.yaml             metrics-server-deployment.yaml
    auth-reader.yaml                metrics-server-service.yaml

Here we change the image pull policy. The image cannot be pulled from its registry (or is troublesome to pull), so we loaded it onto the nodes in advance and set imagePullPolicy to IfNotPresent.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ vim metrics-server-deployment.yaml
          - name: metrics-server
            image: k8s.gcr.io/metrics-server-amd64:v0.3.6
            #imagePullPolicy: Always
            imagePullPolicy: IfNotPresent
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-insecure-tls
            - --kubelet-preferred-address-types=InternalIP
            volumeMounts:

Apply the manifests to create the resource objects

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ kubectl apply -f .

List the Pods; metrics-server has been created successfully

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ kubectl get pods -n kube-system
    NAME                                                READY  STATUS   RESTARTS  AGE
    calico-kube-controllers-78d6f96c7b-79xx4            1/1    Running  2         3h15m
    calico-node-ntm7v                                   1/1    Running  1         12h
    calico-node-skzjp                                   1/1    Running  4         12h
    calico-node-v7pj5                                   1/1    Running  1         12h
    coredns-545d6fc579-9h2z4                            1/1    Running  2         3h15m
    coredns-545d6fc579-xgn8x                            1/1    Running  2         3h16m
    etcd-vms81.liruilongs.github.io                     1/1    Running  1         13h
    kube-apiserver-vms81.liruilongs.github.io           1/1    Running  2         13h
    kube-controller-manager-vms81.liruilongs.github.io  1/1    Running  4         13h
    kube-proxy-rbhgf                                    1/1    Running  1         13h
    kube-proxy-vm2sf                                    1/1    Running  1         13h
    kube-proxy-zzbh9                                    1/1    Running  1         13h
    kube-scheduler-vms81.liruilongs.github.io           1/1    Running  5         13h
    metrics-server-bcfb98c76-gttkh                      1/1    Running  0         70m

Test with the kubectl top nodes command

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$ kubectl top nodes
    W1007 14:23:06.102605  102831 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
    NAME                        CPU(cores)  CPU%  MEMORY(bytes)  MEMORY%
    vms81.liruilongs.github.io  555m        27%   2025Mi         52%
    vms82.liruilongs.github.io  204m        10%   595Mi          15%
    vms83.liruilongs.github.io  214m        10%   553Mi          14%
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/metrics/deploy/1.8+]
    └─$

Prometheus+Grafana+NodeExporter: cluster monitoring platform

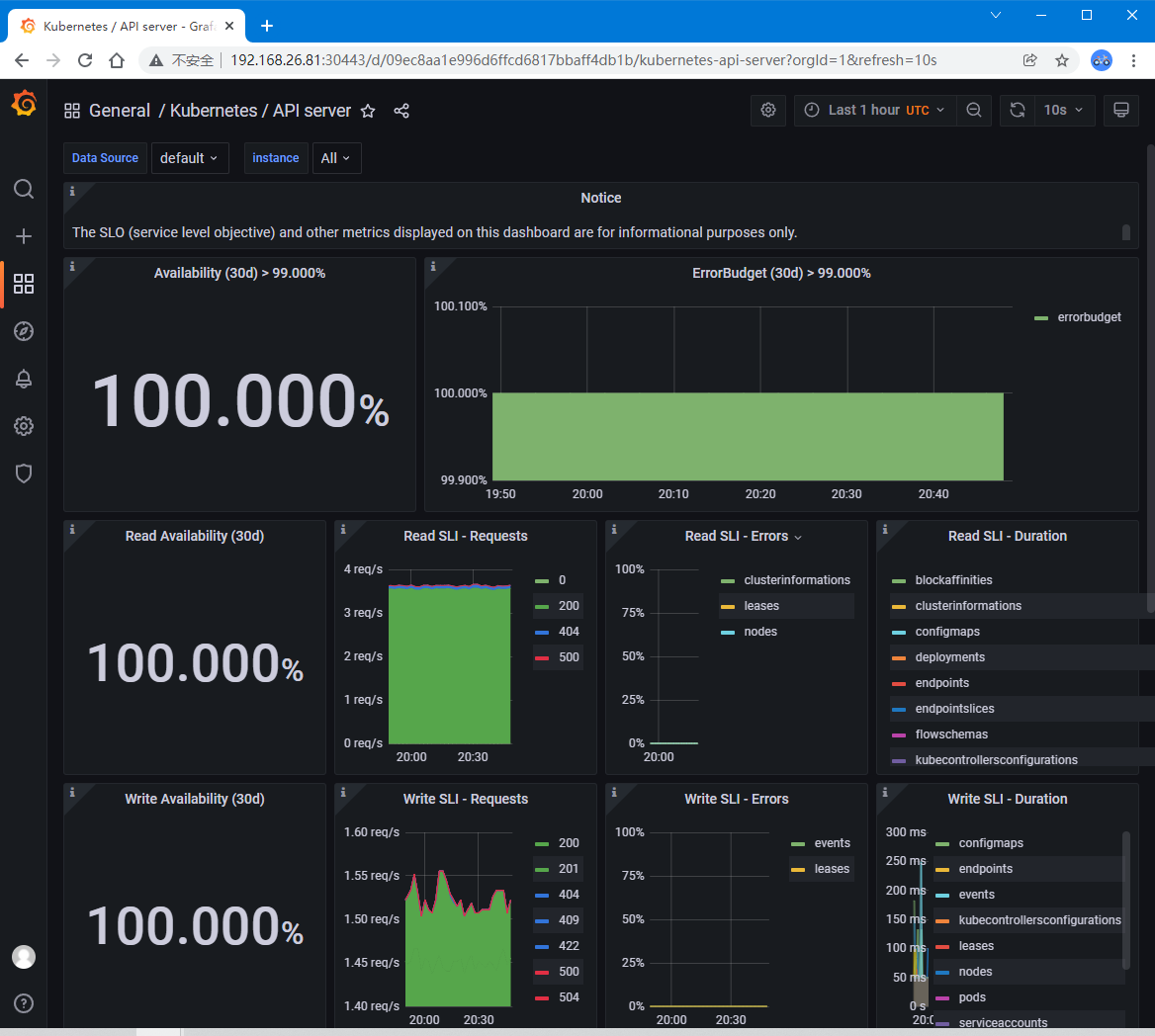

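NodeExporter is deployed on every compute node to collect CPU, memory, disk, and IO information. This data is sent to the Prometheus server on the monitoring node for storage and analysis, and Grafana is used for visualization.

Prometheus

Prometheus is an open-source monitoring system originally developed at SoundCloud. It was the second project to graduate from the CNCF, after Kubernetes, and is widely used in the container and microservices space. It can monitor the Kubernetes platform as well as the applications deployed on it, and it provides a set of tools and multi-dimensional monitoring metrics. Prometheus is usually paired with Grafana for data visualization.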

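The main features of Prometheus are:

- A multi-dimensional data model identified by metric names and key/value pairs.

- A flexible query language, PromQL.

- No dependency on distributed storage; each server node is autonomous.

- Monitoring data is pulled over HTTP.

- Pushing time series data is supported through a gateway.

- Multiple graphing and Dashboard options are supported, for example Grafana.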
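To illustrate the first two features, the sketch below takes one time series written in Prometheus notation, splits it into metric name and labels with plain shell tools, and prints a `curl` command that would send the same selector as a PromQL query to the HTTP API endpoint `/api/v1/query`. The server address `http://127.0.0.1:9090` and the helper name `label_value` are assumptions for illustration; the series itself is a typical NodeExporter metric.

```shell
#!/usr/bin/env bash
# Extract the value of one label from a series such as name{k1="v1",k2="v2"}.
# $1: series text, $2: label key
label_value() {
  printf '%s\n' "$1" | sed -n "s/.*[{,]$2=\"\([^\"]*\)\".*/\1/p"
}

# A series is identified by its metric name plus its set of key/value labels.
series='node_cpu_seconds_total{cpu="0",mode="idle"}'

echo "metric name: ${series%%\{*}"
echo "cpu label:   $(label_value "$series" cpu)"
echo "mode label:  $(label_value "$series" mode)"

# The same text is also a valid PromQL selector. Print (not run) an HTTP query;
# the Prometheus address is an assumed placeholder.
prom_url="http://127.0.0.1:9090"
printf "curl -sG '%s/api/v1/query' --data-urlencode 'query=%s'\n" "$prom_url" "$series"
```

Changing a label value in the selector, for example `mode="user"`, selects a different series of the same metric, which is what makes the model multi-dimensional.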

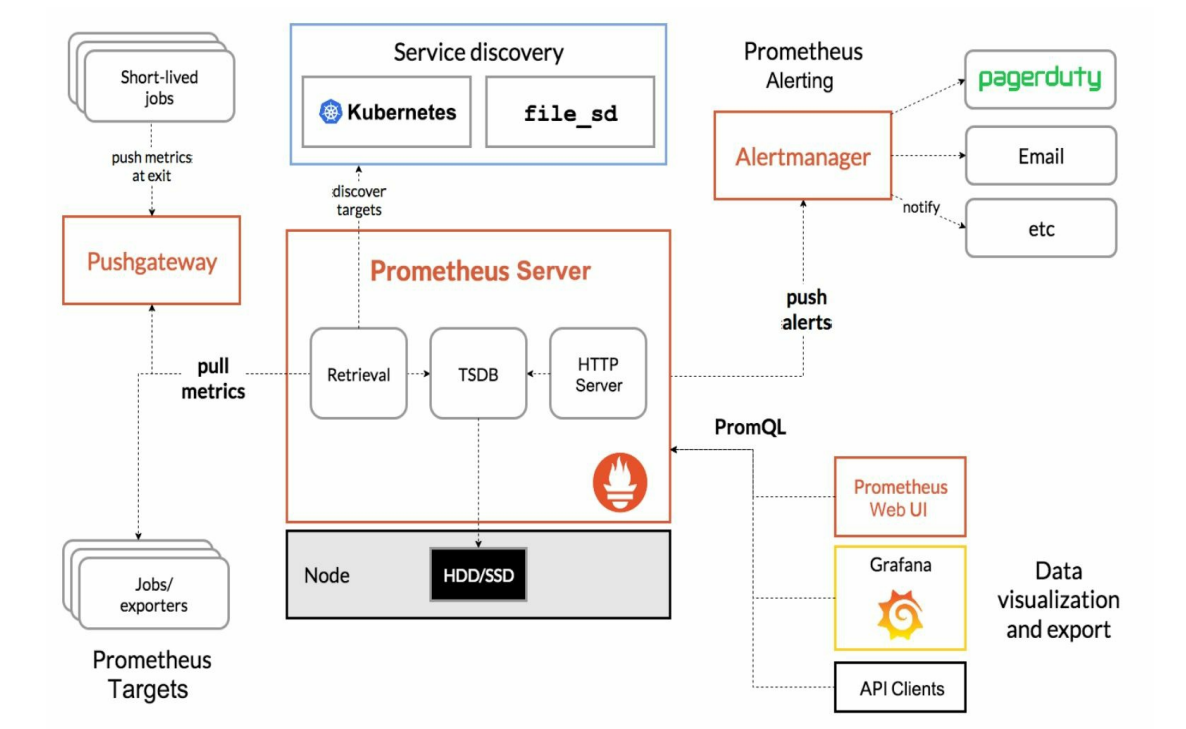

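The Prometheus ecosystem consists of several components that extend its functionality:

| Component | Description |
|---|---|
| Prometheus Server | Collects monitoring data, stores time series data, and provides data queries. |
| Client SDKs | Development kits for integrating with Prometheus. |
| Push Gateway | Gateway component for pushing data. |
| Third-party Exporters | External metric collectors whose data can be scraped by Prometheus. |
| AlertManager | Alert manager. |
| Other supporting tools | - |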

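The main functions of Prometheus Server, the core component of Prometheus, include:

- Obtaining information about the resources or services to monitor from the Kubernetes Master.

- Pulling metric data from the various Exporters and saving it in the time series database (TSDB).

- Providing an HTTP API for other systems to query.

- Providing data queries based on the PromQL language.

- Pushing alert data to AlertManager, and so on.

Prometheus system architecture: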

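NodeExporter

NodeExporter is mainly used to collect server CPU, memory, disk, and IO information, and is a general-purpose solution for collecting machine data. It is enough to install NodeExporter and the cAdvisor container on the host and let Prometheus scrape them. Its functionality is similar to that of Zabbix.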
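What Prometheus scrapes from NodeExporter is plain text in the Prometheus exposition format, served by default on port 9100 under /metrics. The sketch below parses a small sample of that format with awk. The sample values are made up for illustration and the helper name `metric_value` is hypothetical; the metric names are standard NodeExporter metrics.

```shell
#!/usr/bin/env bash
# A made-up excerpt in the exposition format (lines starting with # are metadata).
sample_metrics='# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
# HELP node_memory_MemAvailable_bytes Memory information field MemAvailable_bytes.
# TYPE node_memory_MemAvailable_bytes gauge
node_memory_MemAvailable_bytes 2.147483648e+09'

# Read exposition text on stdin and print the value of an unlabeled metric.
# $1: exact metric name
metric_value() {
  awk -v name="$1" '$1 == name { print $2 }'
}

printf '%s\n' "$sample_metrics" | metric_value node_load1
printf '%s\n' "$sample_metrics" | metric_value node_memory_MemAvailable_bytes
```

Against a real node the same helper can be fed from the exporter, for example `curl -s http://<node-ip>:9100/metrics | metric_value node_load1`, where `<node-ip>` stands for the address of a node running NodeExporter.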

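Grafana

Grafana is a Dashboard tool developed in Go and JS. It is a presentation layer for time series databases: it queries metrics from a data source using that data source's query language (PromQL in the case of Prometheus) and displays the results. It supports many kinds of custom dashboards and makes it easy to present monitoring information covering multiple Docker hosts.

Setting up the Prometheus+Grafana+NodeExporter platform

Here we set it up with Helm, which is simple and quick. After installation, all the related Pods are created. Below is the list of Pods that were created successfully.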

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$ kubectl get pods
    NAME                                                   READY  STATUS   RESTARTS     AGE
    alertmanager-liruilong-kube-prometheus-alertmanager-0  2/2    Running  0            61m
    liruilong-grafana-5955564c75-zpbjq                     3/3    Running  0            62m
    liruilong-kube-prometheus-operator-5cb699b469-fbkw5    1/1    Running  0            62m
    liruilong-kube-state-metrics-5dcf758c47-bbwt4          1/1    Running  7 (32m ago)  62m
    liruilong-prometheus-node-exporter-rfsc5               1/1    Running  0            62m
    liruilong-prometheus-node-exporter-vm7s9               1/1    Running  0            62m
    liruilong-prometheus-node-exporter-z9j8b               1/1    Running  0            62m
    prometheus-liruilong-kube-prometheus-prometheus-0      2/2    Running  0            61m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$

Environment versions

My K8s cluster version

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl get nodes
    NAME                        STATUS  ROLES                 AGE  VERSION
    vms81.liruilongs.github.io  Ready   control-plane,master  34d  v1.22.2
    vms82.liruilongs.github.io  Ready   <none>                34d  v1.22.2
    vms83.liruilongs.github.io  Ready   <none>                34d  v1.22.2

Helm version

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm version
    version.BuildInfo{Version:"v3.2.1", GitCommit:"fe51cd1e31e6a202cba7dead9552a6d418ded79a", GitTreeState:"clean", GoVersion:"go1.13.10"}

Problems installing prometheus-operator (the old chart name)

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm search repo prometheus-operator
    NAME                       CHART VERSION  APP VERSION  DESCRIPTION
    ali/prometheus-operator    8.7.0          0.35.0       Provides easy monitoring definitions for Kubern...
    azure/prometheus-operator  9.3.2          0.38.1       DEPRECATED Provides easy monitoring definitions...
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm install liruilong ali/prometheus-operator
    Error: failed to install CRD crds/crd-alertmanager.yaml: unable to recognize "": no matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm pull ali/prometheus-operator
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$

Cause: the old chart defines its CRDs with the apiextensions.k8s.io/v1beta1 API, which was removed in Kubernetes 1.22, the version this cluster runs. Solution: install the new chart.

Download the chart package of kube-prometheus-stack (the new chart name) directly and install it from the command line:

https://github.com/prometheus-community/helm-charts/releases/download/kube-prometheus-stack-30.0.1/kube-prometheus-stack-30.0.1.tgz

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ ls
    index.yaml  kube-prometheus-stack-30.0.1.tgz  liruilonghelm  liruilonghelm-0.1.0.tgz  mysql  mysql-1.6.4.tgz
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ helm list
    NAME  NAMESPACE  REVISION  UPDATED  STATUS  CHART  APP VERSION

Extract the chart package kube-prometheus-stack-30.0.1.tgz

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ tar -zxf kube-prometheus-stack-30.0.1.tgz

Create a new namespace

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ cd kube-prometheus-stack/
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$ kubectl create ns monitoring
    namespace/monitoring created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$ kubectl config set-context $(kubectl config current-context) --namespace=monitoring
    Context "kubernetes-admin@kubernetes" modified.

From inside the chart directory, install directly with helm install liruilong .

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$ ls
    Chart.lock  charts  Chart.yaml  CONTRIBUTING.md  crds  README.md  templates  values.yaml
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$ helm install liruilong .

Problem: the image for the kube-prometheus-admission-create Pod cannot be downloaded

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl get pods
    NAME                                                 READY  STATUS            RESTARTS  AGE
    liruilong-kube-prometheus-admission-create--1-bn7x2  0/1    ImagePullBackOff  0         33s

The Pod details show that the image is hosted on a Google registry, which cannot be reached from mainland China.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$ kubectl describe pod liruilong-kube-prometheus-admission-create--1-bn7x2
    Name:           liruilong-kube-prometheus-admission-create--1-bn7x2
    Namespace:      monitoring
    Priority:       0
    Node:           vms83.liruilongs.github.io/192.168.26.83
    Start Time:     Sun, 16 Jan 2022 02:43:07 +0800
    Labels:         app=kube-prometheus-stack-admission-create
                    app.kubernetes.io/instance=liruilong
                    app.kubernetes.io/managed-by=Helm
                    app.kubernetes.io/part-of=kube-prometheus-stack
                    app.kubernetes.io/version=30.0.1
                    chart=kube-prometheus-stack-30.0.1
                    controller-uid=2ce48cd2-a118-4e23-a27f-0228ef6c45e7
                    heritage=Helm
                    job-name=liruilong-kube-prometheus-admission-create
                    release=liruilong
    Annotations:    cni.projectcalico.org/podIP: 10.244.70.8/32
                    cni.projectcalico.org/podIPs: 10.244.70.8/32
    Status:         Pending
    IP:             10.244.70.8
    IPs:
      IP:           10.244.70.8
    Controlled By:  Job/liruilong-kube-prometheus-admission-create
    Containers:
      create:
        Container ID:
        Image:      k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0@sha256:f3b6b39a6062328c095337b4cadcefd1612348fdd5190b1dcbcb9b9e90bd8068
        Image ID:
        Port:       <none>
        Host Port:
    ......

I found a similar image on Docker Hub and changed the image with kubectl edit.

Replace image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0 with docker.io/liangjw/kube-webhook-certgen:v1.1.1. Alternatively, modify values.yaml and reinstall (remember to comment out the sha).

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]└─$lsindex.yaml kube-prometheus-stack kube-prometheus-stack-30.0.1.tgz liruilonghelm liruilonghelm-0.1.0.tgz mysql mysql-1.6.4.tgz┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]└─$cd kube-prometheus-stack/┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]└─$lsChart.lock charts Chart.yaml CONTRIBUTING.md crds README.md templates values.yaml┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]└─$cat values.yaml | grep -A 3 -B 2 kube-webhook-certgen enabled: true image: repository: docker.io/liangjw/kube-webhook-certgen tag: v1.1.1 #sha: "f3b6b39a6062328c095337b4cadcefd1612348fdd5190b1dcbcb9b9e90bd8068" pullPolicy: IfNotPresent┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]└─$┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]└─$helm del liruilong;helm install liruilong .之后其他的相关pod正常创建中

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$kubectl get pods
    NAME                                                  READY   STATUS              RESTARTS   AGE
    liruilong-grafana-5955564c75-zpbjq                    0/3     ContainerCreating   0          27s
    liruilong-kube-prometheus-operator-5cb699b469-fbkw5   0/1     ContainerCreating   0          27s
    liruilong-kube-state-metrics-5dcf758c47-bbwt4         0/1     ContainerCreating   0          27s
    liruilong-prometheus-node-exporter-rfsc5              0/1     ContainerCreating   0          28s
    liruilong-prometheus-node-exporter-vm7s9              0/1     ContainerCreating   0          28s
    liruilong-prometheus-node-exporter-z9j8b              0/1     ContainerCreating   0          28s

The image for the kube-state-metrics pod could not be pulled either, presumably for the same reason.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$kubectl get pods
    NAME                                                    READY   STATUS             RESTARTS   AGE
    alertmanager-liruilong-kube-prometheus-alertmanager-0   2/2     Running            0          3m35s
    liruilong-grafana-5955564c75-zpbjq                      3/3     Running            0          4m46s
    liruilong-kube-prometheus-operator-5cb699b469-fbkw5     1/1     Running            0          4m46s
    liruilong-kube-state-metrics-5dcf758c47-bbwt4           0/1     ImagePullBackOff   0          4m46s
    liruilong-prometheus-node-exporter-rfsc5                1/1     Running            0          4m47s
    liruilong-prometheus-node-exporter-vm7s9                1/1     Running            0          4m47s
    liruilong-prometheus-node-exporter-z9j8b                1/1     Running            0          4m47s
    prometheus-liruilong-kube-prometheus-prometheus-0       2/2     Running            0          3m34s

Likewise, the image k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.3.0 cannot be pulled:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$kubectl describe pod liruilong-kube-state-metrics-5dcf758c47-bbwt4
    Name:           liruilong-kube-state-metrics-5dcf758c47-bbwt4
    Namespace:      monitoring
    Priority:       0
    Node:           vms82.liruilongs.github.io/192.168.26.82
    Start Time:     Sun, 16 Jan 2022 02:59:53 +0800
    Labels:         app.kubernetes.io/component=metrics
                    app.kubernetes.io/instance=liruilong
                    app.kubernetes.io/managed-by=Helm
                    app.kubernetes.io/name=kube-state-metrics
                    app.kubernetes.io/part-of=kube-state-metrics
                    app.kubernetes.io/version=2.3.0
                    helm.sh/chart=kube-state-metrics-4.3.0
                    pod-template-hash=5dcf758c47
                    release=liruilong
    Annotations:    cni.projectcalico.org/podIP: 10.244.171.153/32
                    cni.projectcalico.org/podIPs: 10.244.171.153/32
    Status:         Pending
    IP:             10.244.171.153
    IPs:
      IP:           10.244.171.153
    Controlled By:  ReplicaSet/liruilong-kube-state-metrics-5dcf758c47
    Containers:
      kube-state-metrics:
        Container ID:
        Image:          k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.3.0
        Image ID:
        Port:           8080/TCP
    ......

In the same way, look for an equivalent image on Docker Hub and swap it in with kubectl edit pod.

Replace k8s.gcr.io/kube-state-metrics/kube-state-metrics with docker.io/dyrnq/kube-state-metrics:v2.3.0. The image can be pulled on the worker nodes beforehand:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$ ansible node -m shell -a "docker pull dyrnq/kube-state-metrics:v2.3.0"
    192.168.26.82 | CHANGED | rc=0 >>
    v2.3.0: Pulling from dyrnq/kube-state-metrics
    e8614d09b7be: Pulling fs layer
    53ccb90bafd7: Pulling fs layer
    e8614d09b7be: Verifying Checksum
    e8614d09b7be: Download complete
    e8614d09b7be: Pull complete
    53ccb90bafd7: Verifying Checksum
    53ccb90bafd7: Download complete
    53ccb90bafd7: Pull complete
    Digest: sha256:c9137505edaef138cc23479c73e46e9a3ef7ec6225b64789a03609c973b99030
    Status: Downloaded newer image for dyrnq/kube-state-metrics:v2.3.0
    docker.io/dyrnq/kube-state-metrics:v2.3.0
    192.168.26.83 | CHANGED | rc=0 >>
    v2.3.0: Pulling from dyrnq/kube-state-metrics
    e8614d09b7be: Pulling fs layer
    53ccb90bafd7: Pulling fs layer
    e8614d09b7be: Verifying Checksum
    e8614d09b7be: Download complete
    e8614d09b7be: Pull complete
    53ccb90bafd7: Verifying Checksum
    53ccb90bafd7: Download complete
    53ccb90bafd7: Pull complete
    Digest: sha256:c9137505edaef138cc23479c73e46e9a3ef7ec6225b64789a03609c973b99030
    Status: Downloaded newer image for dyrnq/kube-state-metrics:v2.3.0
    docker.io/dyrnq/kube-state-metrics:v2.3.0

After the change, all pods are created successfully:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$kubectl get pods
    NAME                                                    READY   STATUS    RESTARTS      AGE
    alertmanager-liruilong-kube-prometheus-alertmanager-0   2/2     Running   0             61m
    liruilong-grafana-5955564c75-zpbjq                      3/3     Running   0             62m
    liruilong-kube-prometheus-operator-5cb699b469-fbkw5     1/1     Running   0             62m
    liruilong-kube-state-metrics-5dcf758c47-bbwt4           1/1     Running   7 (32m ago)   62m
    liruilong-prometheus-node-exporter-rfsc5                1/1     Running   0             62m
    liruilong-prometheus-node-exporter-vm7s9                1/1     Running   0             62m
    liruilong-prometheus-node-exporter-z9j8b                1/1     Running   0             62m
    prometheus-liruilong-kube-prometheus-prometheus-0       2/2     Running   0             61m

Next, change the type of the liruilong-grafana Service to NodePort so that it can be reached from the physical host:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get svc
    NAME                                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
    alertmanager-operated                    ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   33m
    liruilong-grafana                        ClusterIP   10.99.220.121    <none>        80/TCP                       34m
    liruilong-kube-prometheus-alertmanager   ClusterIP   10.97.193.228    <none>        9093/TCP                     34m
    liruilong-kube-prometheus-operator       ClusterIP   10.101.106.93    <none>        443/TCP                      34m
    liruilong-kube-prometheus-prometheus     ClusterIP   10.105.176.19    <none>        9090/TCP                     34m
    liruilong-kube-state-metrics             ClusterIP   10.98.94.55      <none>        8080/TCP                     34m
    liruilong-prometheus-node-exporter       ClusterIP   10.110.216.215   <none>        9100/TCP                     34m
    prometheus-operated                      ClusterIP   None             <none>        9090/TCP                     33m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack]
    └─$kubectl edit svc liruilong-grafana
    service/liruilong-grafana edited
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get svc
    NAME                                     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
    alertmanager-operated                    ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   35m
    liruilong-grafana                        NodePort    10.99.220.121    <none>        80:30443/TCP                 36m
    liruilong-kube-prometheus-alertmanager   ClusterIP   10.97.193.228    <none>        9093/TCP                     36m
    liruilong-kube-prometheus-operator       ClusterIP   10.101.106.93    <none>        443/TCP                      36m
    liruilong-kube-prometheus-prometheus     ClusterIP   10.105.176.19    <none>        9090/TCP                     36m
    liruilong-kube-state-metrics             ClusterIP   10.98.94.55      <none>        8080/TCP                     36m
    liruilong-prometheus-node-exporter       ClusterIP   10.110.216.215   <none>        9100/TCP                     36m
    prometheus-operated                      ClusterIP   None             <none>        9090/TCP                     35m

Access from the physical host:

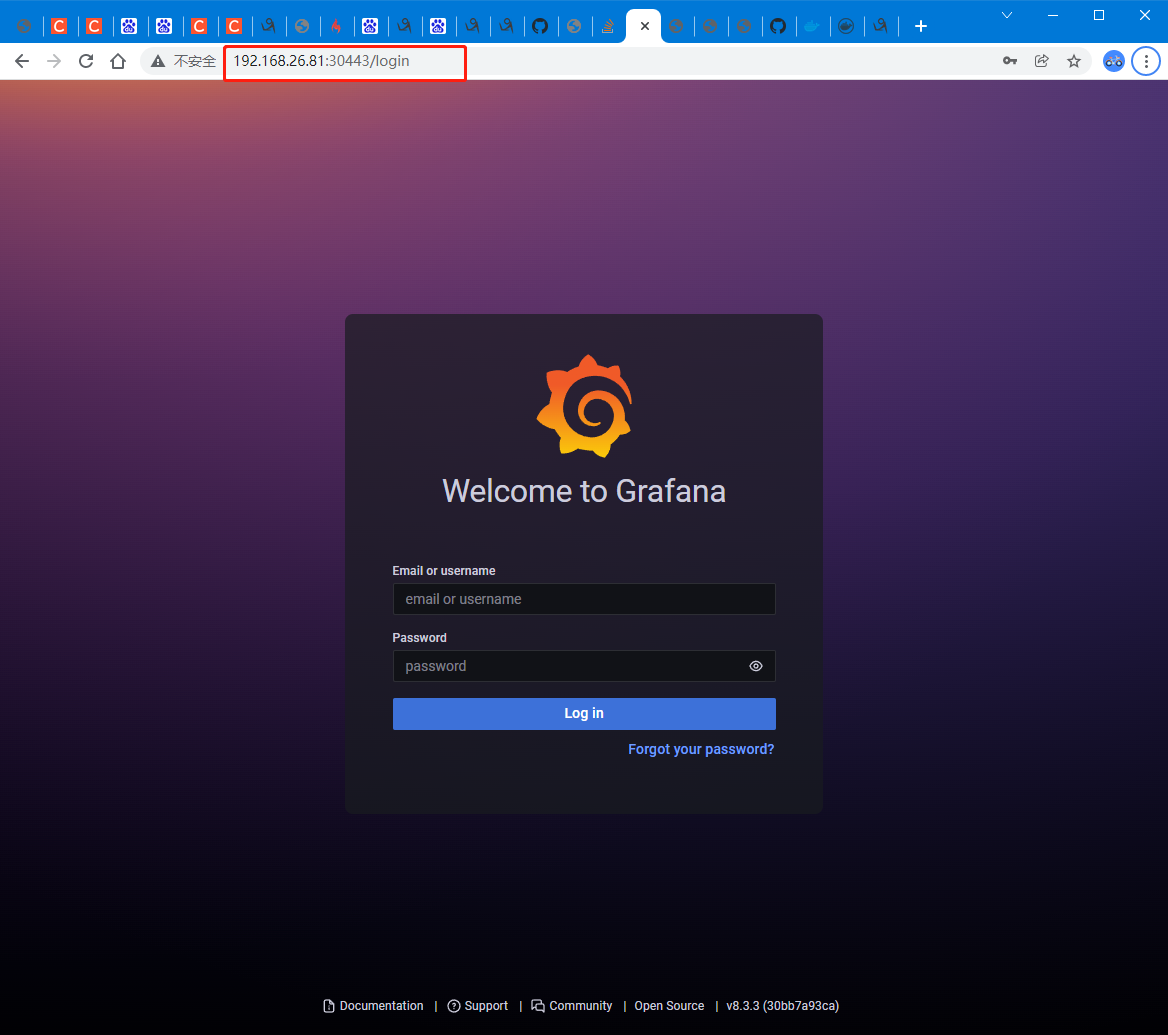
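The Service type can also be switched without opening an editor, by sending a patch with kubectl patch. A small sketch; the helper name nodeport_patch is hypothetical and only builds the patch body, so the Service name from above has to be supplied when calling kubectl.

```shell
# Print a merge patch that sets the Service type (NodePort by default).
nodeport_patch() {
  printf '{"spec":{"type":"%s"}}' "${1:-NodePort}"
}

# Usage (needs a cluster, not run here):
#   kubectl patch svc liruilong-grafana -p "$(nodeport_patch)"
#   kubectl get svc liruilong-grafana
```

A patch is easier to repeat in a script than an interactive edit, and it changes only the field named in the patch body.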

Decode the user name and password stored in the Secret:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get secrets | grep grafana
    liruilong-grafana                    Opaque                                3      38m
    liruilong-grafana-test-token-q8z8j   kubernetes.io/service-account-token   3      38m
    liruilong-grafana-token-j94p8        kubernetes.io/service-account-token   3      38m
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get secrets liruilong-grafana -o yaml
    apiVersion: v1
    data:
      admin-password: cHJvbS1vcGVyYXRvcg==
      admin-user: YWRtaW4=
      ldap-toml: ""
    kind: Secret
    metadata:
      annotations:
        meta.helm.sh/release-name: liruilong
        meta.helm.sh/release-namespace: monitoring
      creationTimestamp: "2022-01-15T18:59:40Z"
      labels:
        app.kubernetes.io/instance: liruilong
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: grafana
        app.kubernetes.io/version: 8.3.3
        helm.sh/chart: grafana-6.20.5
      name: liruilong-grafana
      namespace: monitoring
      resourceVersion: "1105663"
      uid: c03ff5f3-deb5-458c-8583-787f41034469
    type: Opaque
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get secrets liruilong-grafana -o jsonpath='{.data.admin-user}'| base64 -d
    admin
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create/kube-prometheus-stack/templates]
    └─$kubectl get secrets liruilong-grafana -o jsonpath='{.data.admin-password}'| base64 -d
    prom-operator

This gives the user name and password: admin/prom-operator
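Values under data in a Secret are only base64-encoded, not encrypted, so the decoding step can be checked offline against the two strings shown in the Secret above. A minimal sketch; decode_secret_value is a hypothetical helper name.

```shell
# Decode one base64 value taken from the data section of a Secret.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# Usage:
#   decode_secret_value 'YWRtaW4='
#   decode_secret_value 'cHJvbS1vcGVyYXRvcg=='
```

Note that anyone who can read the Secret can recover the password this way, which is one more reason to restrict access to Secrets.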

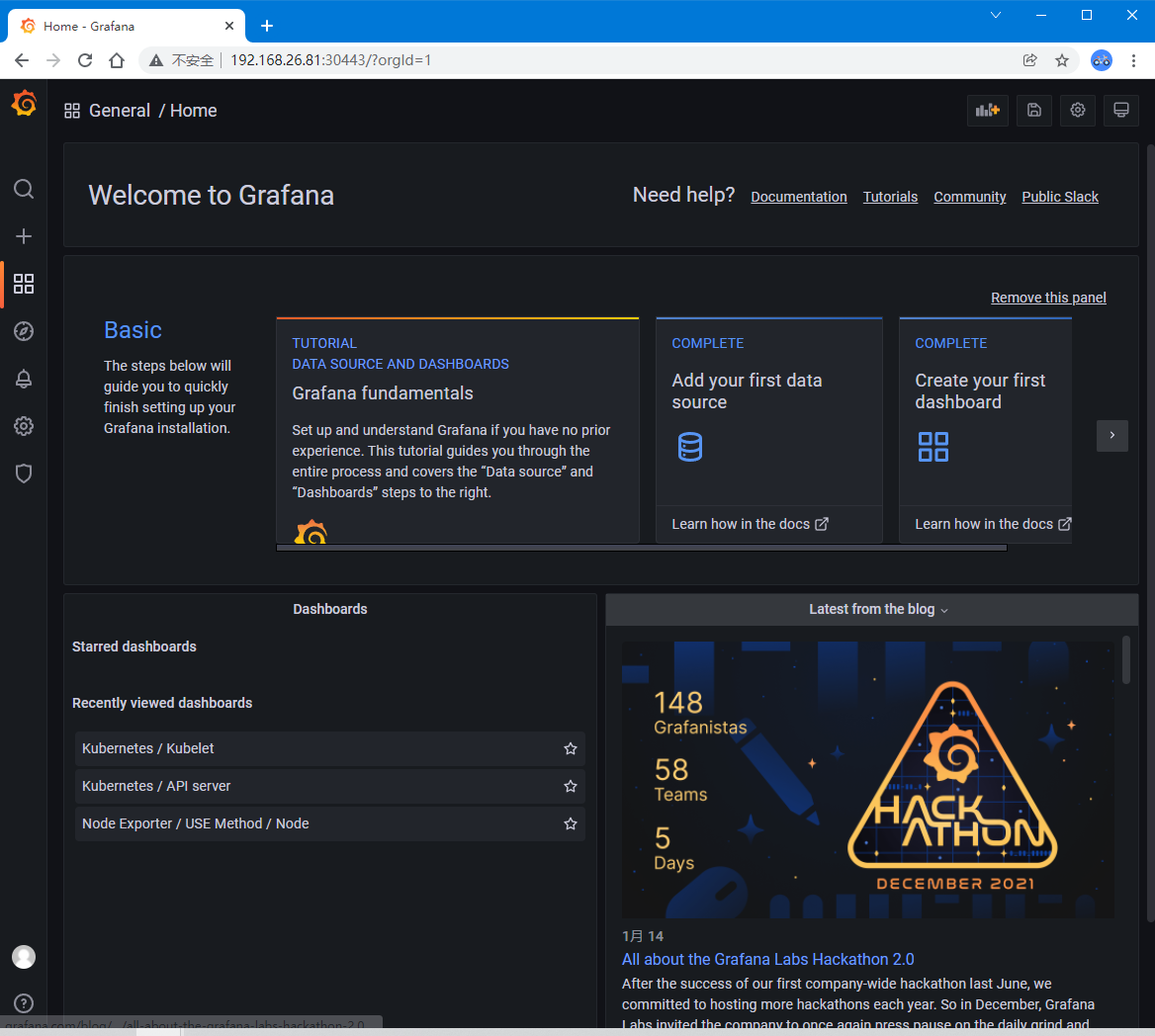

After logging in, the monitoring dashboards are available.

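Security management

API Server authentication

All access to and changes of resources in a Kubernetes cluster go through the REST API of the Kubernetes API Server, so the key to cluster security is how requests are authenticated and authorized.

A simple demo: on the master node, root can call the API Server directly through kubectl and read cluster information, while another logged-in user cannot. This comes down to how Kubernetes authentication works.

root can access the cluster normally: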

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$kubectl get pods
    NAME                                                  READY   STATUS        RESTARTS       AGE
    liruilong-grafana-5955564c75-zpbjq                    3/3     Terminating   0              8h
    liruilong-kube-prometheus-operator-5cb699b469-fbkw5   1/1     Terminating   0              8h
    liruilong-prometheus-node-exporter-vm7s9              1/1     Terminating   2 (109m ago)   8h
    prometheus-liruilong-kube-prometheus-prometheus-0     2/2     Terminating   0              8h

Switch to user tom and try again. The request fails with an error saying the API server cannot be reached. Why is that?

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$su tom
    [tom@vms81 k8s-helm-create]$ kubectl get pods
    The connection to the server localhost:8080 was refused - did you specify the right host or port?
    [tom@vms81 k8s-helm-create]$ exit
    exit

To demonstrate authentication, install the kubectl client on a machine outside the cluster. It is used to talk to the entry point of the cluster, the API Server.

    ┌──[root@liruilongs.github.io]-[~]
    └─$ yum install -y kubectl-1.22.2-0 --disableexcludes=kubernetes

kubectl cluster-info shows basic information about the cluster:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$kubectl cluster-info
    Kubernetes control plane is running at https://192.168.26.81:6443
    CoreDNS is running at https://192.168.26.81:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
    Metrics-server is running at https://192.168.26.81:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

A Kubernetes cluster offers three ways to authenticate clients:

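HTTP Token authentication: a token identifies a legitimate user.

HTTPS certificate authentication: mutual authentication with digital certificates signed by the CA root certificate.

HTTP Basic authentication: authentication with user name and password. This only applies to versions before 1.19; later versions no longer support it.

The sections below cover Token and SSL certificate authentication. Basic authentication is skipped because newer Kubernetes versions no longer support it. The earlier question of why an ordinary user cannot access the cluster is answered along the way.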

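HTTP Token authentication

HTTP Token authentication identifies a client by a token: a long, specially encoded string that is hard to forge.

Each token maps to a user name and is stored in a file that the API Server can read. When a client makes an API call, it puts the token into the HTTP header, which lets the API Server tell legitimate users from illegitimate ones.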
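At the HTTP level, "putting the token into the header" means sending an Authorization header with the Bearer scheme. A small sketch; bearer_header is a hypothetical helper, and the curl call in the comment assumes the API server address used elsewhere in these notes.

```shell
# Build the HTTP header that carries a bearer token.
bearer_header() {
  printf 'Authorization: Bearer %s' "$1"
}

# Usage (needs network access to the API server, not run here):
#   curl -k -H "$(bearer_header "$TOKEN")" \
#     https://192.168.26.81:6443/api/v1/namespaces/kube-system/pods
```

The -k flag skips server certificate verification and is only acceptable in a lab setup like this one.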

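When the API server is started with the --token-auth-file=SOMEFILE option, it reads bearer tokens from that file. Currently, tokens last indefinitely, and the token list cannot be changed without restarting the API server. The demo below walks through user authentication with a static token.

Generate a token with openssl:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$openssl rand -hex 10
    4bf636c8214b7ff0a0fb

The token file is a CSV file with at least 3 columns: token, user name and user UID. Any remaining columns are treated as optional group names. Note that the token file has to be placed under /etc/kubernetes/pki. On a kubeadm cluster kube-apiserver runs as a static Pod, and this directory is one of the host paths mounted into that Pod, so a file elsewhere on the host would typically not be visible to the process.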
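The line format can be sketched as a small helper. When more than one group is given, the group column has to be wrapped in double quotes so that its commas stay inside one CSV field. token_csv_line is a hypothetical name used only for illustration.

```shell
# Print one line of a static token file: token,user,uid[,"group1,group2"].
token_csv_line() {
  token="$1"; user="$2"; uid="$3"
  shift 3
  if [ "$#" -eq 0 ]; then
    printf '%s,%s,%s\n' "$token" "$user" "$uid"
  else
    # Join the remaining arguments with commas inside one quoted column.
    groups="$(IFS=,; printf '%s' "$*")"
    printf '%s,%s,%s,"%s"\n' "$token" "$user" "$uid" "$groups"
  fi
}

# Usage:
#   token_csv_line "$(openssl rand -hex 10)" admin2 3
#   token_csv_line "$(openssl rand -hex 10)" dev1 4 dev ops
```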

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$echo "4bf636c8214b7ff0a0fb,admin2,3" > /etc/kubernetes/pki/liruilong.csv
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$cat /etc/kubernetes/pki/liruilong.csv
    4bf636c8214b7ff0a0fb,admin2,3

Use sed to add the startup flag - --token-auth-file=/etc/kubernetes/pki/liruilong.csv to kube-apiserver. The first command previews the result, the second one edits the manifest in place:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$sed '17a \ \ \ \ - --token-auth-file=/etc/kubernetes/pki/liruilong.csv' /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 5 command
      - command:
        - kube-apiserver
        - --advertise-address=192.168.26.81
        - --allow-privileged=true
        - --token-auth-file=/etc/kubernetes/pki/liruilong.csv
        - --authorization-mode=Node,RBAC
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$sed -i '17a \ \ \ \ - --token-auth-file=/etc/kubernetes/pki/liruilong.csv' /etc/kubernetes/manifests/kube-apiserver.yaml

Check the modified startup flags:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$cat -n /etc/kubernetes/manifests/kube-apiserver.yaml | grep -A 5 command
        14    - command:
        15      - kube-apiserver
        16      - --advertise-address=192.168.26.81
        17      - --allow-privileged=true
        18      - --token-auth-file=/etc/kubernetes/pki/liruilong.csv
        19      - --authorization-mode=Node,RBAC

Restart the kubelet service:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-helm-create]
    └─$systemctl restart kubelet

Confirm that the cluster is still reachable:

    ┌──[root@vms81.liruilongs.github.io]-[/etc/kubernetes/pki]
    └─$kubectl get nodes
    NAME                         STATUS     ROLES                  AGE   VERSION
    vms81.liruilongs.github.io   Ready      control-plane,master   34d   v1.22.2
    vms82.liruilongs.github.io   Ready      <none>                 34d   v1.22.2
    vms83.liruilongs.github.io   NotReady   <none>                 34d   v1.22.2

Access the cluster from the client machine outside the cluster. The response says that user admin2 is not allowed to list pods, which means authentication succeeded and only the permission is missing:

    ┌──[root@liruilongs.github.io]-[~]
    └─$ kubectl -s="https://192.168.26.81:6443" --insecure-skip-tls-verify=true --token="4bf636c8214b7ff0a0fb" get pods -n kube-system
    Error from server (Forbidden): pods is forbidden: User "admin2" cannot list resource "pods" in API group "" in the namespace "kube-system"

Now change the token string so that it no longer matches the token file on the cluster. The server answers Unauthorized, that is, authentication failed:

    ┌──[root@liruilongs.github.io]-[~]
    └─$ kubectl -s="https://192.168.26.81:6443" --insecure-skip-tls-verify=true --token="4bf636c8214b7ff0a0f" get pods -n kube-system
    error: You must be logged in to the server (Unauthorized)

kubeconfig file authentication

Back to the earlier question: why can root access the cluster while tom cannot? The answer lies in authentication through a kubeconfig file.

When the cluster was created with kubeadm, you may remember the file admin.conf. It is the kubeconfig file that kubeadm generated for us:

    ┌──[root@vms81.liruilongs.github.io]-[~/.kube]
    └─$ll /etc/kubernetes/admin.conf
    -rw------- 1 root root 5676 12月 13 02:13 /etc/kubernetes/admin.conf

Copy this file into tom's home directory and change its owner:

    ┌──[root@vms81.liruilongs.github.io]-[~/.kube]
    └─$cp /etc/kubernetes/admin.conf ~tom/
    ┌──[root@vms81.liruilongs.github.io]-[~/.kube]
    └─$chown tom:tom ~tom/admin.conf

Now, pointing kubectl at this file with --kubeconfig=admin.conf gives access to the cluster:

    [tom@vms81 home]$ cd tom/
    [tom@vms81 ~]$ ls
    admin.conf
    [tom@vms81 ~]$ kubectl get pods
    The connection to the server localhost:8080 was refused - did you specify the right host or port?
    [tom@vms81 ~]$ kubectl get pods -A --kubeconfig=admin.conf
    NAMESPACE       NAME                                        READY   STATUS    RESTARTS         AGE
    ingress-nginx   ingress-nginx-controller-744d4fc6b7-t9n4l   1/1     Running   6 (8h ago)       44h
    kube-system     calico-kube-controllers-78d6f96c7b-85rv9    1/1     Running   193              31d
    kube-system     calico-node-6nfqv                           1/1     Running   254              34d
    kube-system     calico-node-fv458                           0/1     Running   50               34d
    kube-system     calico-node-h5lsq                           1/1     Running   94 (7h10m ago)   34d
    kube-system     ..........................

So what is a kubeconfig file? The official documentation describes it as follows:

Use kubeconfig files to organize information about clusters, users, namespaces, and authentication mechanisms. The kubectl command-line tool uses kubeconfig files to find the information it needs to choose a cluster and communicate with the API server of a cluster.

In other words, kubectl communicates with the API server of the cluster through the kubeconfig, which plays a role similar to the token above. The HTTPS certificate authentication we want to discuss lives in this file.

By default, kubectl looks for a file named config in the $HOME/.kube directory.
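The lookup order can be sketched as a small function: an explicit --kubeconfig value wins, then the KUBECONFIG environment variable, then the default file. This is a simplification for illustration (kubectl also accepts a list of several files in KUBECONFIG and merges them), and resolve_kubeconfig is a hypothetical name.

```shell
# Return the kubeconfig path kubectl would start from, in simplified form.
# $1 is the value of --kubeconfig, or empty when the flag is not given.
resolve_kubeconfig() {
  flag_value="${1:-}"
  if [ -n "$flag_value" ]; then
    printf '%s\n' "$flag_value"
  elif [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG"
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Usage:
#   resolve_kubeconfig             # default file or $KUBECONFIG
#   resolve_kubeconfig admin.conf  # explicit flag value
```

When none of the three locations yields a usable file, kubectl has no server address and no credentials, which is why it falls back to localhost:8080 in the error shown for tom.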

    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$ls ~/.kube/config
    /root/.kube/config
    ┌──[root@vms81.liruilongs.github.io]-[~]
    └─$ll ~/.kube/config
    -rw------- 1 root root 5663 1月 16 02:33 /root/.kube/config

After copying the kubeconfig file to $HOME/.kube under the name config, tom can still access the cluster:

    [tom@vms81 ~]$ ls
    admin.conf
    [tom@vms81 ~]$ cp admin.conf .kube/config
    [tom@vms81 ~]$ kubectl get pods -n kube-system
    NAME                                       READY   STATUS    RESTARTS         AGE
    calico-kube-controllers-78d6f96c7b-85rv9   1/1     Running   193              31d
    calico-node-6nfqv                          1/1     Running   254              34d
    calico-node-fv458                          0/1     Running   50               34d
    calico-node-h5lsq                          1/1     Running   94 (7h13m ago)   34d
    ......

A different kubeconfig file can also be selected with the KUBECONFIG environment variable or the --kubeconfig flag.

    [tom@vms81 ~]$ export KUBECONFIG=admin.conf
    [tom@vms81 ~]$ kubectl get pods -n kube-system
    NAME                                       READY   STATUS    RESTARTS         AGE
    calico-kube-controllers-78d6f96c7b-85rv9   1/1     Running   193              31d
    calico-node-6nfqv                          1/1     Running   254              34d
    calico-node-fv458                          0/1     Running   50               34d
    calico-node-h5lsq                          1/1     Running   94 (7h11m ago)   34d
    ..............

With nothing set, tom has no kubeconfig file and therefore no credentials, so access fails:

    [tom@vms81 ~]$ unset KUBECONFIG
    [tom@vms81 ~]$ kubectl get pods -n kube-system
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

View the settings in the kubeconfig file:

    ┌──[root@vms81.liruilongs.github.io]-[~/.kube]
    └─$kubectl config view
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: DATA+OMITTED
        server: https://192.168.26.81:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        namespace: liruilong-rbac-create
        user: kubernetes-admin
      name: kubernetes-admin@kubernetes
    current-context: kubernetes-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: kubernetes-admin
      user:
        client-certificate-data: REDACTED
        client-key-data: REDACTED

So to access the cluster, copying this kubeconfig file to the client machine would be enough.

However, when kubeadm signs the certificate in admin.conf, it sets Subject: O = system:masters, CN = kubernetes-admin. system:masters is a break-glass super user group that bypasses the authorization layer (RBAC, for example). The admin.conf file should therefore not be shared with anyone. Use the kubeadm kubeconfig user command to generate kubeconfig files for other users and grant them tailored permissions.

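Creating a kubeconfig file

A kubeconfig file consists of the following parts:

- Cluster information
  - Cluster CA certificate
  - Cluster address
- Context information
  - All contexts
  - Current context
- User information
  - User (client) certificate
  - User private key

To create a kubeconfig file we need a private key and a certificate issued by the cluster CA. The certificate cannot be produced from the private key directly: the private key is used to generate a certificate signing request (the application form), the request is submitted to the CA (the authority), and the CA issues the certificate (the ID card) once the request is approved.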
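The request that is submitted to the cluster is a CertificateSigningRequest object whose request field carries the base64-encoded CSR file on a single line. A sketch of how that manifest could be generated from a CSR file; make_csr_manifest is a hypothetical helper, and the signer name and usage are the ones for client certificates.

```shell
# Print a CertificateSigningRequest manifest for the given name and CSR file.
make_csr_manifest() {
  name="$1"; csr_file="$2"
  # base64 wraps long output, so strip the newlines to get one line.
  request="$(base64 < "$csr_file" | tr -d '\n')"
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${name}
spec:
  signerName: kubernetes.io/kube-apiserver-client
  request: ${request}
  usages:
  - client auth
EOF
}

# Usage (needs openssl and a cluster, not run here):
#   openssl genrsa -out liruilong.key 2048
#   openssl req -new -key liruilong.key -out liruilong.csr -subj "/CN=liruilong/O=cka2020"
#   make_csr_manifest liruilong liruilong.csr | kubectl apply -f -
#   kubectl certificate approve liruilong
```

Generating the manifest avoids pasting the encoded request by hand, which is where truncation mistakes usually creep in.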

Environment preparation

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$kubectl create ns liruilong-rbac-create
    namespace/liruilong-rbac-create created
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible]
    └─$mkdir k8s-rbac-create;cd k8s-rbac-create
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-rbac-create
    Context "kubernetes-admin@kubernetes" modified.

Requesting a certificate

Generate a 2048-bit private key file liruilong.key:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$openssl genrsa -out liruilong.key 2048
    Generating RSA private key, 2048 bit long modulus
    ....................+++
    ...........................................................................................................+++
    e is 65537 (0x10001)

View the private key file:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$cat liruilong.key
    -----BEGIN RSA PRIVATE KEY-----
    MIIEpAIBAAKCAQEAt9OBnwaA3VdFfjdiurJPtcaiXOGPc1AWFmrlgocq4vT5WZgq
    ................................................................
    LHd0n1yCKpwbYMGghF4iGmEGIIdsCVZP+EV6lduPKjqEm9kjuLROKzRZHFoGyASO
    Krb3VR4CKHvnZAPVctv7Pu+4JgMliJHl8GVYhqM5UykbLRMdNHSNIQ==
    -----END RSA PRIVATE KEY-----

Use the private key liruilong.key just generated to create the certificate signing request file liruilong.csr. The CN value, liruilong, is the user that will be authorized later.

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$openssl req -new -key liruilong.key -out liruilong.csr -subj "/CN=liruilong/O=cka2020"
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$ls
    liruilong.csr  liruilong.key

Base64-encode the certificate signing request file:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$cat liruilong.csr | base64 |tr -d "\n"
    LS0tLS1CRUdJTiBDRVJUSUZJ...............

Write the YAML file for the certificate request: cat csr.yaml

    apiVersion: certificates.k8s.io/v1
    kind: CertificateSigningRequest
    metadata:
      name: liruilong
    spec:
      signerName: kubernetes.io/kube-apiserver-client
      request: LS0tLS1CRUdJTiBDRVJUSUZJ...............
      usages:
      - client auth

The request field holds the base64-encoded certificate signing request file. Submit the request:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl apply -f csr.yaml
    certificatesigningrequest.certificates.k8s.io/liruilong created

List the submitted certificate request:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl get csr
    NAME        AGE   SIGNERNAME                            REQUESTOR          REQUESTEDDURATION   CONDITION
    liruilong   15s   kubernetes.io/kube-apiserver-client   kubernetes-admin   <none>              Pending

Approve the certificate:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl certificate approve liruilong
    certificatesigningrequest.certificates.k8s.io/liruilong approved

View the approved certificate:

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$kubectl get csr/liruilong -o yamlapiVersion: certificates.k8s.io/v1kind: CertificateSigningRequestmetadata: annotations: kubectl.kubernetes.io/last-applied-configuration: | {"apiVersion":"certificates.k8s.io/v1","kind":"CertificateSigningRequest","metadata":{"annotations":{},"name":"liruilong"},"spec":{"request":"LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ2F6Q0NBVk1DQVFBd0pqRVNNQkFHQTFVRUF3d0piR2x5ZFdsc2IyNW5NUkF3RGdZRFZRUUtEQWRqYTJFeQpNREl3TUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF0OU9CbndhQTNWZEZmamRpCnVySlB0Y2FpWE9HUGMxQVdGbXJsZ29jcTR2VDVXWmdxd1g5T0RvSnpDREJZZVFJQ3h0Wm5uUk9XY1B2dVB6K1IKb1Eybk83K3FnNUNjZzlWZmVOWFRwUDB0VXZsQ21ZVVg2dkRDdlgxUDR3VnNFdXNydlZBdkF4NmdqZTZzNW94VgphZTIwcXFBRXpTUXJhczhPeldsZ1Frd0xjNU5MZ2k3bWlpNHNzaVpQRXU1ZFZIRWs5dHdCeUZTV0dsanJETkhvCnN4UkFFNXlrWjBnODBWSzN1U1JNNmFHSEJ0QmVpbysxa2d0U0xDMlVScy9QWUwwRGNSQm9zUUx0c3JublFSMTkKSE5NWTkweUhYN3Jta3ZqcHdOdkRZWjNIWUVvbGJQZThWZjhBTFpsbDVBTnJ5SUJqbXNrY01QM2lRMzdxWGZUNwptSzhKeHdJREFRQUJvQUF3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUFwa09aUUNTTGxGYWJTVk9zNmtzL1ZyCmd3b3FTdjFOM1YzUC84SmNzR1pFUTc4TkpJb2R0REExS3EwN25DWjJWUktselZDN1kyMCszZUVzTXZNWnFMc1MKbUtaS0w2SFE3N2RHa1liUjhzKzRMaFo4YXR6cXVMSnlqZUZKODQ2N1ZrUXF5T1R6by9wZ3E4YWJJY01XNzlKMgoxWEkybi92RWlIMEgvWU9DaWExVHRqTnpSWGtlL2hPQTZ4Y29CcVRpdWtkUHBqZDJSaWFTRUNUS1h4ZGNOS0xLCmZVbFhkb2s5UkVkQ2V3bU9ISUdvVG9qUGRWdWlPdkYzZkFqUXZNNDJ3UjJDdklHMWs1YUQzdWVlbzcwd0pnUlQKYzhZNnUwY2padEI5ZW5xUStmRFFqdUUyZElrMDJLbm5HQVppK0wxUnRnSnA2Tm1udEg5WUc3RlBLSXYrakFZPQotLS0tLUVORCBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K","signerName":"kubernetes.io/kube-apiserver-client","usages":["client auth"]}} creationTimestamp: "2022-01-16T15:25:24Z" name: liruilong resourceVersion: "1185668" uid: 51837659-7214-4dec-bcd4-b7a9129ee2bbspec: groups: - system:masters - system:authenticated request: 
LS0tLS1CRUdJTiBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0KTUlJQ2F6Q0NBVk1DQVFBd0pqRVNNQkFHQTFVRUF3d0piR2x5ZFdsc2IyNW5NUkF3RGdZRFZRUUtEQWRqYTJFeQpNREl3TUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF0OU9CbndhQTNWZEZmamRpCnVySlB0Y2FpWE9HUGMxQVdGbXJsZ29jcTR2VDVXWmdxd1g5T0RvSnpDREJZZVFJQ3h0Wm5uUk9XY1B2dVB6K1IKb1Eybk83K3FnNUNjZzlWZmVOWFRwUDB0VXZsQ21ZVVg2dkRDdlgxUDR3VnNFdXNydlZBdkF4NmdqZTZzNW94VgphZTIwcXFBRXpTUXJhczhPeldsZ1Frd0xjNU5MZ2k3bWlpNHNzaVpQRXU1ZFZIRWs5dHdCeUZTV0dsanJETkhvCnN4UkFFNXlrWjBnODBWSzN1U1JNNmFHSEJ0QmVpbysxa2d0U0xDMlVScy9QWUwwRGNSQm9zUUx0c3JublFSMTkKSE5NWTkweUhYN3Jta3ZqcHdOdkRZWjNIWUVvbGJQZThWZjhBTFpsbDVBTnJ5SUJqbXNrY01QM2lRMzdxWGZUNwptSzhKeHdJREFRQUJvQUF3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUFwa09aUUNTTGxGYWJTVk9zNmtzL1ZyCmd3b3FTdjFOM1YzUC84SmNzR1pFUTc4TkpJb2R0REExS3EwN25DWjJWUktselZDN1kyMCszZUVzTXZNWnFMc1MKbUtaS0w2SFE3N2RHa1liUjhzKzRMaFo4YXR6cXVMSnlqZUZKODQ2N1ZrUXF5T1R6by9wZ3E4YWJJY01XNzlKMgoxWEkybi92RWlIMEgvWU9DaWExVHRqTnpSWGtlL2hPQTZ4Y29CcVRpdWtkUHBqZDJSaWFTRUNUS1h4ZGNOS0xLCmZVbFhkb2s5UkVkQ2V3bU9ISUdvVG9qUGRWdWlPdkYzZkFqUXZNNDJ3UjJDdklHMWs1YUQzdWVlbzcwd0pnUlQKYzhZNnUwY2padEI5ZW5xUStmRFFqdUUyZElrMDJLbm5HQVppK0wxUnRnSnA2Tm1udEg5WUc3RlBLSXYrakFZPQotLS0tLUVORCBDRVJUSUZJQ0FURSBSRVFVRVNULS0tLS0K signerName: kubernetes.io/kube-apiserver-client usages: - client auth username: kubernetes-adminstatus: certificate: 
LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURDekNDQWZPZ0F3SUJBZ0lRUC9aR05rUjdzVy9sdHhkQTNGQjBoekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl5TURFeE5qRTFNakV3TWxvWERUSXpNREV4TmpFMQpNakV3TWxvd0pqRVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1ERVNNQkFHQTFVRUF4TUpiR2x5ZFdsc2IyNW5NSUlCCklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF0OU9CbndhQTNWZEZmamRpdXJKUHRjYWkKWE9HUGMxQVdGbXJsZ29jcTR2VDVXWmdxd1g5T0RvSnpDREJZZVFJQ3h0Wm5uUk9XY1B2dVB6K1JvUTJuTzcrcQpnNUNjZzlWZmVOWFRwUDB0VXZsQ21ZVVg2dkRDdlgxUDR3VnNFdXNydlZBdkF4NmdqZTZzNW94VmFlMjBxcUFFCnpTUXJhczhPeldsZ1Frd0xjNU5MZ2k3bWlpNHNzaVpQRXU1ZFZIRWs5dHdCeUZTV0dsanJETkhvc3hSQUU1eWsKWjBnODBWSzN1U1JNNmFHSEJ0QmVpbysxa2d0U0xDMlVScy9QWUwwRGNSQm9zUUx0c3JublFSMTlITk1ZOTB5SApYN3Jta3ZqcHdOdkRZWjNIWUVvbGJQZThWZjhBTFpsbDVBTnJ5SUJqbXNrY01QM2lRMzdxWGZUN21LOEp4d0lECkFRQUJvMFl3UkRBVEJnTlZIU1VFRERBS0JnZ3JCZ0VGQlFjREFqQU1CZ05WSFJNQkFmOEVBakFBTUI4R0ExVWQKSXdRWU1CYUFGR0RjS1N1dVY1TTV5Wk5CR1AxLzZoN0xZNytlTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFCagpOelREMmZ5bTc3bXQ4dzlacXRZN3NQelhmNHJQTXpWUzVqV3NzenpidlhEUzhXcFNMWklIYkQ3VU9vYlYxcFYzClYzRW02RXlpWUEvbjhMYTFRMnZra0EyUDk1d3JqWlBuemZIeUhWVFpCTUY4YU1MSHVpVHZ5WlVVV0JYMTg1UFAKQ2MxRncwanNmVThJMDBzbUNOeURBZjVMejFjRUVrNWlGYUswMDJRblUyNk5lcDF3U3BMcVZWWVptSW9UVU9DOApCNzNpU3J6Y0wyVmdBejRCaUQxdUVlUkFMM20zRTB2VVpsQjduKzF1MllrNDFCajdGYnpWR2w1dFpYT3hDMVhxCjJVc0hSbmkzY1VYZ203QlloZDU3aTFHclRRRFJpckRwVFV1RDB3ZlFYTjZLdEx1TmVDYUc0alc4ZTl4QkQrTjIKOFE4Z25UZjdPSEI3VWZkUzVnMWQKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo= conditions: - lastTransitionTime: "2022-01-16T15:26:02Z" lastUpdateTime: "2022-01-16T15:26:01Z" message: This CSR was approved by kubectl certificate approve. reason: KubectlApprove status: "True" type: Approved导出证书文件:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl get csr liruilong -o jsonpath='{.status.certificate}'| base64 -d > liruilong.crt

Authorize the user. Here liruilong is bound to the cluster role cluster-admin (a root-like role), which gives liruilong administrator privileges:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl create clusterrolebinding test --clusterrole=cluster-admin --user=liruilong
    clusterrolebinding.rbac.authorization.k8s.io/test created

Creating the kubeconfig file

Copy the CA certificate:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$ls
    csr.yaml       # manifest for the certificate request
    liruilong.crt  # public certificate
    liruilong.csr  # certificate signing request
    liruilong.key  # private key
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$ls /etc/kubernetes/pki/
    apiserver.crt              apiserver.key                 ca.crt  front-proxy-ca.crt      front-proxy-client.key  sa.pub
    apiserver-etcd-client.crt  apiserver-kubelet-client.crt  ca.key  front-proxy-ca.key      liruilong.csv
    apiserver-etcd-client.key  apiserver-kubelet-client.key  etcd    front-proxy-client.crt  sa.key
    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$cp /etc/kubernetes/pki/ca.crt .

Set the cluster entry, which contains the cluster name, the server address and the cluster certificate:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl config --kubeconfig=kc1 set-cluster cluster1 --server=https://192.168.26.81:6443 --certificate-authority=ca.crt --embed-certs=true
    Cluster "cluster1" set.

Create a context named context1 for this cluster:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl config --kubeconfig=kc1 set-context context1 --cluster=cluster1 --namespace=default --user=liruilong
    Context "context1" created.

The --embed-certs=true flag used with set-cluster means that the certificate content is written into the kubeconfig file itself instead of being referenced by path.

Set the user entry, which contains the user name, the user certificate and the user private key:

    ┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
    └─$kubectl config --kubeconfig=kc1 set-credentials liruilong --client-certificate=liruilong.crt --client-key=liruilong.key --embed-certs=true
    User "liruilong" set.

View the generated kubeconfig file:

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$cat kc1apiVersion: v1clusters:- cluster: certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1USXhNakUyTURBME1sb1hEVE14TVRJeE1ERTJNREEwTWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTkdkCisrWnhFRDJRQlR2Rm5ycDRLNFBrd2lsYXUrNjdXNTVobVdwc09KSHF6ckVoWUREY3l4ZTU2Z1VJVDFCUTFwbU0KcGFrM0V4L0JZRStPeHY4ZmxtellGbzRObDZXQjl4VXovTW5HQi96dHZsTGpaVEVHZy9SVlNIZTJweCs2MUlSMQo2Mkh2OEpJbkNDUFhXN0pmR3VXNDdKTXFUNTUrZUNuR00vMCtGdnI2QUJnT2YwNjBSSFFuaVlzeGtpSVJmcjExClVmcnlPK0RFTGJmWjFWeDhnbi9tcGZEZ044cFgrVk9FNFdHSDVLejMyNDJtWGJnL3A0emd3N2NSalpSWUtnVlUKK2VNeVIyK3pwaTBhWW95L2hLYmg4RGRUZ3FZeERDMzR6NHFoQ3RGQnVia1hmb3Ftc3FGNXpQUm1ZS051RUgzVAo2c1FNSFl4emZXRkZvSGQ2Y0JNQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZHRGNLU3V1VjVNNXlaTkJHUDEvNmg3TFk3K2VNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBRVE0SUJhM0hBTFB4OUVGWnoyZQpoSXZkcmw1U0xlanppMzkraTdheC8xb01SUGZacElwTzZ2dWlVdHExVTQ2V0RscTd4TlFhbVVQSFJSY1RrZHZhCkxkUzM5Y1UrVzk5K3lDdXdqL1ZrdzdZUkpIY0p1WCtxT1NTcGVzb3lrOU16NmZxNytJUU9lcVRTbGpWWDJDS2sKUFZxd3FVUFNNbHFNOURMa0JmNzZXYVlyWUxCc01EdzNRZ3N1VTdMWmg5bE5TYVduSzFoR0JKTnRndjAxdS9MWAo0TnhKY3pFbzBOZGF1OEJSdUlMZHR1dTFDdEFhT21CQ2ZjeTBoZHkzVTdnQXh5blR6YU1zSFFTamIza0JDMkY5CkpWSnJNN1FULytoMStsOFhJQ3ZLVzlNM1FlR0diYm13Z1lLYnMvekswWmc1TE5sLzFJVThaTUpPREhTVVBlckQKU09ZPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg== server: https://192.168.26.81:6443 name: cluster1contexts:- context: cluster: cluster1 namespace: default user: liruilong name: context1current-context: ""kind: Configpreferences: {}users:- name: liruilong user: client-certificate-data: 
LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURDekNDQWZPZ0F3SUJBZ0lRUC9aR05rUjdzVy9sdHhkQTNGQjBoekFOQmdrcWhraUc5dzBCQVFzRkFEQVYKTVJNd0VRWURWUVFERXdwcmRXSmxjbTVsZEdWek1CNFhEVEl5TURFeE5qRTFNakV3TWxvWERUSXpNREV4TmpFMQpNakV3TWxvd0pqRVFNQTRHQTFVRUNoTUhZMnRoTWpBeU1ERVNNQkFHQTFVRUF4TUpiR2x5ZFdsc2IyNW5NSUlCCklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF0OU9CbndhQTNWZEZmamRpdXJKUHRjYWkKWE9HUGMxQVdGbXJsZ29jcTR2VDVXWmdxd1g5T0RvSnpDREJZZVFJQ3h0Wm5uUk9XY1B2dVB6K1JvUTJuTzcrcQpnNUNjZzlWZmVOWFRwUDB0VXZsQ21ZVVg2dkRDdlgxUDR3VnNFdXNydlZBdkF4NmdqZTZzNW94VmFlMjBxcUFFCnpTUXJhczhPeldsZ1Frd0xjNU5MZ2k3bWlpNHNzaVpQRXU1ZFZIRWs5dHdCeUZTV0dsanJETkhvc3hSQUU1eWsKWjBnODBWSzN1U1JNNmFHSEJ0QmVpbysxa2d0U0xDMlVScy9QWUwwRGNSQm9zUUx0c3JublFSMTlITk1ZOTB5SApYN3Jta3ZqcHdOdkRZWjNIWUVvbGJQZThWZjhBTFpsbDVBTnJ5SUJqbXNrY01QM2lRMzdxWGZUN21LOEp4d0lECkFRQUJvMFl3UkRBVEJnTlZIU1VFRERBS0JnZ3JCZ0VGQlFjREFqQU1CZ05WSFJNQkFmOEVBakFBTUI4R0ExVWQKSXdRWU1CYUFGR0RjS1N1dVY1TTV5Wk5CR1AxLzZoN0xZNytlTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFCagpOelREMmZ5bTc3bXQ4dzlacXRZN3NQelhmNHJQTXpWUzVqV3NzenpidlhEUzhXcFNMWklIYkQ3VU9vYlYxcFYzClYzRW02RXlpWUEvbjhMYTFRMnZra0EyUDk1d3JqWlBuemZIeUhWVFpCTUY4YU1MSHVpVHZ5WlVVV0JYMTg1UFAKQ2MxRncwanNmVThJMDBzbUNOeURBZjVMejFjRUVrNWlGYUswMDJRblUyNk5lcDF3U3BMcVZWWVptSW9UVU9DOApCNzNpU3J6Y0wyVmdBejRCaUQxdUVlUkFMM20zRTB2VVpsQjduKzF1MllrNDFCajdGYnpWR2w1dFpYT3hDMVhxCjJVc0hSbmkzY1VYZ203QlloZDU3aTFHclRRRFJpckRwVFV1RDB3ZlFYTjZLdEx1TmVDYUc0alc4ZTl4QkQrTjIKOFE4Z25UZjdPSEI3VWZkUzVnMWQKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo= client-key-data: 
LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdDlPQm53YUEzVmRGZmpkaXVySlB0Y2FpWE9HUGMxQVdGbXJsZ29jcTR2VDVXWmdxCndYOU9Eb0p6Q0RCWWVRSUN4dFpublJPV2NQdnVQeitSb1Eybk83K3FnNUNjZzlWZmVOWFRwUDB0VXZsQ21ZVVgKNnZEQ3ZYMVA0d1ZzRXVzcnZWQXZBeDZnamU2czVveFZhZTIwcXFBRXpTUXJhczhPeldsZ1Frd0xjNU5MZ2k3bQppaTRzc2laUEV1NWRWSEVrOXR3QnlGU1dHbGpyRE5Ib3N4UkFFNXlrWjBnODBWSzN1U1JNNmFHSEJ0QmVpbysxCmtndFNMQzJVUnMvUFlMMERjUkJvc1FMdHNybm5RUjE5SE5NWTkweUhYN3Jta3ZqcHdOdkRZWjNIWUVvbGJQZTgKVmY4QUxabGw1QU5yeUlCam1za2NNUDNpUTM3cVhmVDdtSzhKeHdJREFRQUJBb0lCQUJsS0I3TVErZmw1WUI0VgpFSWdPcjlpYUV3d2tHOUFKWElDSkJEb0l6bVdhdmhNTlZCUjZwd3BuOTl0UWkxdGFZM2RuVjZuTVlBMzdHck9vCjB5Z004TXpQZVczUUh6Z2p5cGFkRkJqR204Mm1iUHNoekVDT0RyeHkyT0txaEV1MS9yWjBxWU1NVzVvckU2NUQKOEJ3NmozaEp1MTlkY251bk1Lb2hyUlJ4MGNGOGJrVHpRcWk1Y0xGZ0lBUlArNklFcnQrS0FVSGFVQ0wvSUtTYgoyblJnSmhvSWszTUJnQnM3eVl0NFpVNlpkSVFLRU56RzRUd2VjNG5ESXE2TkllU1ZpSEVtbmdjRXVOdTNOaWEvCmxwTWd5ZHJKZWJKYStrc0FiZU4zU21TRGc2MXpVWUpHV2FOZUFPemdmbkZRTVp2Y2FyNEQ1b0xCQUE5Rlpic0EKc0hUZjBBRUNnWUVBNWVmdDJSV25kM2pueWJBVVExa3dRTzlzN1BmK241OXF3ekIwaEdrcC9qcjh2dGRYZkxMNgo0THBoNWFhKzlEM0w5TEpMdk8xa0cyaG5UbFE4Q25uVXpVUUUreldiYlRJblR0eE1hRzB5VjJlZ3NaQkFseERYClk1K2tZZ29EVXIyN2dWbE5QZ29SWVkzRG1ZZHplVmp0NEFHOE9JNWRPUlJ6bFE3VHN0Nk9XUUVDZ1lFQXpMQ3kKM2Q0SkRZRjBpeXFFc2FsNFNsckdEc3hmY2xxeUZaVWpmcUcvdGFHeEFTeE9zL0h3SDNwR1cwQXh3c2tPdVNkUwpWcGxOOC9uZjBMQjdPV0hQL2FjTlJLbHdJeUZrNG9EU0VMOVJ5d0FFVEF3NHdrYitoRzNZdUFHU2YvY1dEWXNtCjNJUUxlMVdFS0JSZDVBS1lkYXdyYlJtclFkSndSaFFkalNzOTJzY0NnWUVBck1WSVpwVHhUc1ViV3VQcHRscjEKK2paekl2bVM3WjI5ZTRXVWFsVWxhNW9raWI0R1R2MnBydXdoMlpVZmR5aGhkemZ0MXNLSE1sbVpHTElRbE1iTgpkcHdoS2k4MDZEQ0NmYTdyOUtYcTZPaEZTR3JoUHlVMjEvVUdjVzZZNUxzVWg3WDJhQ0xrd096cUN4eFJXT1hOCmpVT0FrUGZiY3FPOTRFeE9KdU05RWdFQ2dZRUFwNUVqN0xPL0wzcENBVWVlZDU3bjVkN244dWRtWDhSVnM0dHoKRWxDeUU2dzVybDhxVXUrR0J3N2ZtQVkyZG1LSUZoVlZ0NlVyQnNjUmJkTjhIUjZ3MmRNdTduM1RXajhWU3NQdwp0RnNiUjVkTTdVQzRHbnRxRXRtbUtBVEpmTTYzRkFGTm9Bck5KM3Q3aENBZ09PL1RCY29iaHVZVHAvL3hmNzBwCjhBNXRSYk1DZ1lCMkJTb0ZTbW1sVFN5RjdnZnhmM281dTNXM3lw
TENIRFV0cFZkYlAxZm9vemJwZUs3V29IY2YKTEhkMG4xeUNLcHdiWU1HZ2hGNGlHbUVHSUlkc0NWWlArRVY2bGR1UEtqcUVtOWtqdUxST0t6UlpIRm9HeUFTTwpLcmIzVlI0Q0tIdm5aQVBWY3R2N1B1KzRKZ01saUpIbDhHVllocU01VXlrYkxSTWROSFNOSVE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$修改kubeconfig文件当前的上下文为之前创建的上下文

┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$sed 's#current-context: ""#current-context: "context1"#' kc1 | grep current-contextcurrent-context: "context1"┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$sed -i 's#current-context: ""#current-context: "context1"#' kc1┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]└─$cat kc1 | grep current-contextcurrent-context: "context1"这样 kubeconfig 文件就创建完毕了,下面开始验证 kubeconfig 文件。

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl auth can-i list pods --as liruilong # check whether liruilong may list pods in the current namespace
yes
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl auth can-i list pods -n kube-system --as liruilong # check whether liruilong may list pods in the kube-system namespace
yes
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

Copy the kubeconfig file to the client machine:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$scp kc1 root@192.168.26.55:~
```

On the client machine, test access by pointing kubectl at that kubeconfig file:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS        AGE
calico-kube-controllers-78d6f96c7b-85rv9   1/1     Running   194 (14h ago)   33d
calico-node-6nfqv                          0/1     Running   255 (14h ago)   35d
calico-node-fv458                          0/1     Running   50              35d
calico-node-h5lsq                          1/1     Running   94 (38h ago)    35d
......
┌──[root@liruilongs.github.io]-[~]
└─$
```

With that, the kubeconfig file is created and confirmed to work.

API Server Authorization Management

Environment version

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get nodes
NAME                         STATUS   ROLES                  AGE   VERSION
vms81.liruilongs.github.io   Ready    control-plane,master   41d   v1.22.2
vms82.liruilongs.github.io   Ready    <none>                 41d   v1.22.2
vms83.liruilongs.github.io   Ready    <none>                 41d   v1.22.2
```

Besides the k8s cluster, we also use a machine outside the cluster, liruilongs.github.io, as the client. It has the kubectl client installed, and the kubeconfig file for user liruilong has already been uploaded to it.

Namespace preparation

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl create ns liruilong-rbac-create
namespace/liruilong-rbac-create created
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$mkdir k8s-rbac-create;cd k8s-rbac-create
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-rbac-create
Context "kubernetes-admin@kubernetes" modified.
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

Authorization policy overview

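When a client calls the API Server, the API Server first authenticates the user and then runs the authorization flow, in which the configured authorization policy decides whether the API call is permitted. This will be familiar to developers: security frameworks such as Spring Security go through the same two steps of authentication and authorization.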

Before permissions can be checked, they have to be granted. Put simply, granting means giving different users different access rights.

The API Server currently supports the following authorization policies:

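| Policy | Description |
|---|---|
| AlwaysDeny | Rejects all requests; generally used for testing. |
| AlwaysAllow | Accepts all requests; suitable if the cluster needs no authorization step. This is the default value of `--authorization-mode` when the flag is not set (kubeadm sets `Node,RBAC` instead). |
| ABAC | Attribute-Based Access Control: requests are matched against authorization rules configured by the user. |
| Webhook | Authorizes requests by calling an external REST service. |
| RBAC | Role-Based Access Control. |
| Node | A special-purpose mode that controls access for requests made by kubelets. |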

The policy is set with the API Server startup flag `--authorization-mode`.

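```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep authorization-mode
    - --authorization-mode=Node,RBAC
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

As for the policies themselves: AlwaysAllow and AlwaysDeny need little discussion, ABAC is not flexible enough, and the Node authorizer mainly serves the kubelet on each node when it accesses the apiserver. Almost everything else is handled by the RBAC authorizer, so RBAC is what we look at next.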
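Before moving on, one detail about the flag value seen above: when several modes are listed, the API Server consults the authorizers in the listed order, and the first one that reaches an allow or deny decision ends the check. Below is a minimal sketch for reading that order out of a static Pod manifest. The helper name `auth_modes` is invented for illustration; the path in the usage note is the kubeadm location shown above.

```shell
# auth_modes FILE
# Hypothetical helper: print the authorization modes configured in a
# kube-apiserver manifest, one per line, in the order they are consulted.
auth_modes() {
    # Keep only the value after "--authorization-mode=" and split it on commas.
    sed -n 's/^.*--authorization-mode=\([A-Za-z,]*\).*$/\1/p' "$1" | tr ',' '\n'
}
```

Running `auth_modes /etc/kubernetes/manifests/kube-apiserver.yaml` on this cluster would list `Node` first and `RBAC` second.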

RBAC authorization mode

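The official documentation covers this topic thoroughly and is the place to go for in-depth study. Here we walk through some common, complete demos. Official documentation: https://kubernetes.io/zh/docs/reference/access-authn-authz/rbac/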

RBAC (Role-Based Access Control) attaches permissions to roles, and users obtain permissions by being assigned to roles as members.

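RBAC was introduced in Kubernetes 1.5, promoted to Beta in 1.6, and to GA in 1.8. It is the default for kubeadm installations. Compared with the other access control methods, RBAC has the following advantages:

- It fully covers both resource and non-resource permissions in the cluster.

- It is implemented entirely by a few API objects which, like any other API object, can be managed with kubectl or the API.

- It can be adjusted at runtime without restarting the API Server.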

To use RBAC, add `--authorization-mode=RBAC` to the API Server startup flags. A cluster installed with kubeadm uses the two modes `Node,RBAC` by default.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep authorization-mode
    - --authorization-mode=Node,RBAC
```

RBAC introduces four new top-level API resource objects:

- Role
- ClusterRole
- RoleBinding
- ClusterRoleBinding

Like other API resource objects, these can be managed with kubectl or through API calls.

Roles

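Role: a role is a set of permissions. A Role defines a role within a single namespace; for a cluster-level role, use a ClusterRole. A Role can only grant access to resources inside its own namespace.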

ClusterRole: a cluster role can manage namespaced resources just as a Role can and, because it is cluster-scoped, it can also grant access to the following:

- Cluster-scoped resources, such as Node.

- Non-resource paths, such as "/api".

- Resources across all namespaces, such as pods (needed to authorize operations like `kubectl get pods --all-namespaces`).
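To make the first two items concrete, here is a sketch of a ClusterRole that a namespaced Role could not express. The name `node-reader-demo` and the file name are invented for this example; nothing else in the walkthrough depends on them.

```shell
# Write a demo ClusterRole manifest (object name and file name are illustrative).
cat > clusterrole-node-reader-demo.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-reader-demo          # ClusterRoles are not namespaced
rules:
- apiGroups: [""]                 # core API group
  resources: ["nodes"]            # a cluster-scoped resource
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/api", "/api/*"]   # non-resource paths
  verbs: ["get"]
EOF
# On a machine with administrator access to the cluster, it could be applied with:
#   kubectl apply -f clusterrole-node-reader-demo.yaml
```

Rules for cluster-scoped resources and non-resource URLs only take effect when the ClusterRole is bound with a ClusterRoleBinding.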

Next, let's look at the details of one of the cluster's built-in cluster roles, admin:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl describe clusterrole admin
Name:         admin
Labels:       kubernetes.io/bootstrapping=rbac-defaults
Annotations:  rbac.authorization.kubernetes.io/autoupdate: true
PolicyRule:
  Resources                               Non-Resource URLs  Resource Names  Verbs
  ---------                               -----------------  --------------  -----
  rolebindings.rbac.authorization.k8s.io  []                 []              [create delete deletecollection get list patch update watch]
  roles.rbac.authorization.k8s.io         []                 []              [create delete deletecollection get list patch update watch]
  configmaps                              []                 []              [create delete deletecollection patch update get list watch]
  events                                  []                 []              [create delete deletecollection patch update get list watch]
  persistentvolumeclaims                  []                 []              [create delete deletecollection patch update get list watch]
  pods                                    []                 []              [create delete deletecollection patch update get list watch]
  replicationcontrollers/scale            []                 []              [create delete deletecollection patch update get list watch]
.........
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

In the admin cluster role above, the Resources column lists the resources that may be accessed and the Verbs column lists the actions allowed on them.

Role bindings

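Once a role exists, how is it tied to a user or a group? That is the job of the role binding resource objects. You may remember that when we set up authentication, there was an authorization step: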

Authorize the user: here liruilong is given the cluster role cluster-admin (a root-like role), so liruilong has administrator permissions.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl create clusterrolebinding test --clusterrole=cluster-admin --user=liruilong
clusterrolebinding.rbac.authorization.k8s.io/test created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

Here the cluster role cluster-admin was bound to the user liruilong. The cluster-admin role is comparable to the root user.

RoleBinding and ClusterRoleBinding bind a role to one or more subjects. A subject can be a User, a Group, or a Service Account.

In general, use a RoleBinding to grant permissions within one namespace and a ClusterRoleBinding to grant them across the whole cluster.

How the two kinds of binding differ:

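A RoleBinding can reference a Role. It can also reference a ClusterRole, in which case the permissions defined by the ClusterRole are granted to the subjects only within the RoleBinding's own namespace.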

A ClusterRoleBinding can only reference a ClusterRole. It grants permissions at cluster level, meaning they take effect in every namespace.
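The RoleBinding-to-ClusterRole case is easy to miss, so here is a sketch of it: a RoleBinding that references the built-in `view` ClusterRole. The binding name `rbind-view-demo` and the file name are hypothetical; the user and namespace are the ones used in this walkthrough. Because the object is a RoleBinding, the read-only permissions from `view` apply only inside `liruilong-rbac-create`.

```shell
# Write a demo RoleBinding that reuses a ClusterRole inside one namespace.
cat > rbind-view-demo.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbind-view-demo               # illustrative name
  namespace: liruilong-rbac-create    # the grant is limited to this namespace
subjects:
- kind: User
  name: liruilong
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole                   # a ClusterRole, referenced from a RoleBinding
  name: view
  apiGroup: rbac.authorization.k8s.io
EOF
# On a machine with administrator access to the cluster, it could be applied with:
#   kubectl apply -f rbind-view-demo.yaml
```

The practical benefit is reuse: one ClusterRole can be defined once and bound namespace by namespace, rather than copying an identical Role into each namespace.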

Hands-on

Creating a role

List the roles in the current namespace:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get role
No resources found in liruilong-rbac-create namespace.
```

Create a Role resource object:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl create role role-liruilong --verb=get,list,watch,create --resource=pod --dry-run=client -o yaml
```

This defines a role named role-liruilong that grants four verbs on pods: get (view details), list, watch, and create.

The generated resource file:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role-liruilong
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - watch
  - create
```

Fields in the resource file

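- apiGroups: the list of API groups the rule applies to, for example `""` (the core group, whose apiVersion is plain `v1`), `apps`, or `batch`. The group is the part of an apiVersion before the slash, without the version.

- resources: the list of resource objects the rule applies to, for example pods, deployments, jobs.

- verbs: the list of operations allowed on those resources, for example get, list, watch, create, update, patch, delete.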

Create the Role resource object from a YAML file:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl create role role-liruilong --verb=get,list,watch,create --resource=pod --dry-run=client -o yaml >role-liruilong.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl apply -f role-liruilong.yaml
role.rbac.authorization.k8s.io/role-liruilong created
```

View the role:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl get role
NAME             CREATED AT
role-liruilong   2022-01-23T13:17:15Z
```

View the role in detail:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$kubectl describe role role-liruilong
Name:         role-liruilong
Labels:       <none>
Annotations:  <none>
PolicyRule:
  Resources  Non-Resource URLs  Resource Names  Verbs
  ---------  -----------------  --------------  -----
  pods       []                 []              [get list watch create]
```

Creating a role binding

Bind the role to the user liruilong:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl create rolebinding rbind-liruilong --role=role-liruilong --user=liruilong
rolebinding.rbac.authorization.k8s.io/rbind-liruilong created
```

View the role binding:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl get rolebindings
NAME              ROLE                  AGE
rbind-liruilong   Role/role-liruilong   23s
```

View the role binding in detail:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl describe rolebindings rbind-liruilong
Name:         rbind-liruilong
Labels:       <none>
Annotations:  <none>
Role:
  Kind:  Role
  Name:  role-liruilong
Subjects:
  Kind  Name       Namespace
  ----  ----       ---------
  User  liruilong
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

Then we test access from the client machine.

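Here we use the kubeconfig file created earlier, which was also issued for the user liruilong. Note that the Forbidden results further down only make sense if the earlier cluster-admin binding `test` is no longer in place; its removal is not shown in the original session.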

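```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl config view | grep namesp
    namespace: liruilong-rbac-create
```

The cluster-side context uses the namespace liruilong-rbac-create, so change the namespace in the client's kubeconfig file to match: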

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ cat kc1 | grep namesp
    namespace: default
┌──[root@liruilongs.github.io]-[~]
└─$ sed 's#namespace: default#namespace: liruilong-rbac-create#g' kc1 | grep namesp
    namespace: liruilong-rbac-create
┌──[root@liruilongs.github.io]-[~]
└─$ sed -i 's#namespace: default#namespace: liruilong-rbac-create#g' kc1
```

Test from the client with the kubeconfig file specified. Authentication and authorization both pass, and the current namespace has no resource objects yet:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get pods
No resources found in liruilong-rbac-create namespace.
```

Then we create a pod from the client:

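```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 run pod-demo --image=nginx --image-pull-policy=IfNotPresent --dry-run=client -o yaml >pod-demo.yaml
```

The step that applies `pod-demo.yaml` is not shown in the original session. Once the pod has been created from it, listing pods shows it running: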

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get pods
NAME       READY   STATUS    RESTARTS   AGE
pod-demo   1/1     Running   0          28m
```

Now try to delete the pod. The role does not grant the delete verb, so the request is refused:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 delete pod pod-demo
Error from server (Forbidden): pods "pod-demo" is forbidden: User "liruilong" cannot delete resource "pods" in API group "" in the namespace "liruilong-rbac-create"
┌──[root@liruilongs.github.io]-[~]
└─$
```

Back on the cluster, add the delete verb to the role:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$vim role-liruilong.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl apply -f role-liruilong.yaml
role.rbac.authorization.k8s.io/role-liruilong configured
```

The edited role-liruilong.yaml, with delete added to the verbs:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role-liruilong
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - list
  - watch
  - create
  - delete
```

Retry the deletion. This time the pod is deleted:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 delete pod pod-demo
Error from server (Forbidden): pods "pod-demo" is forbidden: User "liruilong" cannot delete resource "pods" in API group "" in the namespace "liruilong-rbac-create"
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 delete pod pod-demo
pod "pod-demo" deleted
┌──[root@liruilongs.github.io]-[~]
└─$
```

Next we query Service information and find that we have no permission:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get svc
Error from server (Forbidden): services is forbidden: User "liruilong" cannot list resource "services" in API group "" in the namespace "liruilong-rbac-create"
```

The error tells us that the user liruilong needs permissions on the services resource.
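Instead of discovering missing permissions one Forbidden error at a time, the administrator can ask the API Server directly with `kubectl auth can-i` and impersonation, as in the earlier verification step. A small sketch follows; the function name `can_i_report` is an assumption of this example, while `auth can-i`, `--as` and `-n` are the kubectl options already used above.

```shell
# can_i_report USER NAMESPACE RESOURCE VERB...
# Hypothetical helper: print one "verb resource: answer" line per verb by
# asking the API Server through kubectl impersonation (--as).
can_i_report() {
    user=$1
    ns=$2
    resource=$3
    shift 3
    for verb in "$@"; do
        # "kubectl auth can-i" prints yes or no and exits non-zero on "no",
        # so the exit status is deliberately ignored here.
        answer=$(kubectl auth can-i "$verb" "$resource" -n "$ns" --as "$user" 2>/dev/null) || true
        printf '%s %s: %s\n' "$verb" "$resource" "${answer:-unknown}"
    done
}
```

On the control-plane node it could be called as `can_i_report liruilong liruilong-rbac-create services get list create delete`, which prints one line per verb.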

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$vim role-liruilong.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl apply -f role-liruilong.yaml
role.rbac.authorization.k8s.io/role-liruilong configured
```

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role-liruilong
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - services
  - deployments
  verbs:
  - get
  - list
  - watch
  - create
  - delete
```

On the cluster side we added services and deployments to the role. Testing from the client again: where access was refused before, kubectl now reports that the namespace has no resources, which shows that authorization succeeded.

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get svc
No resources found in liruilong-rbac-create namespace.
┌──[root@liruilongs.github.io]-[~]
└─$
```

Accessing deployments, however, is still refused with a permission error. Why? The cause is the `apiGroups` field.

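```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get deployments
Error from server (Forbidden): deployments.apps is forbidden: User "liruilong" cannot list resource "deployments" in API group "apps" in the namespace "liruilong-rbac-create"
┌──[root@liruilongs.github.io]-[~]
└─$
```

Listing the API resources shows that deployments and services are defined under different API versions, `apps/v1` and `v1` respectively. The `apiGroups` field of a Role selects the API group, which is the part of the apiVersion before the slash (`apps`). Resources whose apiVersion is plain `v1` belong to the core group, written as `""`.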

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl api-resources | grep pods
pods                  po    v1                       true    Pod
pods                        metrics.k8s.io/v1beta1   true    PodMetrics
podsecuritypolicies   psp   policy/v1beta1           false   PodSecurityPolicy
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl api-resources | grep deploy
deployments           deploy   apps/v1               true    Deployment
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl api-resources | grep service
serviceaccounts       sa    v1                          true    ServiceAccount
services              svc   v1                          true    Service
apiservices                 apiregistration.k8s.io/v1   false   APIService
servicemonitors             monitoring.coreos.com/v1    true    ServiceMonitor
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$
```

So the resource file has to be edited to add the `apps` group, the part that precedes `v1` in `apps/v1`.
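The relationship between the apiVersion values listed by `kubectl api-resources` and the `apiGroups` field can be written down as a tiny rule: the group is whatever precedes the slash, and a bare version such as `v1` means the core group, written as an empty string in a Role. A sketch in shell, with a hypothetical helper name:

```shell
# api_group APIVERSION
# Hypothetical helper: print the API group of an apiVersion string.
# "apps/v1" gives "apps"; "v1" (no slash) gives "" (the core group).
api_group() {
    case "$1" in
        */*) printf '%s\n' "${1%%/*}" ;;  # keep the part before the slash
        *)   printf '\n' ;;               # core group: empty string
    esac
}
```

`api_group apps/v1` prints `apps`, while `api_group v1` prints an empty line. That is why deployments need `apps` in `apiGroups`, whereas pods and services are covered by `""`.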

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$vim role-liruilong.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-rbac-create]
└─$kubectl apply -f role-liruilong.yaml
role.rbac.authorization.k8s.io/role-liruilong configured
```

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: role-liruilong
rules:
- apiGroups:
  - ""
  - "apps"
  resources:
  - pods
  - services
  - deployments
  verbs:
  - get
  - list
  - watch
  - create
  - delete
```

Testing from the client again, authorization now succeeds; the namespace simply has no Deployment resources yet.
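One caveat about the rule above: within a single rule, `apiGroups`, `resources` and `verbs` are combined, so the rule also names combinations that do not exist, such as `deployments` in the core group. That is harmless here, but a tighter way to write it is one rule per API group. A sketch of that variant, written to a separate, hypothetically named file so the original `role-liruilong.yaml` stays untouched:

```shell
# Write a variant of the role with one rule per API group.
cat > role-liruilong-split.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-liruilong
rules:
- apiGroups: [""]                   # core group: pods and services
  resources: ["pods", "services"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: ["apps"]               # apps group: deployments
  resources: ["deployments"]
  verbs: ["get", "list", "watch", "create", "delete"]
EOF
# From the cluster side, it could replace the combined rule with:
#   kubectl apply -f role-liruilong-split.yaml
```

Splitting the rules also makes it possible to give each group its own verb list later, for example read-only access to deployments.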

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get deployments
Error from server (Forbidden): deployments.apps is forbidden: User "liruilong" cannot list resource "deployments" in API group "apps" in the namespace "liruilong-rbac-create"
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 get deployments
No resources found in liruilong-rbac-create namespace.
┌──[root@liruilongs.github.io]-[~]
└─$
```

Now we create a Deployment from the client:

```bash
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 create deployment web-liruilong --image=nginx --replicas=2 --dry-run=client -o yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: web-liruilong
  name: web-liruilong
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-liruilong
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: web-liruilong
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}
┌──[root@liruilongs.github.io]-[~]
└─$ kubectl --kubeconfig=kc1 create deployment web-liruilong --image=nginx --replicas=2 --dry-run=client -o yaml >web-liruilong.yaml
```

The Deployment is created successfully from this manifest, and listing the deployments now works as expected: