Scraping "simp" quotes with Python: helping you find a girlfriend before 520

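🐚About the author: 苏凉 (focused on web scraping and data analysis)

🐳Blog homepage: 苏凉.py's blog

🌐Series: Python web scraping column

👑Motto: The sea is wide enough for fish to leap, the sky high enough for birds to fly.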

📰If you think this post is any good, I'd really appreciate your support!!!

👉Follow ✨ Like 👍 Bookmark 📂

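🍒Before we start

520 (May 20) is almost here, so I've carefully put together this set of "simp" quotes for all my single friends. Of course, if the simping works, it doesn't count as simping anymore, you know what I mean! /smirk

There's only a story if you make the first move!!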

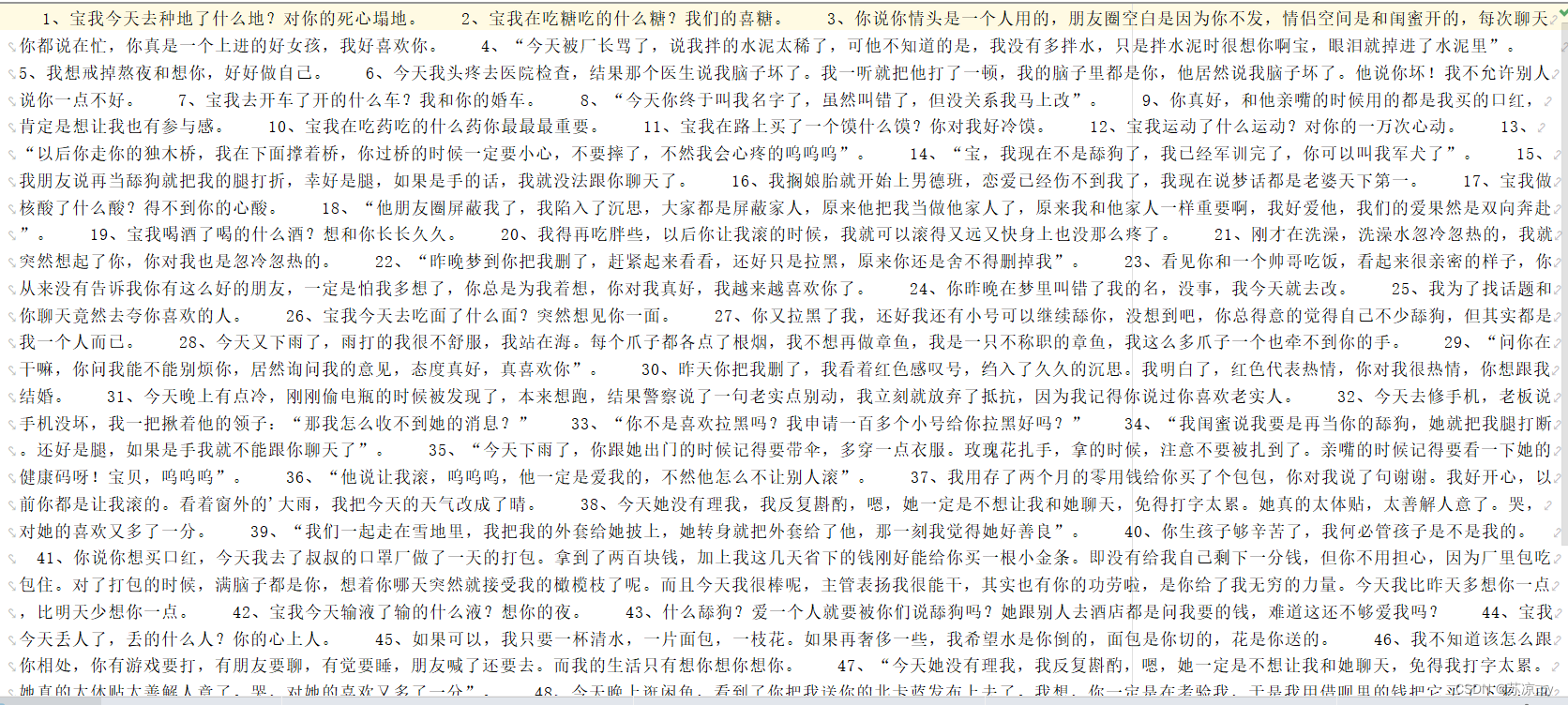

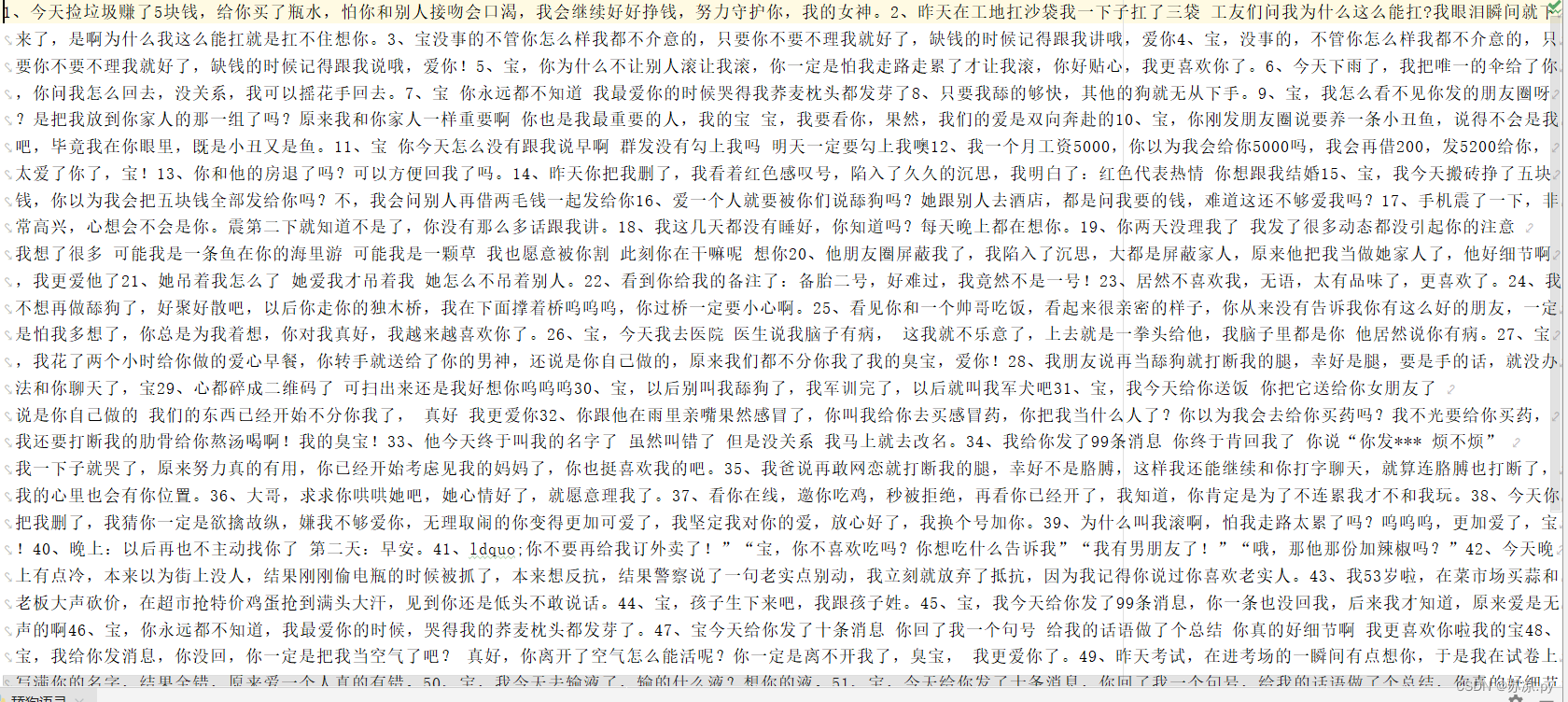

The result

Pick any line at random and it hits the mark every time!!

Source code

This code can scrape different pages: just add the corresponding URL and the XPath of the sentences you want to scrape.
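One way to make "just add a URL and an XPath" concrete is a small lookup table. This is only a sketch, not the code used in this post: `PageConfig`, `PAGES`, and `get_page_config` are hypothetical names, while the URLs, encodings, and XPath expressions are the ones from the functions in this post.

```python
from typing import NamedTuple


class PageConfig(NamedTuple):
    """Everything that differs between pages (hypothetical helper type)."""
    url: str
    encoding: str
    xpath: str


# URLs, encodings, and XPath expressions copied from the functions in this post.
PAGES = {
    1: PageConfig(
        "https://www.ruiwen.com/wenxue/juzi/850648.html",
        "gb2312",
        '//div[@class = "content"]//p//text()',
    ),
    2: PageConfig(
        "https://m.taiks.com/article/439013.html",
        "utf-8",
        '//div//table//p//text()',
    ),
}


def get_page_config(x: int) -> PageConfig:
    """Look up the settings for page x, failing clearly on an unknown number."""
    try:
        return PAGES[x]
    except KeyError:
        raise ValueError(f"unknown page number: {x}") from None


print(get_page_config(1).url)
```

With a table like this, supporting a third page would mean adding one entry to `PAGES` rather than another `elif` branch and another download function.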

For XPath parsing, see the earlier post: XPath web page parsing
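If you don't have that post at hand, here is a quick, offline illustration of the XPath this scraper uses for the first page. The HTML snippet is made up for the demo and only imitates a `div` with `class="content"`; the real page will look different.

```python
from lxml import etree

# Made-up HTML that imitates the structure the first XPath targets.
html = """
<html><body>
  <div class="content">
    <p>first line</p>
    <p>second <b>line</b></p>
  </div>
  <div class="footer"><p>ignored</p></div>
</body></html>
"""

tree = etree.HTML(html)
# //text() returns every text node under the matched <p> elements,
# in document order, including text inside nested tags such as <b>.
texts = tree.xpath('//div[@class = "content"]//p//text()')
print(texts)  # ['first line', 'second ', 'line']
```

Note that nested tags split a sentence into several text nodes, which is why the output has three items for two paragraphs.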

1. Import the modules

    import requests
    from lxml import etree

2. Program entry point

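    if __name__ == '__main__':
        # Ask which page to scrape: 1 or 2
        x = int(input('Enter which page of quotes to fetch (1 or 2): '))
        content = call_back(x)
        if x == 1:
            down_load1(content)
            print('First page downloaded!')
        elif x == 2:
            down_load2(content)
            print('Second page downloaded!')

3. Return the target page's source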
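This step sets `response.encoding` differently for each site before reading `response.text`, because `requests` uses that attribute to decode the raw bytes. The sketch below shows why it matters using plain bytes and no network access; the sample text is made up.

```python
# Bytes as a GB2312-encoded server would send them (sample text is made up).
text = "你好 520"
raw = text.encode("gb2312")

# Decoding with the wrong codec garbles the Chinese characters;
# errors="replace" substitutes a marker instead of raising an exception.
wrong = raw.decode("utf-8", errors="replace")

# Decoding with the codec the page actually uses recovers the text.
right = raw.decode("gb2312")

print(repr(wrong))
print(right)
```

If a page comes back as mojibake, the declared encoding is usually the first thing to check.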

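    def call_back(x):
        # Pick the URL and the page encoding for the requested page
        if x == 1:
            url = "https://www.ruiwen.com/wenxue/juzi/850648.html"
            encoding = "gb2312"
        elif x == 2:
            url = "https://m.taiks.com/article/439013.html"
            encoding = "utf-8"
        else:
            # Without this branch, url would be undefined for other inputs
            raise ValueError('x must be 1 or 2')
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'
        }
        response = requests.get(url=url, headers=headers)
        # Set the encoding before reading .text so the page decodes correctly
        response.encoding = encoding
        content = response.text
        # print(content)
        print(url)
        return content

4. Find the sentences via XPath and save the text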
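The download functions in this step write every text node returned by the XPath query straight to a file. As a possible variation (not what the code in this post does), the saving step can be isolated into a helper that skips whitespace-only nodes and puts one quote per line. `save_lines` is a hypothetical name, and the sample data and temporary file are made up for the demo.

```python
import os
import tempfile


def save_lines(words, path):
    """Append each non-blank text node to path, one per line; return the count."""
    count = 0
    with open(path, "a", encoding="utf-8") as f:
        for word in words:
            word = word.strip()
            if word:  # skip whitespace-only nodes that //text() can return
                f.write(word + "\n")
                count += 1
    return count


# Usage with made-up sample data and a temporary file.
sample = ["  first quote ", "\n", "second quote"]
demo_path = os.path.join(tempfile.mkdtemp(), "demo.txt")
written = save_lines(sample, demo_path)
print(written)  # 2
```

Opening the file once, outside the loop, also avoids reopening it for every sentence.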

    def down_load1(content):
        tree = etree.HTML(content)
        word_list = tree.xpath('//div[@class = "content"]//p//text()')
        # Open the file once. The slice keeps items 2 to 52 and, unlike
        # indexing, does not fail if the page has fewer text nodes.
        with open('舔狼语录.txt', 'a', encoding='utf-8') as f:
            for word in word_list[2:53]:
                # print(word)
                f.write(word)


    def down_load2(content):
        tree = etree.HTML(content)
        word_list2 = tree.xpath('//div//table//p//text()')
        with open('舔狼语录2.txt', 'a', encoding='utf-8') as fp:
            for word2 in word_list2:
                fp.write(word2)

Complete code

    import requests
    from lxml import etree


    def call_back(x):
        # Pick the URL and the page encoding for the requested page
        if x == 1:
            url = "https://www.ruiwen.com/wenxue/juzi/850648.html"
            encoding = "gb2312"
        elif x == 2:
            url = "https://m.taiks.com/article/439013.html"
            encoding = "utf-8"
        else:
            # Without this branch, url would be undefined for other inputs
            raise ValueError('x must be 1 or 2')
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'
        }
        response = requests.get(url=url, headers=headers)
        # Set the encoding before reading .text so the page decodes correctly
        response.encoding = encoding
        content = response.text
        # print(content)
        print(url)
        return content


    def down_load1(content):
        tree = etree.HTML(content)
        word_list = tree.xpath('//div[@class = "content"]//p//text()')
        # Open the file once. The slice keeps items 2 to 52 and, unlike
        # indexing, does not fail if the page has fewer text nodes.
        with open('舔狼语录.txt', 'a', encoding='utf-8') as f:
            for word in word_list[2:53]:
                # print(word)
                f.write(word)


    def down_load2(content):
        tree = etree.HTML(content)
        word_list2 = tree.xpath('//div//table//p//text()')
        with open('舔狼语录2.txt', 'a', encoding='utf-8') as fp:
            for word2 in word_list2:
                fp.write(word2)


    if __name__ == '__main__':
        # Ask which page to scrape: 1 or 2
        x = int(input('Enter which page of quotes to fetch (1 or 2): '))
        content = call_back(x)
        if x == 1:
            down_load1(content)
            print('First page downloaded!')
        elif x == 2:
            down_load2(content)
            print('Second page downloaded!')

Wrapping up

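That's all. To be clear, this post is for entertainment and learning only. Happy 520 in advance: if you have a girlfriend, may you two last a long time, and if you don't, may you find one soon. See you next time!!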