【 ServiceMesh 】Advanced Istio Traffic Management with VirtualService

I. VirtualService (Rich Routing Control)

1.1 URL Redirection (redirect and rewrite)

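- redirect: a redirect. The proxy answers the client directly (with 301 by default) and points it at a new URL. The client then has to send a new request to that URL.

- rewrite: a rewrite of the request. It can change the URL much as redirect does, and it can also rewrite the request straight to the actual resource, among other features. Unlike redirect, the rewrite happens inside the proxy before the request is forwarded, so the client never sees it.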
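To make the difference concrete, here is a toy Python model of how the three rules of the demoapp VirtualService in the lab below resolve a request path. It is a sketch, not Envoy's implementation. The `Forward`/`Redirect` types and the `route` function are hypothetical, and the model assumes plain HTTP and Istio's default redirect code of 301.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Forward:
    """rewrite/route: the sidecar forwards upstream; the client never sees the new path."""
    subset: str
    path: str


@dataclass(frozen=True)
class Redirect:
    """redirect: the sidecar answers the client itself; the client must send a new request."""
    status: int
    location: str


def route(path: str) -> Union[Forward, Redirect]:
    # Rules are checked top to bottom and the first match wins,
    # following the order of the http list in the demoapp VirtualService.
    if path.startswith("/canary"):
        # rewrite uri "/": only the matched prefix is replaced before forwarding.
        return Forward(subset="v11", path="/" + path[len("/canary"):])
    if path.startswith("/backend"):
        # redirect uri "/": the whole path is replaced; host and port come from
        # authority/port. The scheme is assumed to be plain http here.
        return Redirect(status=301, location="http://backend:8082/")
    # default rule: forwarded unchanged to the v10 subset.
    return Forward(subset="v10", path=path)


print(route("/canary"))   # Forward(subset='v11', path='/')
print(route("/backend"))  # Redirect(status=301, location='http://backend:8082/')
```

Because the first match wins, `/canary` never reaches the default rule. A redirect ends the exchange at the proxy: the client receives only the `Location` header and must follow it itself.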

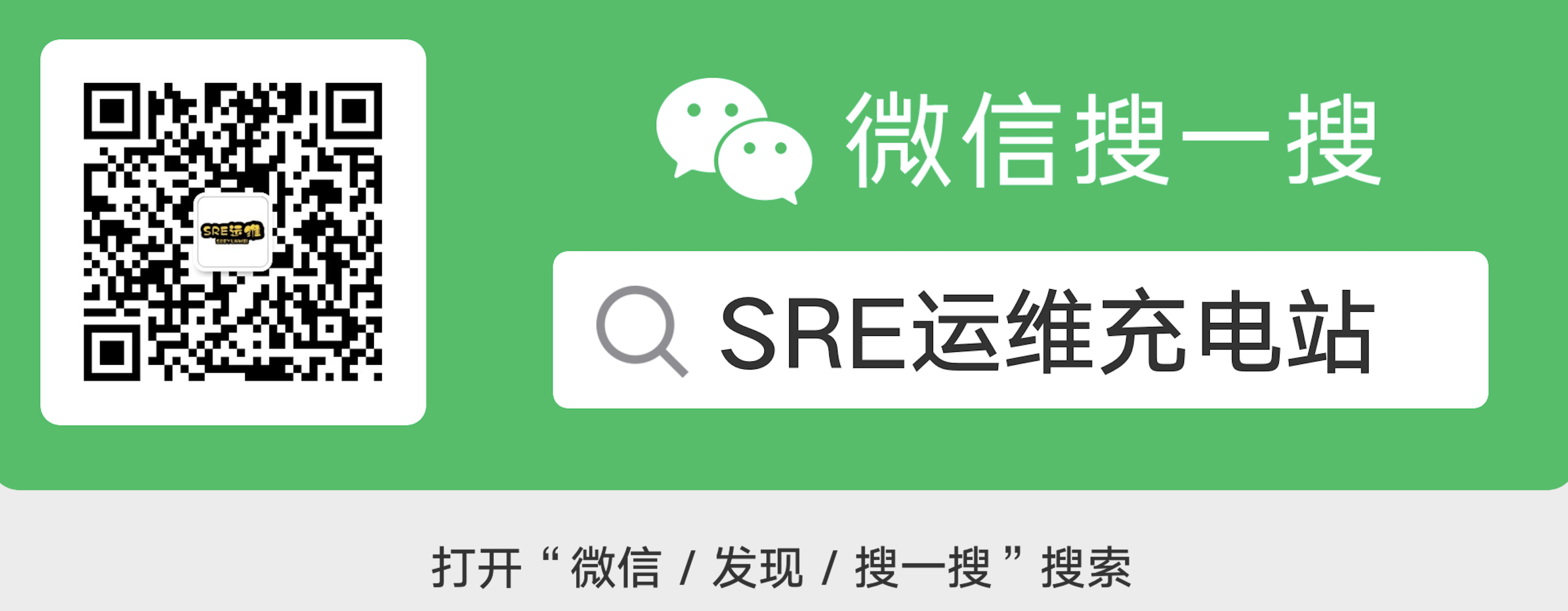

1.1.1 Case Diagram

- proxy-gateway -> virtualservices/proxy -> virtualservices/demoapp(/backend) -> backend:8082 (Cluster)

1.1.2 Lab

1. Add routing rules to the VirtualService

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  # Must match the default route generated from the Service name
  # (one of the DOMAINS shown by istioctl proxy-config routes $DEMOAPP)
  - demoapp
  # Layer-7 routing
  http:
  # rewrite rule: http://demoapp/canary -rewrite-> http://demoapp/
  - name: rewrite
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    # Route destination
    route:
    # Send to the v11 subset of the demoapp cluster
    - destination:
        host: demoapp
        subset: v11
  # redirect rule: http://demoapp/backend -redirect-> http://backend:8082/
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:
      uri: /
      # The client is sent to this host, so a backend Service must exist
      authority: backend
      port: 8082
  - name: default
    # Route destination
    route:
    # Send to the v10 subset of the demoapp cluster
    - destination:
        host: demoapp
        subset: v10
```

2. Deploy the backend service

```yaml
# deploy-backend.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: backend
    version: v3.6
  name: backendv36
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  selector:
    matchLabels:
      app: backend
      version: v3.6
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: backend
        version: v3.6
    spec:
      containers:
      - image: registry.cn-wulanchabu.aliyuncs.com/daizhe/gowebserver:v0.1.0
        imagePullPolicy: IfNotPresent
        name: gowebserver
        env:
        - name: "SERVICE_NAME"
          value: "backend"
        - name: "SERVICE_PORT"
          value: "8082"
        - name: "SERVICE_VERSION"
          value: "v3.6"
        ports:
        - containerPort: 8082
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  name: backend
spec:
  ports:
  - name: http-web
    port: 8082
    protocol: TCP
    targetPort: 8082
  selector:
    app: backend
    version: v3.6
```

```bash
$ kubectl apply -f deploy-backend.yaml
deployment.apps/backendv36 created
service/backend created
$ kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
backendv36-64bd9dd97c-2qtcj   2/2     Running   0          7m47s
backendv36-64bd9dd97c-wmtd9   2/2     Running   0          7m47s
...
$ kubectl get service
NAME      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
backend   ClusterIP   10.100.168.13   <none>        8082/TCP   8m1s
...
```

3. View the routes on proxy

```bash
# The redirect itself does not show up on the backend route
$ istioctl proxy-config routes proxy-6b567ff76f-27c4k | grep -E "demoapp|backend"
8082   backend, backend.default + 1 more...     /*
8080   demoapp, demoapp.default + 1 more...     /canary*   demoapp.default
8080   demoapp, demoapp.default + 1 more...     /*         demoapp.default
80     demoapp.default.svc.cluster.local        /canary*   demoapp.default
80     demoapp.default.svc.cluster.local        /*         demoapp.default
```

4. Access the frontend proxy from the in-cluster client (note: the mesh routing is actually performed by the egress listener)

```bash
$ kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh
If you don't see a command prompt, try pressing enter.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.187! - Took 331 milliseconds.
root@client # curl proxy/canary
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-qsw5z, ServerIP: 10.220.104.166! - Took 40 milliseconds.
# The /backend rule is defined on the demoapp VirtualService, so it only takes effect for
# requests sent to demoapp. A rule applies only to requests addressed to the service it is defined for.
root@client # curl proxy/backend
Proxying value: 404 Not Found Not Found

The requested URL was not found on the server. If you entered the URL manually please check your spelling and try again.

 - Took 41 milliseconds.
```

5. Define the backend redirect rule on the frontend proxy instead, through a mesh-internal VirtualService for proxy (client -> frontend proxy -redirect-> backend)

```yaml
# virtualservice-proxy.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy
spec:
  hosts:
  - proxy
  http:
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:
      uri: /
      authority: backend
      port: 8082
  - name: default
    route:
    - destination:
        host: proxy
```

```bash
$ kubectl apply -f virtualservice-proxy.yaml
virtualservice.networking.istio.io/proxy configured
$ istioctl proxy-config routes proxy-6b567ff76f-27c4k | grep -E "demoapp|backend"
8082   backend, backend.default + 1 more...     /*
8080   demoapp, demoapp.default + 1 more...     /canary*    demoapp.default
8080   demoapp, demoapp.default + 1 more...     /*          demoapp.default
80     demoapp.default.svc.cluster.local        /canary*    demoapp.default
80     demoapp.default.svc.cluster.local        /*          demoapp.default
80     proxy, proxy.default + 1 more...         /backend*   proxy.default
```

6. Access the frontend proxy from the in-cluster client again

```bash
$ kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh
If you don't see a command prompt, try pressing enter.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.187! - Took 331 milliseconds.
root@client # curl proxy/canary
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-qsw5z, ServerIP: 10.220.104.166!
root@client # curl -I proxy/backend
HTTP/1.1 301 Moved Permanently
location: http://backend:8082/
date: Sat, 29 Jan 2022 04:32:08 GMT
server: envoy
transfer-encoding: chunked
```

1.2 Traffic Splitting (Weight-based Routing)

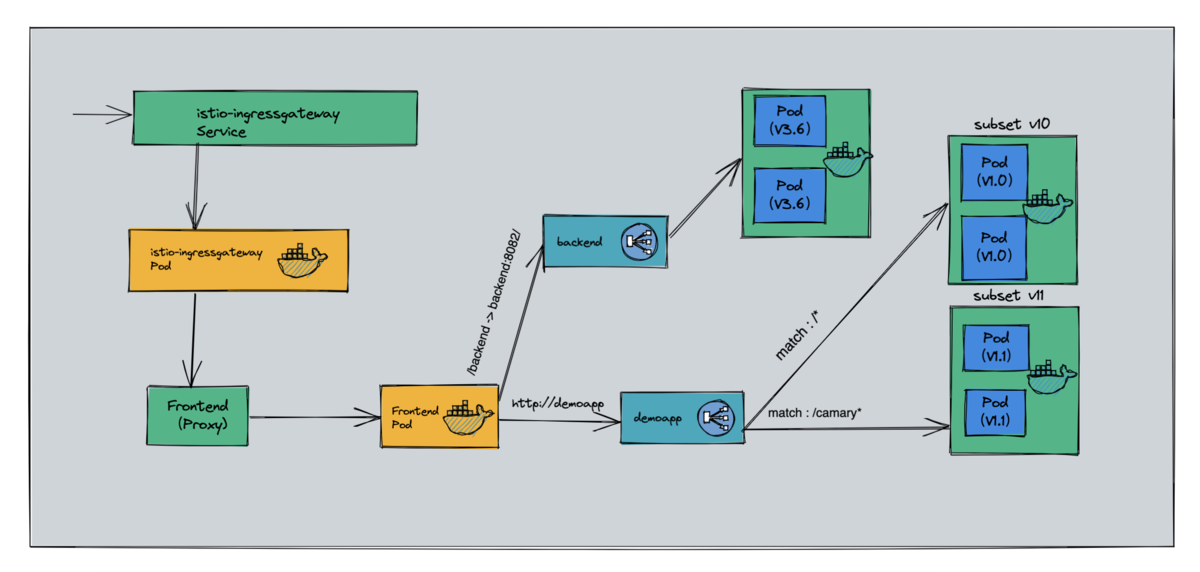

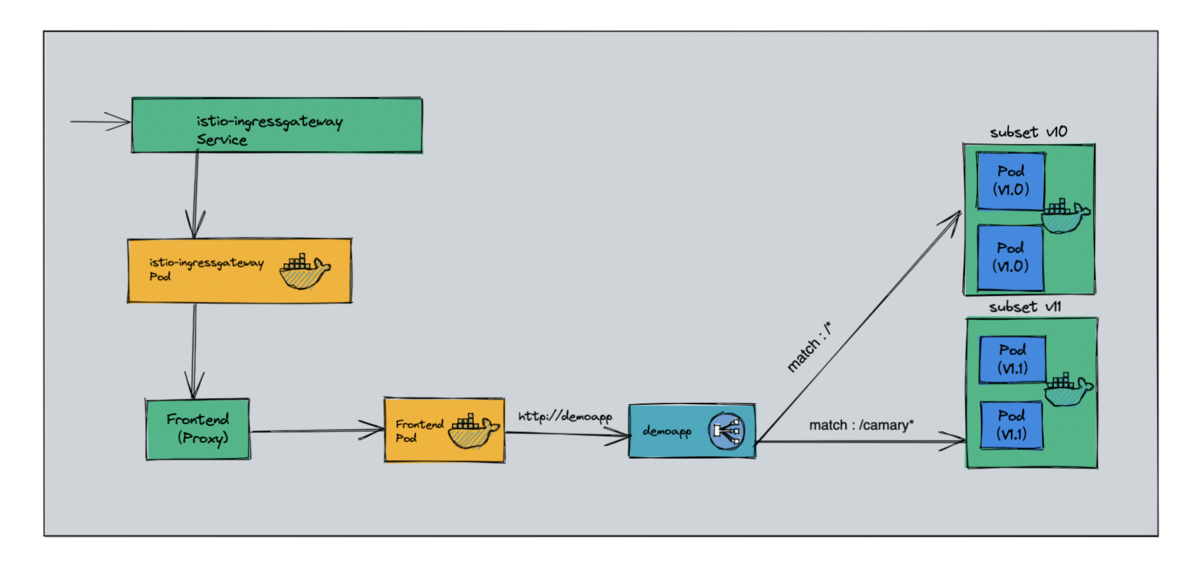

1.2.1 Case Diagram

1.2.2 Lab

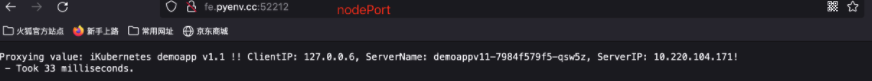

1. Define the VirtualService

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Advanced configuration for the virtual host generated automatically for demoapp;
  # must match a DOMAINS entry shown by istioctl proxy-config routes $DEMOAPP.
  # Applies to requests for the demoapp Service
  hosts:
  - demoapp
  # Layer-7 routing
  http:
  # Routing rules form a list; several can be defined
  # Rule name
  - name: weight-based-routing
    # Route destinations. No match condition is set, so all traffic is
    # split between the two subsets according to their weights
    route:
    - destination:
        host: demoapp
        # Send to the v10 subset of the demoapp cluster
        subset: v10
      weight: 90   # carries 90% of the traffic
    - destination:
        host: demoapp
        # Send to the v11 subset of the demoapp cluster
        subset: v11
      weight: 10   # carries 10% of the traffic
```

```bash
$ kubectl apply -f virtualservice-demoapp.yaml
virtualservice.networking.istio.io/demoapp created
```

2. Check that the VirtualService has taken effect

```bash
$ DEMOAPP=$(kubectl get pods -l app=demoapp -o jsonpath='{.items[0].metadata.name}')
$ echo $DEMOAPP
demoappv10-5c497c6f7c-24dk4
$ istioctl proxy-config routes $DEMOAPP | grep demoapp
80     demoapp.default.svc.cluster.local      /*   demoapp.default
8080   demoapp, demoapp.default + 1 more...   /*   demoapp.default
```

3. Access the frontend proxy from the in-cluster client (note: the mesh routing is actually performed by the egress listener)

```bash
# Expect roughly a 9:1 ratio
$ kubectl run client --image=registry.cn-wulanchabu.aliyuncs.com/daizhe/admin-box -it --rm --restart=Never --command -- /bin/sh
If you don't see a command prompt, try pressing enter.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9bzmv, ServerIP: 10.220.104.177! - Took 230 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155! - Took 38 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ks5hk, ServerIP: 10.220.104.133! - Took 22 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.150! - Took 22 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155! - Took 15 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ks5hk, ServerIP: 10.220.104.133! - Took 6 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-fdwf4, ServerIP: 10.220.104.150! - Took 13 milliseconds.
root@client # curl proxy
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-24dk4, ServerIP: 10.220.104.155! - Took 4 milliseconds.
```

4. Access proxy from outside the cluster (9:1)

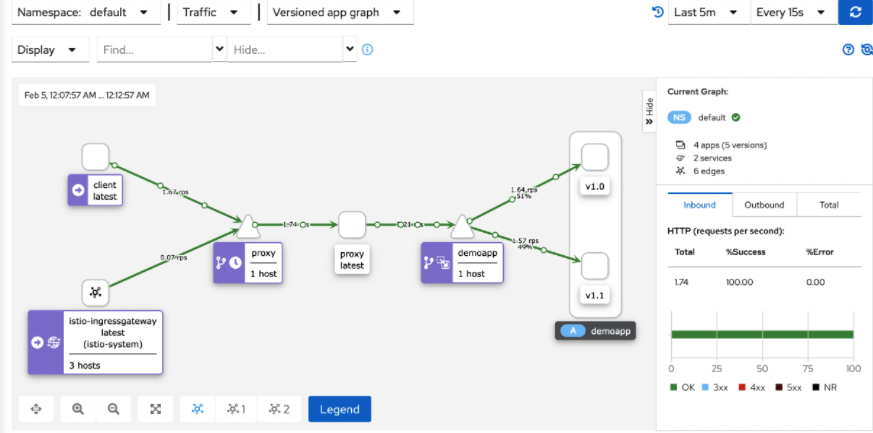

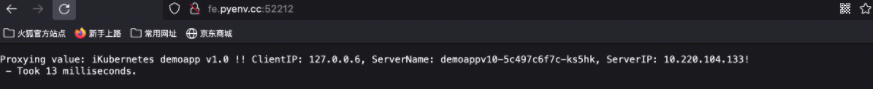

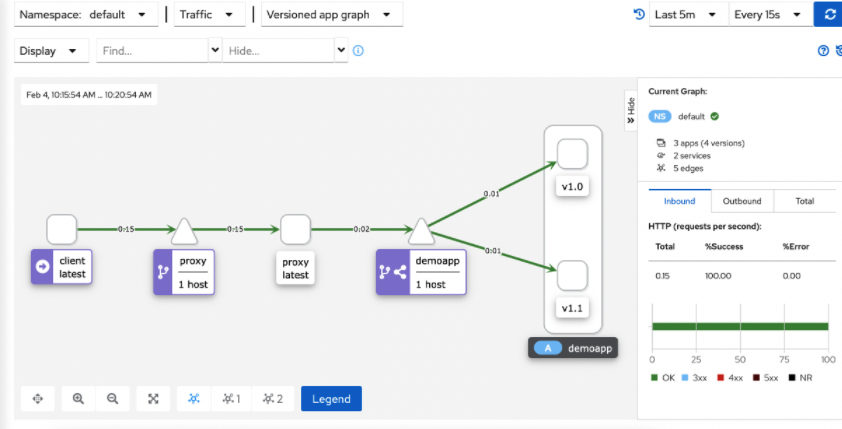

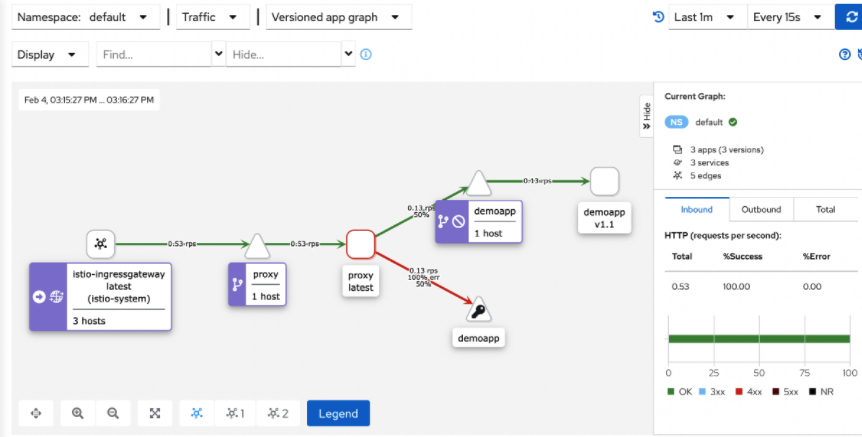

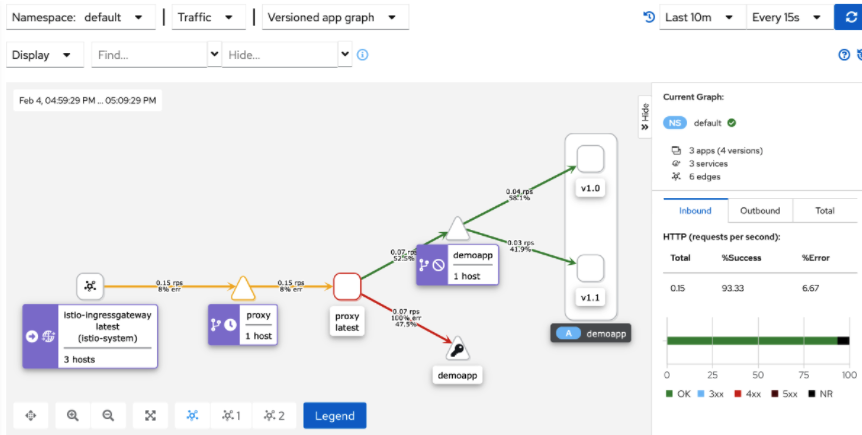

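5. The Kiali graph is drawn in real time from live traffic (with a large enough sample, the traffic ratio approaches 9:1)

Access from inside the cluster

Access from outside the cluster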
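Weighted routing amounts to a weighted random choice made per request. The Python sketch below is a simulation built on the hypothetical helper `pick_subset`, not Envoy's actual load-balancing code. It shows why a handful of requests, such as the eight `curl` calls above, can stray from 9:1 while a large sample converges to it.

```python
import random
from collections import Counter
from typing import Mapping


def pick_subset(weights: Mapping[str, int], rng: random.Random) -> str:
    """Choose one subset with probability weight / total weight."""
    total = sum(weights.values())
    point = rng.randrange(total)      # uniform integer in [0, total)
    cumulative = 0
    for subset, weight in weights.items():
        cumulative += weight
        if point < cumulative:        # point falls inside this subset's slice
            return subset
    raise ValueError("weights must be positive integers")


rng = random.Random(42)               # fixed seed so the run is repeatable
weights = {"v10": 90, "v11": 10}      # the weights from the VirtualService above
small = Counter(pick_subset(weights, rng) for _ in range(10))
large = Counter(pick_subset(weights, rng) for _ in range(10_000))
share_v10 = large["v10"] / 10_000
print("10 requests:", dict(small))
print("10000 requests, v10 share:", share_v10)
```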

1.3 Headers Operation (Manipulating Request and Response Headers)

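- `headers` gives Istio a way to manipulate HTTP headers in either the request or the response.
  - The `headers` field supports two nested fields, `request` and `response`:
    - `request`: manipulates headers in the request sent to the destination;
    - `response`: manipulates headers in the response sent back to the client.
  - Both are of type `HeaderOperations` and support three fields for manipulating the specified headers:
    - `set`: overwrites the corresponding request or response header with the key and value from the map;
    - `add`: appends the key and value from the map to the existing headers;
    - `remove`: deletes the headers named in the list.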
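The semantics of `set`, `add` and `remove` can be modeled on a plain list of header pairs. This Python sketch is a toy model, not Envoy's implementation. The helper name `apply_header_ops` is hypothetical, and so is the order in which the three operations are applied (remove, then set, then add). Repeated header names are kept as separate entries.

```python
from typing import Iterable, List, Mapping, Optional, Tuple

Headers = List[Tuple[str, str]]


def apply_header_ops(headers: Headers,
                     set_: Optional[Mapping[str, str]] = None,
                     add: Optional[Mapping[str, str]] = None,
                     remove: Iterable[str] = ()) -> Headers:
    """Return a new header list after HeaderOperations-style edits.

    Header names compare case-insensitively, as in HTTP.
    """
    set_ = dict(set_ or {})
    add = dict(add or {})
    dropped = {name.lower() for name in remove} | {name.lower() for name in set_}
    # remove, plus the first half of set: drop every existing value for those names
    result = [(k, v) for k, v in headers if k.lower() not in dropped]
    # set: exactly one value per name afterwards
    result.extend(set_.items())
    # add: append, keeping any values already present under the same name
    result.extend(add.items())
    return result


# canary rule in the lab below: request header set, response header added
request = apply_header_ops([("User-Agent", "curl/7.67.0"), ("x-canary", "true")],
                           set_={"User-Agent": "Chrome"})
response = apply_header_ops([("server", "envoy")], add={"x-canary": "true"})
print(request)   # [('x-canary', 'true'), ('User-Agent', 'Chrome')]
print(response)  # [('server', 'envoy'), ('x-canary', 'true')]
```

With `set`, the client's original `User-Agent` is gone, which is consistent with the `User-Agent: Chrome` that demoapp reports in the lab. With `add`, an existing header of the same name would survive next to the new one.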

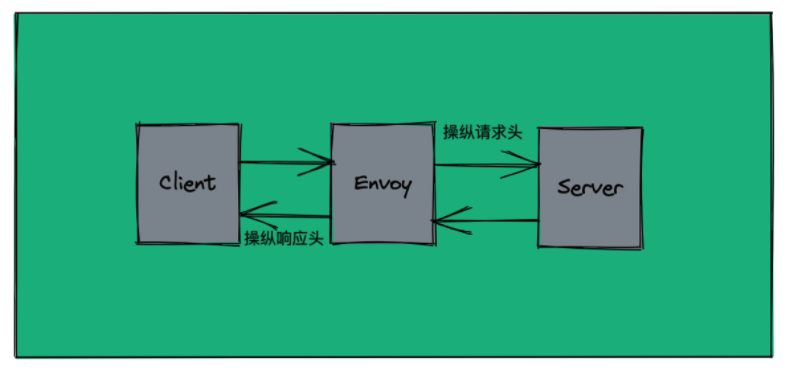

1.3.1 Case Diagram

- Request flow:
  - Client -> SidecarProxy (Envoy) -> ServerPod

1.3.2 Lab

1. Define a VirtualService that manipulates the request and response headers for the demoapp Service

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Hosts: requests for the demoapp Service
  hosts:
  - demoapp
  # Layer-7 (HTTP) routing
  http:
  # Routing rules form a list; several can be defined
  # Rule name
  - name: canary
    # Match conditions
    match:
    # Match on request headers: requests carrying x-canary: true go to subset v11,
    # their User-Agent is set to Chrome, and x-canary: true is added to the response
    - headers:
        x-canary:
          exact: "true"
    # Route destination
    route:
    - destination:
        host: demoapp
        subset: v11
      # Header operations
      headers:
        # Request headers
        request:
          # Overwrite the header value
          set:
            User-Agent: Chrome
        # Response headers
        response:
          # Add a new header
          add:
            x-canary: "true"
  # Default rule for requests that did not match the rule above; sent to subset v10
  - name: default
    # Header operations defined at this level apply to every destination of the default rule
    headers:
      # Response headers
      response:
        # Add X-Envoy: test to the response sent downstream
        add:
          X-Envoy: test
    # Route destination
    route:
    - destination:
        host: demoapp
        subset: v10
```

```bash
$ kubectl apply -f virtualservice-demoapp.yaml
virtualservice.networking.istio.io/demoapp configured
```

2. To verify the header operations, send requests from the client directly to demoapp

```bash
# Satisfy the header match: requests with x-canary: true are routed to demoapp subset v11
root@client /# curl -H "x-canary: true" demoapp:8080
iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159!
root@client /# curl -H "x-canary: true" demoapp:8080
iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164!
# Inspect the response headers
root@client /# curl -I -H "x-canary: true" demoapp:8080
HTTP/1.1 200 OK
content-type: text/html; charset=utf-8
content-length: 116
server: envoy
date: Fri, 04 Feb 2022 05:49:44 GMT
x-envoy-upstream-service-time: 2
x-canary: true
# Check the User-Agent the server received after the request header was rewritten
root@client /# curl -H "x-canary: true" demoapp:8080/user-agent
User-Agent: Chrome
---
# Requests that hit the default rule (demoapp subset v10)
# Unmodified User-Agent
root@client /# curl demoapp:8080/user-agent
User-Agent: curl/7.67.0
# The response sent downstream carries the added X-Envoy: test header
root@client /# curl -I demoapp:8080/user-agent
HTTP/1.1 200 OK
content-type: text/html; charset=utf-8
content-length: 24
server: envoy
date: Fri, 04 Feb 2022 05:53:09 GMT
x-envoy-upstream-service-time: 18
x-envoy: test
```

1.4 Fault Injection (Testing Service Resilience)

1.4.1 Case Diagram

1.4.2 Lab

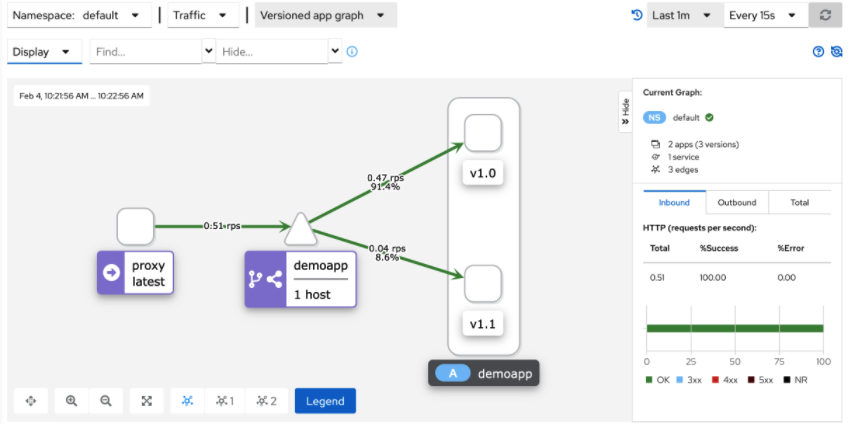

1. Use the VirtualService to inject faults into demoapp

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
    # Fault injection
    fault:
      # Fault type: abort
      abort:
        # Share of traffic to inject the fault into
        percentage:
          # Inject into 20% of the traffic
          value: 20
        # Status code returned for injected faults
        httpStatus: 555
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
    # Fault injection
    fault:
      # Fault type: delay
      delay:
        # Share of traffic to inject the fault into
        percentage:
          # Inject a 3s delay into 20% of the traffic
          value: 20
        fixedDelay: 3s
```

```bash
$ kubectl apply -f virtualservice-demoapp.yaml
virtualservice.networking.istio.io/demoapp configured
```

2. Access proxy from outside the cluster (routed to demoapp subset v10)

```bash
# default rule: delay fault
daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154! - Took 6 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165! - Took 13 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154! - Took 3099 milliseconds.
# With enough requests, about 20% of them show the extra 3s delay
```

3. From the in-cluster client, access the frontend proxy, routed to demoapp subset v11 (note: the mesh routing is actually performed by the egress listener)

```bash
# canary rule: abort fault
root@client /# while true; do curl proxy/canary; sleep 0.$RANDOM; done
Proxying value: fault filter abort - Took 1 milliseconds.
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164! - Took 3 milliseconds.
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159! - Took 4 milliseconds.
Proxying value: fault filter abort - Took 6 milliseconds.
# With enough requests, about 20% are aborted by the fault filter with status 555
# (the frontend proxy relays the "fault filter abort" body)
Proxying value: fault filter abort - Took 2 milliseconds.
```

4. The Kiali graph is drawn in real time from live traffic (fault ratio 20%)

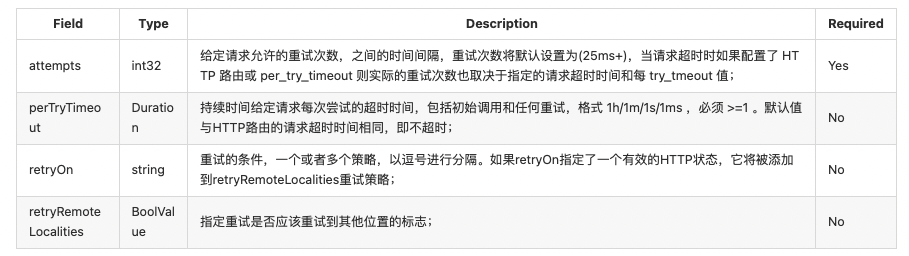
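Each fault rule is an independent per-request draw against `percentage.value`. The Python sketch below uses the hypothetical helpers `hit`, `canary` and `default`. It is a simulation, not Envoy's fault filter. It reproduces the 20% rates configured above and shows that they only emerge over many requests.

```python
import random


def hit(rng: random.Random, percentage: float) -> bool:
    """One independent draw per request: True with probability percentage / 100."""
    return rng.random() * 100 < percentage


def canary(rng: random.Random) -> int:
    # abort: 20% of requests get HTTP 555 and are never forwarded to demoapp v11
    return 555 if hit(rng, 20) else 200


def default(rng: random.Random) -> float:
    # delay: 20% of requests wait an extra 3s before being forwarded to demoapp v10
    return 3.0 if hit(rng, 20) else 0.0


rng = random.Random(7)    # fixed seed so the run is repeatable
n = 10_000
aborted = sum(canary(rng) == 555 for _ in range(n)) / n
delayed = sum(default(rng) > 0 for _ in range(n)) / n
print(f"aborted={aborted:.3f} delayed={delayed:.3f}")  # both should be close to 0.200
```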

1.5 HTTP Retry (Timeouts and Retries)

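- Retry-related configuration parameters
  - HTTP request retry conditions (route.RetryPolicy)
    - Retry conditions (same as the x-envoy-retry-on header)
      - 5xx: retry if the upstream host responds with any 5xx status code, or does not respond at all (disconnect/reset/read timeout);
      - gateway-error: similar to the 5xx policy, but retries only requests that result in a 502, 503, or 504;
      - connect-failure: retry if establishing the TCP connection to the upstream service fails (for example, a connect timeout);
      - retriable-4xx: retry if the upstream server responds with a retriable 4xx status code (currently only 409);
      - refused-stream: retry if the upstream service resets the stream with the REFUSED_STREAM error code;
      - retriable-status-codes: retry if the upstream response code matches a code defined in the retry policy or in the x-envoy-retriable-status-codes header;
      - reset: retry if the upstream host does not respond at all (disconnect/reset/read timeout);
      - retriable-headers: retry if the upstream response contains any header that matches the retry policy or is listed in the x-envoy-retriable-header-names header;
      - envoy-ratelimited: retry if the x-envoy-ratelimited header is present.
    - Retry conditions 2 (same as the x-envoy-retry-grpc-on header)
      - cancelled: retry if the gRPC status code in the response headers is "cancelled";
      - deadline-exceeded: retry if the gRPC status code in the response headers is "deadline-exceeded";
      - internal: retry if the gRPC status code in the response headers is "internal";
      - resource-exhausted: retry if the gRPC status code in the response headers is "resource-exhausted";
      - unavailable: retry if the gRPC status code in the response headers is "unavailable".
    - By default, Envoy performs no retries of any kind unless they are explicitly configured. Istio itself, however, defaults to retrying HTTP requests twice before returning the error, so sidecars are not retry-free out of the box.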
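The status-code conditions in the list above can be expressed as a small predicate. This is a simplified Python sketch, not Envoy's retry state machine. The `should_retry` helper is hypothetical, and it models only the status-based conditions plus "no response at all"; connect-failure, refused-stream, header-based and gRPC conditions are left out.

```python
from typing import AbstractSet, Optional

RETRIABLE_4XX = {409}  # Envoy documents 409 as the only "retriable 4xx" code


def should_retry(retry_on: str, status: Optional[int],
                 retriable_codes: AbstractSet[int] = frozenset()) -> bool:
    """Decide whether one failed try is retried under an Envoy-style retryOn list.

    status=None models "no response at all" (disconnect/reset/read timeout).
    """
    conditions = {c.strip() for c in retry_on.split(",") if c.strip()}
    if status is None:
        return bool(conditions & {"5xx", "reset"})
    if "5xx" in conditions and 500 <= status <= 599:
        return True
    if "gateway-error" in conditions and status in (502, 503, 504):
        return True
    if "retriable-4xx" in conditions and status in RETRIABLE_4XX:
        return True
    if "retriable-status-codes" in conditions and status in retriable_codes:
        return True
    return False  # nothing configured matches: no retry


policy = "5xx,connect-failure,refused-stream"  # the retryOn value used in the lab below
print(should_retry(policy, 555))           # True: 555 falls in the 5xx range
print(should_retry("gateway-error", 555))  # False: only 502/503/504 count
```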

1. Raise the fault-injection percentage for demoapp to 50% so the effect of HTTP retries is easier to observe

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
    fault:
      abort:
        percentage:
          value: 50
        httpStatus: 555
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
    fault:
      delay:
        percentage:
          value: 50
        fixedDelay: 3s
```

```bash
$ kubectl apply -f virtualservice-demoapp.yaml
virtualservice.networking.istio.io/demoapp configured
```

2. For requests that enter from outside the cluster through the Gateway, the client that actually calls the demoapp cluster is the frontend proxy, so the fault-tolerance settings are added to the VirtualService for proxy

```yaml
# virtualservice-proxy.yaml: VirtualService for proxy, applied on the Ingress Gateway
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy
spec:
  # hosts determines which virtual host these routes belong to; it must equal,
  # or be covered by, the hosts of the Gateway
  hosts:
  - "fe.toptops.top"               # corresponds to gateways/proxy-gateway
  # Binds this VirtualService to the gateway: gateways specifies that it applies to
  # inbound traffic received by the Ingress Gateway, and names that Gateway
  gateways:
  - istio-system/proxy-gateway     # the rules apply only on the Ingress Gateway
  #- mesh                          # uncomment to apply them to sidecars as well
  # Layer-7 (HTTP) routing
  http:
  # Rule name
  - name: default
    # Route destination
    route:
    - destination:
        # The proxy cluster is generated automatically because a Service with this name
        # exists in the cluster, and the cluster already exists on the ingress gateway.
        # This is the in-cluster Service name, but traffic is not sent to the Service itself;
        # it goes to the cluster built from that Service (L7 traffic here bypasses the Service)
        host: proxy                # proxy is the client that sends requests to demoapp
    # Overall timeout of 1s: if the server takes longer than 1s to respond,
    # the client gets a timeout right away
    timeout: 1s
    # Retry policy
    retries:
      # Number of retries
      attempts: 5
      # Timeout for each try; a try that runs longer than 1s counts as timed out
      perTryTimeout: 1s
      # Retry conditions: 5xx responses from the upstream (as well as connection
      # failures and refused streams) trigger the retry policy
      retryOn: 5xx,connect-failure,refused-stream
```

```bash
$ kubectl apply -f virtualservice-proxy.yaml
virtualservice.networking.istio.io/proxy configured
```

3. Test by accessing proxy from outside the cluster

```bash
# External requests to proxy are routed to demoapp subset v10. The default rule injects a 3s delay
# into 50% of requests; any request taking longer than 1s returns "upstream request timeout".
# Because timeout and perTryTimeout are both 1s, a delayed request uses up the whole timeout
# on its first try, leaving no time for a retry.
daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done
upstream request timeout
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-qv9ws, ServerIP: 10.220.104.160! - Took 3 milliseconds.
upstream request timeout
upstream request timeout
upstream request timeout
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165! - Took 4 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154! - Took 32 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154! - Took 4 milliseconds.

# External requests to proxy/canary are routed to demoapp subset v11. The canary rule aborts 50% of
# requests with status 555, which falls under the gateway's 5xx retry condition (up to 5 retries).
# Note that "fault filter abort" still appears often below. This suggests the frontend proxy passes
# these failures back to the gateway without a 5xx status, in which case the gateway does not retry them.
daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212/canary; sleep 0.$RANDOM; done
Proxying value: fault filter abort - Took 1 milliseconds.
Proxying value: fault filter abort - Took 2 milliseconds.
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-9pm74, ServerIP: 10.220.104.164! - Took 6 milliseconds.
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159! - Took 6 milliseconds.
Proxying value: fault filter abort - Took 1 milliseconds.
Proxying value: fault filter abort - Took 2 milliseconds.
Proxying value: iKubernetes demoapp v1.1 !! ClientIP: 127.0.0.6, ServerName: demoappv11-7984f579f5-msn2w, ServerIP: 10.220.104.159! - Took 4 milliseconds.
```

4. The Kiali graph is drawn in real time from live traffic
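The interplay of `timeout`, `perTryTimeout` and `attempts` can be replayed deterministically. The Python sketch below uses the hypothetical helper `call_with_retries` and is a model, not Envoy's router. It rests on three assumptions:

- a try is retried when it returns 5xx or hits the per-try timeout;
- there is no back-off between tries;
- `attempts` counts retries on top of the original try.

```python
from typing import List, Tuple


def call_with_retries(outcomes: List[Tuple[float, int]], attempts: int,
                      per_try_timeout: float, timeout: float) -> Tuple[str, float]:
    """Replay one pre-decided (latency_s, status) outcome per try under a retry policy.

    Returns (final_result, elapsed_s).
    """
    elapsed = 0.0
    result = "no attempt made"
    for latency, status in outcomes[: attempts + 1]:  # original try + `attempts` retries
        remaining = timeout - elapsed                 # what is left of the overall timeout
        if remaining <= 0:
            break
        waited = min(latency, per_try_timeout, remaining)
        elapsed += waited
        if latency > waited:                          # this try was cut off
            if waited == remaining:                   # overall timeout: give up at once
                return "504 upstream request timeout", elapsed
            result = "504 per-try timeout"            # per-try timeout: retry
            continue
        result = str(status)
        if 500 <= status <= 599:                      # retryOn 5xx: try again
            continue
        return result, elapsed
    return result, elapsed


def all_tries_fail(p_fail: float, attempts: int) -> float:
    """Chance that the original try and every retry fail, assuming independent tries."""
    return p_fail ** (attempts + 1)


# Lab settings: a 3s delayed try against timeout = perTryTimeout = 1s
print(call_with_retries([(3.0, 200)] * 6, attempts=5, per_try_timeout=1.0, timeout=1.0))
# ('504 upstream request timeout', 1.0)
# Two aborted tries followed by a success
print(call_with_retries([(0.0, 555), (0.0, 555), (0.0, 200)], attempts=5,
                        per_try_timeout=1.0, timeout=1.0))
# ('200', 0.0)
print(all_tries_fail(0.5, 5))  # 0.015625
```

In this model, a per-try timeout shorter than the overall timeout is what leaves room for a second try after a slow first one. If 555 responses really reached the gateway, six independent tries at 50% would all fail only about 1 time in 64.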

1.6 HTTP Traffic Mirroring

1. Update the demoapp routing policy to add traffic mirroring

```yaml
# virtualservice-demoapp.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp
spec:
  # Hosts: requests for the demoapp Service
  hosts:
  - demoapp
  # Layer-7 (HTTP) routing
  http:
  - name: traffic-mirror
    # Route destination: all live traffic goes to demoapp subset v10
    route:
    - destination:
        host: demoapp
        subset: v10
    # A copy of every request is mirrored to demoapp subset v11
    mirror:
      host: demoapp
      subset: v11
```

```bash
$ kubectl apply -f virtualservice-demoapp.yaml
virtualservice.networking.istio.io/demoapp configured
```

2. From the in-cluster client, access the frontend proxy; requests are routed to demoapp subset v10 and a copy of each is mirrored to subset v11 (note: the mesh routing is actually performed by the egress listener)

```bash
root@client /# while true; do curl proxy; sleep 0.$RANDOM; done
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165! - Took 15 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-7fklt, ServerIP: 10.220.104.154! - Took 8 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-qv9ws, ServerIP: 10.220.104.160! - Took 8 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165! - Took 3 milliseconds.
Proxying value: iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.6, ServerName: demoappv10-5c497c6f7c-ncnn6, ServerIP: 10.220.104.165! - Took 8 milliseconds.
# Access proxy from outside the cluster
daizhe@daizhedeMacBook-Pro ~ % while true; do curl fe.toptops.top:52212; sleep 0.$RANDOM; done
# Only v1.0 responses come back; check the mirrored traffic in Kiali, below
```

3. The Kiali graph is drawn in real time from live traffic
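Mirroring is fire-and-forget: the caller only ever sees the primary response. This Python sketch uses the hypothetical `mirror_call` helper. It runs the shadow call synchronously, whereas the sidecar does not wait for the mirror. It also models Istio appending `-shadow` to the Host of mirrored requests, which makes the copies distinguishable on the receiving side. The `demoapp_v11` failure is invented purely for illustration.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Handler = Callable[[Request], str]


def mirror_call(request: Request, primary: Handler, shadow: Handler,
                shadow_log: List[Request]) -> str:
    """Serve the request from primary; send a copy to shadow and ignore its outcome."""
    copy = dict(request)
    copy["host"] = request["host"] + "-shadow"  # Istio appends -shadow to the mirrored Host
    shadow_log.append(copy)
    try:
        shadow(copy)            # fire-and-forget: the shadow response is discarded
    except Exception:
        pass                    # a failing mirror target must not affect the caller
    return primary(request)     # only the primary response reaches the client


def demoapp_v10(req: Request) -> str:
    return "iKubernetes demoapp v1.0"


def demoapp_v11(req: Request) -> str:
    raise RuntimeError("hypothetical bug in v11")  # stands in for a broken new version


shadow_log: List[Request] = []
response = mirror_call({"host": "demoapp", "path": "/"}, demoapp_v10, demoapp_v11, shadow_log)
print(response)               # iKubernetes demoapp v1.0
print(shadow_log[0]["host"])  # demoapp-shadow
```

This is why the `curl` loops above only ever print v1.0: even a broken v11 stays invisible to clients, and its traffic shows up only in telemetry such as Kiali.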