China Telecom Big Data Offline Data Warehouse

文章目录

- 电信数据仓库

- ```第一章```

-

- 项目研发流程

- 项目简介

- 业务总则

-

- 信息域概述

- 市场运营域(BSS 域)

- 企业管理域(MSS 域)

- 网络运营域(OSS 域)

- 通用数据仓库分层

- 总体架构

- 数据仓库

- 权限划分

- 数据仓库数据架构

- 分层命名规范

- ```第二章```

- 旅游大数据整体框架及处理流程

-

- 一、整体流程图

- 二、参数字段

-

- 1.数据源

-

- 1.1 OIDD数据

- 1.2 WCDR数据

- 1.3 移动DPI数据

- 1.4 移动DDR数据

- 2.集成和存储层

- 2.1 融合文件

-

- 2.1.1 位置表

- 2.1.2 区域表

- 2.2 停留点

- 2.3 职住地

-

- 2.3.1居住追踪地

- 2.3.2工作追踪地

- 2.3.3当前居住地表

- 2.3.4历史居住地表

- 2.3.5当前工作地表

- 2.3.6历史工作地表

- 2.3.7网格标签表

- 2.3.8旅游专题职住表

- 2.4 跨域

-

- 2.4.1最新城市跨域表

- 2.4.2历史城市跨域表

- 2.4.3最新区县跨域表

- 2.4.4历史区县跨域表

- 2.4.5城市OD表

- 2.4.6区县OD表

- 2.5 准实时

-

- 2.5.1准实时位置字段表

- 2.5.1准实时区域字段表

- 3.分析层

-

- 3.1省市目的地游客分析专题逻辑表-市级别

- 3.2省市目的地游客分析专题逻辑表-省级别

- 3.3区县级别目的地游客分析专题逻辑表

- 3.4景区目的地游客分析专题逻辑表

- 3.5出行基础表

- 3.6乡村游出游表

- 3.7游客旅游历史信息表

- 3.8用户画像表

- 3.9用户标签表

- 4.应用层

-

- 4.1实时查询指标

- 4.2景区指标

- 4.3行政区指标

- ```第三章```

- 1、数据仓库搭建

-

- 1.1、开启hdfs的权限认证,以及ACL认证

-

- 修改hdfs-site.xml文件,将权限认证打开

- 解决hive tmp目录权限不够问题

-

- 重启hadoop

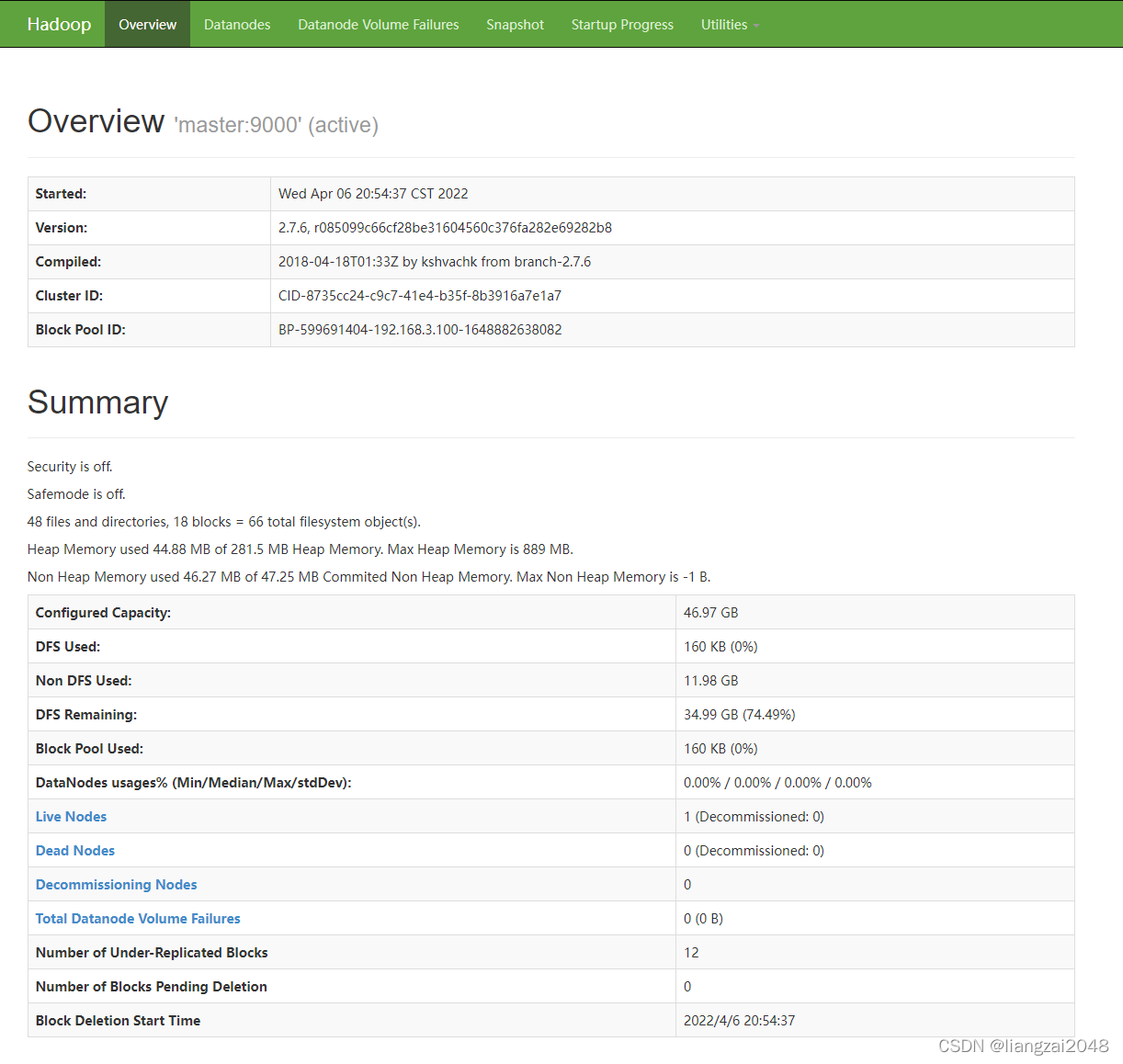

- 验证http://master:50070是否能访问

- 1.2、为每一个层创建一个用户

-

- 增加权限的命令

- 1.3、为每一个层创建一个hive的库

- 1.4、为每一个层在hdfs中创建一个目录

- 1.5、将本地hive目录的权限设置为777

- 2、数据采集 -- 使用ods用户操作

-

- 2.1 oidd数据采集

-

- 1、在hive中创建表

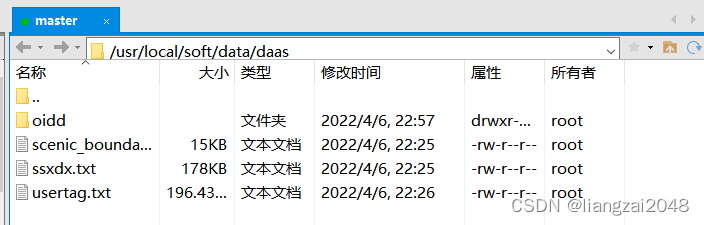

- 2、将数据上传到服务器

- 3、使用flume监控基站日志目录采集数据

- 4、启动flume

- 5、查看结果

- 6、增加分区

- 2.2、采集crm系统中的数据

-

- 1、在mysql中创建crm数据库

- 2、创建表

- 3、先在hive的ods层中创建表

- 4、使用datax 采集数据

- ``第四章```

- 位置融合表

-

- 新建IDEA的Maven项目

-

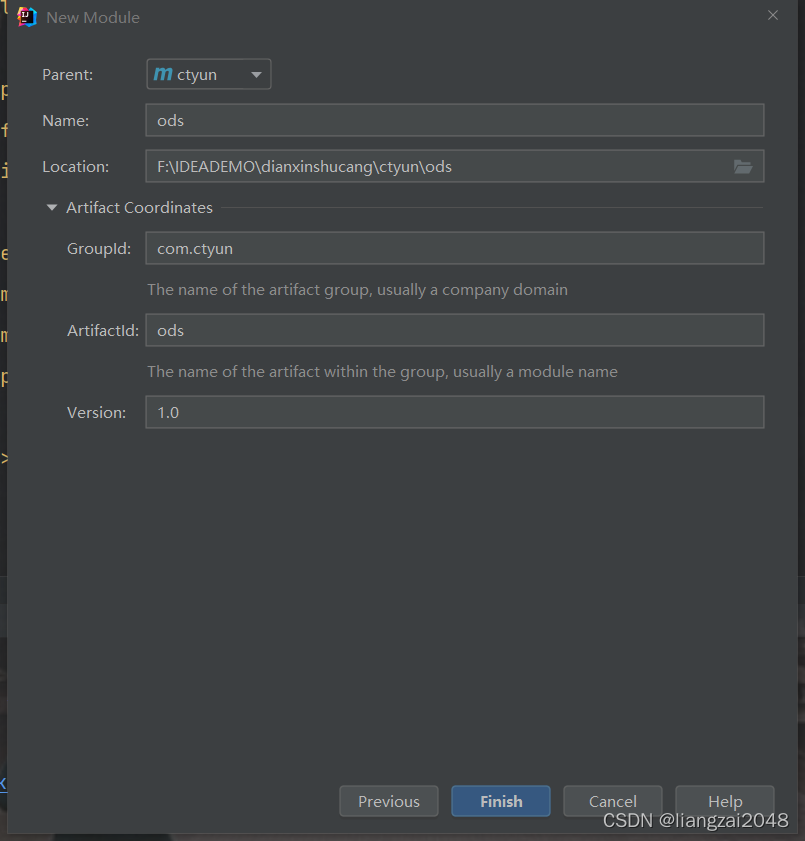

- 新建ods层

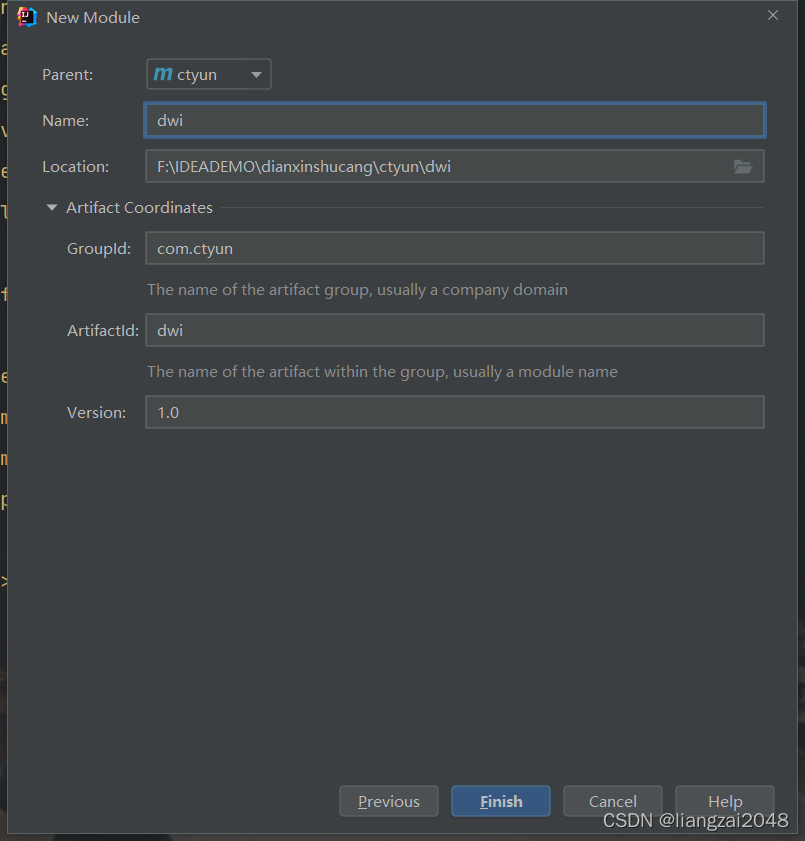

- 新建dwi层

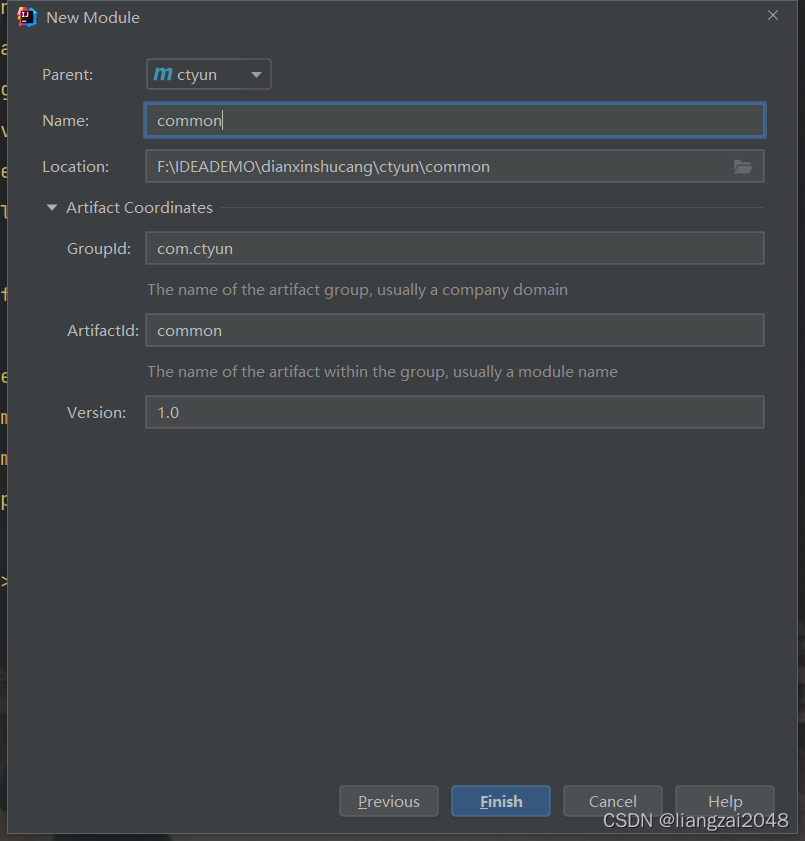

- 新建common公共模块

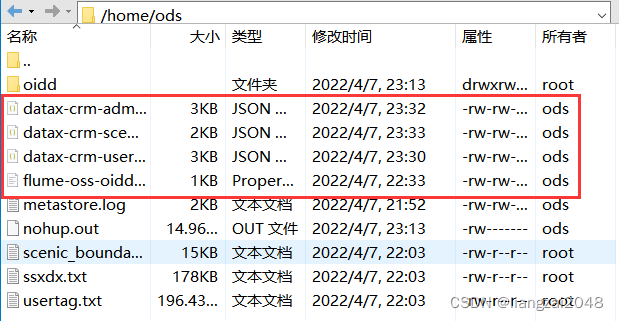

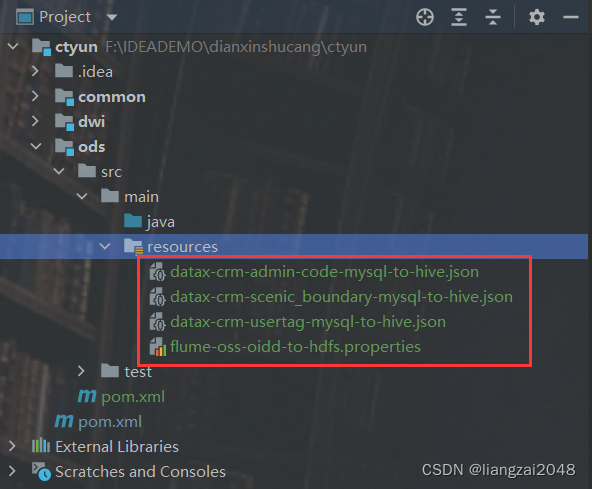

- 将ods本地脚本上传到ods的resources目录下

- 在外部 pom文件导入spark依赖(和公司spark版本一致)

- 在dwi层导入ctyun的scala依赖

- 在dwi层创建DwiResRegnMergelocationMskDay类

-

- 导入隐式转换

- 计算两个经纬度之间的距离

-

- 在common中创建Geography的java类

- dwi层引入 common层的依赖

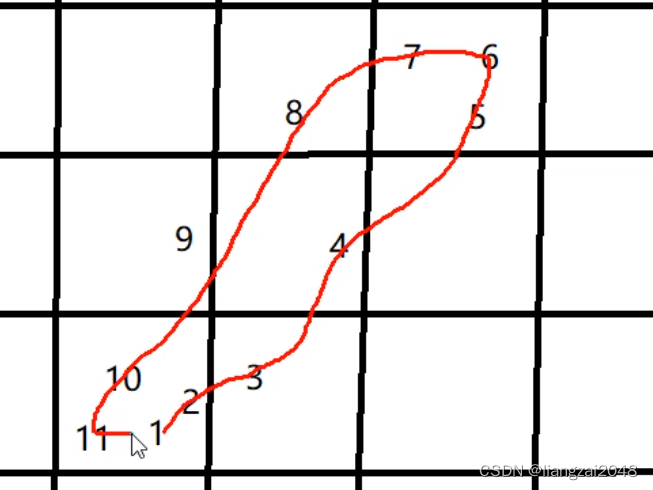

- 在时间线上做聚类操作

-

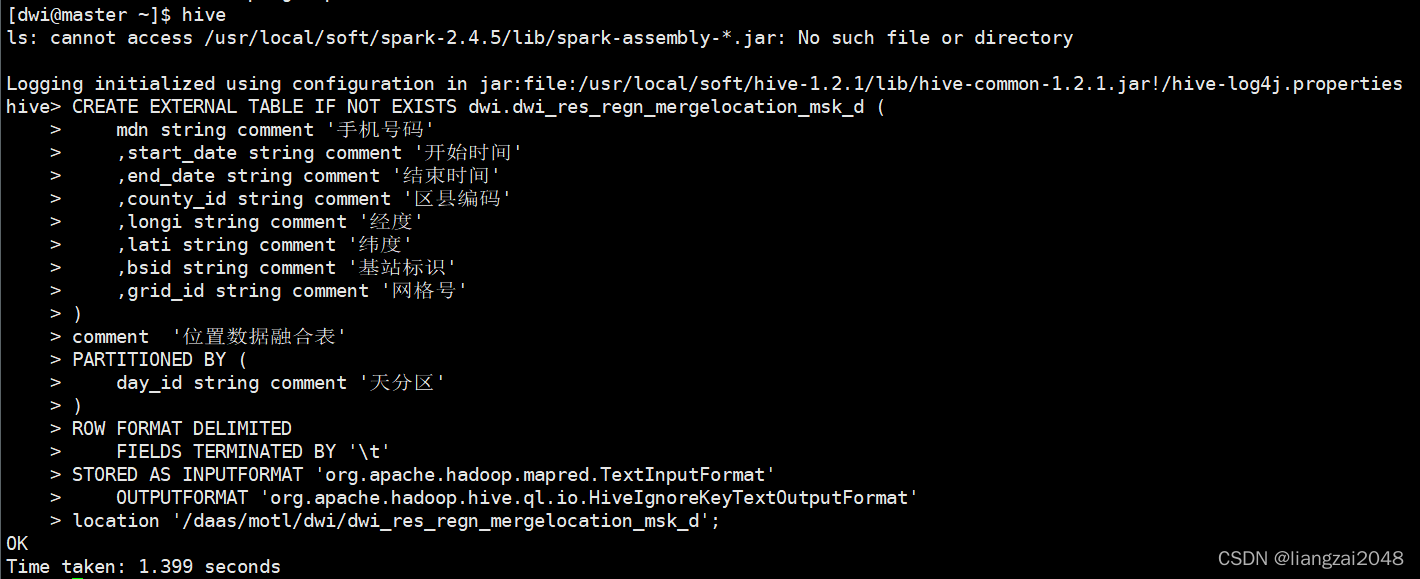

- 在root的hive建表merge用于测试

- 时间聚类测试数据

- 上传数据

- 通过 spark-sql进行测试

- 超速处理

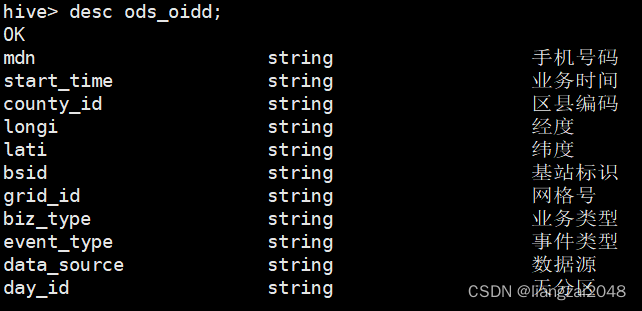

- 查看ods_oidd是否有读权限

-

- 删除之前运行结果

- 位置数据融合表

- 编写DwiResRegnMergelocationMskDay代码

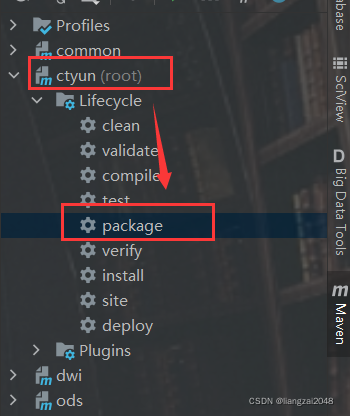

- 打包、上传、运行(dwi)

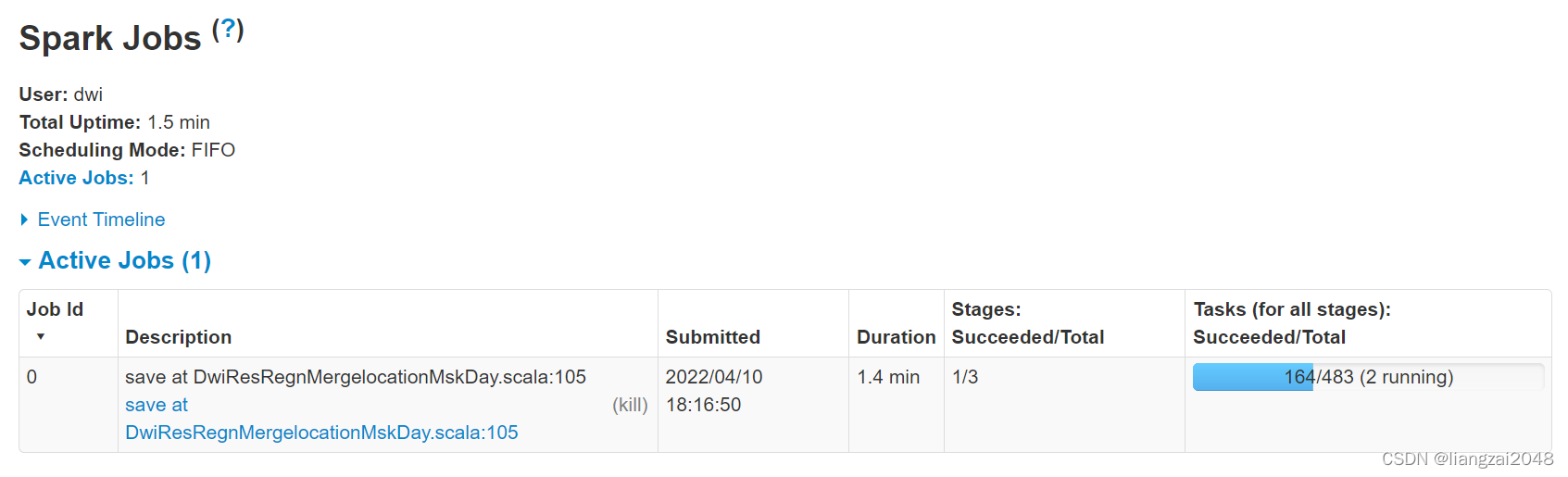

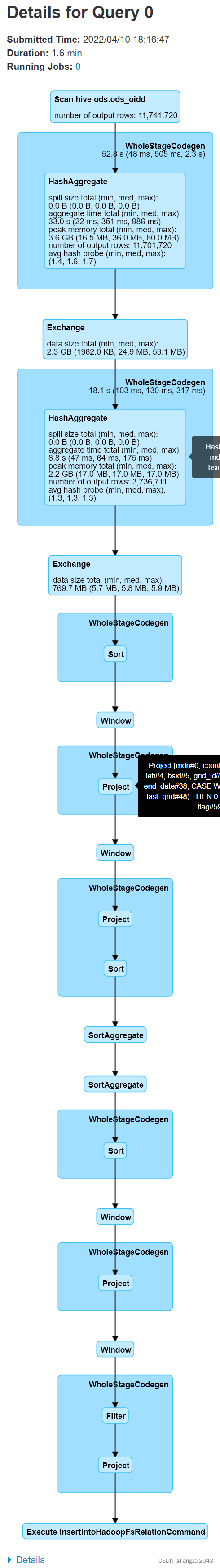

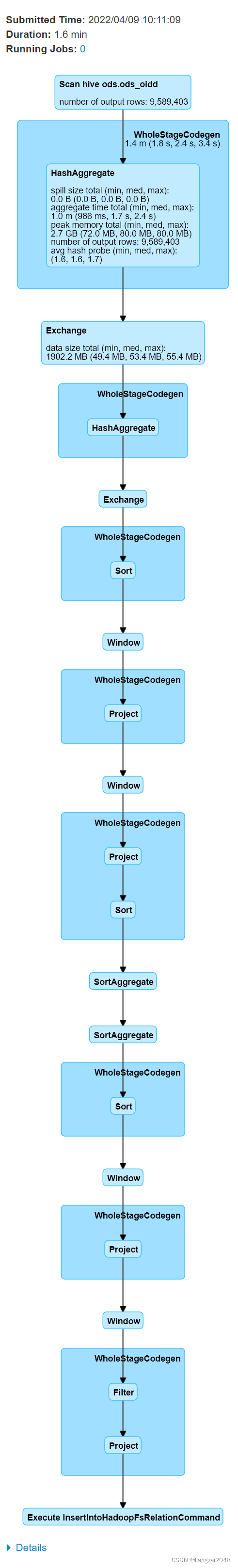

- 访问master:4040和master:8088的web ui 查看运行结果

- 在dwi登陆hive查看运行结果

- 对手机号敏感数据进行加密

- ```第四章```

-

- 用户画像表

-

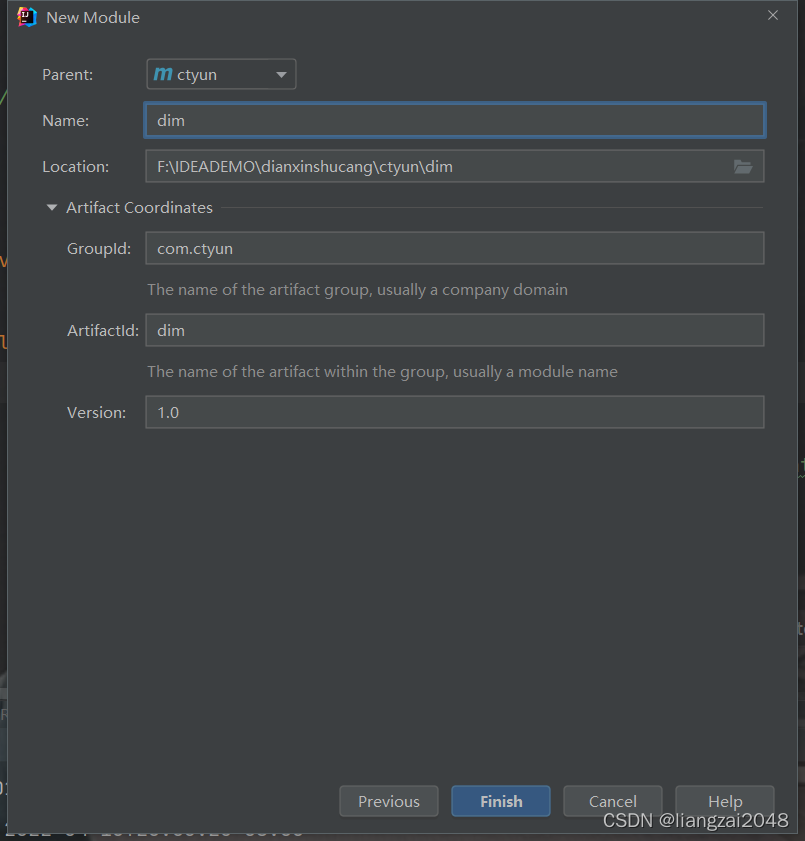

- 创建dim层

- 添加依赖

- 新建包

- 新建DimUserTagDay类

- 登录ods的hive查看用户画像表 是否存在

-

- hive表如何导入本地目录里

- 封装工具

- 在common里增加spark依赖

- 新建SparkTool的class工具类并编写

-

- 测试

-

- 新建Test的object类

- 编写电信数仓的SparkTool封装工具

- 使用SparkTool的位置融合表代码

- 修改ods的权限查看数据

- 编写运行script脚本

-

- 在dim层新建dim-usertag-day.sh脚本并编写

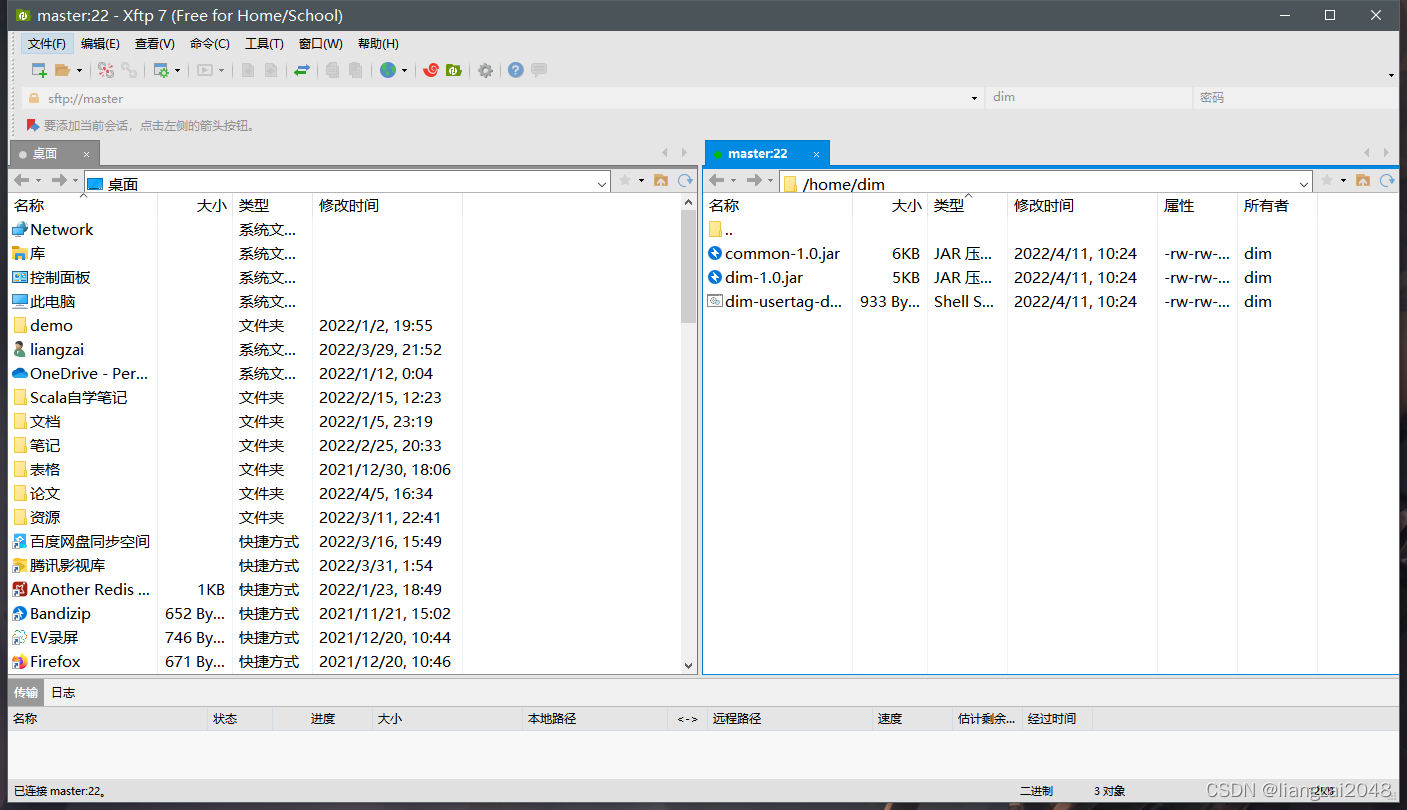

- 打包上传到dim用户

- 运行用户画像表脚本

- 查看用户画像表运行结果

- 在dwi层新建dwi-res-regn-mergelocation-msk-day.sh脚本并编写

- 运行位置融合表脚本

- 在dim层的hive创建行政区配置表

- 在dim层新建dim-admincode.sh脚本并编写

- 运行dim-admincode.sh脚本

- 查看dim-admincode.sh脚本运行结果

- 在dim层 创建景区配置表

- 在dim层新建dim-scenic-boundary.sh脚本并编写

- 上传运行dim-scenic-boundary.sh脚本

- 查看dim-scenic-boundary.sh脚本运行结果

- 编写ods层脚本

- 编写datax-crm-admin-code-mysql-to-hive.sh脚本

- 编写datax-crm-scenic_boundary-mysql-to-hive.sh脚本

- 编写datax-crm-usertag-mysql-to-hive.sh脚本

- 编写flume-oss-oidd-to-hdfs.sh脚本

- ```第五章```

- 时空伴随着

-

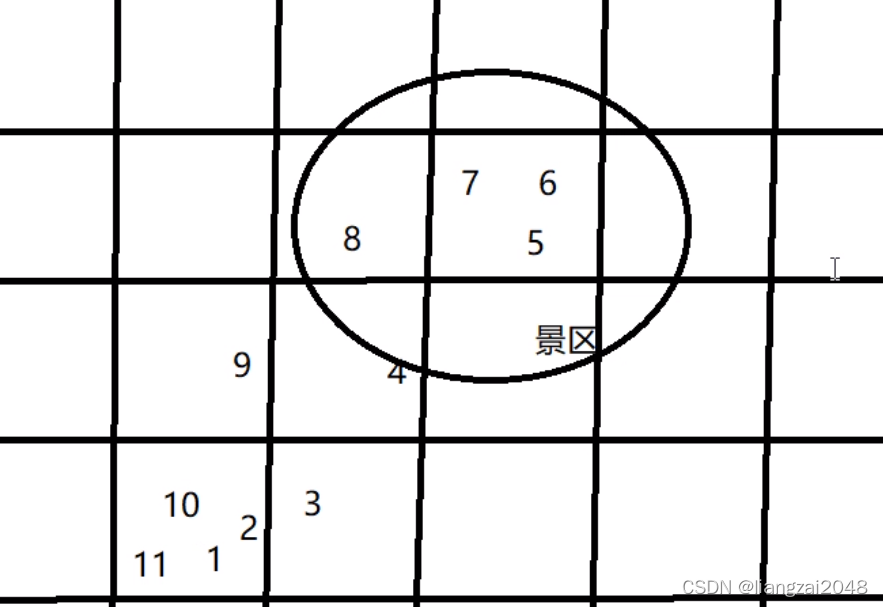

- 判断一个人是否是景区的游客

-

- 在MySQL中创建intimate表(疫情密接者)

- 向intimate表插入数据

-

- 进入dwi层的hive

- 查询到的手机号复制到visual Studio code进行处理

- 在MySQL中创建confirmed表(疫情确诊)

- 向confirmed表插入数据

- 时空伴随着计算

-

- 新建dws模块

- 新建com.ctyun.dws包

- 导入试试park依赖

- 新建SpacetimeAdjoint类

-

- 在common新建DateUtil并编辑

- 代码优化

- 编辑SpacetimeAdjoint类(已优化)

- 在dws层 编写spacetime-adjoint.sh脚本

- 在dwi层增加权限

- 上传包并运行spacetime-adjoint.sh脚本

- 查看spacetime-adjoint.sh脚本运行结果

- 再次优化

-

- 在SparkTool里新增代码块

- 优化后的SpacetimeAdjoint代码

- 打包上传并运行spacetime-adjoint.sh脚本

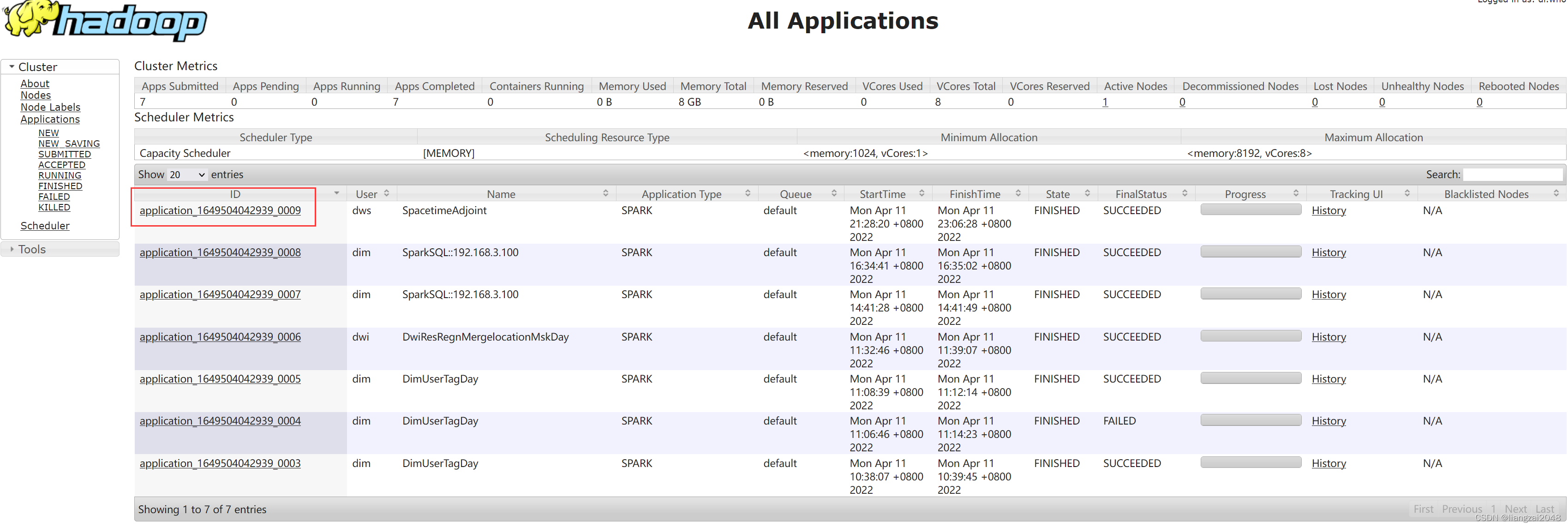

- 访问mater:8088和master:4040查看运行 状态

- 查看优化后的运行结果

- ```第六章```

- Azkaban定时调度

-

- 在root安装搭建Azkaban

-

- 1、上传解压

- 2、修改配置文件

- 3、启动azkaban

- 4、访问azkaban

- 5、配置邮箱服务

- 6、重启 、关闭、启动

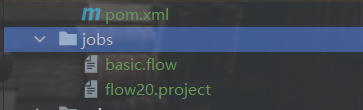

- 在IDEA中ctyun目录下创建jobs目录

-

- 编写basic.flow

- 市游客表

-

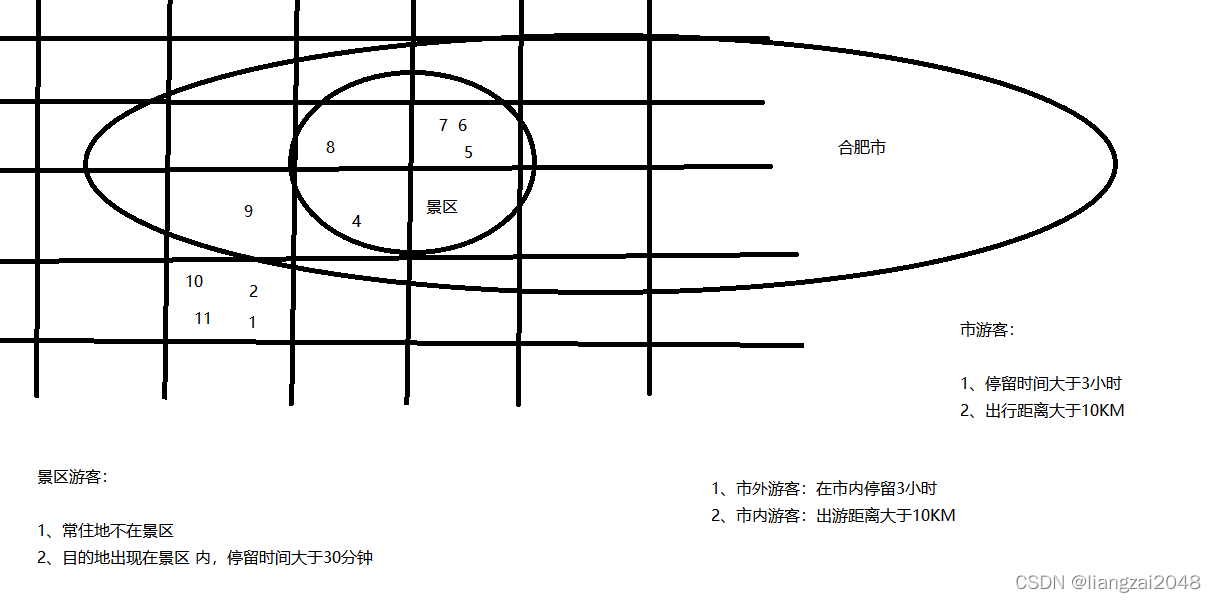

- 判断一个人是否是景区的游客

-

- 在dws层创建dws_city_tourist_msk_d游客表

- 新建并编写DwsCityTouristMskDay类

- 在SparkTool里新增

- 在Geography里新增

- 在common新建Grid并编写

- 在common新建GridLevel.java并编写

- 在common中新建GISUtil.java并编写

- 在 common新建 entity目录并编写Const.java、Gps.java、GridEntity.java

- 在common创建Constants.java并编写

- 在dws层打包上传并运行

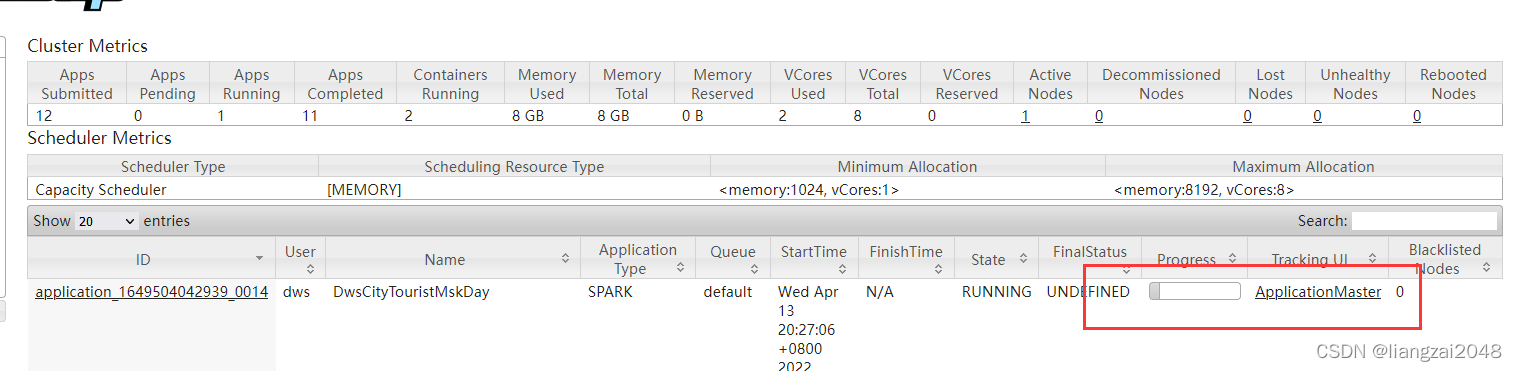

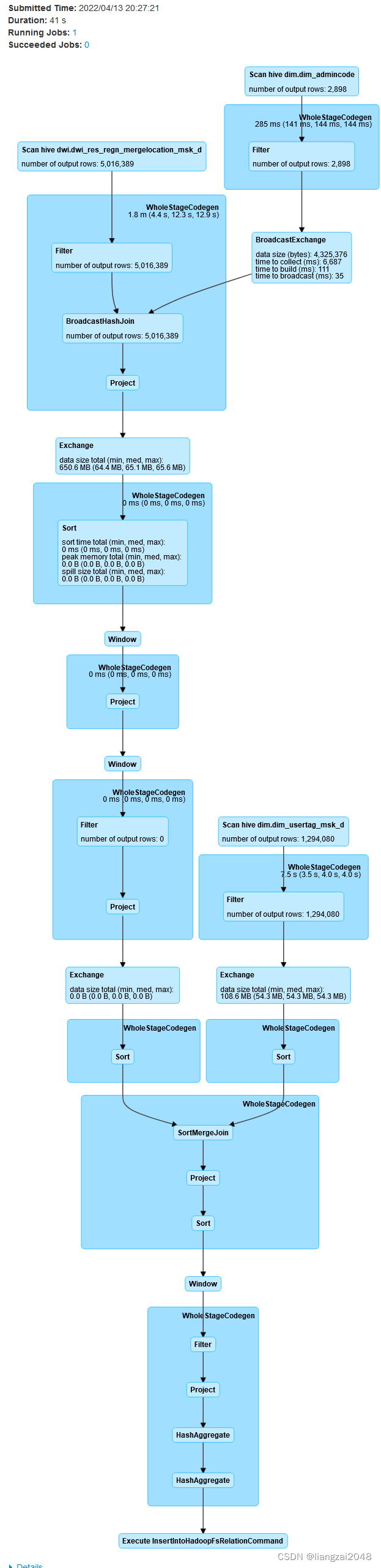

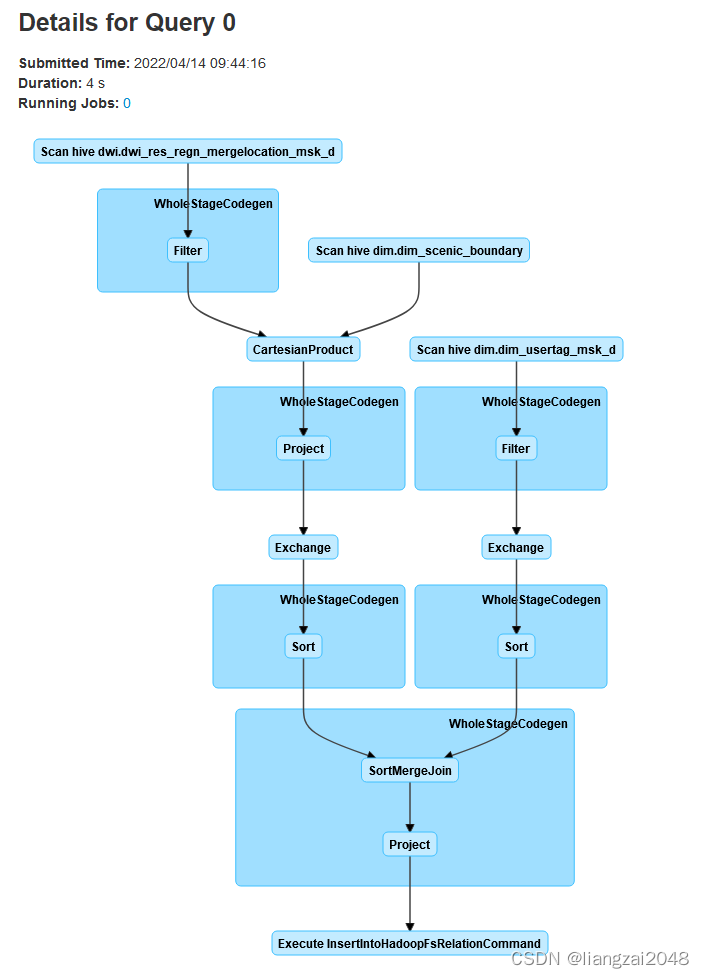

- 访问master:8088查看运行状态

- 在dws层查看运行结果

- 在jobs目录下编写Azkaban的脚本(新增)

- 景区游客表

-

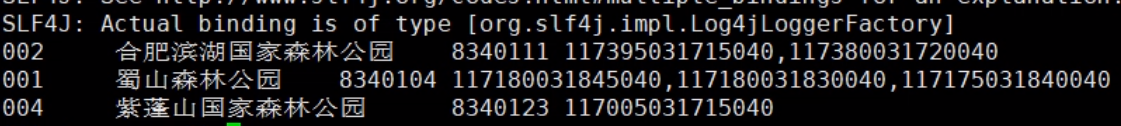

- 从百度地图上拿下合肥景区数据放到ctyun目录下命名为a.json

- 在dws层的hive新建dws_scenic_tourist_msk_d表

- 在dws层新建DwsScenicTouristMskDay.scala类并编写

- 在common下创建poly并编写Circle.java、 CommonUtil.java、DataFormatUtil.java、Line.java、StringUtil.java、Polygon.java

- 编写dws-scenic-tourist-msk-day.sh脚本

- 在dim层修改权限

- 打包上传运行脚本

- 访问mater:8088查看运行状态

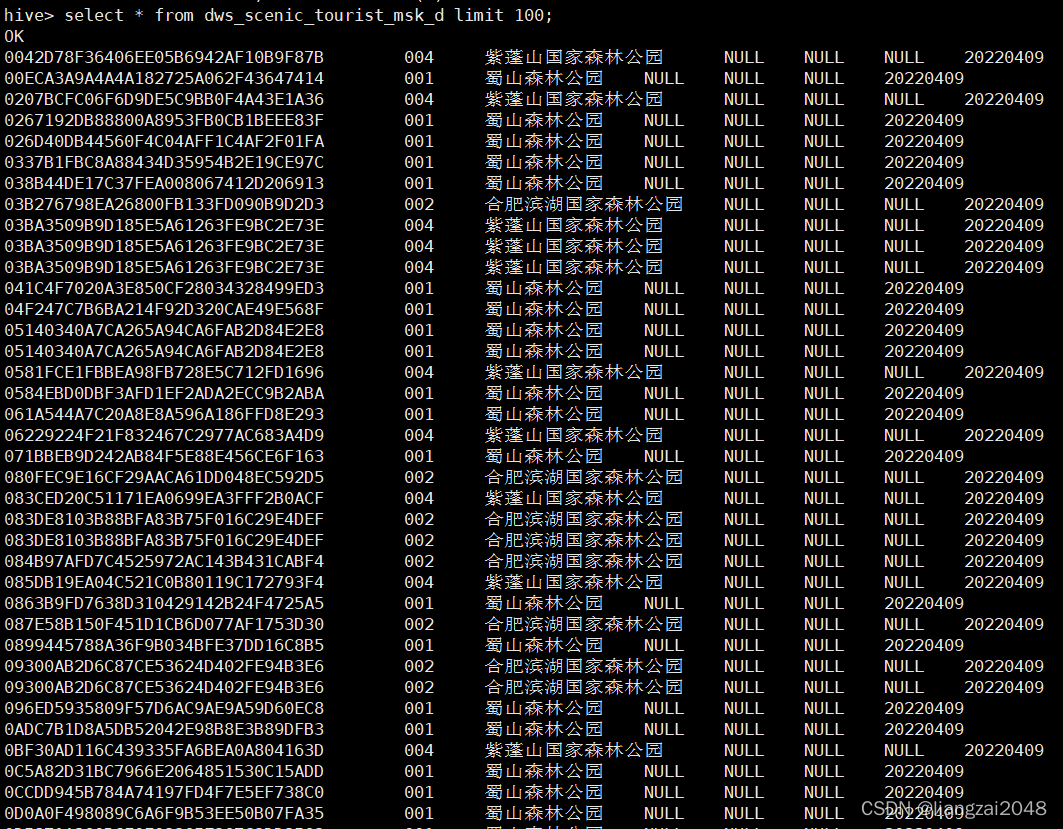

- 在dws的hive查看运行结果

- 构建景区网格表

-

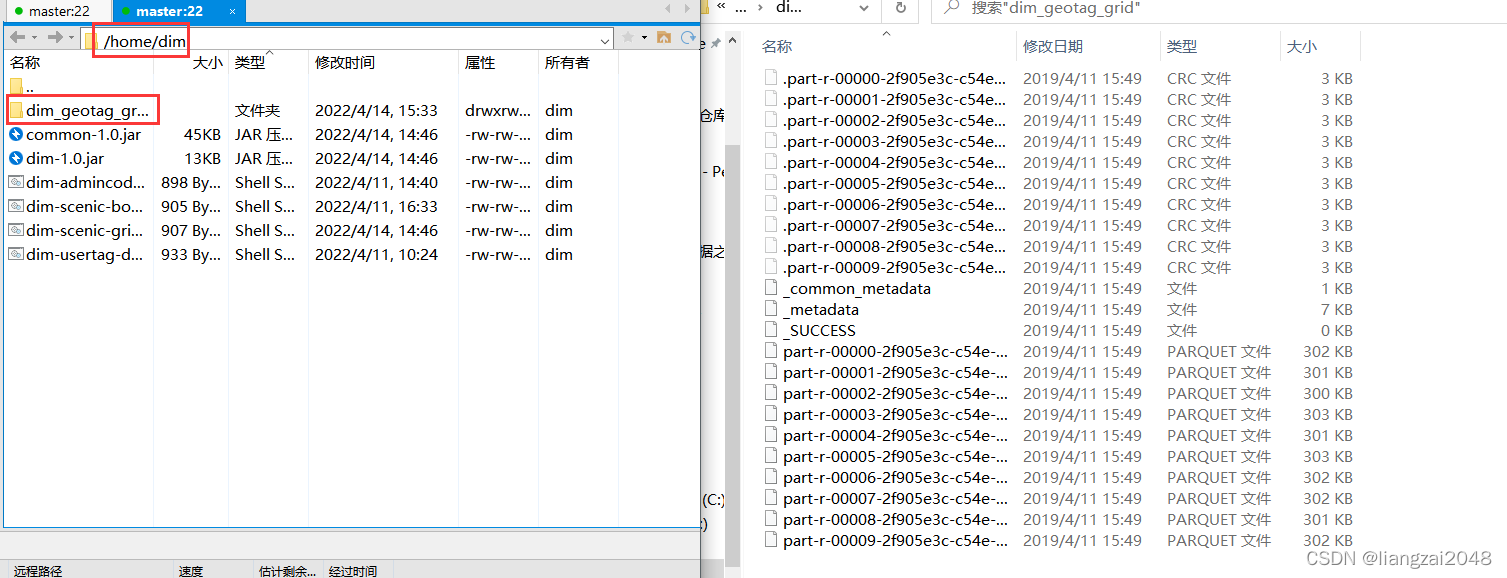

- 上传dim_scenic_grid数据

- 在dim层创建dim.dim_scenic_grid表

- 在dim层创建dim.dim_geotag_grid表

- 在dim层创建DimScenicGrid.scala类并编写

- 在dim层创建DimScenicGrid.scala脚本并编写

- 在dim层打包上传并运行

- 查看运行结果

- ```第七章```

- 指标统计

-

- 统计游客指标

-

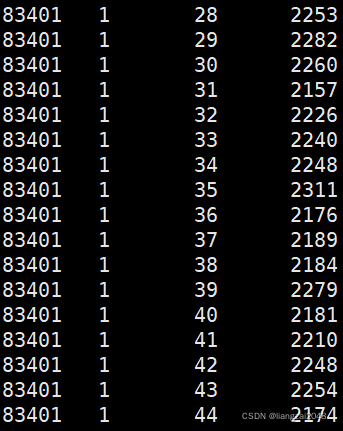

- 1、客流量按天 [市,客流量]

- 2、性别按天 [市,性别,客流量]

- 3、年龄按天 [市,年龄,客流量]

- 4、性别年龄按天 [市,性别,年龄,客流量]

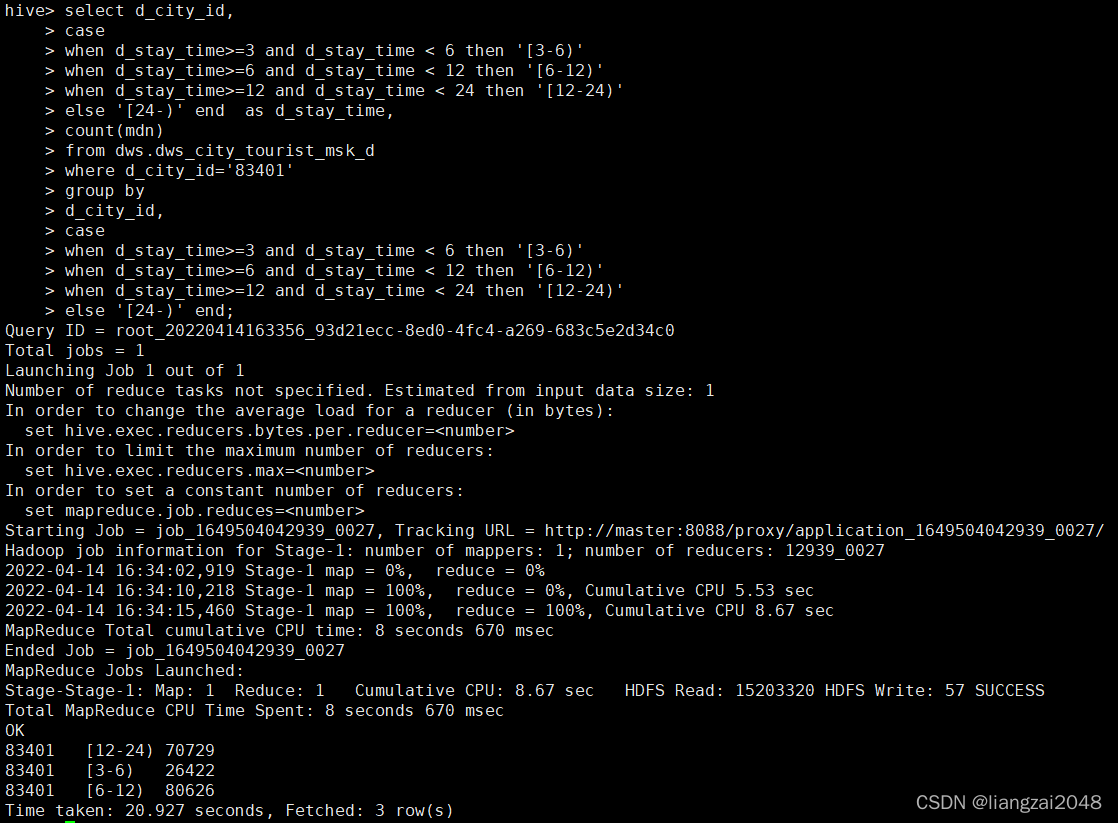

- 5、停留时长按天 [市,停留时长,客流量]

- 宽表

-

- 在IDEA的ctyun目录下新dal模块

-

- 导入spark的maven依赖

- 在dal层创建DalCityTouristMskWideDay.scala类并编写

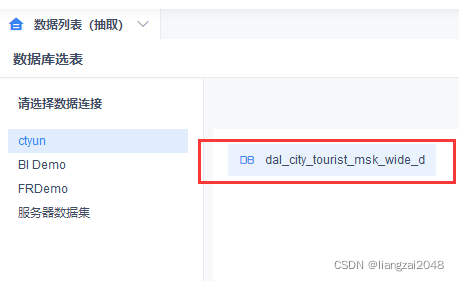

- 在dal用户的hive创建 dal_city_tourist_msk_wide_d表

- 通过dws用户给dal增加权限

- 通过dim用户给dal增加权限

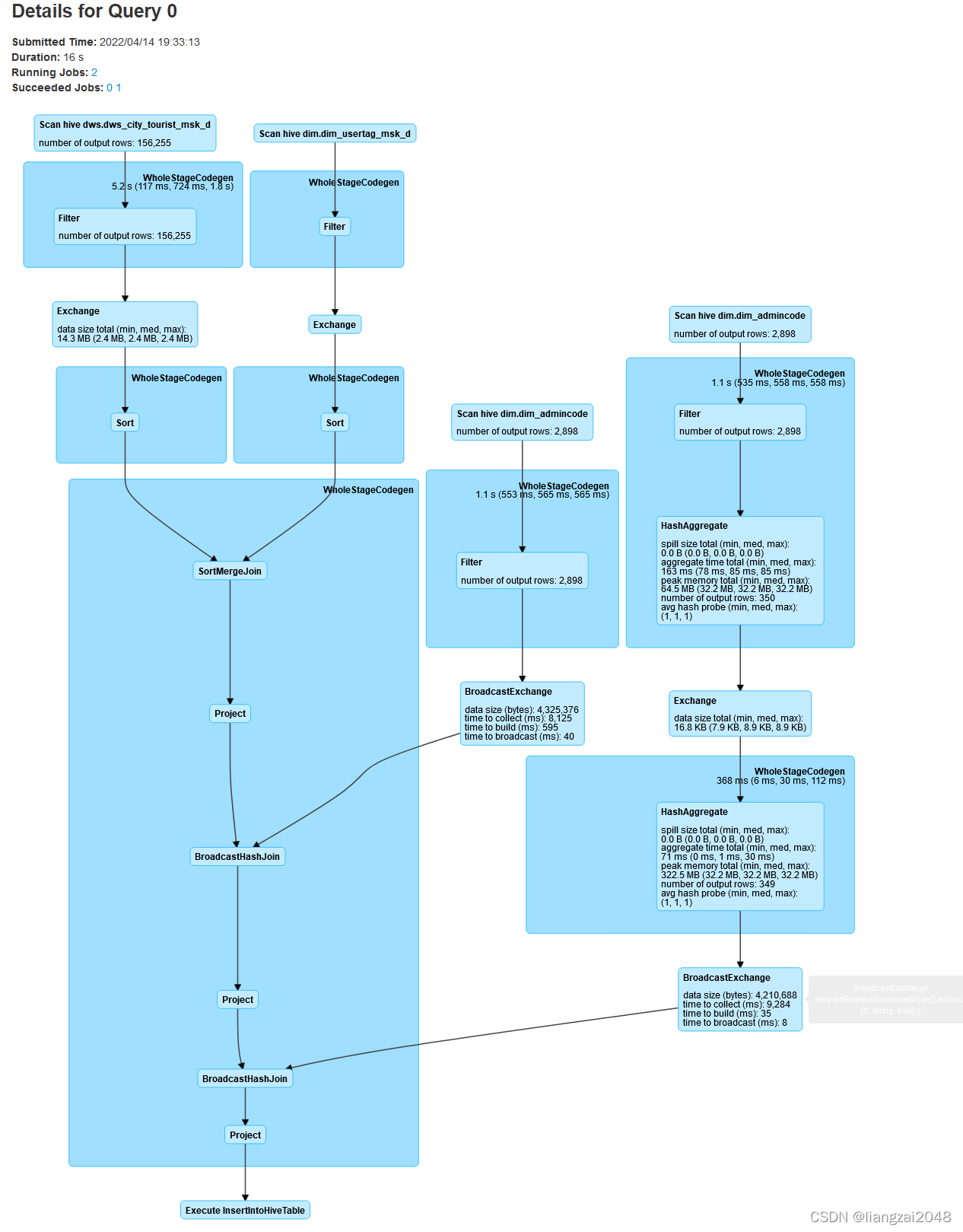

- 通过dal用户使用spark-sql查看sql是否能运行

- 在dal层 编写dal-city-tourist-msk-wide-day.sh脚本

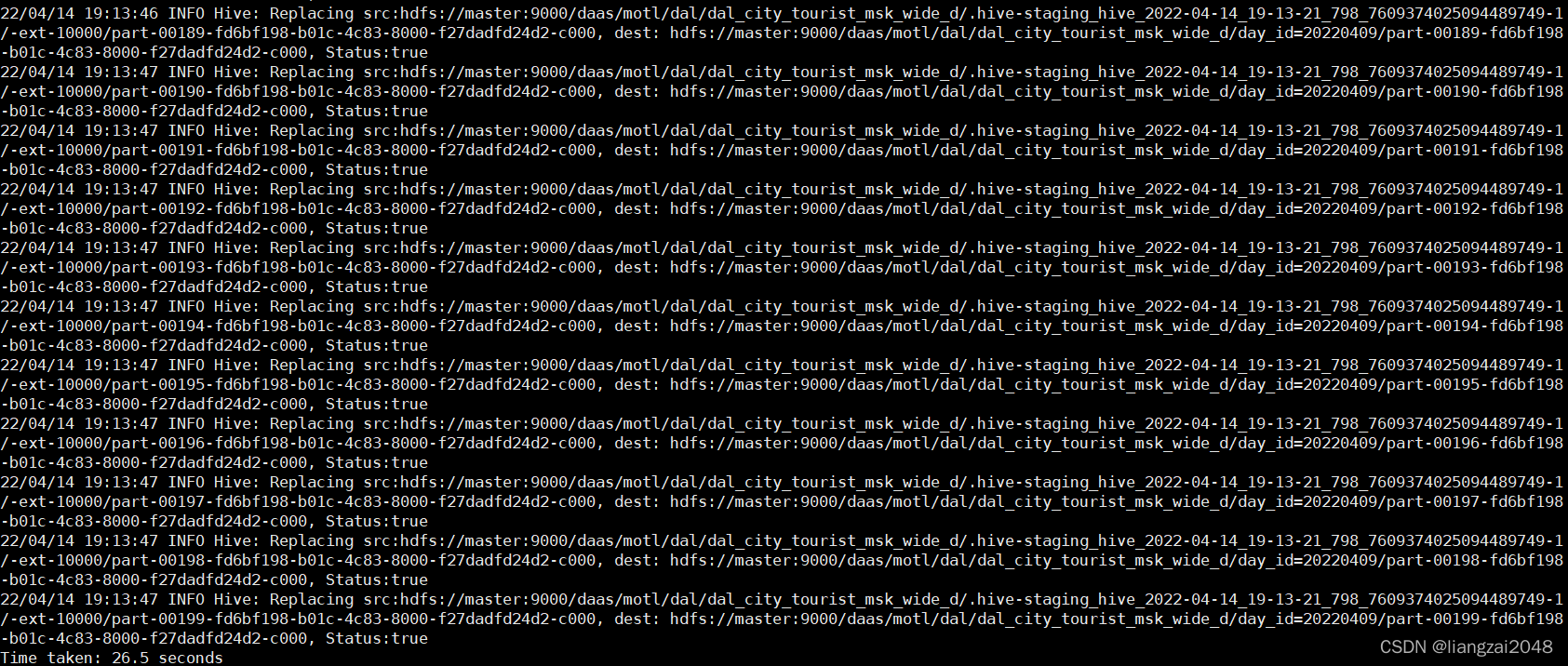

- 打包上传并运行dal-city-tourist-msk-wide-day.sh脚本

- 访问master:8088查看运行情况

- 查看dal-city-tourist-msk-wide-day.sh脚本运行结果

- ```第八章```

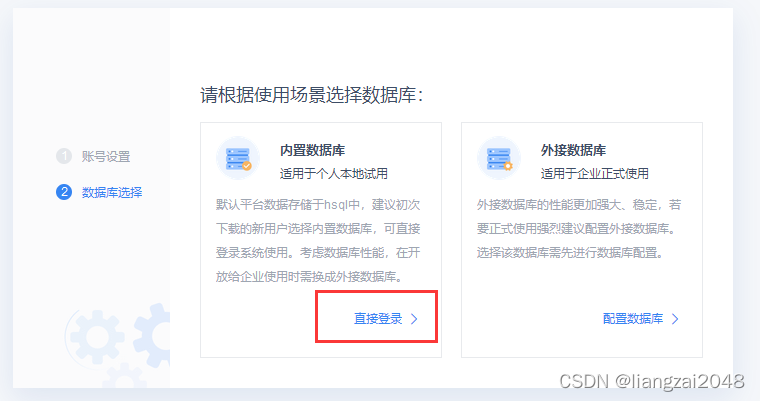

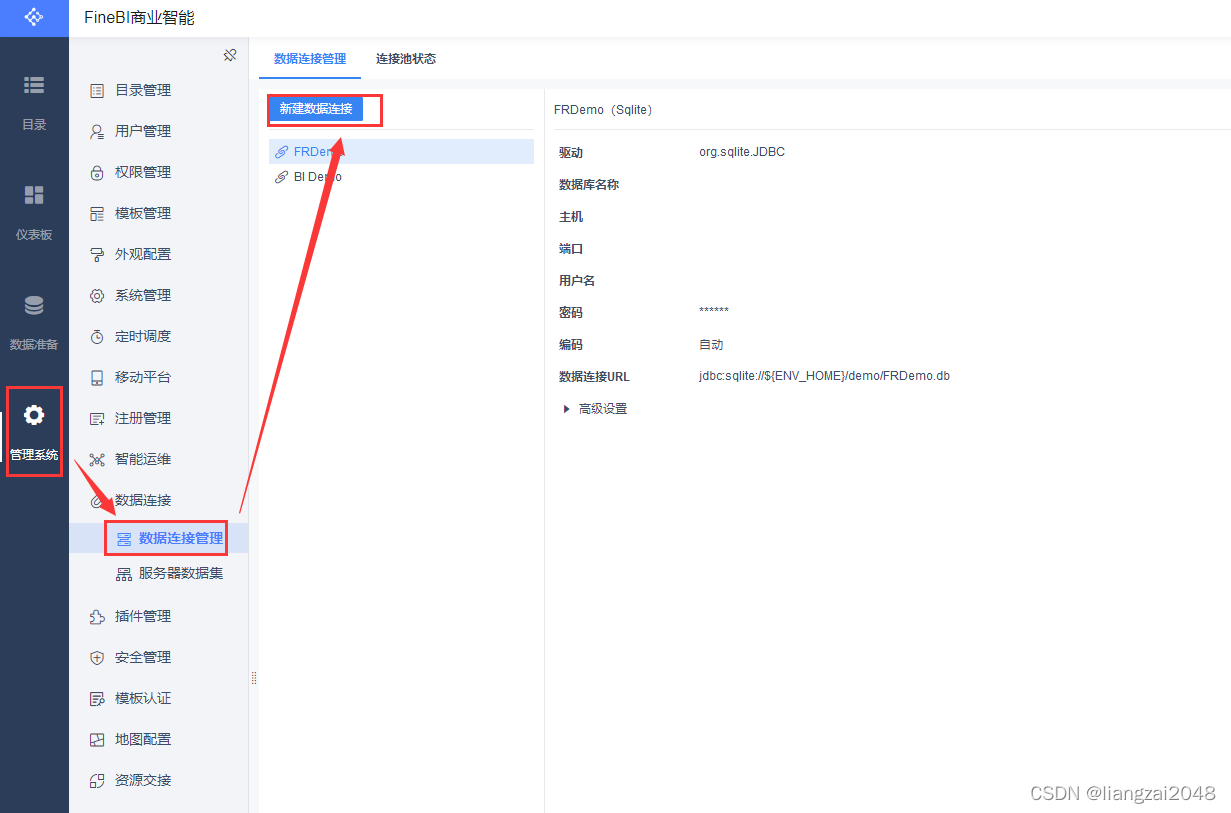

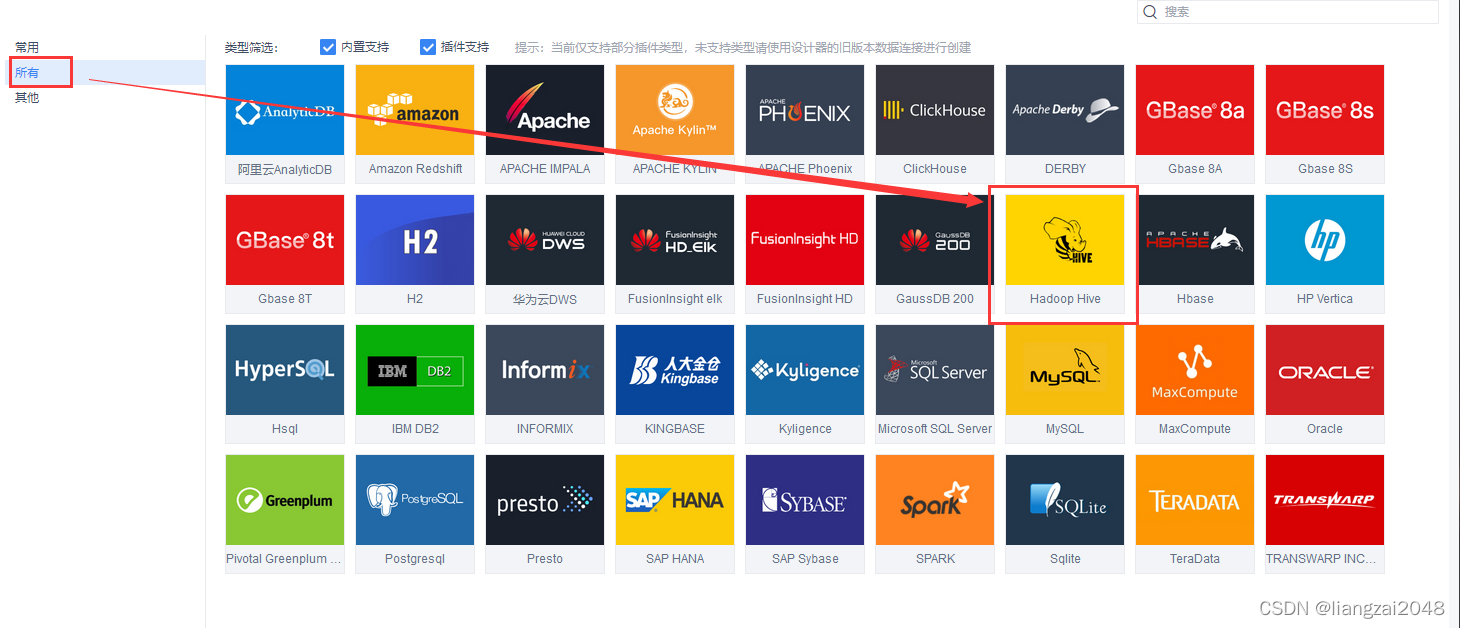

- Finebi

-

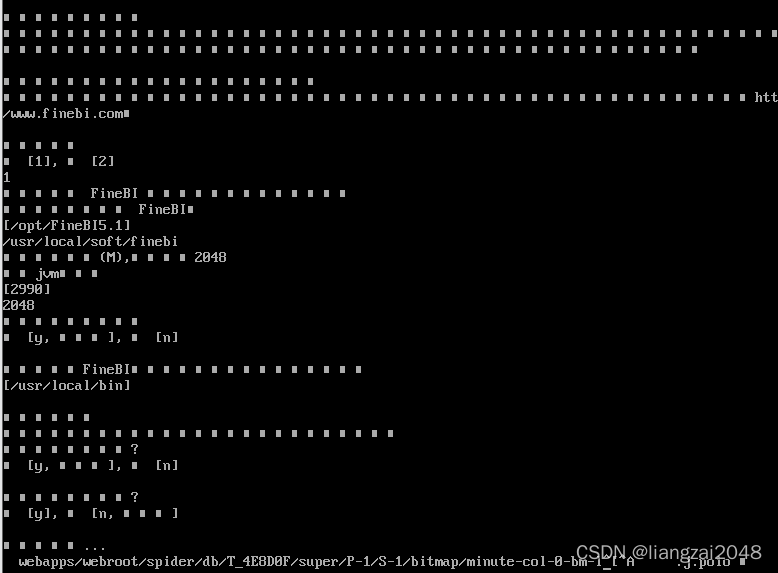

- Finebi官网[https://www.finebi.com/](https://www.finebi.com/)

-

- 注意

- 访问

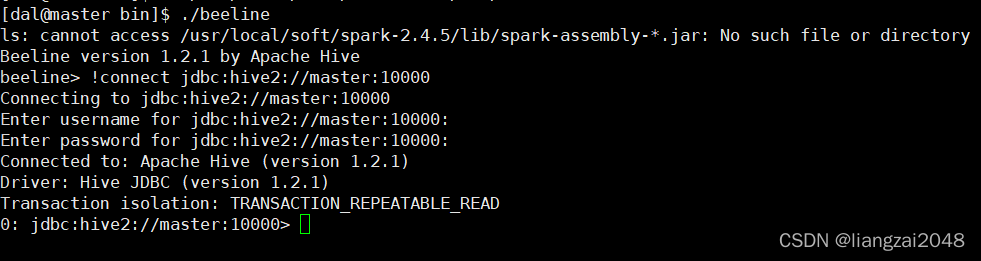

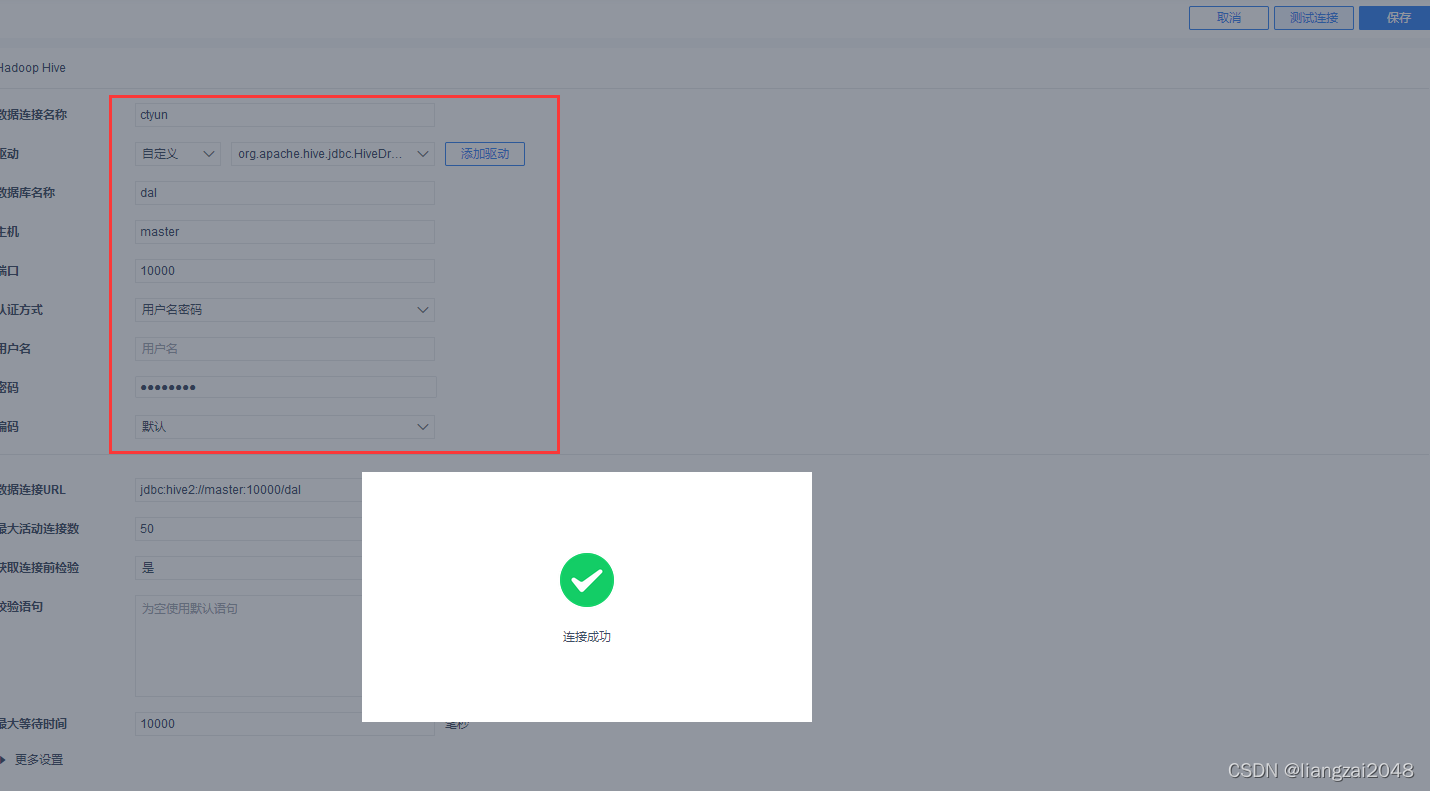

- (使用dal用户)开启hive服务连接JDBC

- ```解决0: jdbc:hive2://master:10000 (closed)>问题 ```

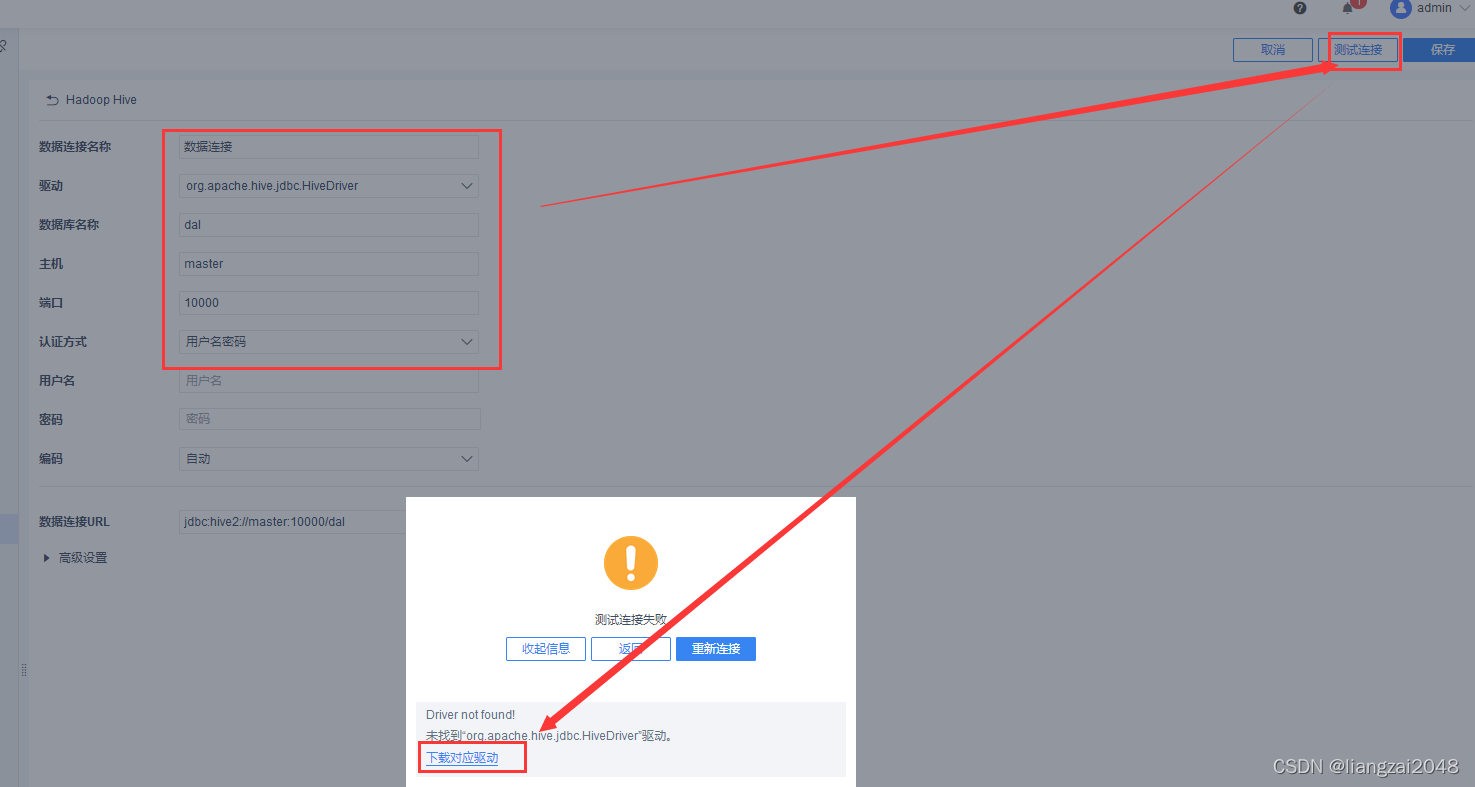

- 卸载(root)

- 当遇到这个问题别急好办,去VMware装

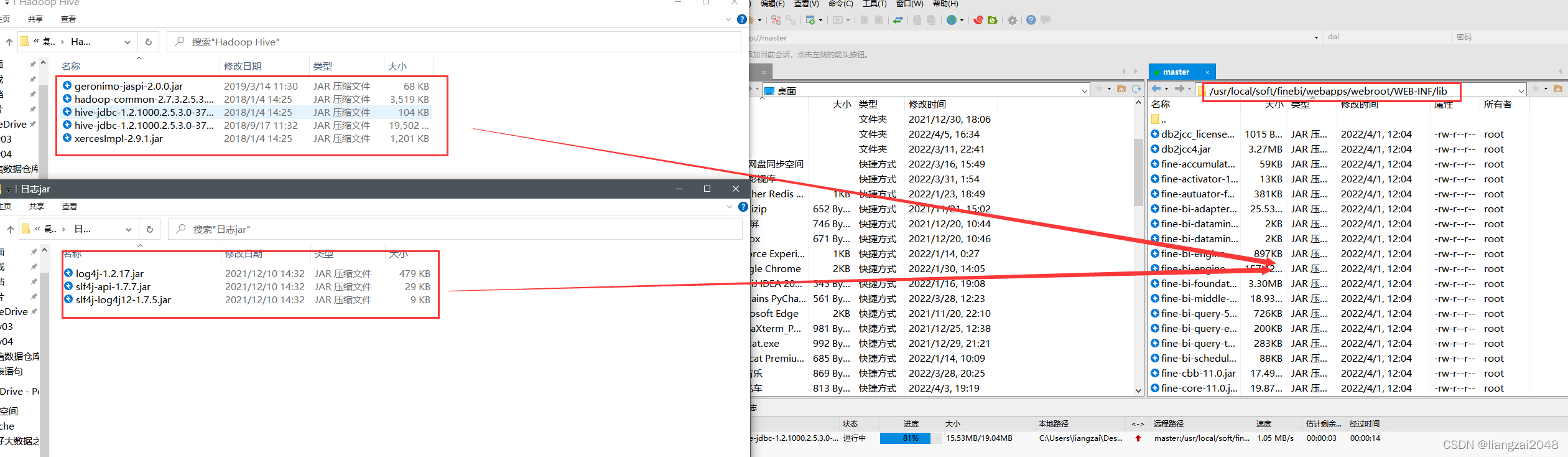

- 将下载的驱动解压上传

- 重启finebi

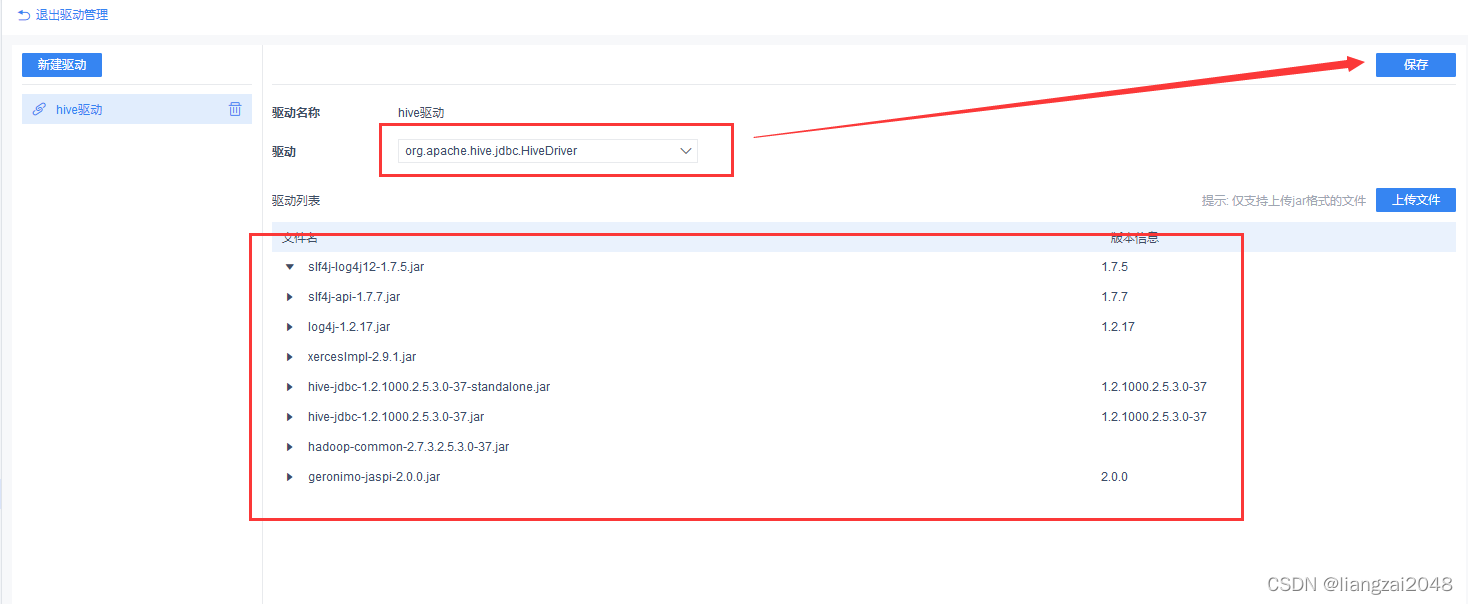

- 新建驱动

- 新建连接

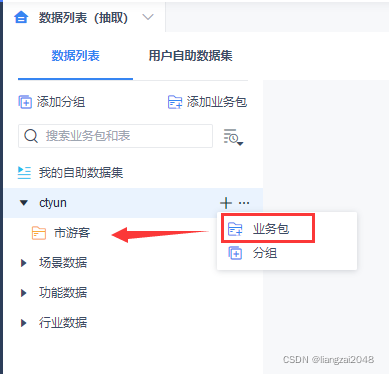

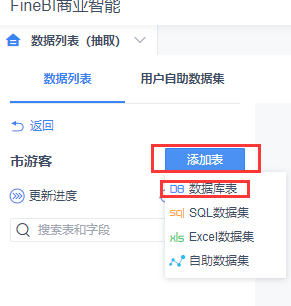

- 数据准备

- 可视化效果

- 电信离线仓库项目总结

Telecom Data Warehouse

Chapter 1

Project Development Process

Project Overview

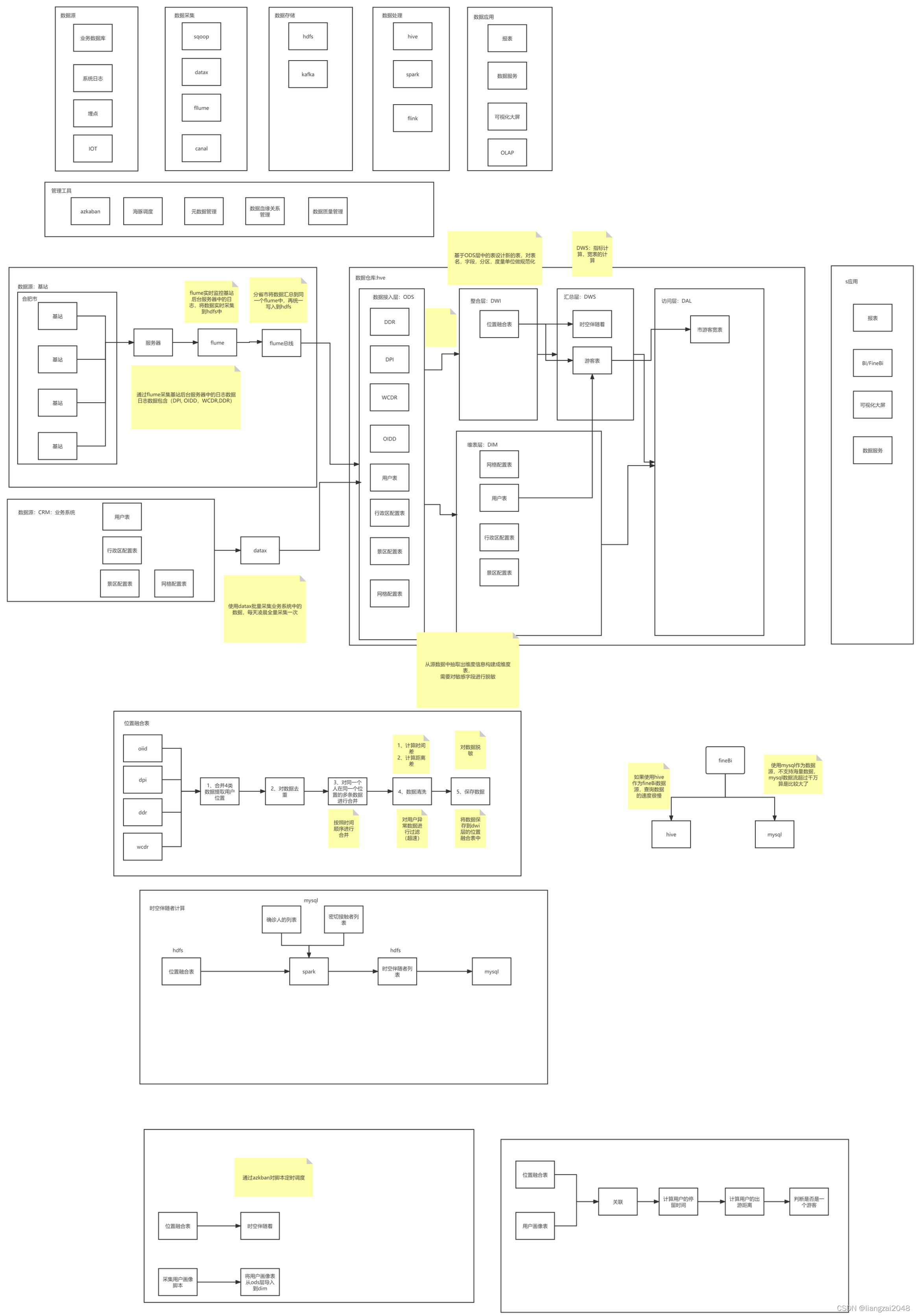

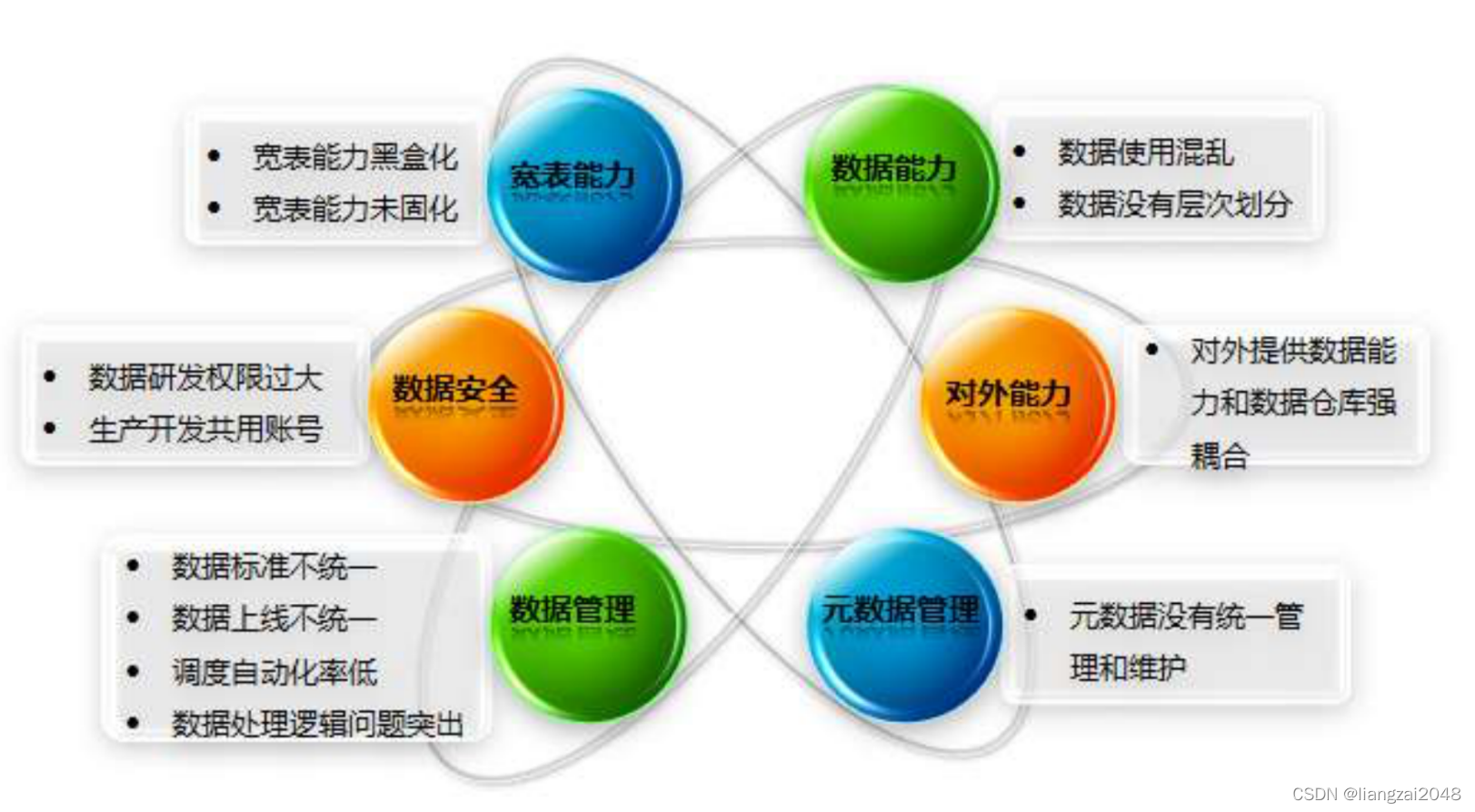

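For a company that provides big data services, data capability is the core asset. As external business grows quickly and internal data becomes richer and more complete, data capability needs to better support the company's key platform products: the consumer finance platform, the terminal industry platform, the commercial real estate platform, the basic and industry tag platform, and so on. Because each project has so far built its data capability independently to meet its own needs, without unified planning or unified management, the current data warehouse has the following problems: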

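General Business Principles

Overview of Information Domains

In telecom enterprises, IT support systems are divided into three major domains: the market operations domain (BSS: Business Support System), the network operations domain (OSS: Operation Support System), and the enterprise management domain (MSS: Management Support System). These are the three pillars of IT strategic planning in the telecom industry.

The IT support system consists of the three subsystems BSS, OSS, and MSS. Each carries a different responsibility within the overall IT support system, and the three are interrelated.

Market Operations Domain (BSS Domain)

The business support system (BSS) manages telecom services, tariffs, and marketing, as well as customer management and service processes. Its main systems include billing, customer service, accounting, settlement, and business analysis.

Enterprise Management Domain (MSS Domain)

The management support system (MSS) covers all non-core business processes needed to support the enterprise: setting corporate strategy and direction, enterprise risk management, audit management, public relations and image management, finance and asset management, human resources management, knowledge and R&D management, shareholder and external relations management, procurement management, corporate performance evaluation, and government policy and legal affairs.

Network Operations Domain (OSS Domain)

The operations support system (OSS) is the back-end support system oriented toward resources (networks, equipment, computing systems). It includes specialized network management systems, integrated network management systems, resource management systems, service provisioning systems, and service assurance systems, and it provides the means to keep the network running reliably, securely, and stably.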

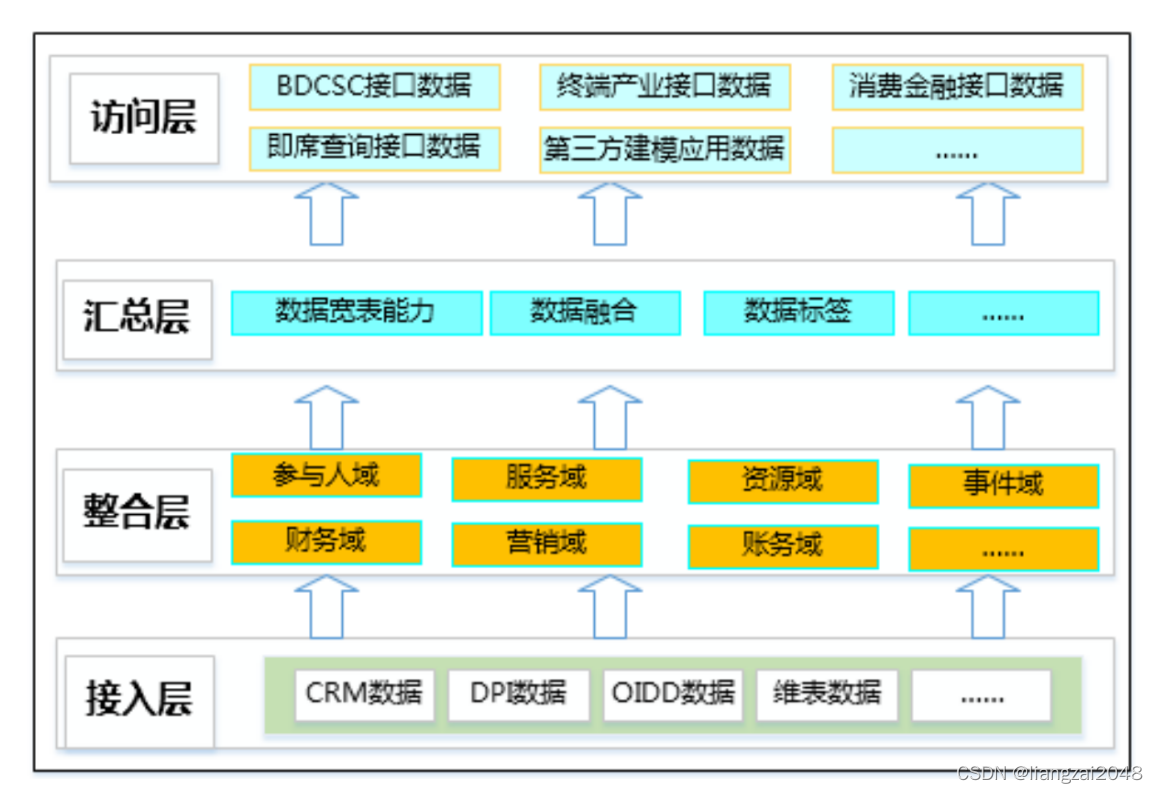

General Data Warehouse Layering

Overall Architecture

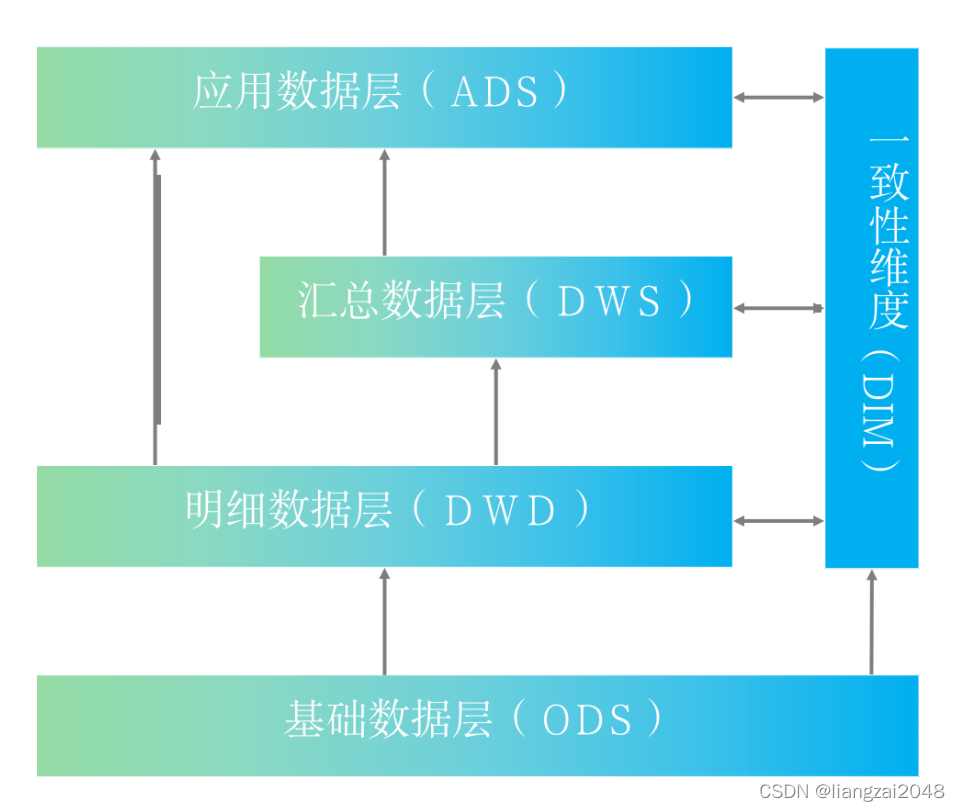

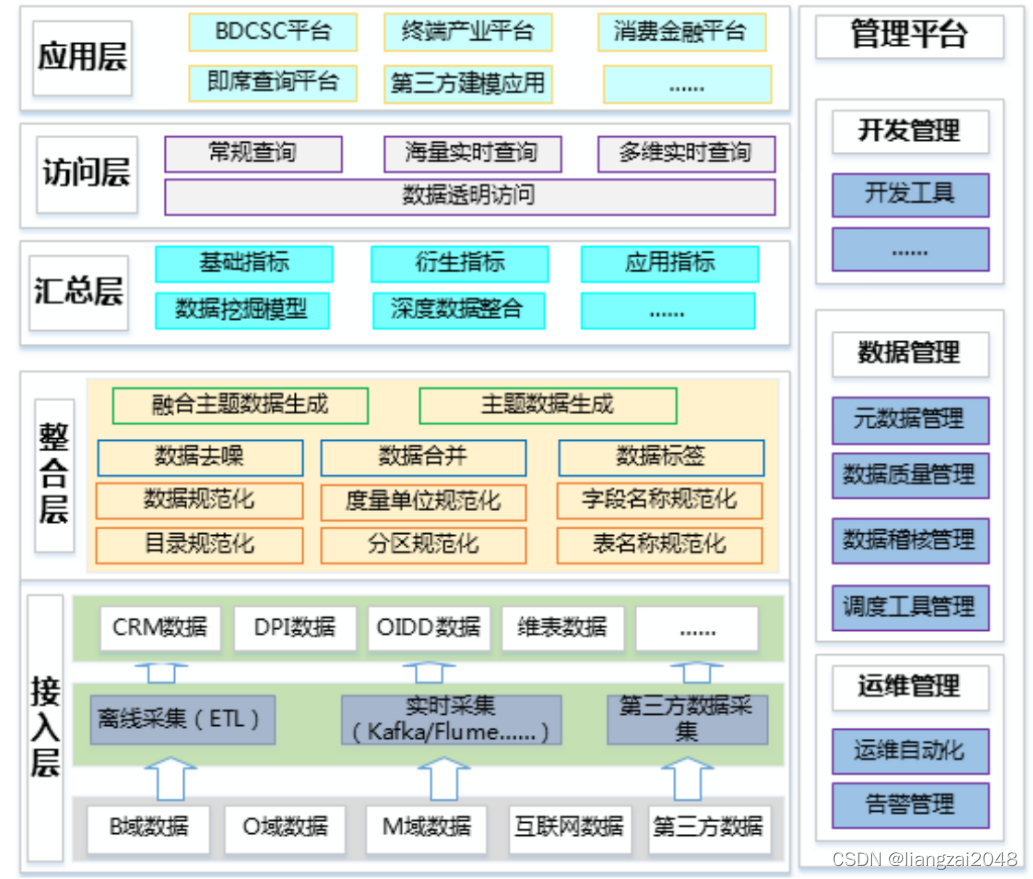

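Data Warehouse

- Data ingestion layer: loads data from source systems into the data warehouse so that later processing can take full advantage of the warehouse platform's own performance. Ingestion includes traditional offline ETL collection as well as real-time collection, web crawler parsing, and third-party data collection.

- Data integration layer: integrates the data. This covers standardization and normalization (of storage, naming, and data) and basic data tidying (denoising, merging, tagging). Subject-area data is then built on top of the standardized and tidied data, forming the basic data capability layer.

- Data summary layer: feeds the fused data into analysis engines such as data mining and deep learning, and builds indicator models and data capabilities according to the company's development and application needs, so that different internal and external applications can be supported quickly and simply.

- Data access layer: mainly implements read/write separation, decoupling the query and data retrieval needs of internal and external applications from the warehouse's compute capacity. It follows the principles that external permissions are minimized and that internal processing does not affect external applications.

- Data application layer: divides application data into groups by application category and requirement. It follows the principles of providing exactly as much as is needed, keeping internal changes from affecting external applications, and minimizing external permissions.

- Management platform: this is the single vertical element. It handles management and operations of the data warehouse, spans multiple layers, and provides unified management of the warehouse.

Permission Partitioning

Based on the data layers of the warehouse and the function of each layer, warehouse permissions follow three principles:

- Separate production accounts from accounts that support ad hoc requests

- Minimize internal permissions

- Fix external permissions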

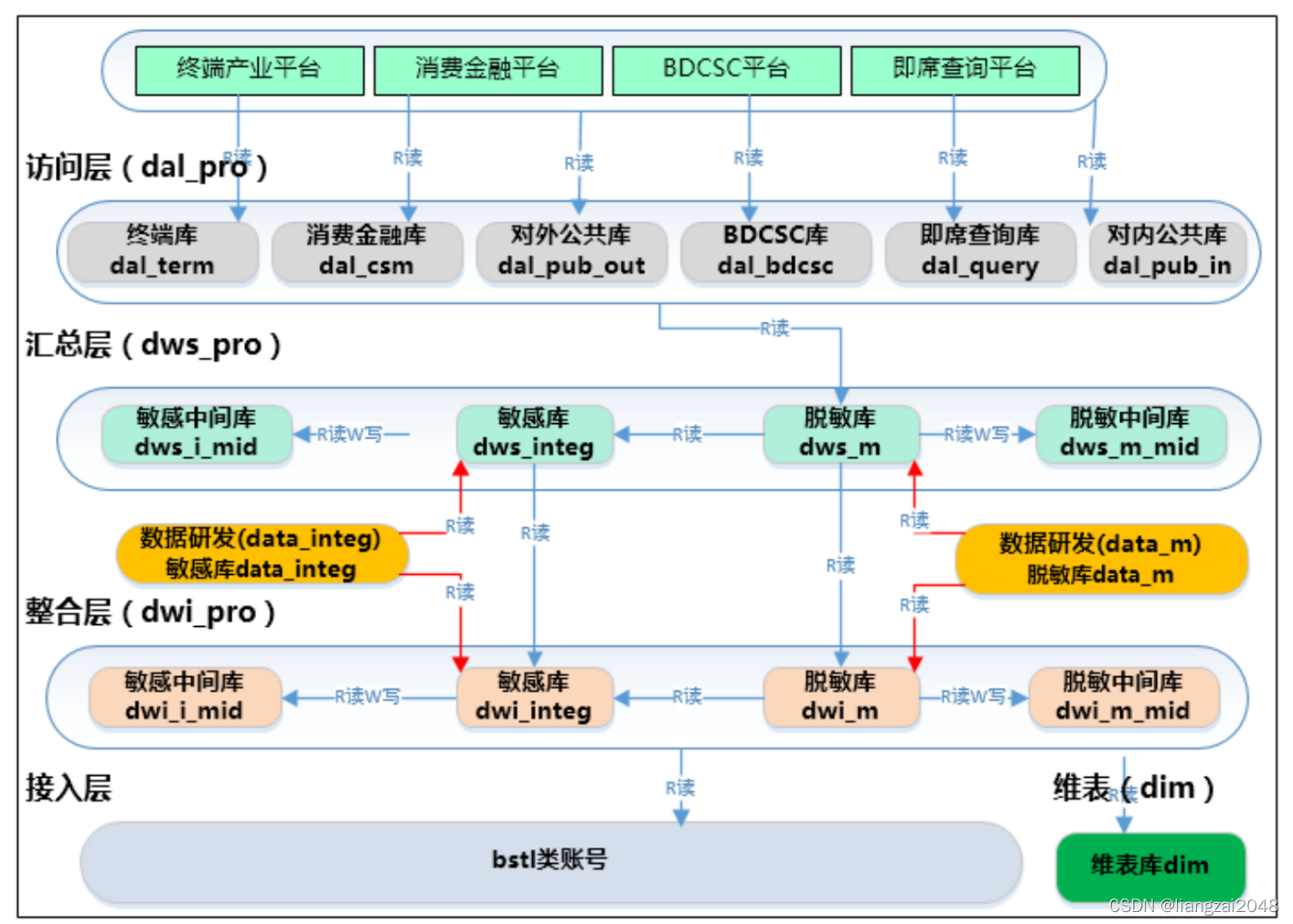

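In short, the guiding principles are minimal permissions, as few accounts as possible, and intelligent management. Permissions in the big data platform's data warehouse are divided by data layer; the design of each account and the relationships among accounts are shown in the following figure:

Data Warehouse Data Architecture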

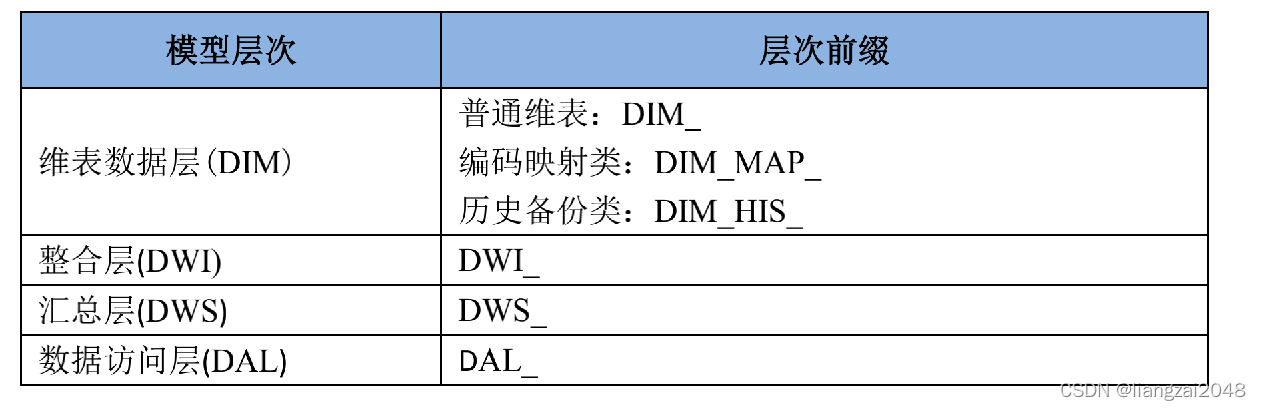

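Layer Naming Conventions

Naming conventions follow the layered architecture of the whole big data platform: the dimension layer (DIM), the integration layer (DWI), the summary layer (DWS), and the access layer (DAL).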

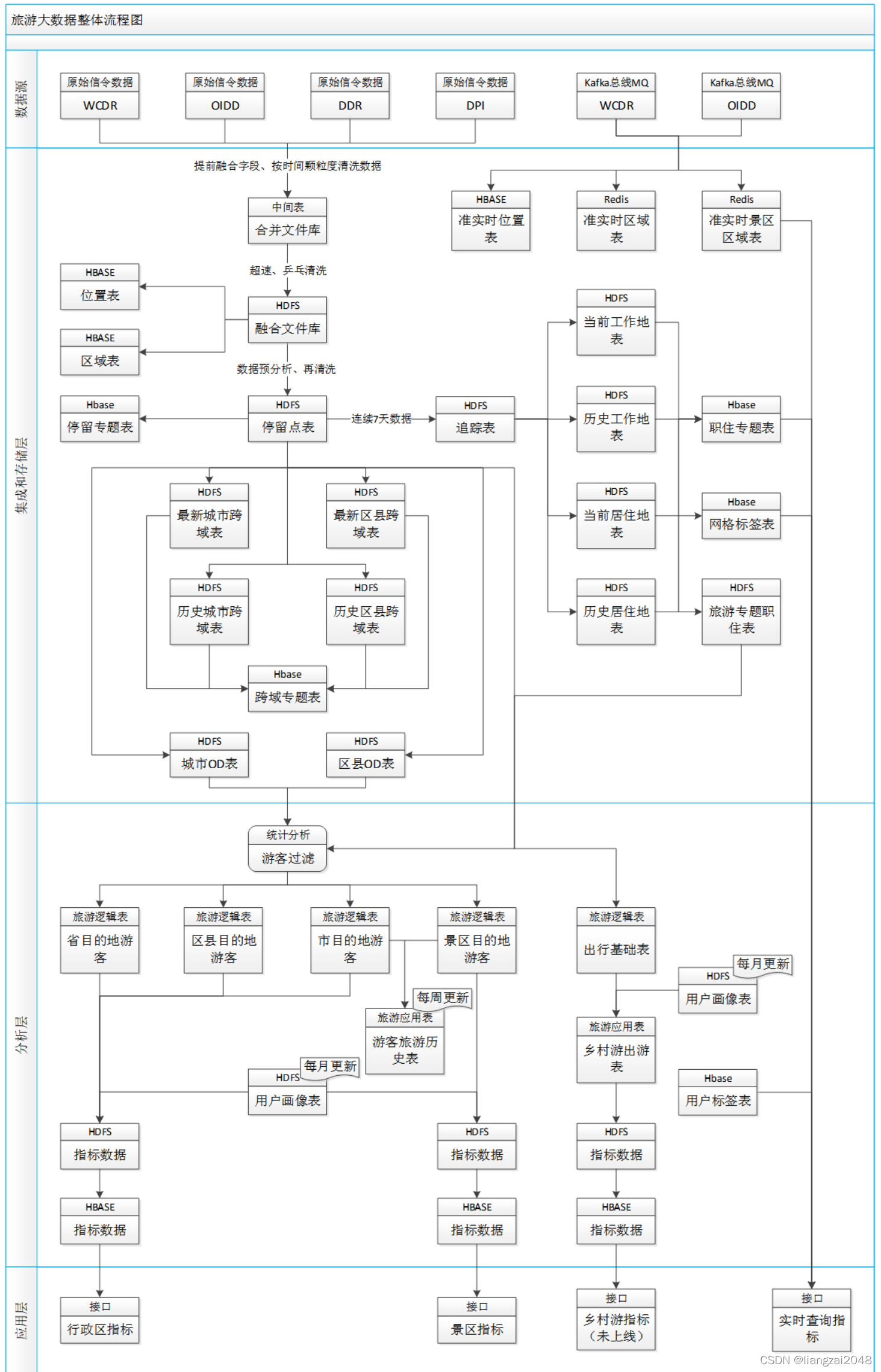

Chapter 2

Tourism Big Data: Overall Framework and Processing Flow

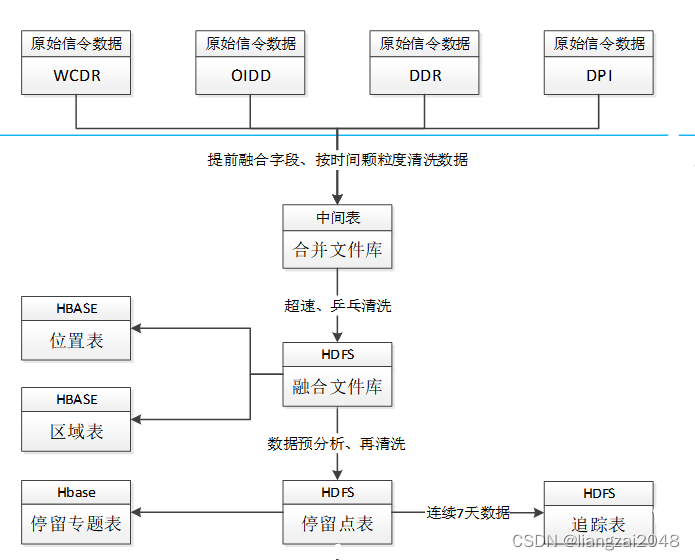

I. Overall Flow Diagram

II. Parameter Fields

1. Data Sources

1.1 OIDD Data

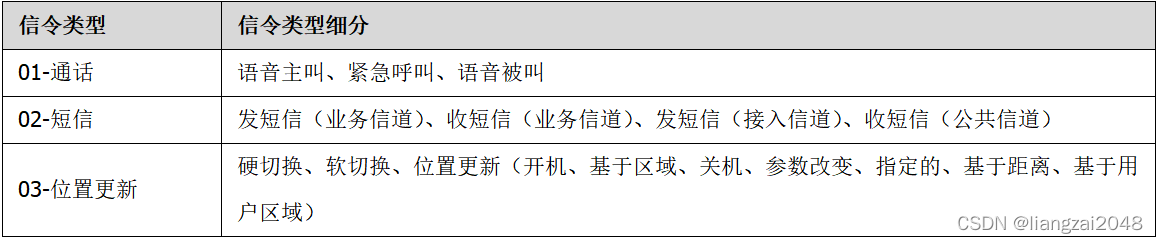

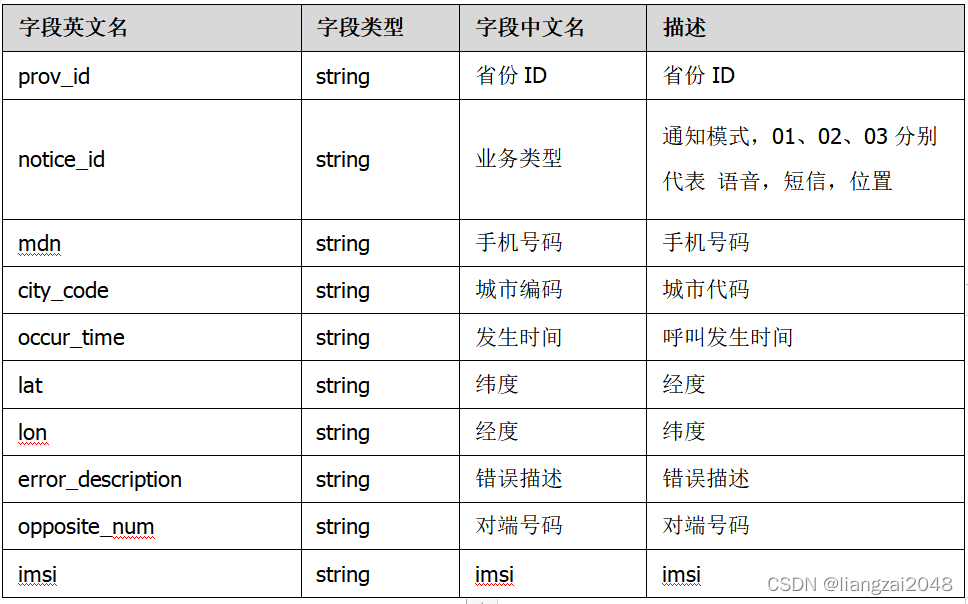

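OIDD data is signaling data collected from the A interface, including the handset's location when a service event occurs. OIDD signaling falls into three categories: call records, SMS records, and user location update records.

Data quality:

OIDD data has been aggregated from 30 provinces (all except Shandong).

Data fields:

1.2 WCDR Data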

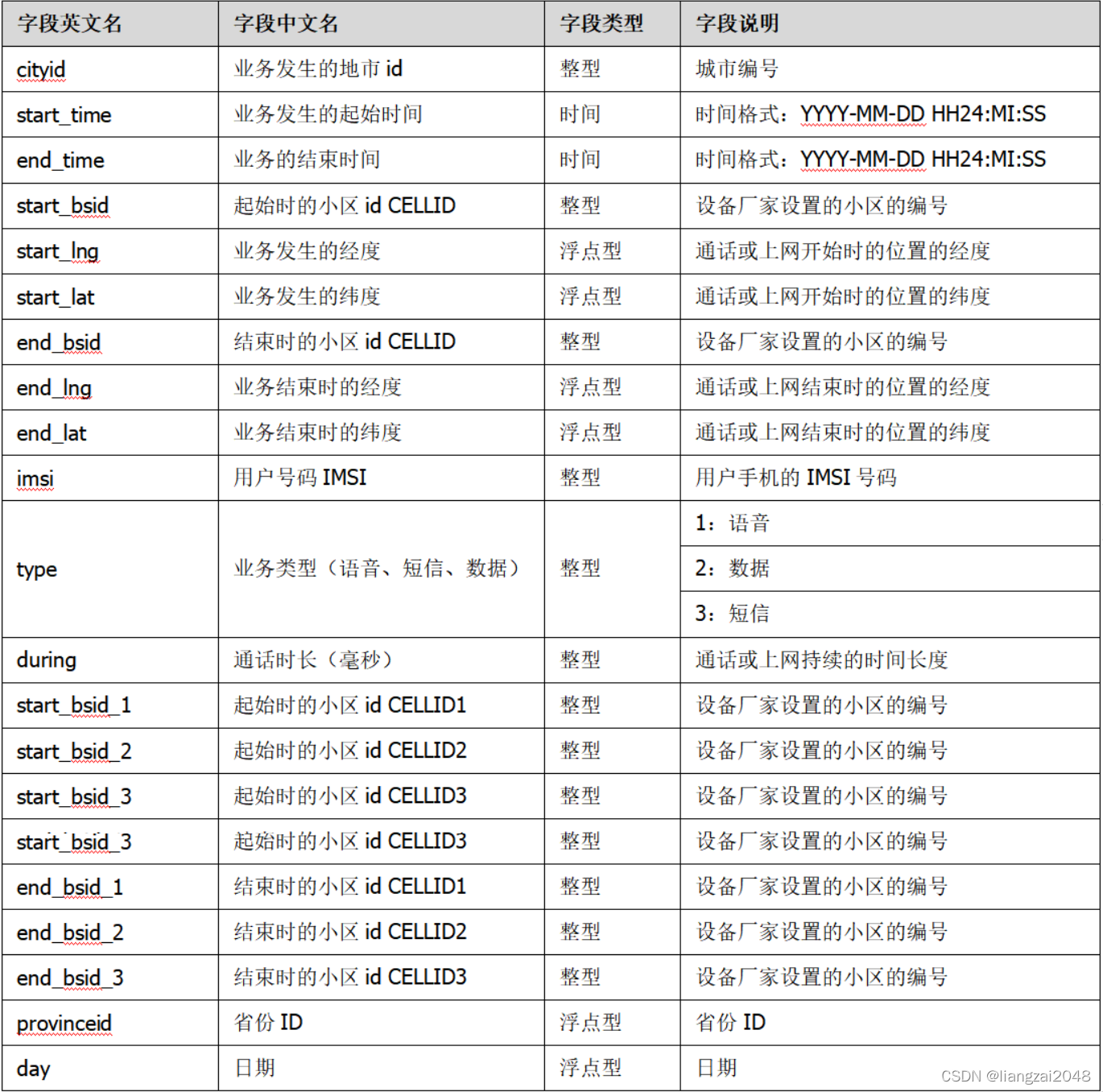

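WCDR data is collected from the Abis interface in the network. It uses measurements from three sectors during a service event and determines the user's location by triangulation.

Data quality:

Currently only 17 provinces upload WCDR data to the production zone, and the reported data is incomplete.

Data fields:

1.3 Mobile DPI Data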

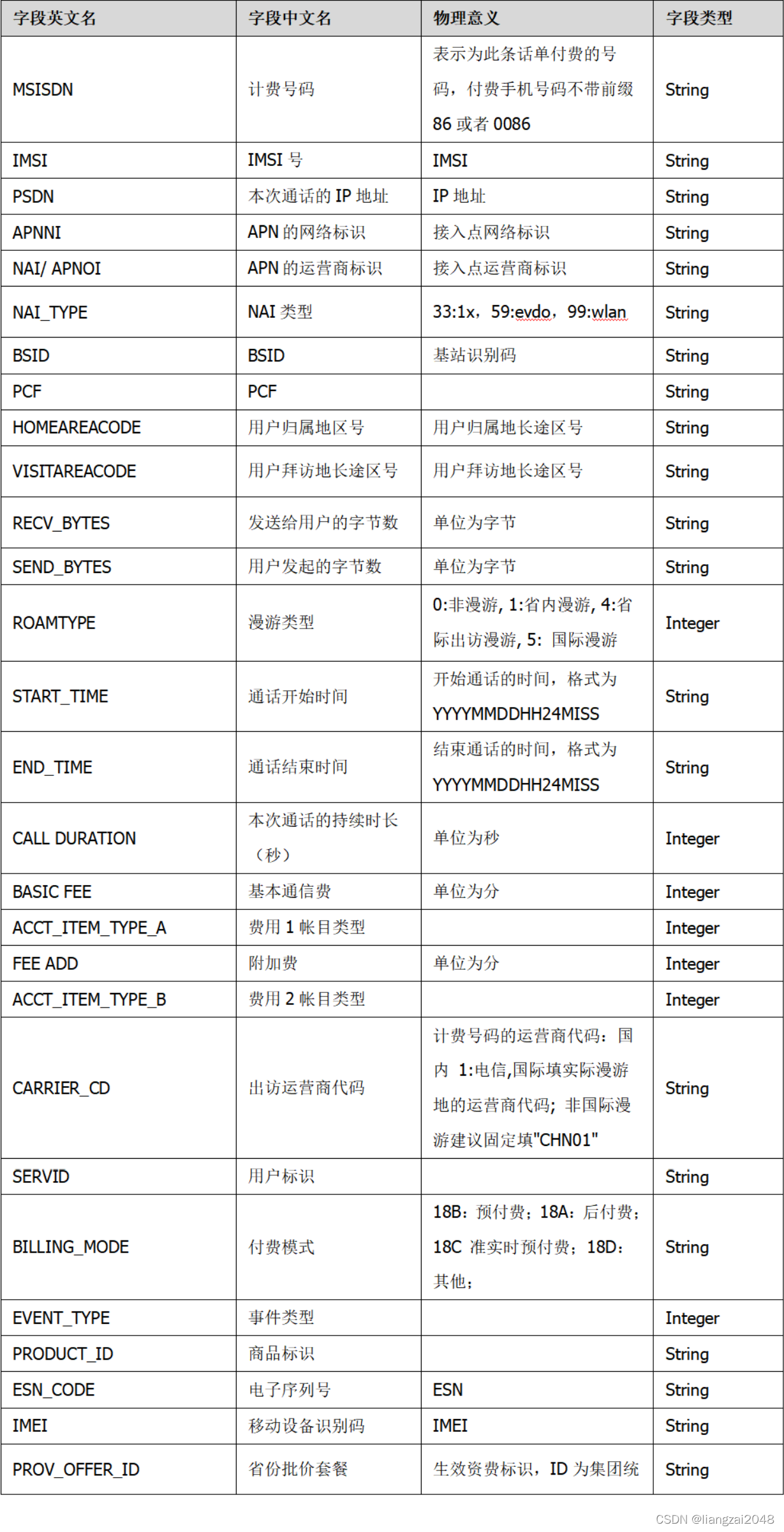

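Mobile DPI data is collected from the interface between the mobile core network and the PDSN while mobile users are online over mobile data.

Data quality:

Mobile DPI covers both 3G and 4G data. 3G records contain BSID location information, while 4G records currently contain only the SAI and TAI fields, from which a location cannot be determined.

Data fields:

1.4 Mobile DDR Data

Currently, only the mobile data detail records in DDR allow a base station identifier to be extracted. Voice, SMS, value-added, and other services carry no location information and are not used as base data for fusion.

Data quality:

Provincial companies upload DDR data one day later than the other sources, so fusion of DDR data runs with a one-day delay, while the other sources are fused and cleaned first on the following day.

Data fields:

2. Integration and Storage Layer

2.1 Fusion Files

Minute-granularity location data is extracted from OIDD, mobile DPI, and WCDR, records with duplicate timestamps are cleaned a second time, and the result is written to the merged file store. Noisy data is then cleaned according to rules such as ping-pong handover and overspeed, and the result is written to the fused file store. Mobile DDR data is processed one day after it is uploaded.

After the raw signaling data is fused, 12 fields are retained based on current application needs and expected future scenarios, including phone number, city code, service start time, longitude and latitude, BSID, peer number, data source, and service type.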

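| No. | Field | Name | Meaning | Type | Notes |
|---|---|---|---|---|---|
| 1 | mdn | Phone number | User's phone number | String | |
| 2 | city_code | City code | Area code of the city where the user is located | String | City where the service event occurred |
| 3 | StartTime | Service start time | Start time of the service flow, in yyyymmddhhmmss format (24-hour clock). If intermediate-record mode is enabled, every record carries the same start time. | String | |
| 4 | lat | Latitude | Latitude of the user's position | String | |
| 5 | lon | Longitude | Longitude of the user's position | String | |
| 6 | BSID | Base station ID | Base station identifier, composed of SID (4 octets) + NID (4 octets) + CI (4 octets). The high 12 bits of CI are the cell ID and the low 4 bits are the sector ID. | String | BSID does not exist in OIDD; it is recommended that OIDD provide BSID later. For now, BSID is back-filled into OIDD data. |
| 7 | gridID | Grid ID | ID of the grid the user is in | String | |
| 8 | service_type | Service type | Voice 1, SMS 2, location update 3, data service 4 | INTEGER | OIDD values are unchanged; DDR and DPI are always 4; for WCDR, 1 is unchanged, 2 (data) becomes 4, and 3 (SMS) becomes 2. |
| 9 | event_type | Event type | Voice: caller (11), callee (12). SMS: sender (21), receiver (22). Location update: periodic update 310, power-on 311, area update 312, power-off 313. 4G: periodic update 320, power-on 321, area update 322, power-off 323. Data service: protocol type. | INTEGER | Reserved for now. Caller/callee values will be filled in once later data tables carry them, and location update values once the signaling data includes the detailed service classification. For data services, the value is the DPI protocol number (protocolid) prefixed with 4, that is, "4" + DPI protocol number. |
| 10 | data_source | Data source | OIDD (01), DPI (02), DDR (03), WCDR (04), ... | INTEGER | Tag for the origin of the data. There are currently 4 telecom sources; the number increments as sources are added. |
| 11 | RESERVER1 | Reserved 1 | | String | |
| 12 | RESERVER2 | Reserved 2 | | String | |
| 13 | RESERVER3 | Reserved 3 | | String | |
| 14 | RESERVER4 | Reserved 4 | | String | |
| 15 | RESERVER5 | Reserved 5 | | String | |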
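The per-source rules in the notes column for `service_type` and `event_type` can be sketched in plain Java. This is a minimal illustration, not the project's code: the class `ServiceTypeNormalizer`, its method names, and the use of source names as strings are all assumptions made for the example.

```java
// Hypothetical helper illustrating the service_type / event_type notes in the table above.
final class ServiceTypeNormalizer {
    // Unified codes from the table: 1 = voice, 2 = SMS, 3 = location update, 4 = data service.
    static final int DATA_SERVICE = 4;

    // Maps a raw service type to the unified code, depending on the data source.
    static int normalize(String dataSource, int rawType) {
        switch (dataSource) {
            case "OIDD":
                return rawType;               // OIDD values are kept unchanged
            case "DPI":
            case "DDR":
                return DATA_SERVICE;          // DPI and DDR are always data service
            case "WCDR":
                switch (rawType) {
                    case 1: return 1;         // voice stays 1
                    case 2: return 4;         // WCDR 2 (data) becomes 4
                    case 3: return 2;         // WCDR 3 (SMS) becomes 2
                    default: throw new IllegalArgumentException("unknown WCDR type " + rawType);
                }
            default:
                throw new IllegalArgumentException("unknown source " + dataSource);
        }
    }

    // event_type for data services: "4" followed by the DPI protocol number.
    static int dataEventType(int protocolId) {
        return Integer.parseInt("4" + protocolId);
    }

    public static void main(String[] args) {
        System.out.println(normalize("WCDR", 2));
        System.out.println(dataEventType(17));
    }
}
```

Keeping the remapping in one place makes it easy to extend when a fifth data source is added, which the table anticipates.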

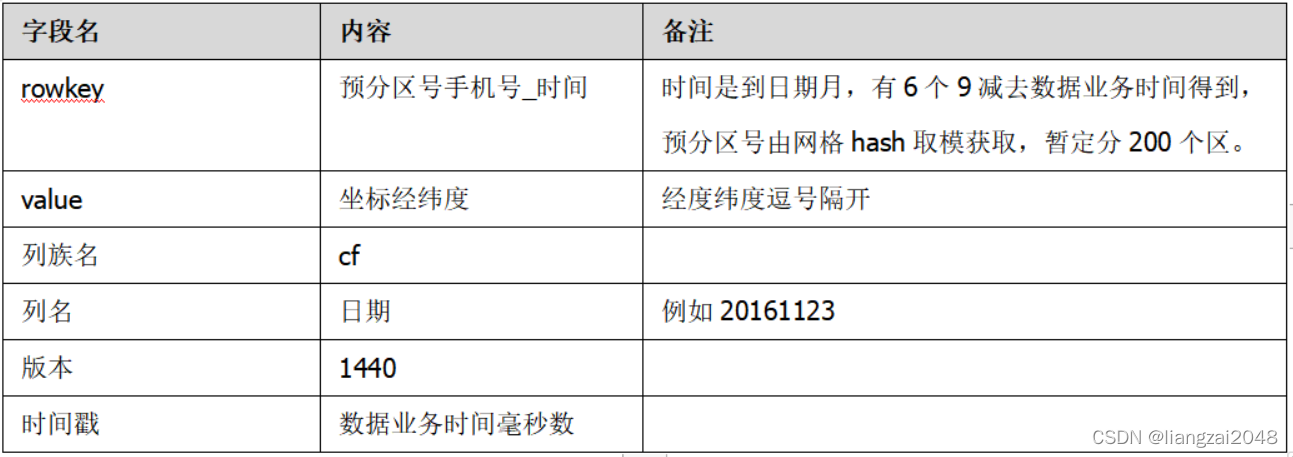

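2.1.1 Location Table

The location table holds users' location information. It uses the date as the column name and a timestamp to record when each longitude/latitude point was observed.

The location table fields are as follows: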

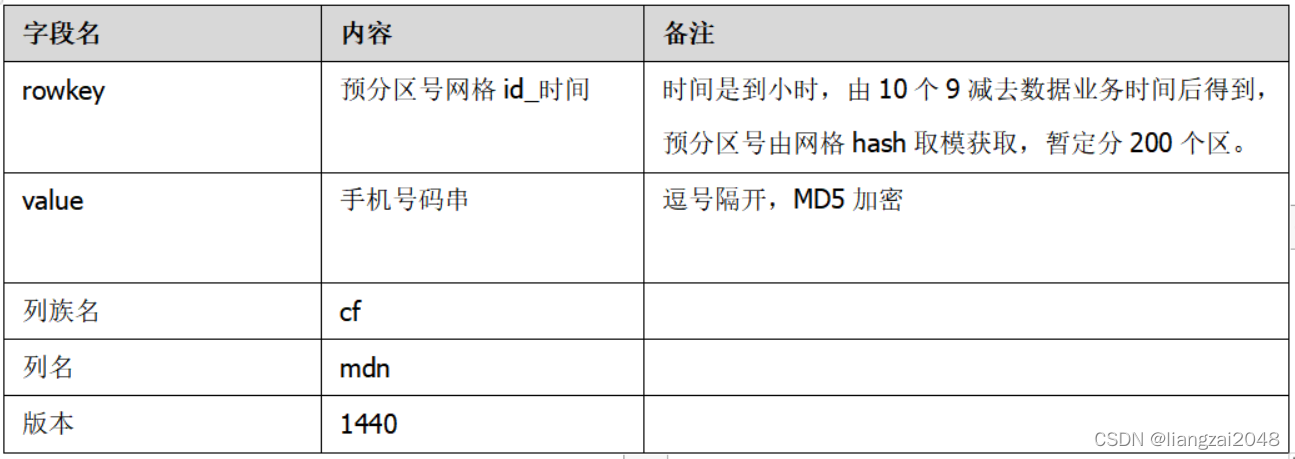

2.1.2 Region Table

The region table records, for each grid and each hour, the users present in that grid.
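As a rough illustration of that grouping, the following Java sketch collects the distinct users seen in each grid during each hour. The record layout `{mdn, timestamp, gridId}`, the `yyyyMMddHHmmss` timestamp format, and the class name `RegionTableSketch` are assumptions for the example; the real table is built from the fused location data.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical sketch: users present per grid per hour.
final class RegionTableSketch {
    // Each record is {mdn, timestamp as yyyyMMddHHmmss, gridId} (assumed layout).
    static Map<String, Set<String>> usersPerGridHour(List<String[]> records) {
        Map<String, Set<String>> result = new TreeMap<>();
        for (String[] r : records) {
            String hour = r[1].substring(0, 10);      // keep yyyyMMddHH
            String key = r[2] + "|" + hour;           // grid and hour form the grouping key
            result.computeIfAbsent(key, k -> new TreeSet<>()).add(r[0]);
        }
        return result;
    }

    public static void main(String[] args) {
        List<String[]> records = Arrays.asList(
                new String[]{"A", "20220409081500", "G1"},
                new String[]{"B", "20220409084000", "G1"},
                new String[]{"A", "20220409090100", "G1"});
        System.out.println(usersPerGridHour(records));
    }
}
```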

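The region table fields are as follows:

2.2 Stay Points

Stay point: a user who stays inside one telecom grid produces a stay point. In practice, the multiple points recorded inside a grid are reduced to two points, or to one point if there was only one to begin with; these are the stay points. (Grid: a 500 m by 500 m cell defined internally by China Telecom.)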
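One way to read this rule is that each consecutive run of points in the same grid keeps only its first and last point. The Java sketch below shows that reading under stated assumptions: points are `{timestamp, gridId}` pairs already sorted by time, and the class name `StayPoints` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of collapsing points inside a grid into stay points.
final class StayPoints {
    // Each point is {timestamp, gridId}; the input must be sorted by timestamp.
    static List<String[]> collapse(List<String[]> points) {
        List<String[]> out = new ArrayList<>();
        int i = 0;
        while (i < points.size()) {
            int j = i;
            // Extend j to the last consecutive point in the same grid as point i.
            while (j + 1 < points.size() && points.get(j + 1)[1].equals(points.get(i)[1])) {
                j++;
            }
            out.add(points.get(i));          // first point of the run
            if (j > i) {
                out.add(points.get(j));      // last point, only if the run has more than one point
            }
            i = j + 1;
        }
        return out;
    }

    public static void main(String[] args) {
        List<String[]> points = Arrays.asList(
                new String[]{"t1", "G1"}, new String[]{"t2", "G1"},
                new String[]{"t3", "G1"}, new String[]{"t4", "G2"});
        for (String[] p : collapse(points)) {
            System.out.println(p[0] + " " + p[1]);
        }
    }
}
```

Working on consecutive runs rather than grouping by grid keeps two separate visits to the same grid apart.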

The stay point table fields are as follows:

2.3 Home and Work Locations

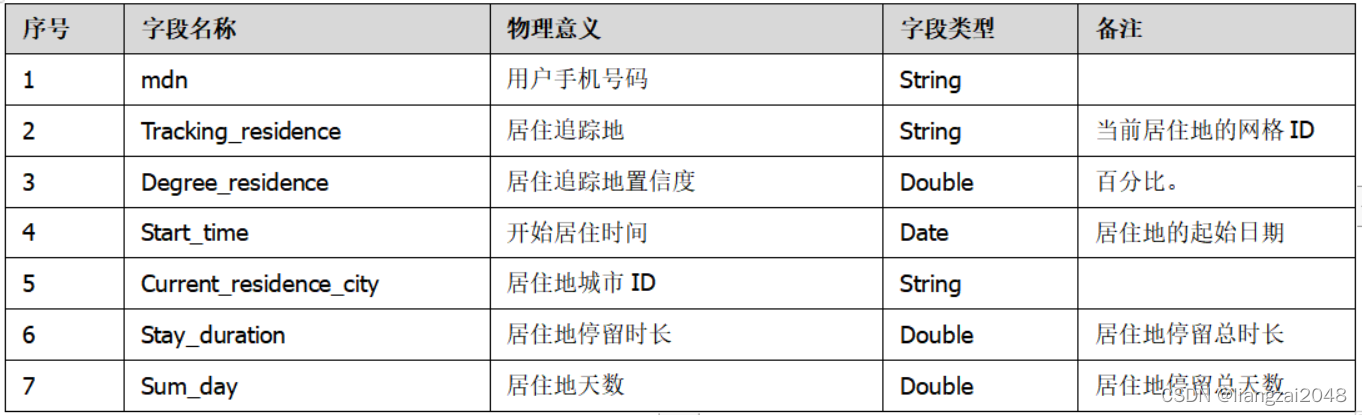

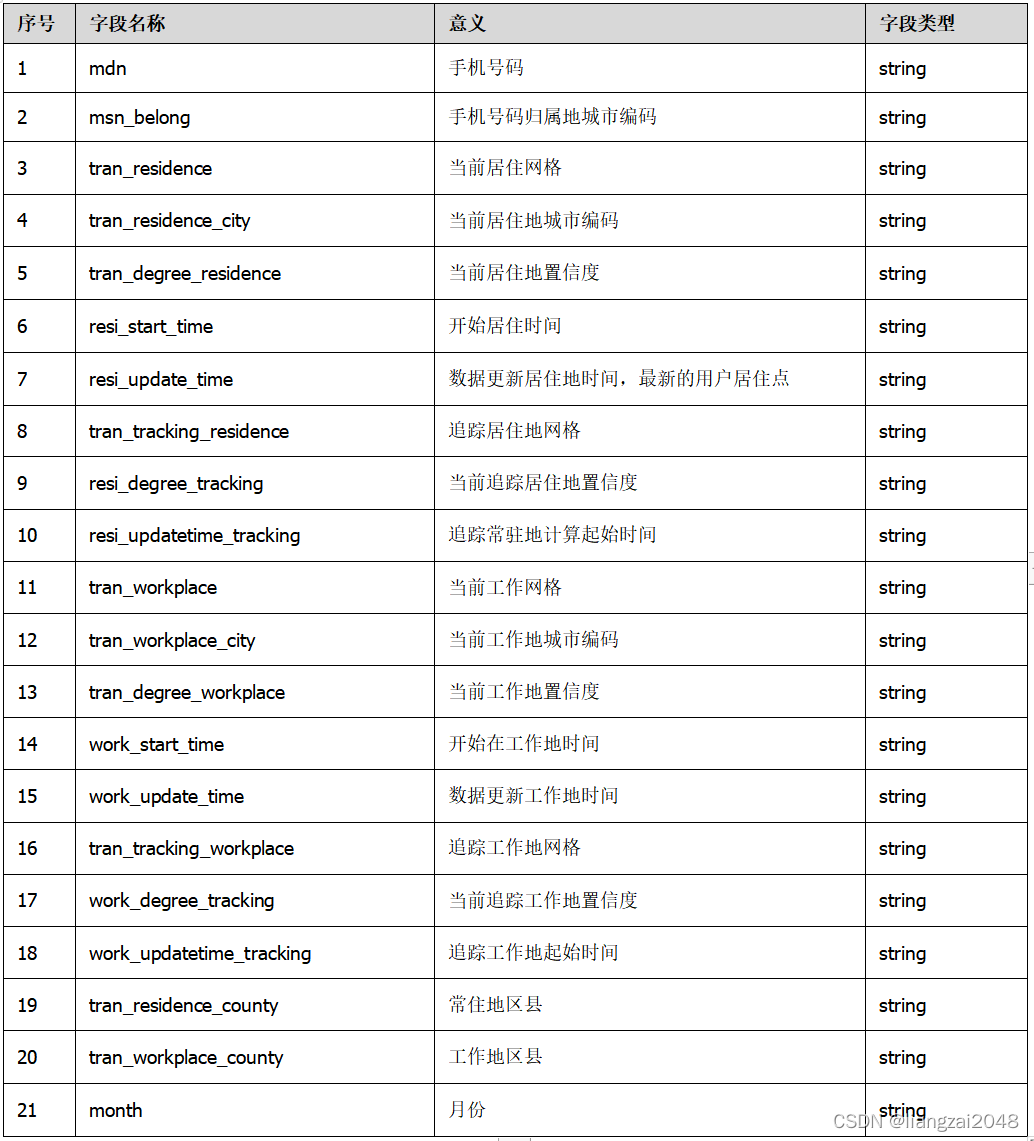

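2.3.1 Residence Tracking

Using location data reported through handset signaling, the areas where a user frequently appears during rest hours are tracked and monitored over the long term; this document defines such an area as the place of residence. Tracking runs weekly and yields the residence for that week.

The residence tracking table fields are as follows: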

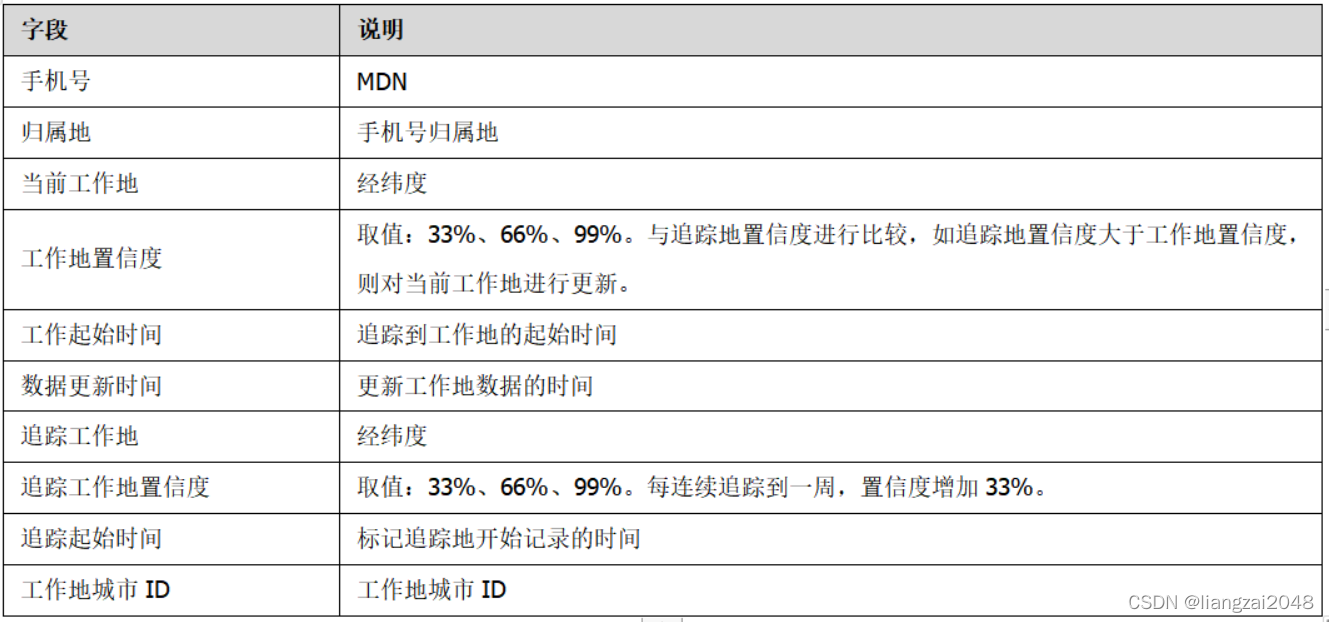

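2.3.2 Workplace Tracking

Using location data reported through handset signaling, the areas where a user frequently appears during working hours are tracked and monitored over the long term; this document defines such an area as the workplace. Tracking runs weekly and yields the workplace for that week.

The workplace tracking table fields are as follows: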

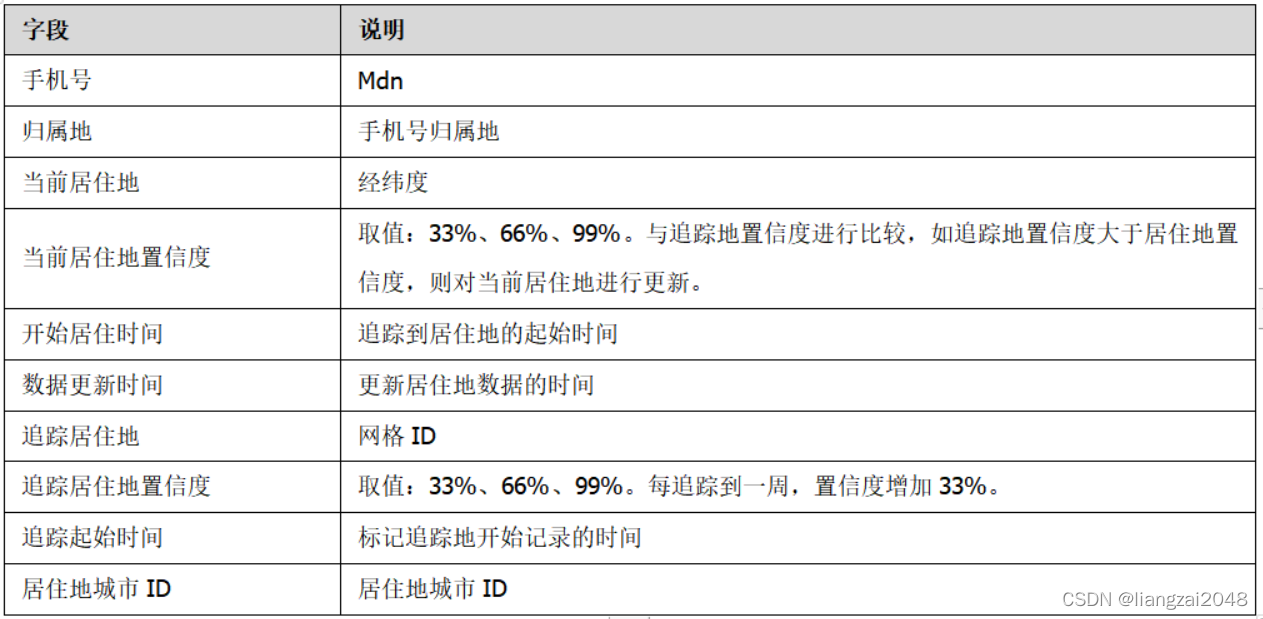

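2.3.3 Current Residence Table

Using location data reported through handset signaling, the areas where a user frequently appears during rest hours are tracked and monitored over the long term; this document defines such an area as the place of residence. The user's residence is derived by comparing against the tracking table and analyzing the results together.

The current residence table fields are as follows: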

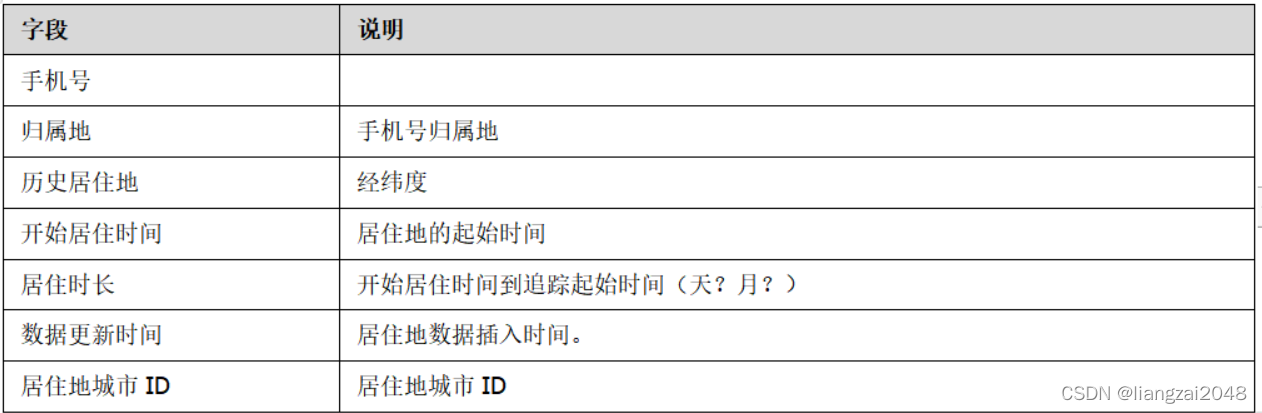

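2.3.4 Historical Residence Table

The historical residence table records every residence where the user has lived for at least one week.

The historical residence table fields are as follows:

2.3.5 Current Workplace Table

Using location data reported through handset signaling, the areas where a user frequently appears during working hours are tracked and monitored over the long term; this document defines such an area as the workplace. The user's workplace is derived by comparing against the tracking table and analyzing the results together.

The current workplace table fields are as follows: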

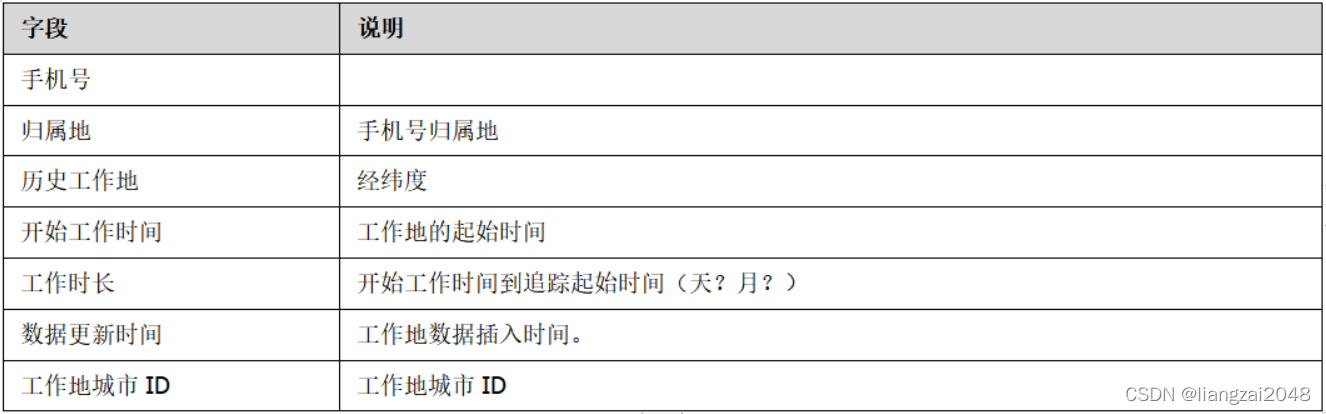

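2.3.6 Historical Workplace Table

The historical workplace table records every workplace where the user has worked for at least one week.

The historical workplace table fields are as follows: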

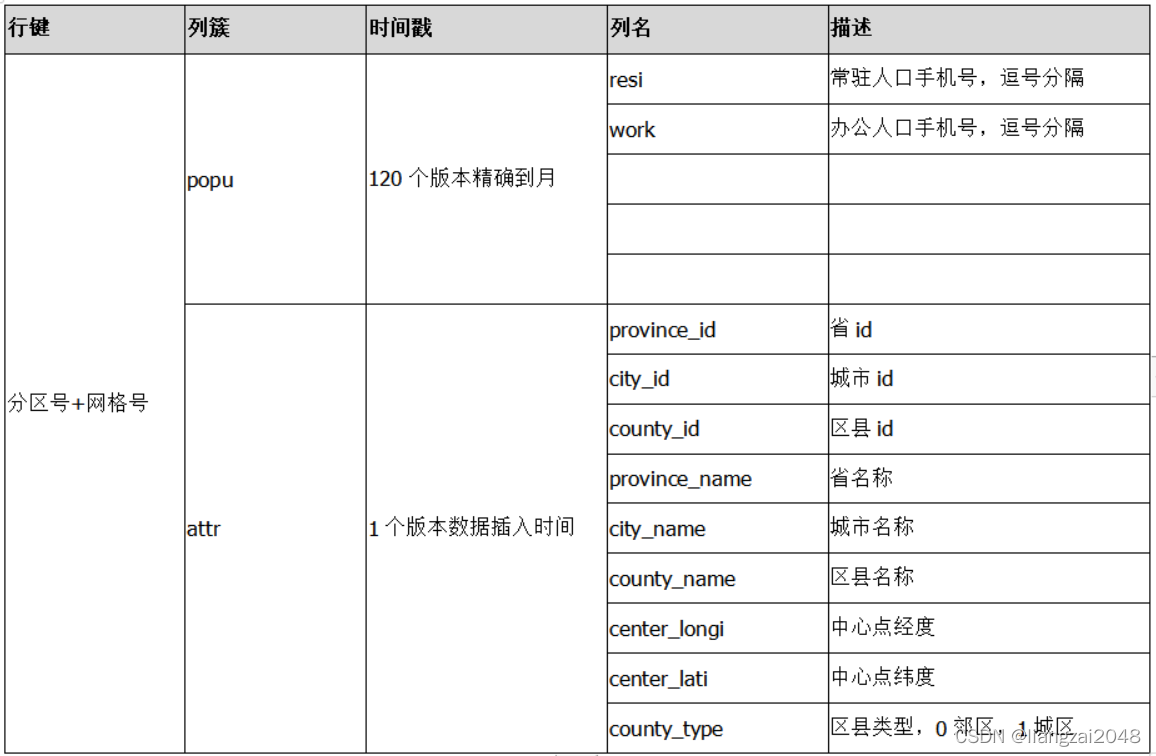

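2.3.7 Grid Tag Table

The grid tag table provides grid-level home and work information for the real-time interface.

The grid tag table fields are as follows:

2.3.8 Tourism Home/Work Table

The tourism home/work table aggregates the information from the individual home and work tables.

The tourism home/work table fields are as follows:

2.4 Cross-Region Movement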

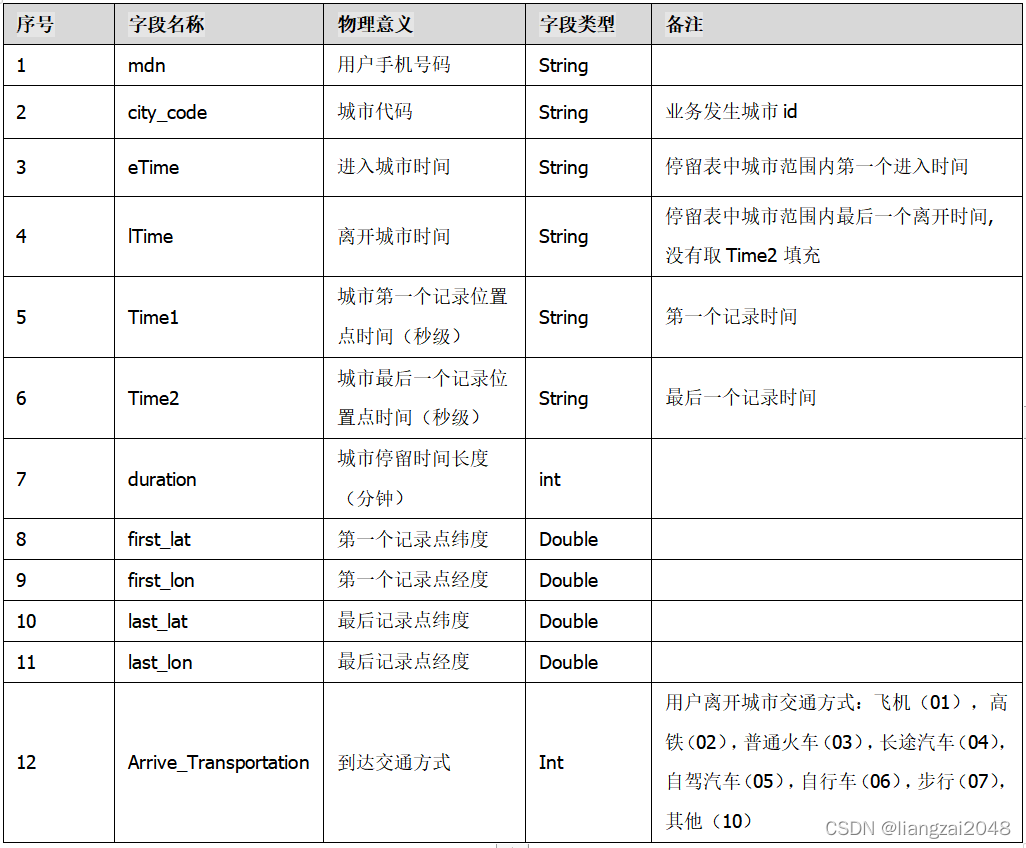

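2.4.1 Latest City Cross-Region Table

City cross-region data mainly serves analysis of population flow and traffic between cities. In the latest table the location status is "not yet left", and each user has exactly one, most recent record.

The latest city cross-region table fields are as follows:

2.4.2 Historical City Cross-Region Table

City cross-region data mainly serves analysis of population flow and traffic between cities. The historical table must capture past changes of location across cities.

The historical city cross-region table fields are as follows: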

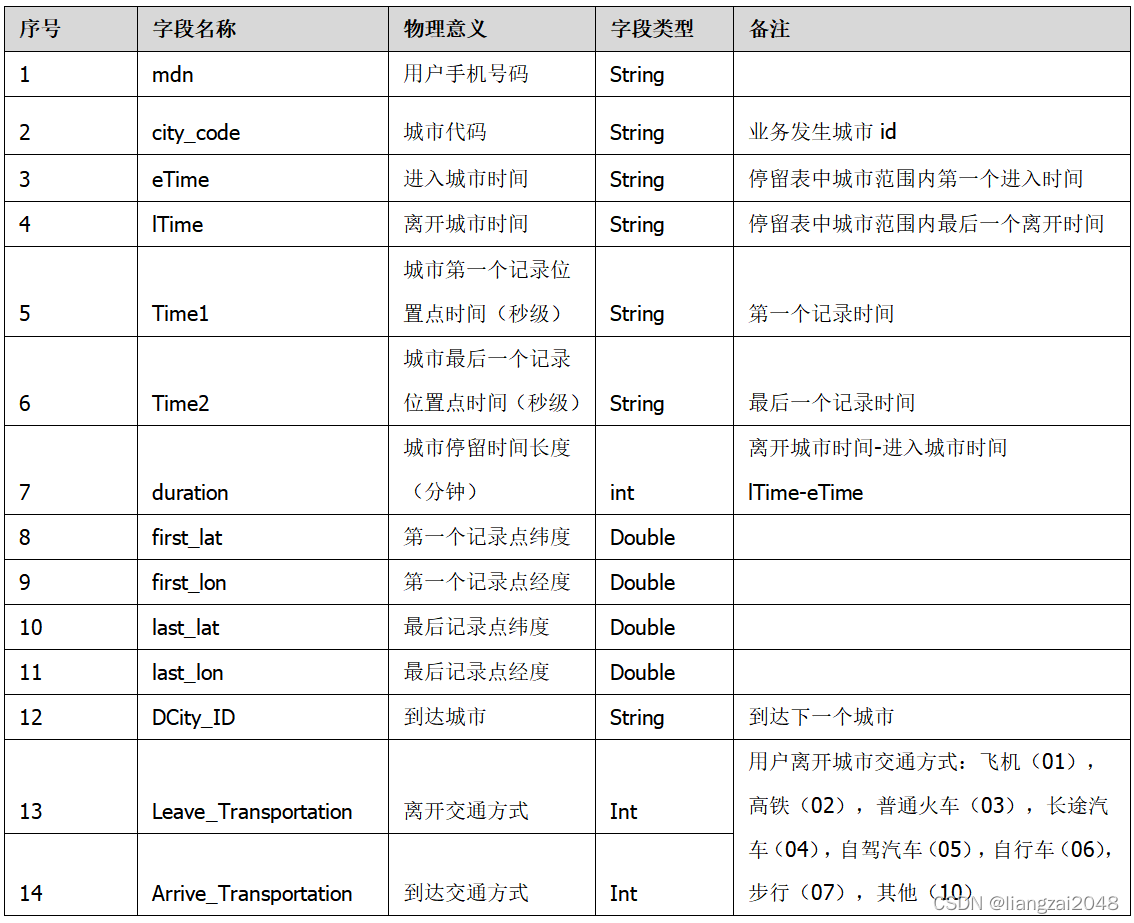

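2.4.3 Latest County Cross-Region Table

The county cross-region table reflects changes in a user's location between counties; the changes are obtained from the county field in the stay table. In the latest table the location status is "not yet left", and each user has exactly one, most recent record.

The latest county cross-region table fields are as follows:

2.4.4 Historical County Cross-Region Table

The county cross-region table reflects changes in a user's location between counties; the changes are obtained from the county field in the stay table. The historical table must capture past changes of location across counties.

The historical county cross-region table fields are as follows: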

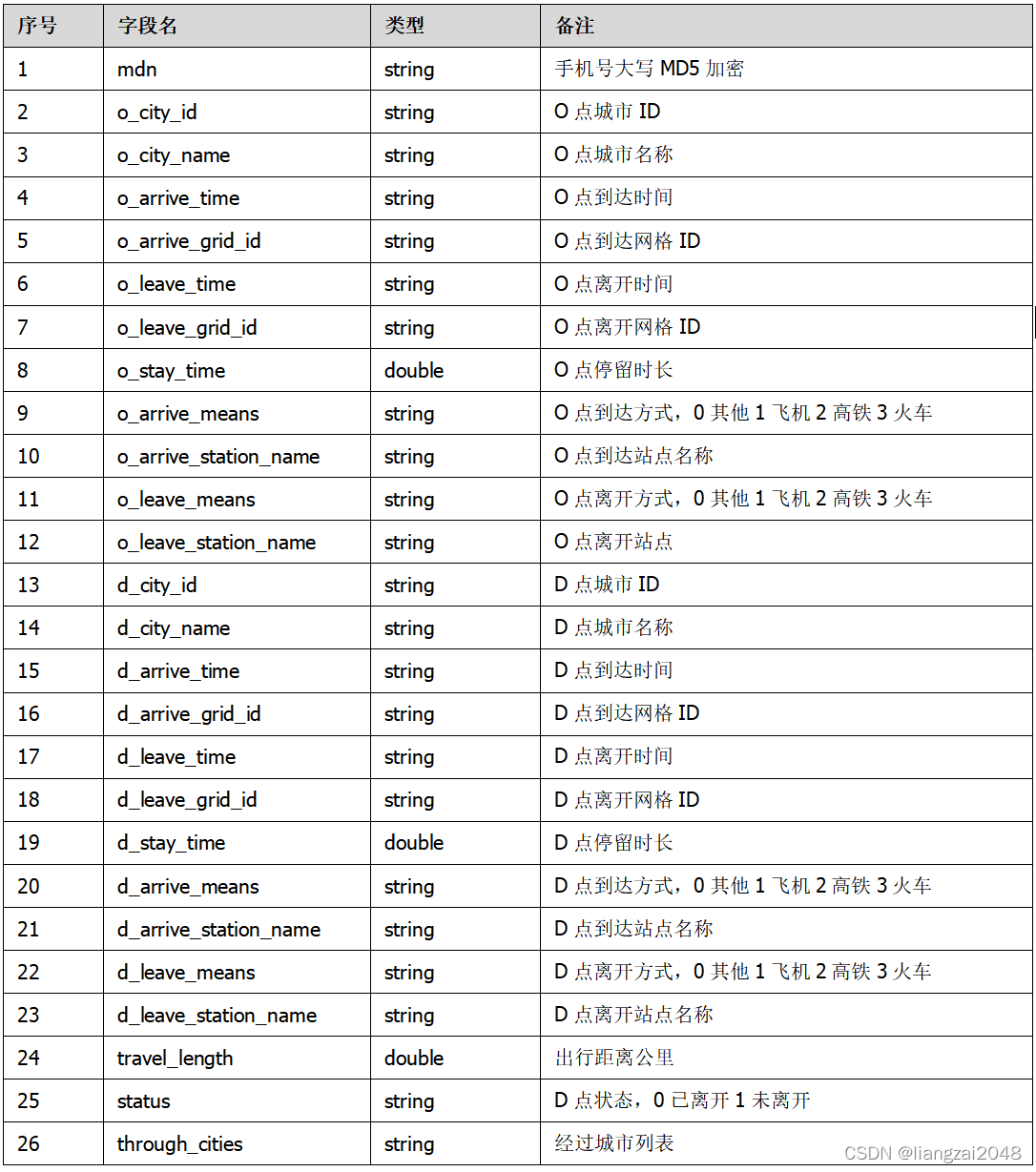

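2.4.5 City OD Table

Records users' trips between cities, excluding cities passed through along the way.

The city OD table fields are as follows:

2.4.6 County OD Table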

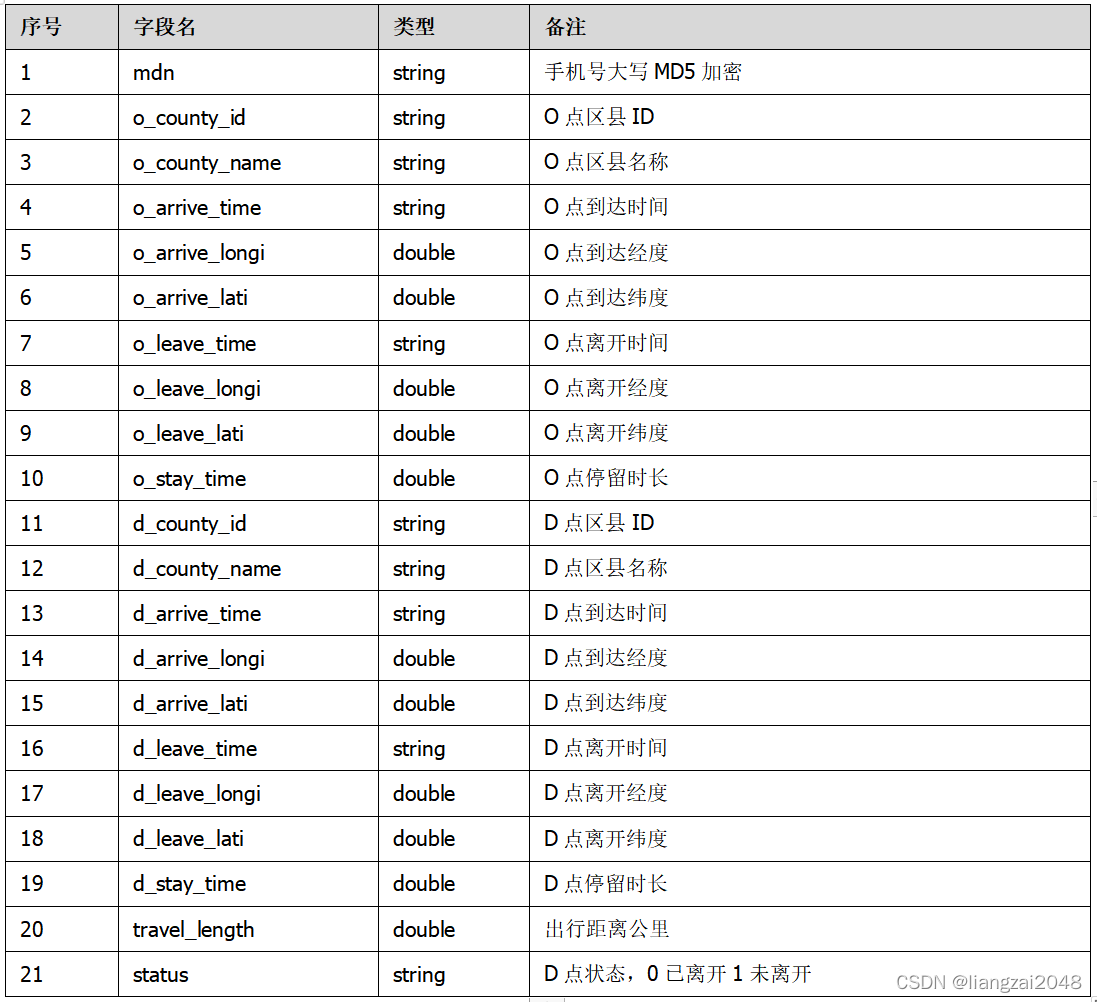

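Records users' trips between counties, excluding counties passed through along the way.

The county OD table fields are as follows:

2.5 Near Real Time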

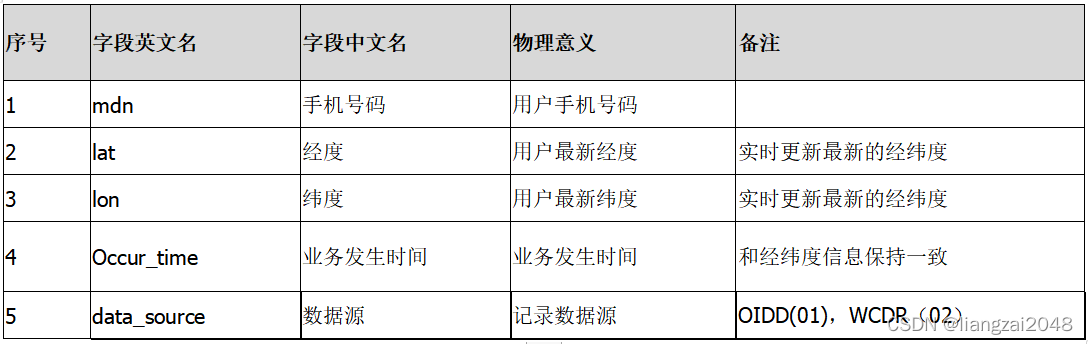

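2.5.1 Near-Real-Time Location Table

Near-real-time location data is one of the base datasets for location services. It fuses the telecom OIDD signaling data with wireless WCDR data to provide users' near-real-time locations.

The structure of the near-real-time location table is as follows: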

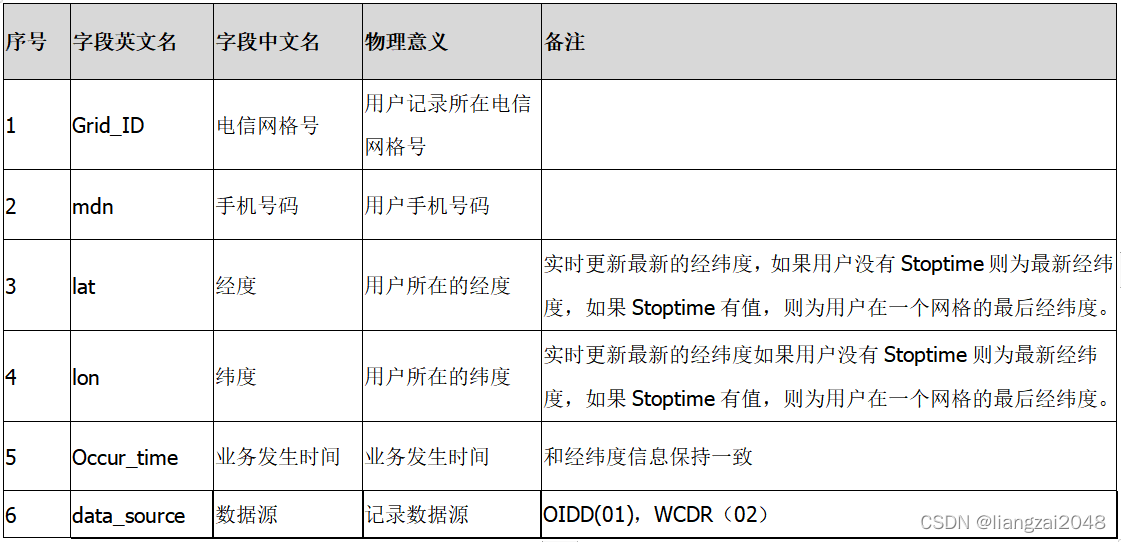

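2.5.2 Near-Real-Time Region Table

From the location data that WCDR and OIDD upload in real time, the user, time, and location are extracted, grid information is matched, and the user's status is determined. The near-real-time region table is mainly used for the population inside a region at the latest point in time and for region stays earlier the same day.

The structure of the near-real-time region table is as follows:

3. Analysis Layer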

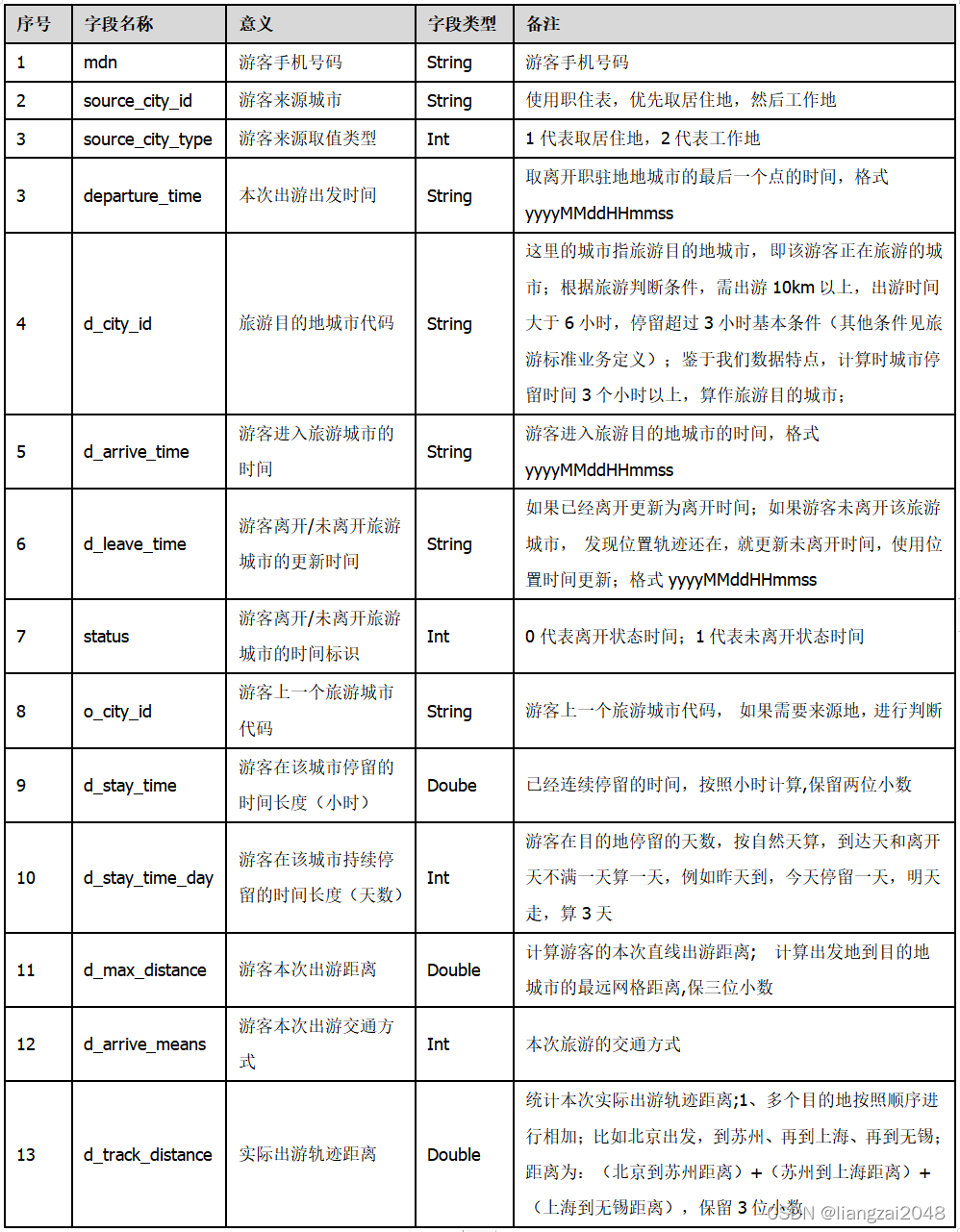

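3.1 Province/City Destination Visitor Analysis Table (City Level)

Following the definition of province/city visitors, an initial screening is performed on the underlying regional fusion table, stay table, home/work table, and OD table to produce this table. It is used for higher-level analysis of province/city visitors and is the base table for destination visitor analysis.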

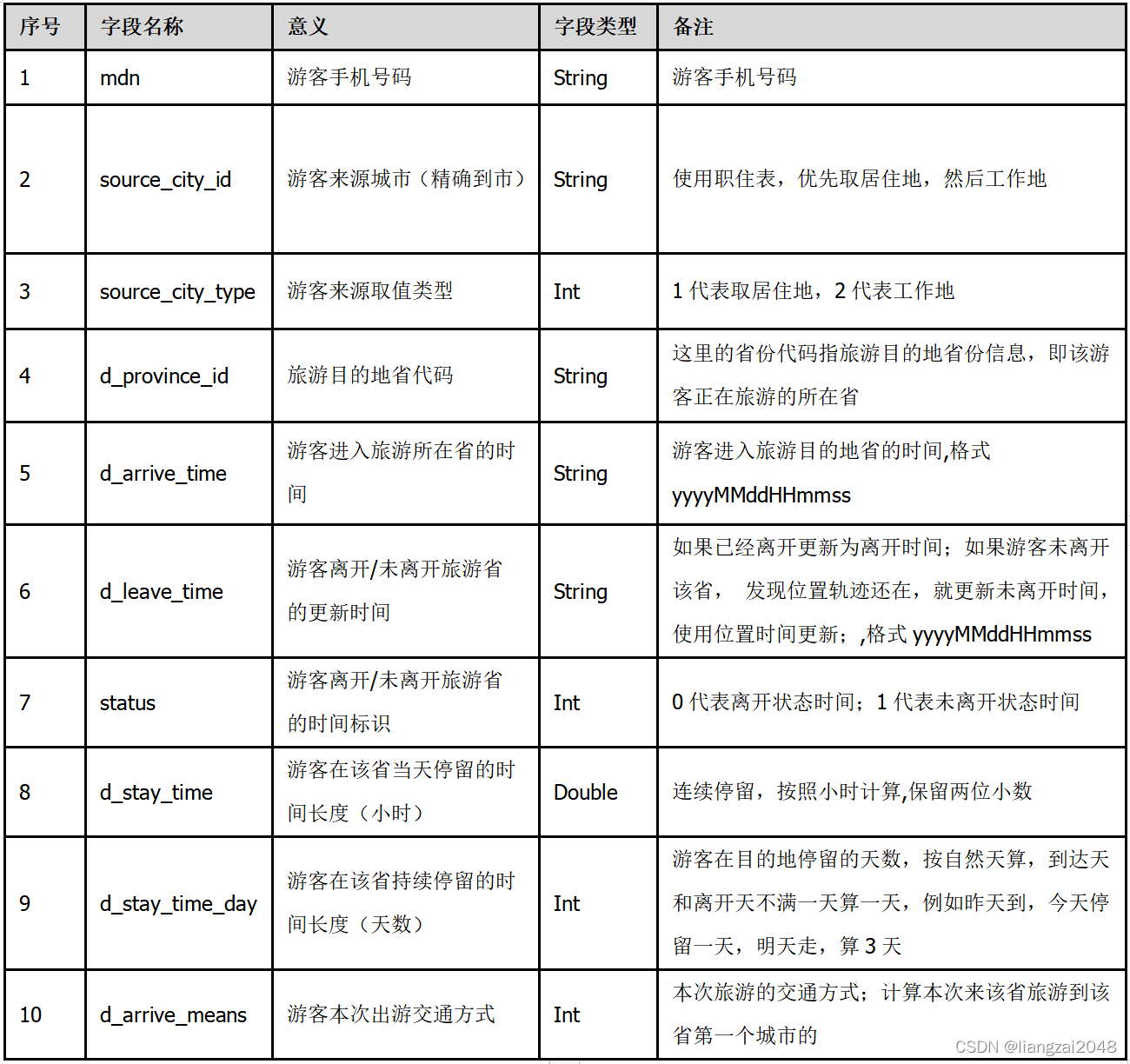

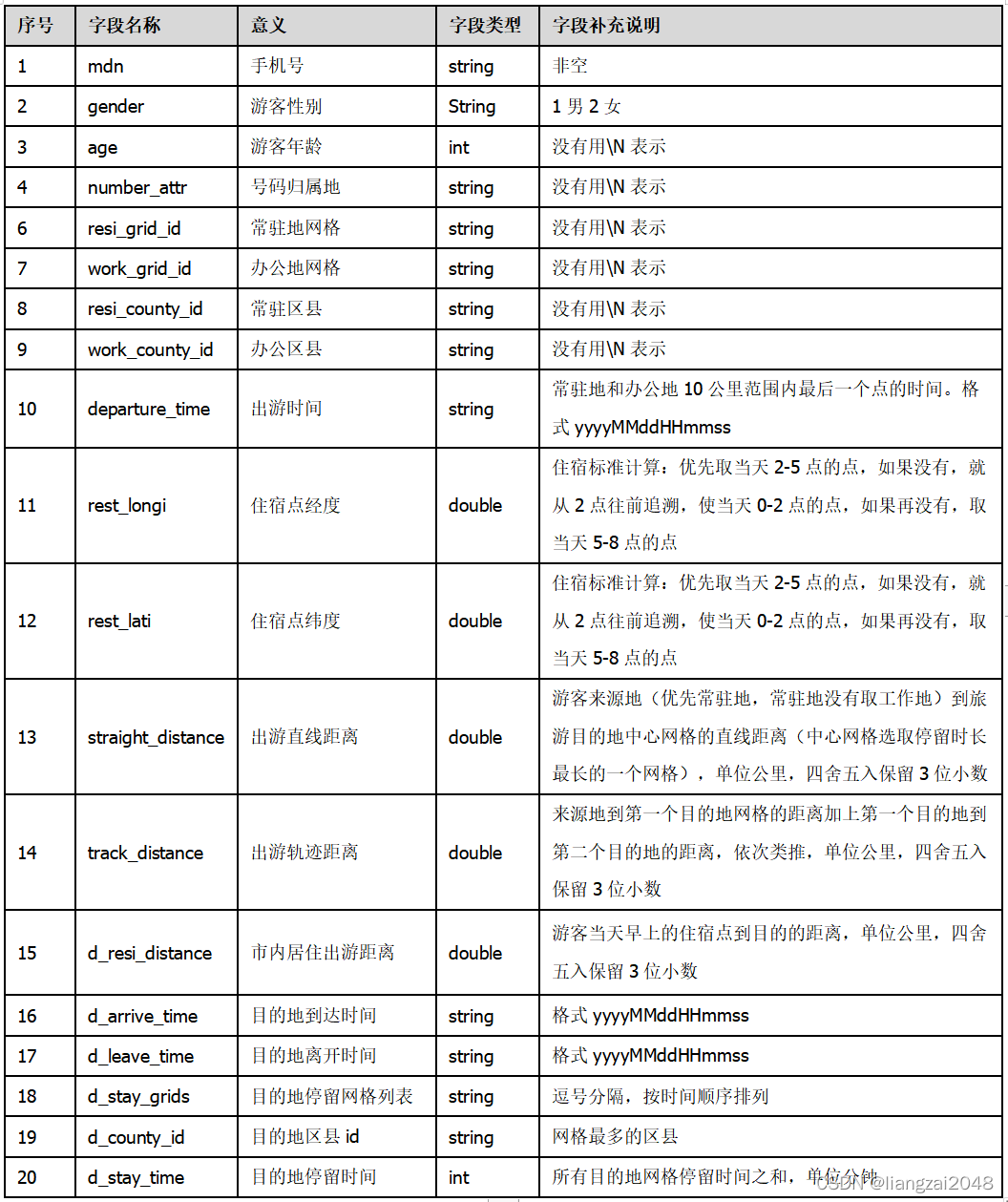

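The table fields are as follows:

3.2 Province/City Destination Visitor Analysis Table (Province Level)

Following the definition of province/city visitors, an initial screening is performed on the underlying regional fusion table, stay table, home/work table, and OD table to produce this table. It is used for higher-level analysis of province/city visitors and is the base table for destination visitor analysis.

The table fields are as follows:

3.3 County-Level Destination Visitor Analysis Table

Following the visitor definition, an initial screening is performed on the underlying regional fusion table, stay table, home/work table, and OD table to produce this table. It is used for higher-level analysis of province/city visitors and is the base table for destination visitor analysis.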

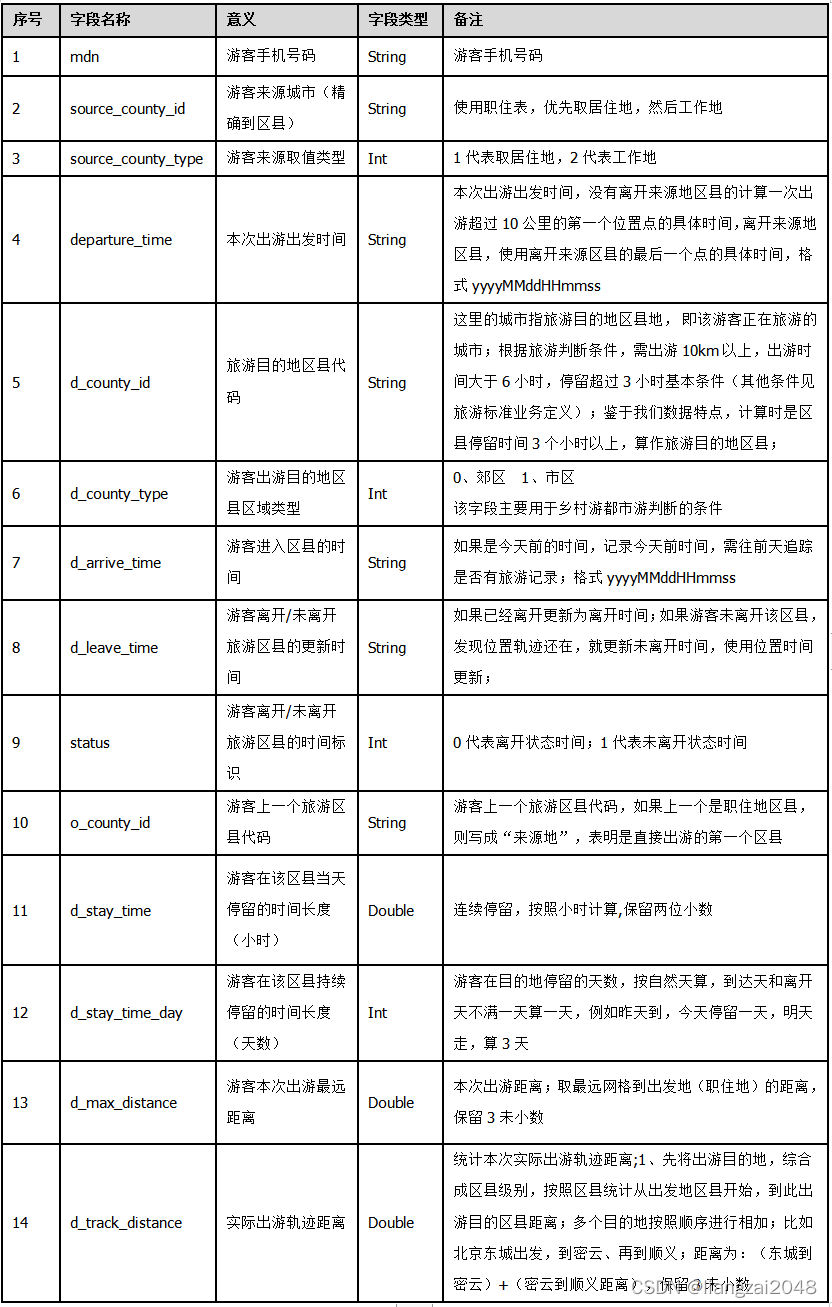

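The table fields are as follows:

3.4 Scenic-Area Destination Visitor Analysis Table

Following the visitor definition, an initial analysis of the underlying regional tables produces this table, which is used for higher-level analysis of scenic-area visitors.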

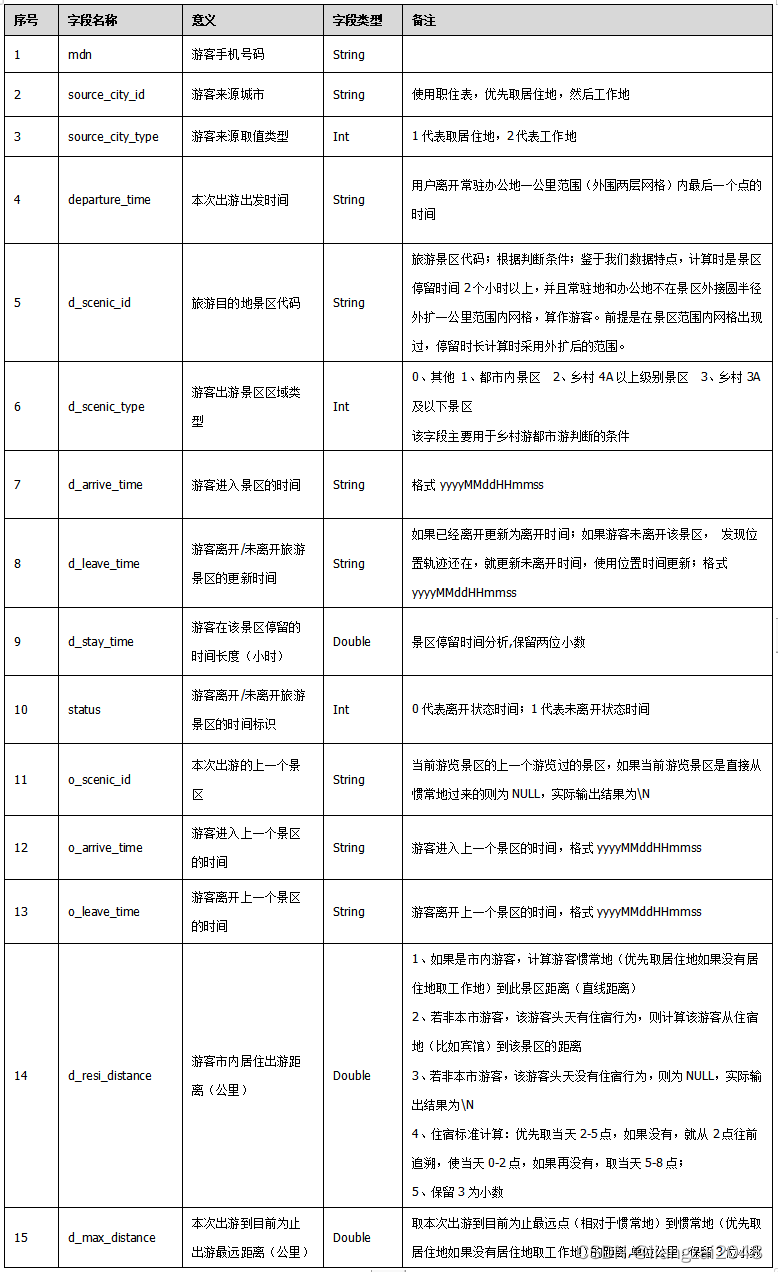

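The table fields are as follows:

3.5 Trip Base Table

A trip is the whole journey in which a user travels ten kilometers away from the usual residence and the workplace and afterwards returns to the residence or workplace.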

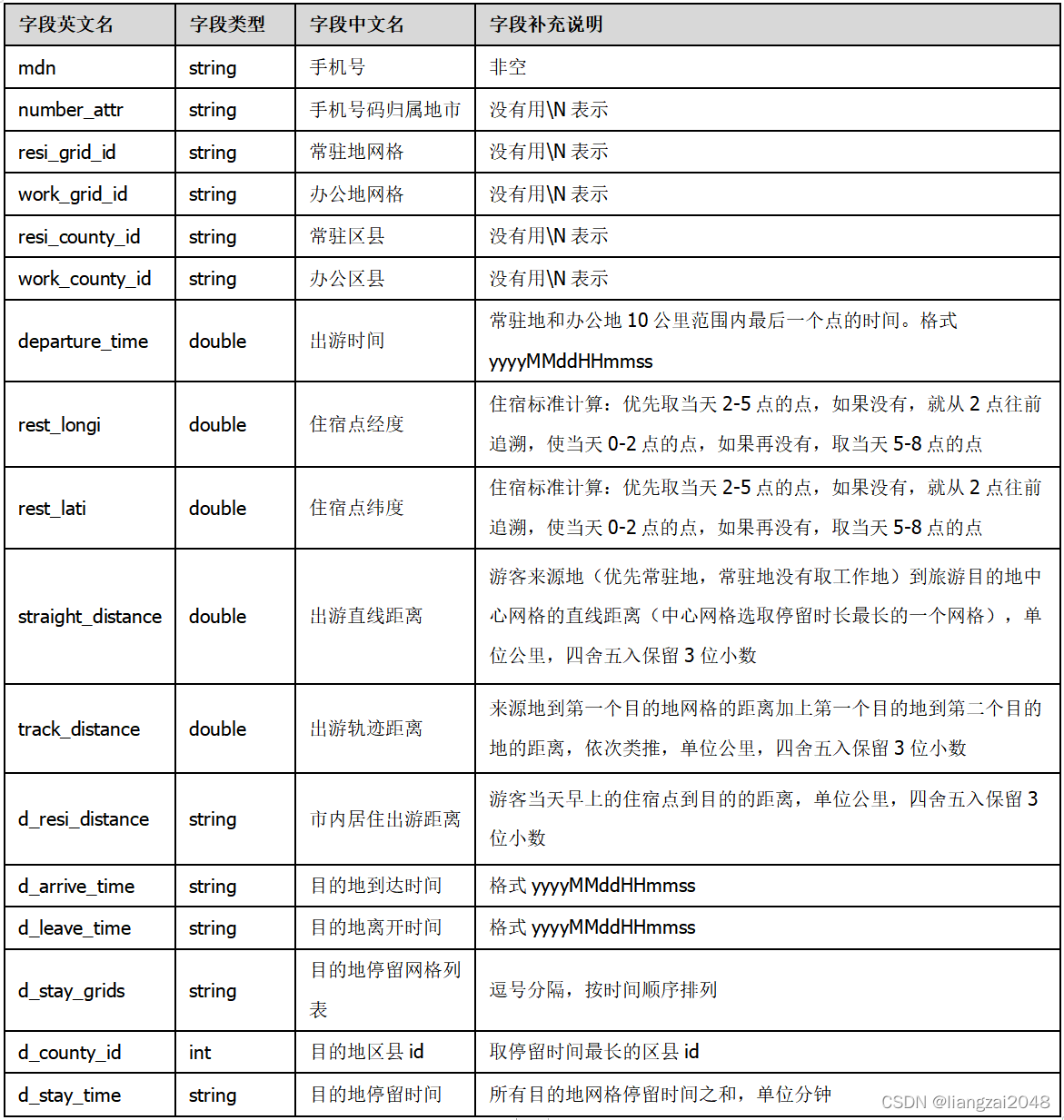

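The table fields are as follows:

3.6 Rural Tourism Trip Table

A trip is classified as a rural tourism trip when its total duration is under 6 hours and, after excluding time spent at scenic areas rated 4A or higher, railway stations, airports, and city core areas, the remaining stay time exceeds two hours.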
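The rule can be expressed as a small predicate. The sketch below is only an illustration under assumptions: durations are given in minutes, stays are pre-classified by place type, and the names `RuralTripRule`, `Place`, `VILLAGE`, and `OTHER` are invented for the example.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of the rural tourism trip rule described above.
final class RuralTripRule {
    enum Place { SCENIC_4A_PLUS, RAILWAY_STATION, AIRPORT, CITY_CORE, VILLAGE, OTHER }

    // Place types whose stay time does not count toward the remaining stay time.
    static final Set<Place> EXCLUDED = EnumSet.of(
            Place.SCENIC_4A_PLUS, Place.RAILWAY_STATION, Place.AIRPORT, Place.CITY_CORE);

    static boolean isRuralTrip(int tripMinutes, Map<Place, Integer> stayMinutes) {
        int remaining = 0;
        for (Map.Entry<Place, Integer> e : stayMinutes.entrySet()) {
            if (!EXCLUDED.contains(e.getKey())) {
                remaining += e.getValue();
            }
        }
        // Total trip under 6 hours and remaining stay time over 2 hours.
        return tripMinutes < 6 * 60 && remaining > 2 * 60;
    }

    public static void main(String[] args) {
        Map<Place, Integer> stays = new EnumMap<>(Place.class);
        stays.put(Place.VILLAGE, 150);
        stays.put(Place.AIRPORT, 60);
        System.out.println(isRuralTrip(300, stays));
    }
}
```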

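The table fields are as follows:

3.7 Visitor Travel History Table

Starting from when the company first has data, this table accumulates historical information on visitors' travel preferences, derives actual travel behavior from location data, and records the basic travel habits of visitors nationwide.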

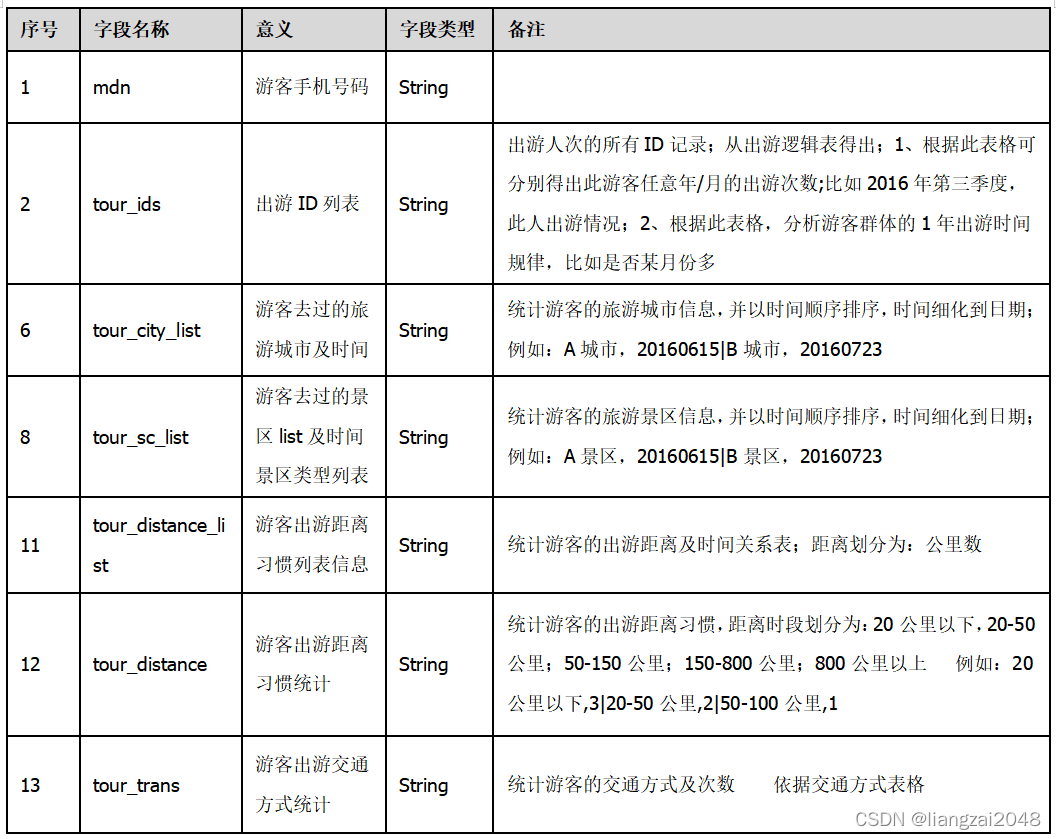

The table fields are as follows:

3.8 User Profile Table

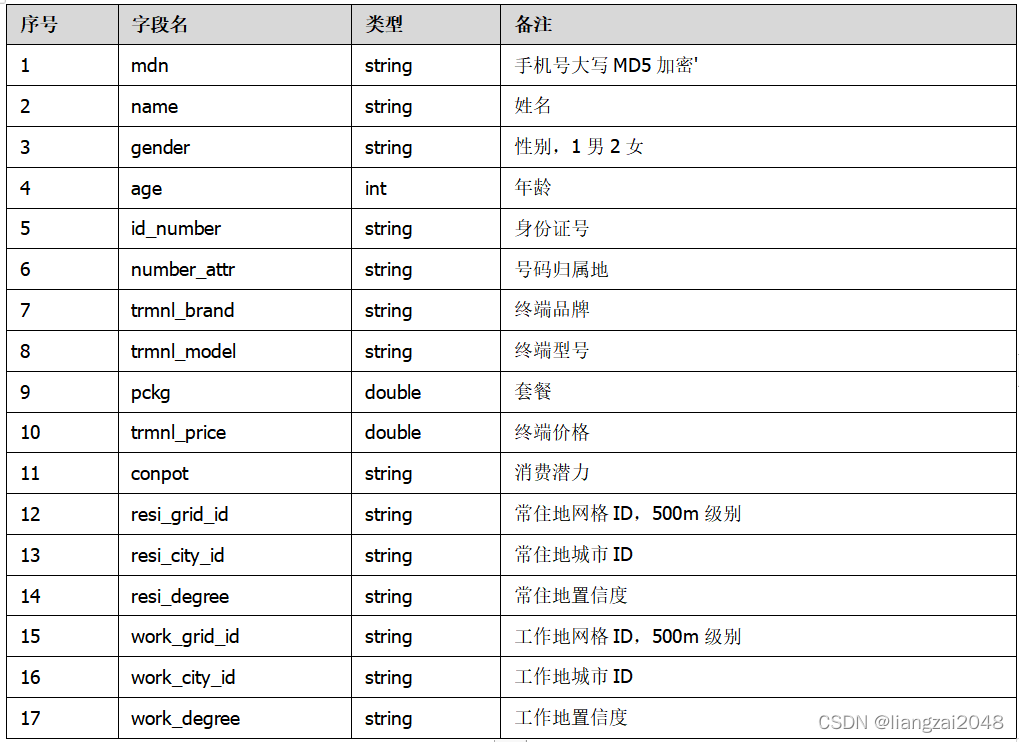

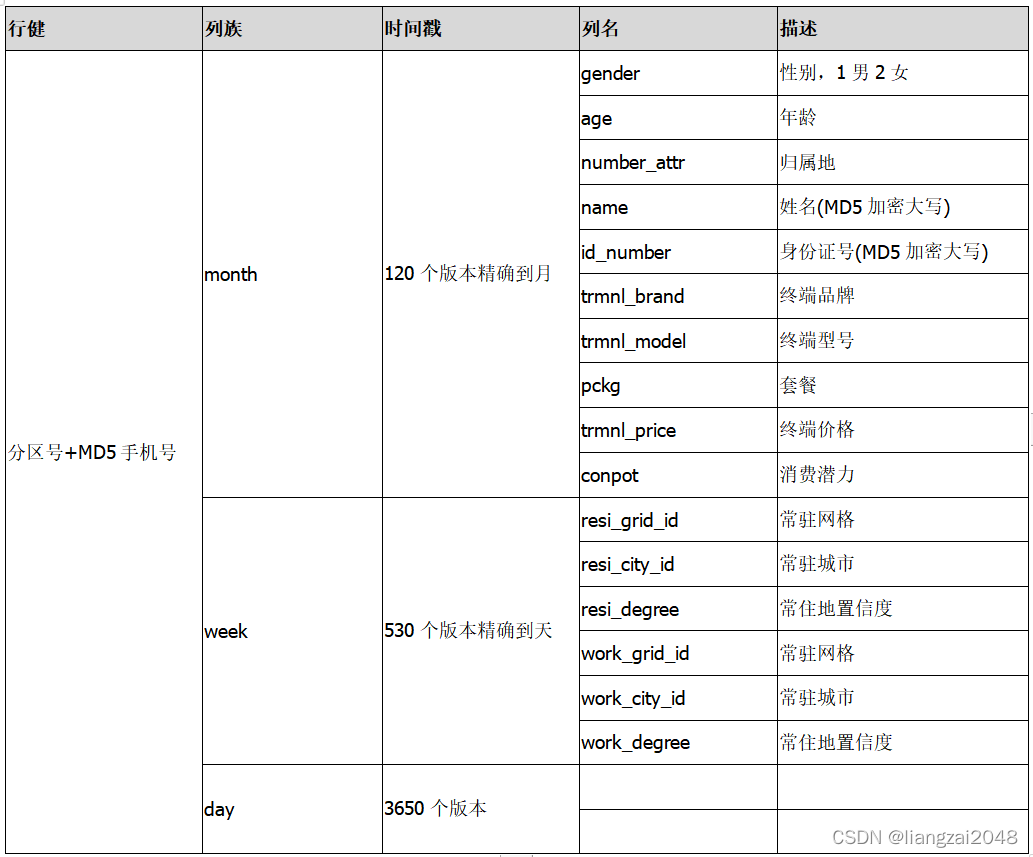

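Records users' basic information, including gender, age, ID number, plan, and handset price.

The table fields are as follows:

3.9 User Tag Table

Records users' basic information, including gender, age, ID number, plan, and handset price.

The table fields are as follows:

4. Application Layer

4.1 Real-Time Query Indicators

Provides a standardized real-time query interface that authorized users can call.

The specific fields are as follows:

4.2 Scenic-Area Indicators

Provides a standardized scenic-area interface that authorized users can call.

The specific fields are as follows:

4.3 Administrative-Region Indicators

Provides a standardized administrative-region interface that authorized users can call.

The specific fields are as follows: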

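Chapter 3

1. Building the Data Warehouse

1.1 Enabling HDFS permission checking and ACLs

1. Ordinary permission checking can only distinguish the owning user, the owning group, and all other users; it cannot grant rights to one specific other user.

2. ACLs allow permissions to be set for each individual user.

Edit hdfs-site.xml to turn on permission checking

    vim /usr/local/soft/hadoop-2.7.6/etc/hadoop/hdfs-site.xml

    <!-- Edit hdfs-site.xml to turn on permission checking and ACLs -->
    <property>
      <name>dfs.permissions</name>
      <value>true</value>
    </property>
    <property>
      <name>dfs.namenode.acls.enabled</name>
      <value>true</value>
    </property>

Fixing insufficient permissions on the Hive tmp directory

1. Edit hive-site.xml

Delete the value of the following three properties:

hive.exec.local.scratchdir

hive.downloaded.resources.dir

hive.querylog.location

2. Replace the hive-site.xml used by Spark

    cp /usr/local/soft/hive-1.2.1/conf/hive-site.xml /usr/local/soft/spark-2.4.5/conf/

Restart Hadoop

    # Restart Hadoop
    stop-all.sh
    start-all.sh
    # Start the Hive metastore service
    nohup hive --service metastore >> metastore.log 2>&1 &

Verify that http://master:50070 is reachable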

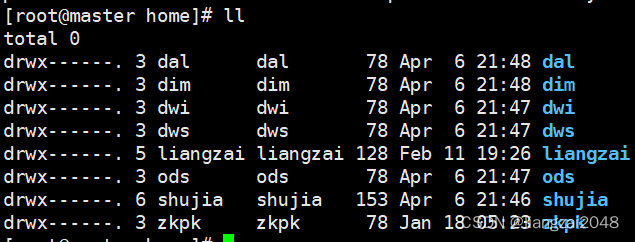

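1.2 Creating a user for each layer

    useradd ods
    passwd ods    # enter 123456 twice when prompted
    useradd dwi
    passwd dwi    # enter 123456 twice when prompted
    useradd dws
    passwd dws    # enter 123456 twice when prompted
    useradd dim
    passwd dim    # enter 123456 twice when prompted
    useradd dal
    passwd dal    # enter 123456 twice when prompted

Commands for granting permissions

Note: these commands are unrelated to the project and are only a test.

    # Change permissions; 755 means other users can read but not modify
    hadoop dfs -chmod 755 /user
    # Grant permissions with an ACL
    hdfs dfs -setfacl -R -m user:ods:r-x /flume/data/dir1
    # Remove ACL permissions
    hdfs dfs -setfacl -R -x user:ods /flume/data/dir1
    # View permissions
    hdfs dfs -getfacl /flume/data/dir1

1.3 Creating a Hive database for each layer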

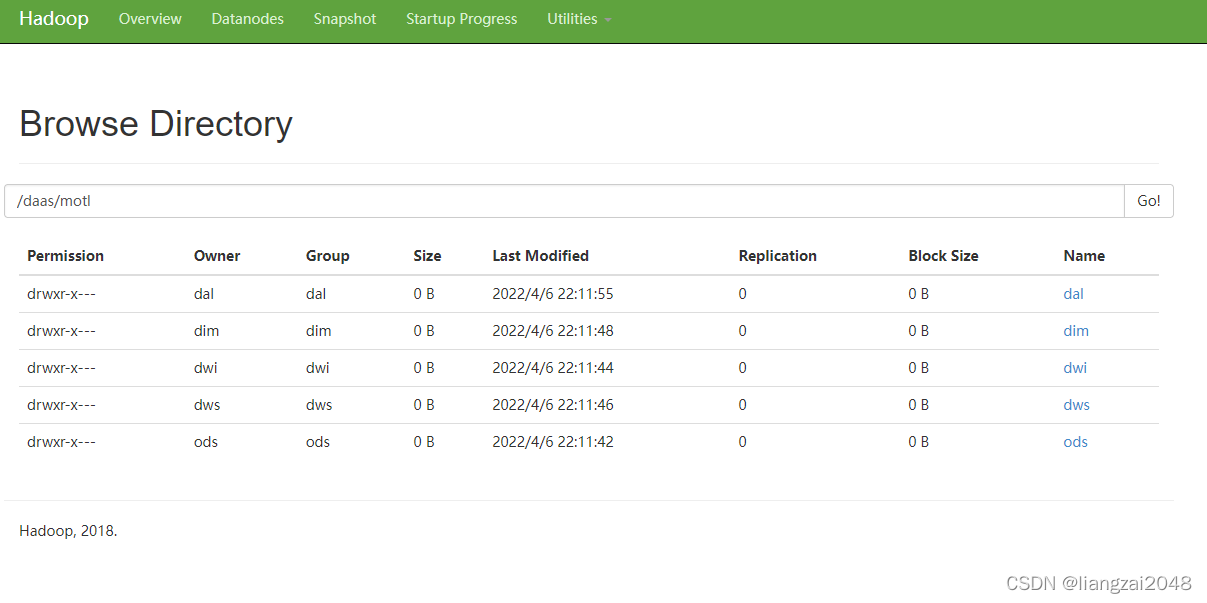

    create database ods;
    create database dwi;
    create database dws;
    create database dim;
    create database dal;

1.4 Creating an HDFS directory for each layer

Ownership of each directory is given to the user that owns the layer.

    hadoop dfs -mkdir -p /daas/motl/ods
    hadoop dfs -mkdir -p /daas/motl/dwi
    hadoop dfs -mkdir -p /daas/motl/dws
    hadoop dfs -mkdir -p /daas/motl/dim
    hadoop dfs -mkdir -p /daas/motl/dal
    # Change ownership and permissions
    hadoop dfs -chown ods:ods /daas/motl/ods
    hadoop dfs -chown dwi:dwi /daas/motl/dwi
    hadoop dfs -chown dws:dws /daas/motl/dws
    hadoop dfs -chown dim:dim /daas/motl/dim
    hadoop dfs -chown dal:dal /daas/motl/dal
    hadoop dfs -chmod 750 /daas/motl/ods
    hadoop dfs -chmod 750 /daas/motl/dwi
    hadoop dfs -chmod 750 /daas/motl/dws
    hadoop dfs -chmod 750 /daas/motl/dim
    hadoop dfs -chmod 750 /daas/motl/dal

1.5 Setting the local Hive directory permissions to 777

    chmod 777 /usr/local/soft/hive-1.2.1/
    # Each time a different user enters Hive, the tmp directory must first be deleted as root
    # Set permissions on the tmp directory
    chmod 777 tmp

Enter Hive as the ods user to test:

    create table ods.student(
        id string,
        name string,
        age int,
        gender string,
        clazz string
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    STORED AS textfile
    location '/daas/motl/ods/student/';

    # Upload data
    hadoop dfs -put students.txt /daas/motl/ods/student
    # Set permissions on the HDFS /tmp directory
    hadoop dfs -chmod 777 /tmp
    # Grant access when another user needs to use this table
    hdfs dfs -setfacl -m user:dwi:r-x /daas/motl/ods
    hdfs dfs -setfacl -R -m user:dwi:r-x /daas/motl/ods/student/

2. Data Collection (performed as the ods user)

2.1 OIDD data collection

Flume monitors a directory on the base station back-end server in real time, collects the data as it arrives, and saves it to HDFS in the ODS layer.

1. Create the table in Hive

    CREATE EXTERNAL TABLE IF NOT EXISTS ods.ods_oidd(
        mdn string comment 'phone number'
        ,start_time string comment 'service time'
        ,county_id string comment 'county code'
        ,longi string comment 'longitude'
        ,lati string comment 'latitude'
        ,bsid string comment 'base station ID'
        ,grid_id string comment 'grid ID'
        ,biz_type string comment 'service type'
        ,event_type string comment 'event type'
        ,data_source string comment 'data source'
    )
    comment 'oidd'
    PARTITIONED BY (
        day_id string comment 'day partition'
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/ods/ods_oidd';

2. Upload the data to the server

Upload as the ods user

Upload to /home/ods

3. Use Flume to monitor the base station log directory and collect data

    # As root
    cd /home/ods
    chmod 777 oidd
    # As ods
    cd /home/ods

    # File name: flume-oss-oidd-to-hdfs.properties
    # "a" is the name given to the agent
    # Name the source component r1
    a.sources = r1
    # Name the sink component k1
    a.sinks = k1
    # Name the channel component c1
    a.channels = c1
    # Spooling directory source properties
    a.sources.r1.type = spooldir
    a.sources.r1.spoolDir = /home/ods/oidd
    a.sources.r1.fileHeader = true
    a.sources.r1.interceptors = i1
    a.sources.r1.interceptors.i1.type = timestamp
    # Sink type
    a.sinks.k1.type = hdfs
    a.sinks.k1.hdfs.path = /daas/motl/ods/ods_oidd/day_id=%Y%m%d
    # File name prefix
    a.sinks.k1.hdfs.filePrefix = oidd
    # Roll the file after this many bytes
    a.sinks.k1.hdfs.rollSize = 70240000
    # Roll the file after this many events
    a.sinks.k1.hdfs.rollCount = 600000
    # Write a plain stream: output exactly what comes in
    a.sinks.k1.hdfs.fileType = DataStream
    # Write format: text
    a.sinks.k1.hdfs.writeFormat = text
    # File name suffix
    a.sinks.k1.hdfs.fileSuffix = .txt
    # Channel
    a.channels.c1.type = memory
    a.channels.c1.capacity = 10000
    # Number of events the sink takes from the channel per transaction
    a.channels.c1.transactionCapacity = 1000
    # Wire the components together
    a.sources.r1.channels = c1
    a.sinks.k1.channel = c1

4. Start Flume

    nohup flume-ng agent -n a -f ./flume-oss-oidd-to-hdfs.properties -Dflume.root.logger=DEBUG,console &

5. View the results

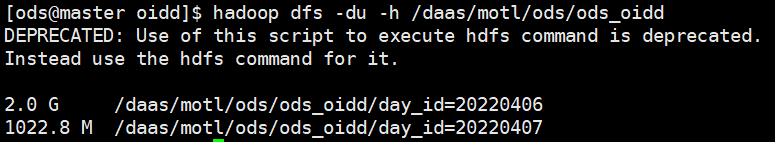

    # Check whether Flume is running successfully
    tail -f nohup.out
    hadoop dfs -du -h /daas/motl/ods/ods_oidd/day_id=20220406
    hadoop dfs -du -h /daas/motl/ods/ods_oidd

6. Add partitions

    -- Add a single partition
    -- alter table ods.ods_oidd add if not exists partition(day_id='20220407');
    -- Refresh partitions: scans the HDFS directories automatically and adds all partitions
    MSCK REPAIR TABLE ods.ods_oidd;

2.2 Collecting data from the CRM system

1. Create the crm database in MySQL

    create database crm default character set utf8 default collate utf8_general_ci;

2. Create the tables

User profile table

    CREATE TABLE `usertag` (
        mdn varchar(255)
        ,name varchar(255)
        ,gender varchar(255)
        ,age int(10)
        ,id_number varchar(255)
        ,number_attr varchar(255)
        ,trmnl_brand varchar(255)
        ,trmnl_price varchar(255)
        ,packg varchar(255)
        ,conpot varchar(255)
        ,resi_grid_id varchar(255)
        ,resi_county_id varchar(255)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8;

    # Load data
    LOAD DATA LOCAL INFILE 'usertag.txt' INTO TABLE usertag FIELDS TERMINATED BY ',' ;

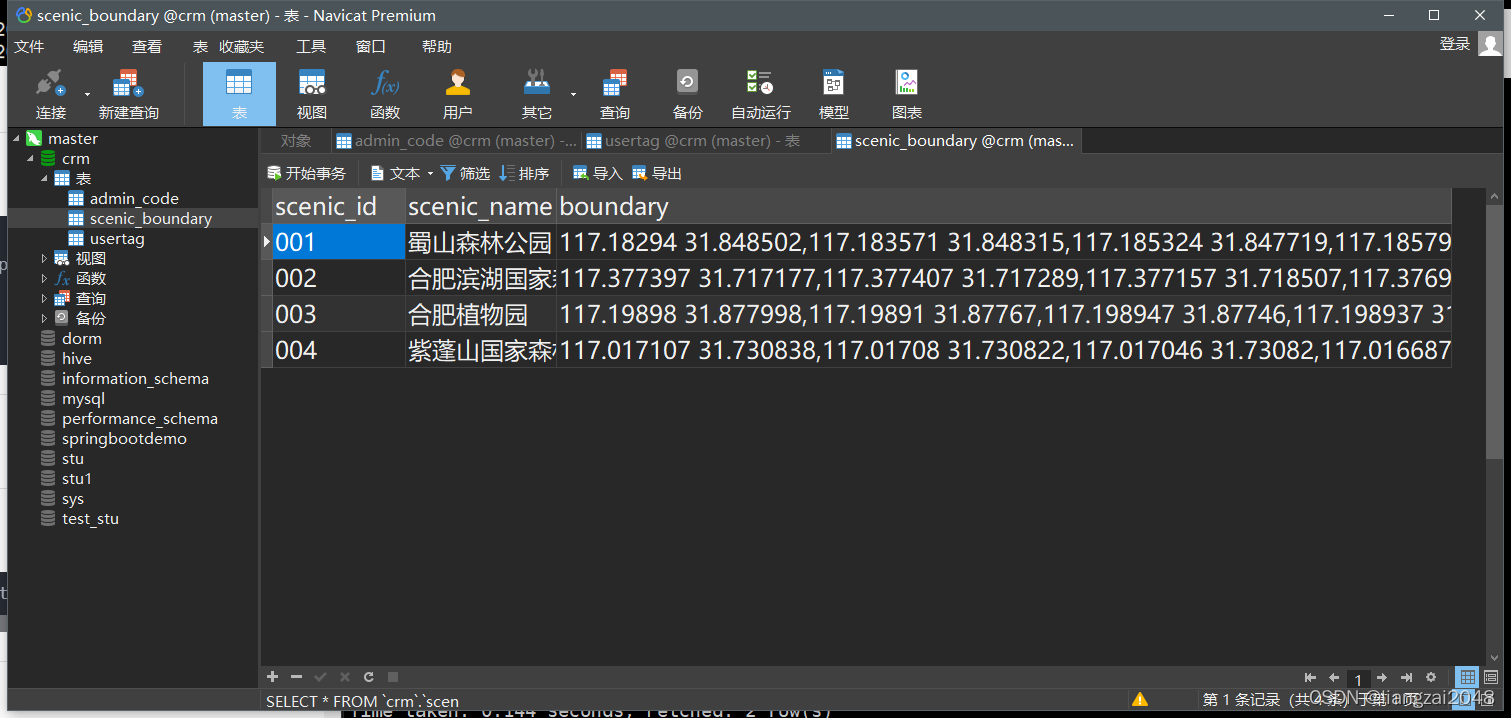

Scenic-area configuration table

    CREATE TABLE scenic_boundary (
        scenic_id varchar(255) ,
        scenic_name varchar(255) ,
        boundary text
    ) ;

    # Load data
    LOAD DATA LOCAL INFILE 'scenic_boundary.txt' INTO TABLE scenic_boundary FIELDS TERMINATED BY '|' ;

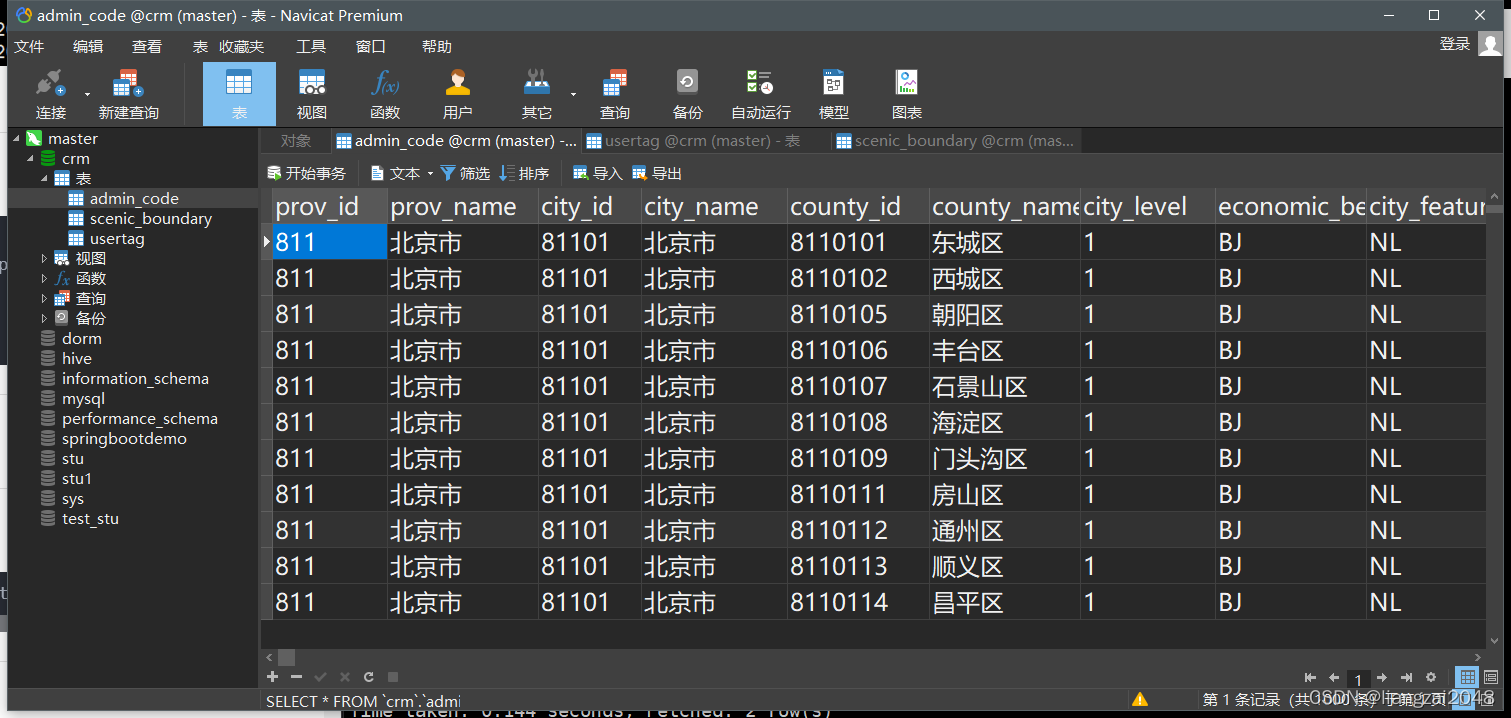

Administrative-region configuration table

    CREATE TABLE admin_code (
        prov_id varchar(255)
        ,prov_name varchar(255)
        ,city_id varchar(255)
        ,city_name varchar(255)
        ,county_id varchar(255)
        ,county_name varchar(255)
        ,city_level varchar(255)
        ,economic_belt varchar(255)
        ,city_feature1 varchar(255)
    ) ;

    # Load data
    LOAD DATA LOCAL INFILE 'ssxdx.txt' INTO TABLE admin_code FIELDS TERMINATED BY ',' ;

3. First create the tables in the Hive ods layer

User profile table

    CREATE EXTERNAL TABLE IF NOT EXISTS ods.ods_usertag_d (
        mdn string comment 'phone number, MD5-hashed, uppercase'
        ,name string comment 'name'
        ,gender string comment 'gender, 1 male, 2 female'
        ,age string comment 'age'
        ,id_number string comment 'ID number'
        ,number_attr string comment 'number home location'
        ,trmnl_brand string comment 'handset brand'
        ,trmnl_price string comment 'handset price'
        ,packg string comment 'plan'
        ,conpot string comment 'spending potential'
        ,resi_grid_id string comment 'residence grid'
        ,resi_county_id string comment 'residence county'
    )
    comment 'user profile table'
    PARTITIONED BY (
        day_id string comment 'day partition'
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/ods/ods_usertag_d';

    -- Add a partition
    alter table ods.ods_usertag_d add if not exists partition(day_id='20220409') ;

Scenic-area configuration table

    CREATE EXTERNAL TABLE IF NOT EXISTS ods.ods_scenic_boundary (
        scenic_id string comment 'scenic area id'
        ,scenic_name string comment 'scenic area name'
        ,boundary string comment 'scenic area boundary'
    )
    comment 'scenic-area configuration table'
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/ods/ods_scenic_boundary';

Administrative-region configuration table

    CREATE EXTERNAL TABLE IF NOT EXISTS ods.ods_admincode (
        prov_id string comment 'province id'
        ,prov_name string comment 'province name'
        ,city_id string comment 'city id'
        ,city_name string comment 'city name'
        ,county_id string comment 'county id'
        ,county_name string comment 'county name'
        ,city_level string comment 'city tier: 1 for tier one, 2 for tier two, and so on'
        ,economic_belt string comment 'BJ capital economic belt, ZSJ Pearl River Delta, CSJ Yangtze River Delta, DB Northeast, HZ Central China, HB North China, HD East China, HN South China, XB Northwest, XN Southwest'
        ,city_feature1 string comment 'NL inland, YH coastal'
    )
    comment 'administrative-region configuration table'
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/ods/ods_admincode';

4. Collect the data with DataX

User profile table

Write the DataX job file

datax-crm-usertag-mysql-to-hive.json

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "connection": [
                  { "jdbcUrl": ["jdbc:mysql://master:3306/crm"], "table": ["usertag"] }
                ],
                "column": ["*"],
                "password": "123456",
                "username": "root"
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "defaultFS": "hdfs://master:9000",
                "fileType": "text",
                "path": "/daas/motl/ods/ods_usertag_d/day_id=${day_id}",
                "fileName": "data",
                "column": [
                  { "name": "mdn", "type": "STRING" },
                  { "name": "name", "type": "STRING" },
                  { "name": "gender", "type": "STRING" },
                  { "name": "age", "type": "INT" },
                  { "name": "id_number", "type": "STRING" },
                  { "name": "number_attr", "type": "STRING" },
                  { "name": "trmnl_brand", "type": "STRING" },
                  { "name": "trmnl_price", "type": "STRING" },
                  { "name": "packg", "type": "STRING" },
                  { "name": "conpot", "type": "STRING" },
                  { "name": "resi_grid_id", "type": "STRING" },
                  { "name": "resi_county_id", "type": "STRING" }
                ],
                "writeMode": "append",
                "fieldDelimiter": "\t"
              }
            }
          }
        ],
        "setting": {
          "errorLimit": { "percentage": 0, "record": 0 },
          "speed": { "channel": 4, "record": 1000 }
        }
      }
    }

Run the job

    datax.py -p "-Dday_id=20220406" datax-crm-usertag-mysql-to-hive.json

Administrative-region configuration table

Write the job file

datax-crm-admin-code-mysql-to-hive.json

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "connection": [
                  { "jdbcUrl": ["jdbc:mysql://master:3306/crm"], "table": ["admin_code"] }
                ],
                "column": ["*"],
                "password": "123456",
                "username": "root"
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "defaultFS": "hdfs://master:9000",
                "fileType": "text",
                "path": "/daas/motl/ods/ods_admincode",
                "fileName": "data",
                "column": [
                  { "name": "prov_id", "type": "STRING" },
                  { "name": "prov_name", "type": "STRING" },
                  { "name": "city_id", "type": "STRING" },
                  { "name": "city_name", "type": "STRING" },
                  { "name": "county_id", "type": "STRING" },
                  { "name": "county_name", "type": "STRING" },
                  { "name": "city_level", "type": "STRING" },
                  { "name": "economic_belt", "type": "STRING" },
                  { "name": "city_feature1", "type": "STRING" }
                ],
                "writeMode": "append",
                "fieldDelimiter": "\t"
              }
            }
          }
        ],
        "setting": {
          "errorLimit": { "percentage": 0, "record": 0 },
          "speed": { "channel": 1, "record": 1000 }
        }
      }
    }

Run the job

    datax.py datax-crm-admin-code-mysql-to-hive.json

Scenic-area configuration table

Write the job file

datax-crm-scenic_boundary-mysql-to-hive.json

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "connection": [
                  { "jdbcUrl": ["jdbc:mysql://master:3306/crm"], "table": ["scenic_boundary"] }
                ],
                "column": ["*"],
                "password": "123456",
                "username": "root"
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "defaultFS": "hdfs://master:9000",
                "fileType": "text",
                "path": "/daas/motl/ods/ods_scenic_boundary",
                "fileName": "data",
                "column": [
                  { "name": "scenic_id", "type": "STRING" },
                  { "name": "scenic_name", "type": "STRING" },
                  { "name": "boundary", "type": "STRING" }
                ],
                "writeMode": "append",
                "fieldDelimiter": "\t"
              }
            }
          }
        ],
        "setting": {
          "errorLimit": { "percentage": 0, "record": 0 },
          "speed": { "channel": 1, "record": 1000 }
        }
      }
    }

Run the job

    datax.py datax-crm-scenic_boundary-mysql-to-hive.json

Chapter 4

Location Fusion Table

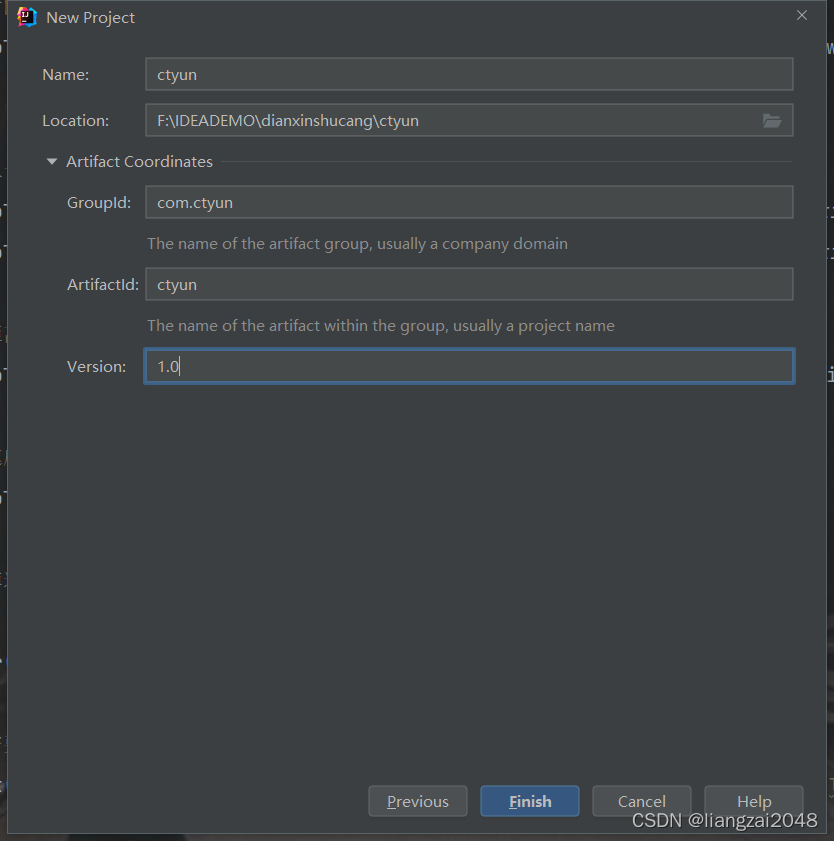

Create a Maven project in IDEA

Create the ods layer

Create the dwi layer

Create the common module

Upload the local ods scripts to the resources directory of ods

Import the Spark dependency in the parent pom (matching the company's Spark version)

    <properties>
        <scala.version>2.11.12</scala.version>
        <spark.version>2.4.5</spark.version>
        <scala.library.version>2.11</scala.library.version>
        <java.version>1.8</java.version>
    </properties>

    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-library</artifactId>
                <version>${scala.version}</version>
            </dependency>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-compiler</artifactId>
                <version>${scala.version}</version>
            </dependency>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-reflect</artifactId>
                <version>${scala.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-sql_${scala.library.version}</artifactId>
                <version>${spark.version}</version>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <build>
        <pluginManagement>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>3.1</version>
                    <configuration>
                        <source>${java.version}</source>
                        <target>${java.version}</target>
                    </configuration>
                </plugin>
                <plugin>
                    <groupId>org.scala-tools</groupId>
                    <artifactId>maven-scala-plugin</artifactId>
                    <version>2.15.2</version>
                    <executions>
                        <execution>
                            <goals>
                                <goal>compile</goal>
                                <goal>testCompile</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </pluginManagement>
    </build>

Import the ctyun Scala dependencies in the dwi layer

    <dependencies>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-compiler</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-reflect</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_${scala.library.version}</artifactId>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
            </plugin>
        </plugins>
    </build>

After the dependencies are imported, create a Spark file to test whether the build environment works.

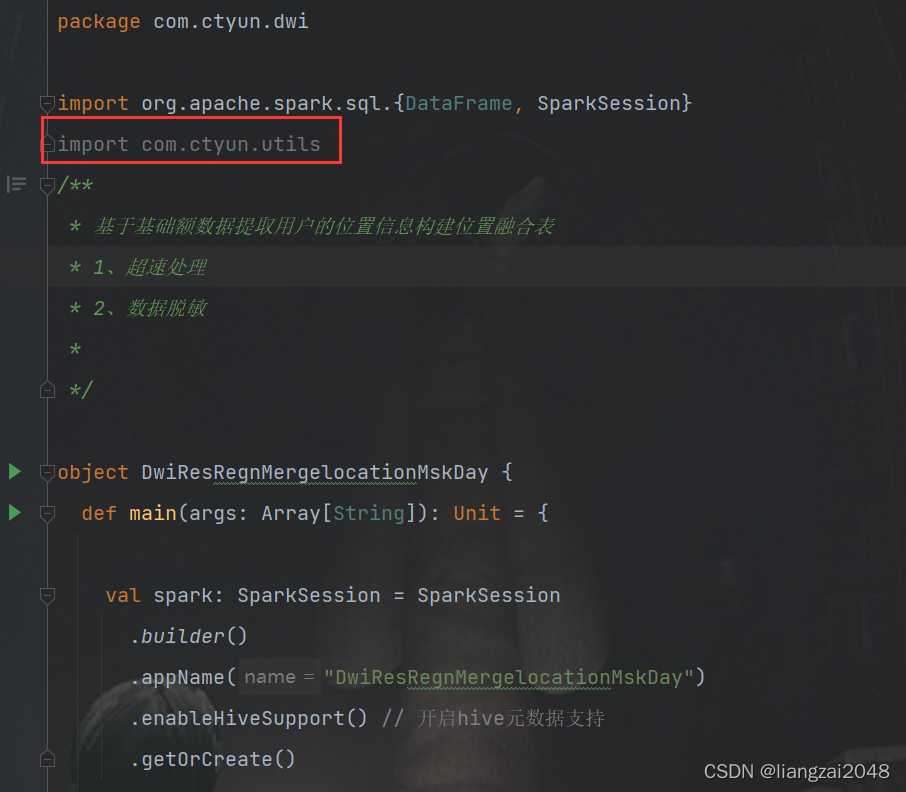

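Create the DwiResRegnMergelocationMskDay class in the dwi layer

new package

com.ctyun.dwi

Build the location fusion table by extracting users' location information from the base data:

- Overspeed handling

- Data masking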

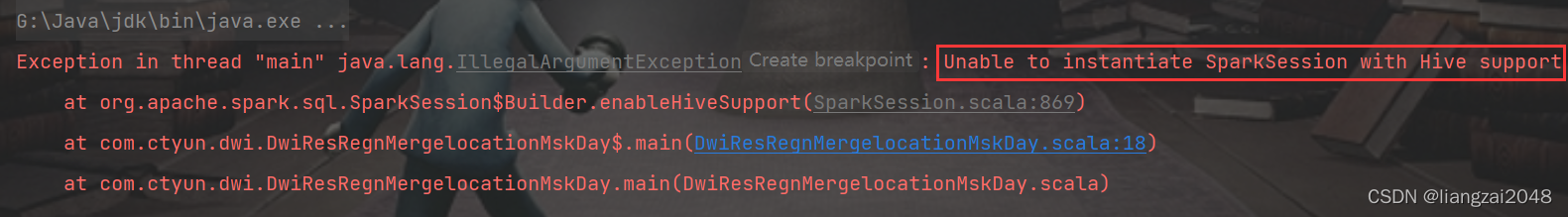

    package com.ctyun.dwi

    import org.apache.spark.sql.{DataFrame, SparkSession}

    /**
     * Build the location fusion table by extracting users' location information from the base data
     * 1. Overspeed handling
     * 2. Data masking
     */
    object DwiResRegnMergelocationMskDay {
      def main(args: Array[String]): Unit = {
        val spark: SparkSession = SparkSession
          .builder()
          .appName("DwiResRegnMergelocationMskDay")
          .enableHiveSupport() // enable Hive metastore support
          .getOrCreate()

        /**
         * Read the oidd table from Hive
         */
        val oiddDF: DataFrame = spark.table("ods.ods_oidd")
        oiddDF.show()
      }
    }

The code cannot run locally because the Hive configuration files and dependencies cannot be found there.

The only option is to write the code or SQL, package it, and run it on the cluster.

Import implicit conversions

    import spark.implicits._
    import org.apache.spark.sql.functions._

Computing the distance between two coordinates

Create the Geography Java class in common

    package com.ctyun.utils;

    public class Geography {
        /**
         * Earth radius in meters
         */
        private static final double EARTH_RADIUS = 6378137;

        /**
         * Compute the distance between two longitude/latitude points
         *
         * @param longi1 longitude 1
         * @param lati1  latitude 1
         * @param longi2 longitude 2
         * @param lati2  latitude 2
         * @return the distance in meters
         */
        public static double calculateLength(double longi1, double lati1, double longi2, double lati2) {
            double lat21 = lati1 * Math.PI / 180.0;
            double lat22 = lati2 * Math.PI / 180.0;
            double a = lat21 - lat22;
            double b = (longi1 - longi2) * Math.PI / 180.0;
            double sa2 = Math.sin(a / 2.0);
            double sb2 = Math.sin(b / 2.0);
            double d = 2 * EARTH_RADIUS * Math.asin(Math.sqrt(sa2 * sa2 + Math.cos(lat21) * Math.cos(lat22) * sb2 * sb2));
            return Math.abs(d);
        }
    }

Add the common dependency to the dwi layer

- Parent pom

      <dependency>
          <groupId>com.ctyun</groupId>
          <artifactId>common</artifactId>
          <version>1.0</version>
      </dependency>

- dwi layer

      <dependency>
          <groupId>com.ctyun</groupId>
          <artifactId>common</artifactId>
      </dependency>

- DwiResRegnMergelocationMskDay class

Clustering along the timeline

For example, when someone commutes from home to the office and back, the start and end positions are the same place, so simply subtracting the start from the end does not work. Records have to be clustered by consecutive time in the same grid instead of being grouped by location alone.

Create a table named merge in Hive as root for testing

    CREATE EXTERNAL TABLE IF NOT EXISTS merge (
        mdn string
        ,sdate string
        ,grid string
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/data/merge';

Test data for time clustering

    17600195923,212111201532,19560032075040
    17600195923,212111201533,19560032075040
    17600195923,212111201534,19560032075041
    17600195923,212111201535,19560032075042
    17600195923,212111201536,19560032075043
    17600195923,212111201537,19560032075040
    17600195923,212111201538,19560032075040
    17600195923,212111201539,19560032075040

Upload the data

    hadoop dfs -put a.txt /data/merge

Test with spark-sql

Hive runs on MapReduce underneath.

spark-sql runs on Spark underneath, which is faster.

1. Get the previous record.

2. If the current position equals the previous record's position, mark 0; otherwise mark 1.

3. Take the running sum of flag to assign records to different clusters.

    select mdn, grid, clazz, min(sdate) as start_date, max(sdate) as end_date
    from (
        select *, sum(flag) over(partition by mdn order by sdate) as clazz
        from (
            select *, case when grid = last_grid then 0 else 1 end as flag
            from (
                select *, lag(grid, 1) over(partition by mdn order by sdate) as last_grid
                from merge
            ) as a
        ) as b
    ) as c
    group by mdn, grid, clazz;
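The same three steps can be followed in plain Java on the test data above, which may make the flag-and-running-sum trick easier to see. This is a sketch for illustration only: the class `TimelineClustering` is hypothetical, and it assumes rows are `{mdn, sdate, grid}` already sorted by mdn and then sdate, which is what the window's `partition by` and `order by` provide in the SQL.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: consecutive rows with the same mdn and grid form one segment.
final class TimelineClustering {
    // Each row is {mdn, sdate, grid}; input must be sorted by mdn, then sdate.
    static List<String> cluster(List<String[]> rows) {
        List<String> segments = new ArrayList<>();
        String mdn = null, grid = null, start = null, end = null;
        for (String[] r : rows) {
            // A new segment starts when the user or the grid changes (flag = 1 in the SQL).
            boolean sameSegment = r[0].equals(mdn) && r[2].equals(grid);
            if (!sameSegment) {
                if (mdn != null) {
                    segments.add(String.join(",", mdn, grid, start, end));
                }
                mdn = r[0];
                grid = r[2];
                start = r[1];
            }
            end = r[1];   // the latest time seen in the current segment
        }
        if (mdn != null) {
            segments.add(String.join(",", mdn, grid, start, end));
        }
        return segments;
    }

    public static void main(String[] args) {
        List<String[]> rows = Arrays.asList(
                new String[]{"17600195923", "212111201532", "19560032075040"},
                new String[]{"17600195923", "212111201533", "19560032075040"},
                new String[]{"17600195923", "212111201534", "19560032075041"},
                new String[]{"17600195923", "212111201535", "19560032075042"},
                new String[]{"17600195923", "212111201536", "19560032075043"},
                new String[]{"17600195923", "212111201537", "19560032075040"},
                new String[]{"17600195923", "212111201538", "19560032075040"},
                new String[]{"17600195923", "212111201539", "19560032075040"});
        cluster(rows).forEach(System.out::println);
    }
}
```

On the test data this yields five segments: grid 19560032075040 appears twice, once for each separate visit, which is exactly what a plain `group by mdn, grid` would lose.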

Overspeed handling

- Walking: 0.5 to 2 m/s

- Cycling: 2 to 5 m/s

- Driving: 10 to 30 m/s

- High-speed rail: 60 to 100 m/s

- Airplane: 100 to 340 m/s
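Since 340 m/s is the top of the fastest range, a move between two consecutive points that implies a higher speed can be treated as noise. The Java sketch below shows such a check under assumptions: it reuses the haversine formula and Earth radius of the Geography class, the class name `SpeedFilter` is hypothetical, and treating a non-positive time interval as implausible is a choice made for the example.

```java
// Hypothetical sketch of the overspeed check: distance / time compared against 340 m/s.
final class SpeedFilter {
    static final double EARTH_RADIUS_M = 6378137;   // same radius as the Geography class
    static final double MAX_SPEED_MPS = 340;        // upper bound of the airplane range

    // Haversine distance in meters between two longitude/latitude points given in degrees.
    static double distanceMeters(double lon1, double lat1, double lon2, double lat2) {
        double p1 = Math.toRadians(lat1);
        double p2 = Math.toRadians(lat2);
        double sa = Math.sin((p1 - p2) / 2);
        double sb = Math.sin(Math.toRadians(lon1 - lon2) / 2);
        return 2 * EARTH_RADIUS_M
                * Math.asin(Math.sqrt(sa * sa + Math.cos(p1) * Math.cos(p2) * sb * sb));
    }

    // True when covering the distance in the given time needs at most 340 m/s.
    static boolean isPlausible(double meters, long seconds) {
        if (seconds <= 0) {
            return false;   // assumption: no elapsed time means the move cannot be judged plausible
        }
        return meters / seconds <= MAX_SPEED_MPS;
    }

    public static void main(String[] args) {
        double d = distanceMeters(0.0, 0.0, 0.01, 0.0);
        System.out.println(d + " m, plausible in 60 s: " + isPlausible(d, 60));
    }
}
```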

Check whether ods_oidd is readable

    hdfs dfs -getfacl /daas/motl/ods/ods_oidd
    hadoop dfs -du -h /daas/motl/dwi/dwi_res_regn_mergelocation_msk_d
    hdfs dfs -setfacl -R -m user:dwi:r-x /daas/motl/ods/ods_oidd/day_id=20220407

Delete the previous run results

- Grant permissions on the trash directory as root

- Or skip the trash mechanism

      hadoop dfs -chmod 777 /user
      hadoop dfs -rmr /daas/motl/dwi/dwi_res_regn_mergelocation_msk_d

Location data fusion table

- Create the location data fusion table in Hive as the dwi user

      CREATE EXTERNAL TABLE IF NOT EXISTS dwi.dwi_res_regn_mergelocation_msk_d (
          mdn string comment 'phone number'
          ,start_date string comment 'start time'
          ,end_date string comment 'end time'
          ,county_id string comment 'county code'
          ,longi string comment 'longitude'
          ,lati string comment 'latitude'
          ,bsid string comment 'base station ID'
          ,grid_id string comment 'grid ID'
      )
      comment 'location data fusion table'
      PARTITIONED BY (
          day_id string comment 'day partition'
      )
      ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
      STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
      OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
      location '/daas/motl/dwi/dwi_res_regn_mergelocation_msk_d';

Write the DwiResRegnMergelocationMskDay code

- ods_oidd table structure

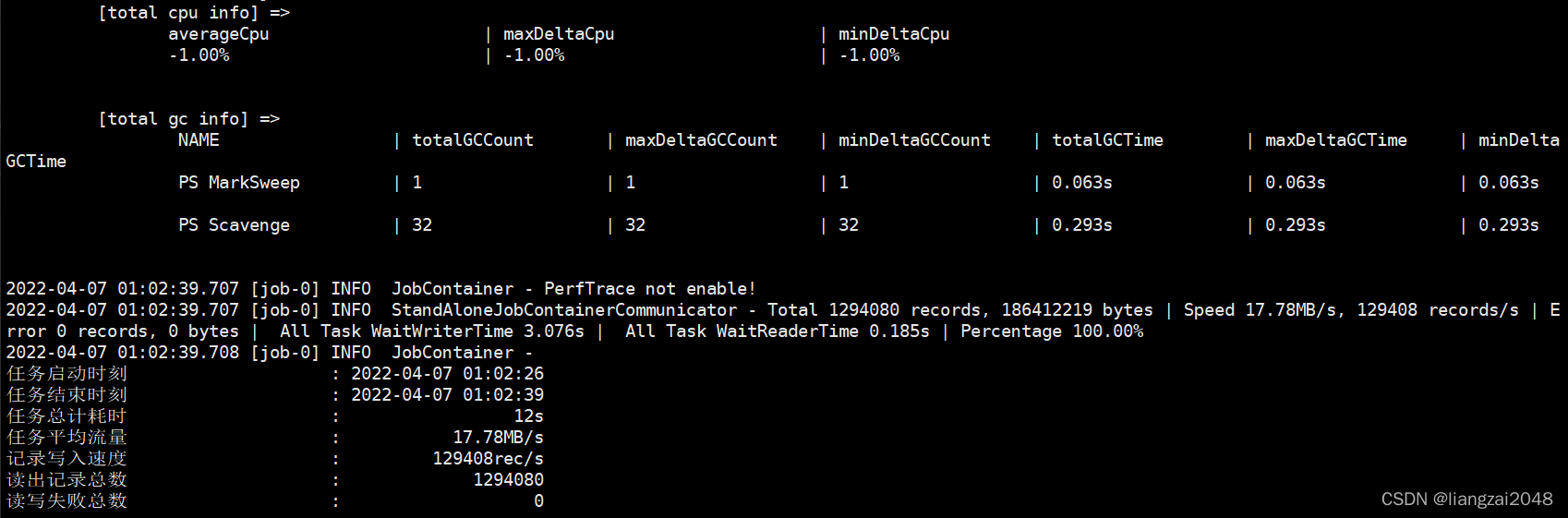

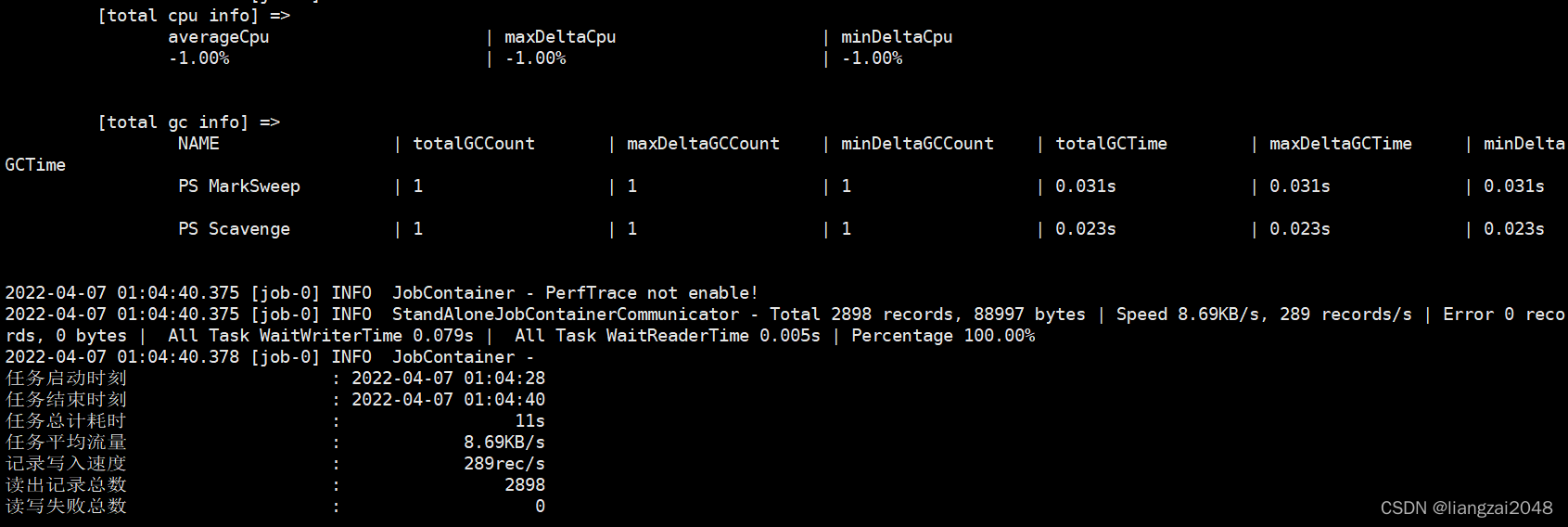

    package com.ctyun.dwi

    import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}
    import com.ctyun.utils.Geography
    import org.apache.spark.sql.expressions.{UserDefinedFunction, Window}

    /**
     * Builds the merged location table by extracting user locations from the base data.
     * 1. Overspeed handling
     * 2. Data masking
     */
    object DwiResRegnMergelocationMskDay {
      def main(args: Array[String]): Unit = {
        if (args.length == 0) {
          println("Please specify the date parameter")
          return
        }

        // Date parameter
        val day_id: String = args.head

        val spark: SparkSession = SparkSession
          .builder()
          .appName("DwiResRegnMergelocationMskDay")
          .enableHiveSupport() // enable Hive metastore support
          .getOrCreate()

        import spark.implicits._
        import org.apache.spark.sql.functions._

        /**
         * Spark user-defined function
         */
        val calculateLength: UserDefinedFunction = udf((longi: String, lati: String, last_longi: String, last_lati: String) => {
          // Compute the distance between the two points
          Geography.calculateLength(longi.toDouble, lati.toDouble, last_longi.toDouble, last_lati.toDouble)
        })

        /**
         * Read the oidd table from Hive
         */
        val oiddDF: DataFrame = spark.table("ods.ods_oidd")

        oiddDF
          // Filter by date and keep one day of data
          .filter($"day_id" === day_id)
          .select($"mdn", $"start_time", $"county_id", $"longi", $"lati", $"bsid", $"grid_id")
          // Remove duplicates
          .distinct()
          // Start time
          .withColumn("start_date", split($"start_time", ",")(1))
          // End time
          .withColumn("end_date", split($"start_time", ",")(0))
          // Compute the time difference and the distance
          // 1. Get the grid of the previous record
          .withColumn("last_grid", lag($"grid_id", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // 2. Flag 0 if the grid equals the previous record's grid, otherwise 1
          .withColumn("flag", when($"grid_id" === $"last_grid", 0).otherwise(1))
          // 3. Running sum of the flags gives a cluster id
          .withColumn("clazz", sum("flag") over Window.partitionBy($"mdn").orderBy($"start_date"))
          // 4. Group by phone number and cluster to get the start and end time
          .groupBy($"mdn", $"county_id", $"grid_id", $"longi", $"bsid", $"lati", $"clazz")
          // Take the start time of the first point and the end time of the last point
          .agg(min($"start_date") as "start_date", max($"end_date") as "end_date")
          // Get the time of the previous record
          .withColumn("last_date", lag($"start_date", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // Time difference in seconds
          .withColumn("diff_time", when($"last_date".isNull, 1).otherwise(unix_timestamp($"start_date", "yyyyMMddHHmmss") - unix_timestamp($"last_date", "yyyyMMddHHmmss")))
          // Get the longitude and latitude of the previous record
          .withColumn("last_longi", lag($"longi", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          .withColumn("last_lati", lag($"lati", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // Compute the distance with the user-defined function
          .withColumn("distance", when($"last_lati".isNull, 1).otherwise(calculateLength($"longi", $"lati", $"last_longi", $"last_lati")))
          // Compute the speed
          .withColumn("speed", round($"distance" / $"diff_time", 3))
          // Drop records whose speed is too high
          .filter($"speed" <= 340)
          // Keep the required columns
          .select($"mdn", $"start_date", $"end_date", $"county_id", $"longi", $"lati", $"bsid", $"grid_id")
          .write
          .format("csv")
          .mode(SaveMode.Overwrite)
          .option("sep", "\t")
          .save(s"/daas/motl/dwi/dwi_res_regn_mergelocation_msk_d/day_id=$day_id")

        /**
         * spark-submit --master local --class com.ctyun.dwi.DwiResRegnMergelocationMskDay --jars common-1.0.jar dwi-1.0.jar
         */

        /**
         * Add the partition
         */
        spark.sql(
          s"""
             |alter table dwi.dwi_res_regn_mergelocation_msk_d add if not exists partition(day_id='$day_id')
             |
          """.stripMargin)
      }
    }

Package, upload, and run (as the dwi user)

cd /home/dwi

spark-submit --master local --class com.ctyun.dwi.DwiResRegnMergelocationMskDay --jars common-1.0.jar dwi-1.0.jar 20220409

Open the web UIs at master:4040 and master:8088 to check the run

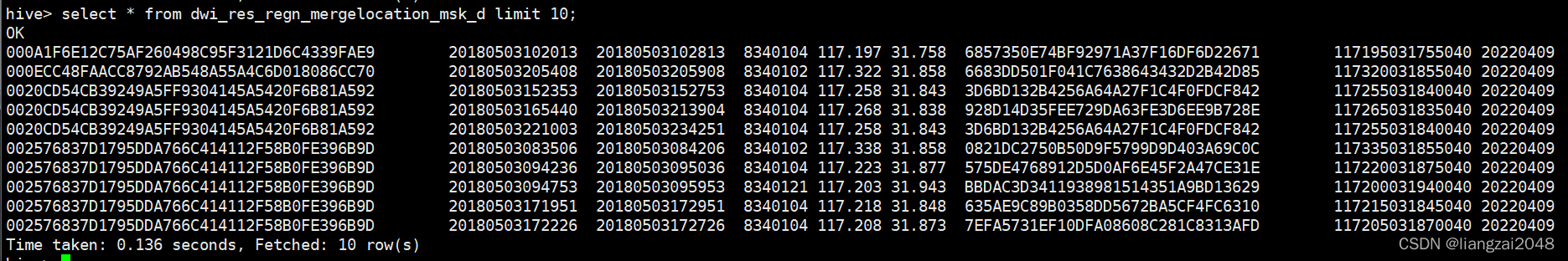

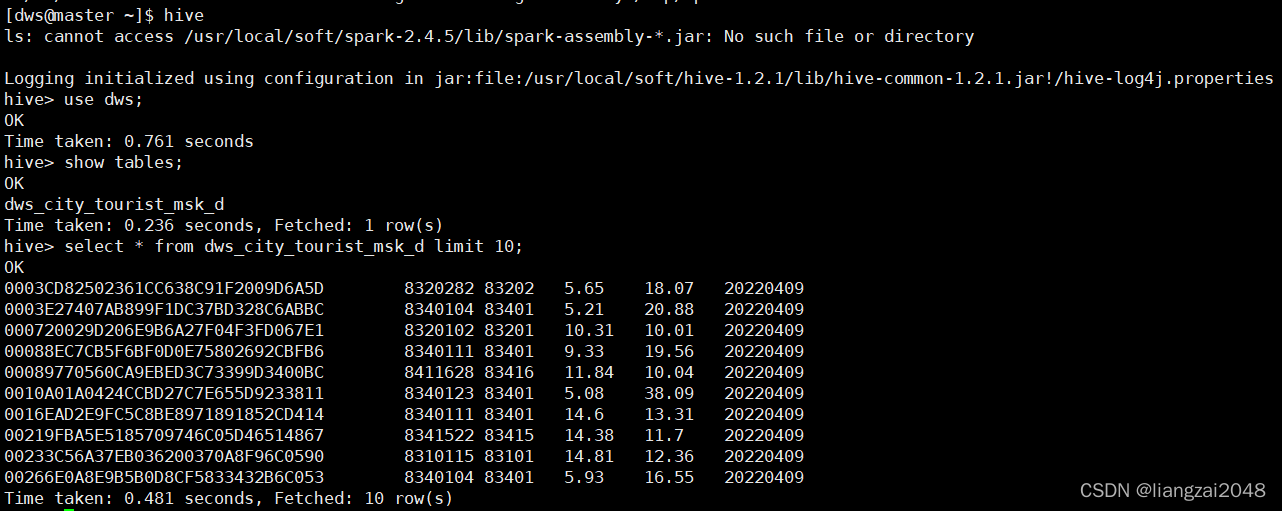

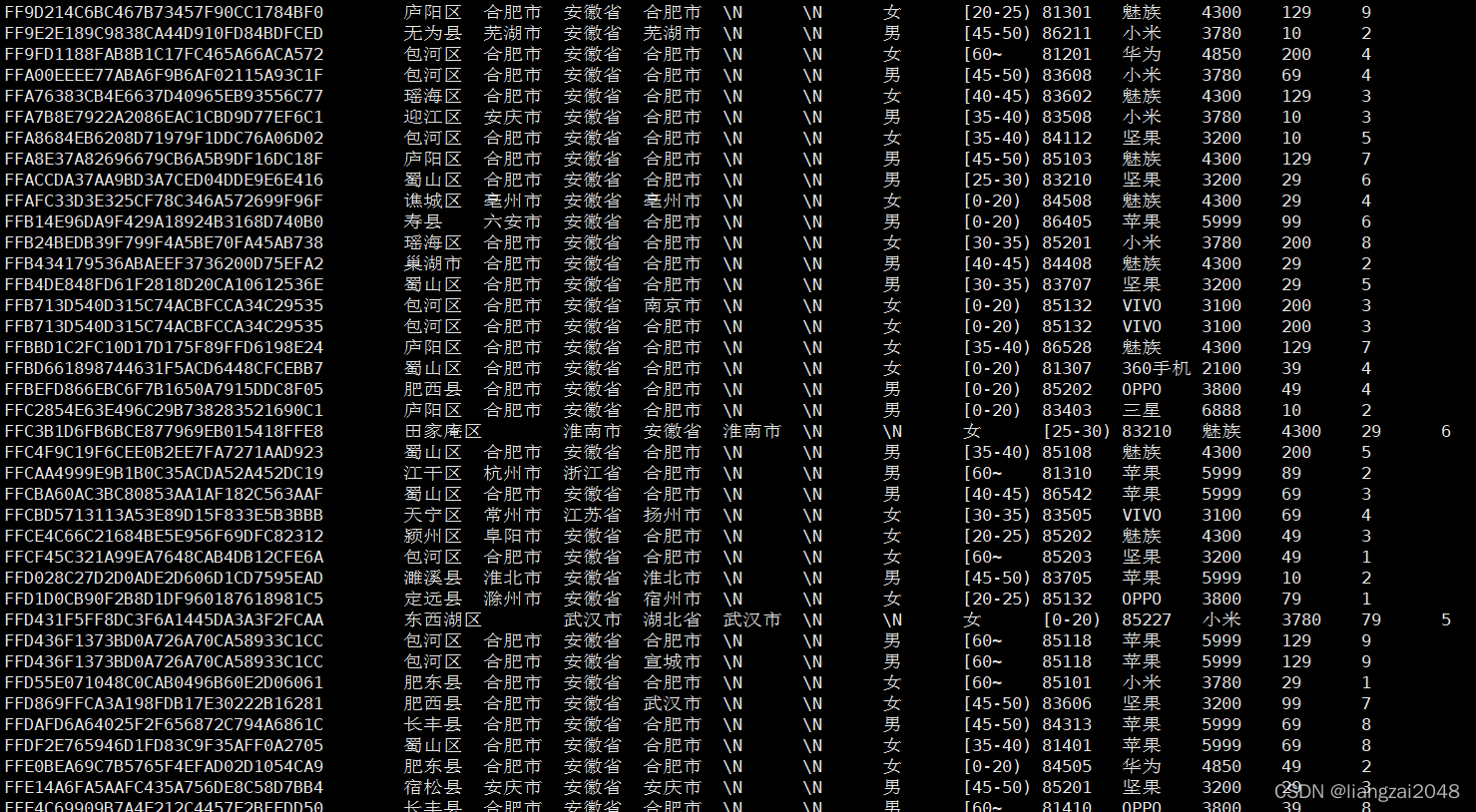

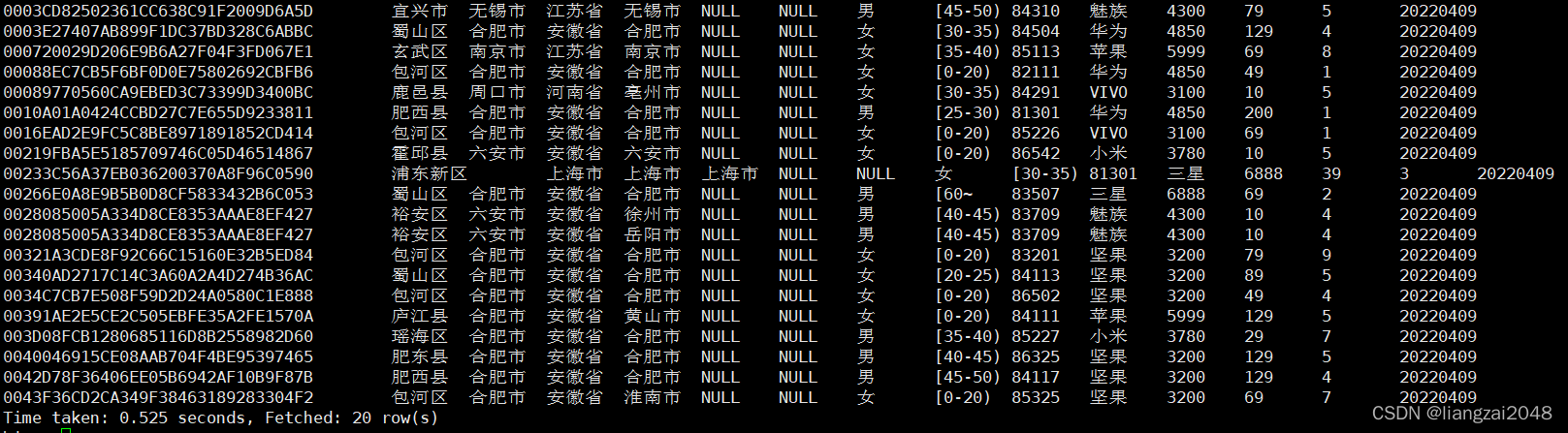

Log in to Hive as the dwi user to check the result

    use dwi;
    select * from dwi_res_regn_mergelocation_msk_d limit 10;

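Masking the sensitive phone-number field

The phone number is masked with MD5: a fixed string is appended to the number first, the concatenated value is hashed, and the resulting hex digest is converted to upper case.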
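The same rule can be reproduced on the command line to spot-check a masked value outside Spark. This is a minimal sketch, assuming GNU coreutils `md5sum` is installed; the function name `mask_mdn` and the sample number are made up for illustration, while the suffix `liangzai` is the one the job's code uses.

```shell
#!/usr/bin/env bash
# mask_mdn is a hypothetical helper, not part of the project.
# It appends a fixed suffix to the phone number, hashes the result with MD5,
# and prints the hex digest in upper case.
mask_mdn() {
  local mdn=$1 suffix=$2
  # printf '%s' avoids the trailing newline that echo would add to the hashed input.
  printf '%s' "${mdn}${suffix}" | md5sum | awk '{ print toupper($1) }'
}

# Example call with a made-up phone number.
mask_mdn 13800000000 liangzai
```

Comparing this output with the `mdn` column for a known test number is a quick way to confirm that the suffix and the upper-casing were applied as intended.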

    .select(
      upper(md5(concat($"mdn", expr("'liangzai'")))) as "mdn", // mask the phone number
      $"start_date",
      $"end_date",
      $"county_id",
      $"longi",
      $"lati",
      $"bsid",
      $"grid_id"
    )

Chapter 4

User profile table

Create the dim module

Add dependencies

    <dependencies>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-compiler</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-reflect</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_${scala.library.version}</artifactId>
        </dependency>
        <dependency>
            <groupId>com.ctyun</groupId>
            <artifactId>common</artifactId>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
            </plugin>
        </plugins>
    </build>

    <modules>
        <module>common</module>
        <module>ods</module>
        <module>dwi</module>
        <module>dim</module>
    </modules>

Create the package

com.ctyun.dim

Create the DimUserTagDay class

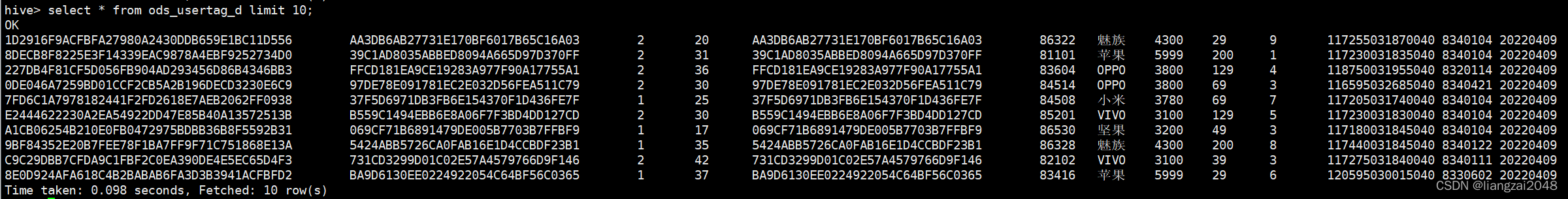

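Log in to Hive as the ods user and check that the user profile table exists

    # Log in as ods
    ssh ods@master
    # Enter the password 123456
    # Start hive
    hive
    # Switch to the ods database
    use ods;
    # Query the user profile table
    select * from ods_usertag_d limit 10;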

How to export a Hive table to a local file

hive -e "select * from <database>.<table>" >> <local_file>.csv

Wrapping the common code in a utility

Add the Spark dependencies to the common module

    <dependencies>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-compiler</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-reflect</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_${scala.library.version}</artifactId>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
            </plugin>
        </plugins>
    </build>

Create the SparkTool utility class and write it

Test

    package com.ctyun.utils

    abstract class SparkTool {
      def main(args: Array[String]): Unit = {
        println("SparkTool")
        this.run()
      }

      // Implemented by each subclass
      def run(): Unit
    }

SparkTool is abstract, so it cannot be run directly

Create a Test object

    package com.ctyun.utils

    object Test extends SparkTool {
      override def run(): Unit = {
        println("test.run")
      }
    }

Run Test:

Write the SparkTool wrapper for the telecom data warehouse

    package com.ctyun.utils

    import org.apache.spark.internal.Logging
    import org.apache.spark.sql.SparkSession

    /**
     * Spark utility that keeps the common code in a parent class.
     *
     * Extends Logging so that log can be used to print messages.
     */
    abstract class SparkTool extends Logging {
      var day_id: String = _

      def main(args: Array[String]): Unit = {
        // 1. Get the date parameter
        if (args.length == 0) {
          log.error("Please pass in the date parameter")
          return
        }
        day_id = args.head

        // Get the class name
        val simpleName: String = this.getClass.getSimpleName.replace("$", "")

        // 2. Create the Spark environment
        val spark: SparkSession = SparkSession
          .builder()
          .appName(simpleName)
          // Enable Hive metastore support
          .enableHiveSupport()
          .getOrCreate()

        // Call the subclass method and pass spark to it
        this.run(spark)
      }

      def run(spark: SparkSession): Unit
    }

Merged location table code using SparkTool

    package com.ctyun.dwi

    import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}
    import com.ctyun.utils.{Geography, SparkTool}
    import org.apache.spark.sql.expressions.{UserDefinedFunction, Window}

    /**
     * Builds the merged location table by extracting user locations from the base data.
     * 1. Overspeed handling
     * 2. Data masking
     */
    object DwiResRegnMergelocationMskDay extends SparkTool {
      override def run(spark: SparkSession): Unit = {
        import spark.implicits._
        import org.apache.spark.sql.functions._

        /**
         * Spark user-defined function
         */
        val calculateLength: UserDefinedFunction = udf((longi: String, lati: String, last_longi: String, last_lati: String) => {
          // Compute the distance between the two points
          Geography.calculateLength(longi.toDouble, lati.toDouble, last_longi.toDouble, last_lati.toDouble)
        })

        /**
         * Read the oidd table from Hive
         */
        val oiddDF: DataFrame = spark.table("ods.ods_oidd")

        val mergeDF: DataFrame = oiddDF
          // Filter by date and keep one day of data
          .filter($"day_id" === day_id)
          .select($"mdn", $"start_time", $"county_id", $"longi", $"lati", $"bsid", $"grid_id")
          // Remove duplicates
          .distinct()
          // Start time
          .withColumn("start_date", split($"start_time", ",")(1))
          // End time
          .withColumn("end_date", split($"start_time", ",")(0))
          // Compute the time difference and the distance
          // 1. Get the grid of the previous record
          .withColumn("last_grid", lag($"grid_id", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // 2. Flag 0 if the grid equals the previous record's grid, otherwise 1
          .withColumn("flag", when($"grid_id" === $"last_grid", 0).otherwise(1))
          // 3. Running sum of the flags gives a cluster id
          .withColumn("clazz", sum("flag") over Window.partitionBy($"mdn").orderBy($"start_date"))
          // 4. Group by phone number and cluster to get the start and end time
          .groupBy($"mdn", $"county_id", $"grid_id", $"longi", $"bsid", $"lati", $"clazz")
          // Take the start time of the first point and the end time of the last point
          .agg(min($"start_date") as "start_date", max($"end_date") as "end_date")
          // Get the time of the previous record
          .withColumn("last_date", lag($"start_date", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // Time difference in seconds
          .withColumn("diff_time", when($"last_date".isNull, 1).otherwise(unix_timestamp($"start_date", "yyyyMMddHHmmss") - unix_timestamp($"last_date", "yyyyMMddHHmmss")))
          // Get the longitude and latitude of the previous record
          .withColumn("last_longi", lag($"longi", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          .withColumn("last_lati", lag($"lati", 1) over Window.partitionBy($"mdn").orderBy($"start_date"))
          // Compute the distance with the user-defined function
          .withColumn("distance", when($"last_lati".isNull, 1).otherwise(calculateLength($"longi", $"lati", $"last_longi", $"last_lati")))
          // Compute the speed
          .withColumn("speed", round($"distance" / $"diff_time", 3))
          // Drop records whose speed is too high
          .filter($"speed" <= 340)
          // Keep the required columns
          .select(
            upper(md5(concat($"mdn", expr("'liangzai'")))) as "mdn", // mask the phone number
            $"start_date",
            $"end_date",
            $"county_id",
            $"longi",
            $"lati",
            $"bsid",
            $"grid_id"
          )

        mergeDF.write
          .format("csv")
          .mode(SaveMode.Overwrite)
          .option("sep", "\t")
          .save(s"/daas/motl/dwi/dwi_res_regn_mergelocation_msk_d/day_id=$day_id")

        /**
         * spark-submit --master local --class com.ctyun.dwi.DwiResRegnMergelocationMskDay --jars common-1.0.jar dwi-1.0.jar 20220406
         */

        /**
         * Add the partition
         */
        spark.sql(
          s"""
             |alter table dwi.dwi_res_regn_mergelocation_msk_d add if not exists partition(day_id='$day_id')
             |
          """.stripMargin)
      }
    }

Adjust the permissions on the ods data so that it can be read

    # Show the current ACL
    hdfs dfs -getfacl /daas/motl/ods/ods_usertag_d
    # Grant the dim user read and execute access
    hdfs dfs -setfacl -R -m user:dim:r-x /daas/motl/ods/ods_usertag_d
    # List the directory
    hadoop dfs -ls /daas/motl/ods/ods_usertag_d
    # Grant the dim user access to the whole ods directory
    hdfs dfs -setfacl -R -m user:dim:r-x /daas/motl/ods
    # View the data
    hadoop dfs -cat /daas/motl/ods/ods_usertag_d/day_id=20220409/*
    # Run the job
    spark-submit --master yarn-client --class <main class> <jar file> 20220409

Write the run scripts

Create the dim-usertag-day.sh script in the dim module and write it

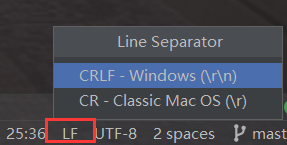

    #!/usr/bin/env bash
    #*
    # File name: dim-usertag-day.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: user profile table
    # Output: user profile table
    #
    # Description: user profile table
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    # Date parameter
    day_id=$1

    spark-submit \
    --master yarn-client \
    --class com.ctyun.dim.DimUserTagDay \
    --jars common-1.0.jar \
    dim-1.0.jar $day_id

Note: the script must use LF line endings

Change the default line separator to Unix and macOS (\n)
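If a script has already been uploaded with Windows (CRLF) line endings, it can also be repaired on the server. The following is a minimal sketch, assuming the standard `grep`, `tr`, and `mktemp` tools; the helper names `has_crlf` and `to_lf` are hypothetical and not part of the project.

```shell
#!/usr/bin/env bash
# has_crlf and to_lf are hypothetical helpers, not part of the project.

# Succeeds when the file contains at least one carriage return.
has_crlf() {
  grep -q "$(printf '\r')" "$1"
}

# Rewrites the file in place with every carriage return removed.
# Writing back with cat keeps the original file's permissions.
to_lf() {
  local tmp
  tmp=$(mktemp) || return 1
  if tr -d '\r' < "$1" > "$tmp"; then
    cat "$tmp" > "$1"
  fi
  rm -f "$tmp"
}
```

A typical use would be `has_crlf dim-usertag-day.sh && to_lf dim-usertag-day.sh` before running the script.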

Package and upload to the dim user

Upload the common jar, the dim jar, and the script together

Run the user profile table script

sh dim-usertag-day.sh 20220409
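The script hands `$1` to `spark-submit` without checking it. A guard along the following lines would stop early on a missing or malformed date. This is only a sketch: the function name `valid_day_id` is hypothetical, and it checks the `yyyyMMdd` shape (exactly eight digits), not whether the date exists in the calendar.

```shell
#!/usr/bin/env bash
# valid_day_id is a hypothetical helper, not part of the project.
# Succeeds only when the argument consists of exactly eight digits.
valid_day_id() {
  case $1 in
    [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) return 0 ;;
    *) return 1 ;;
  esac
}
```

Inside a run script it could be called before the `day_id=$1` line, for example `valid_day_id "$1" || { echo "usage: $0 yyyyMMdd" >&2; exit 1; }`.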

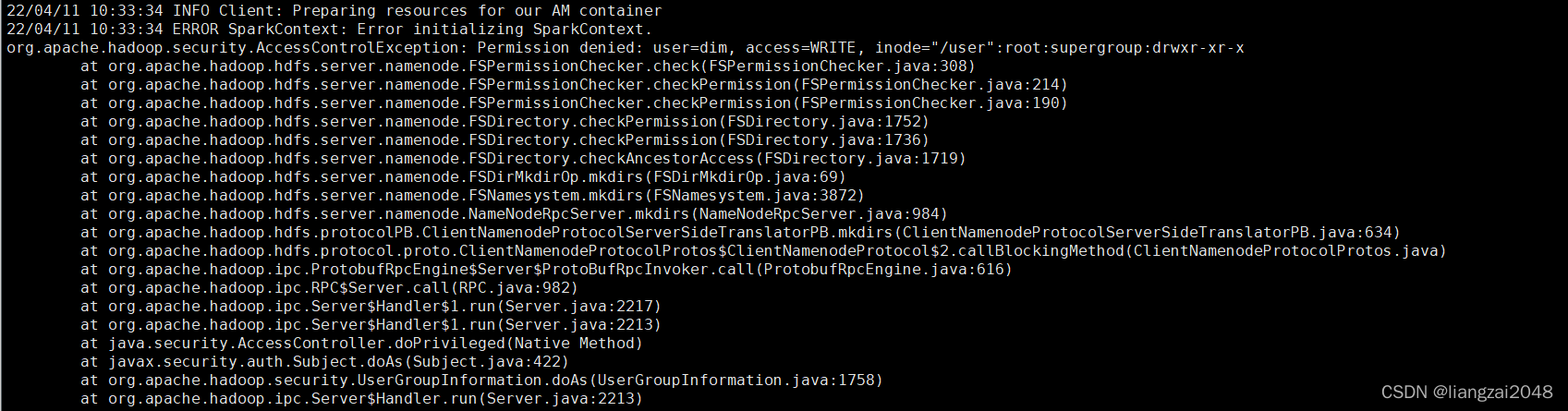

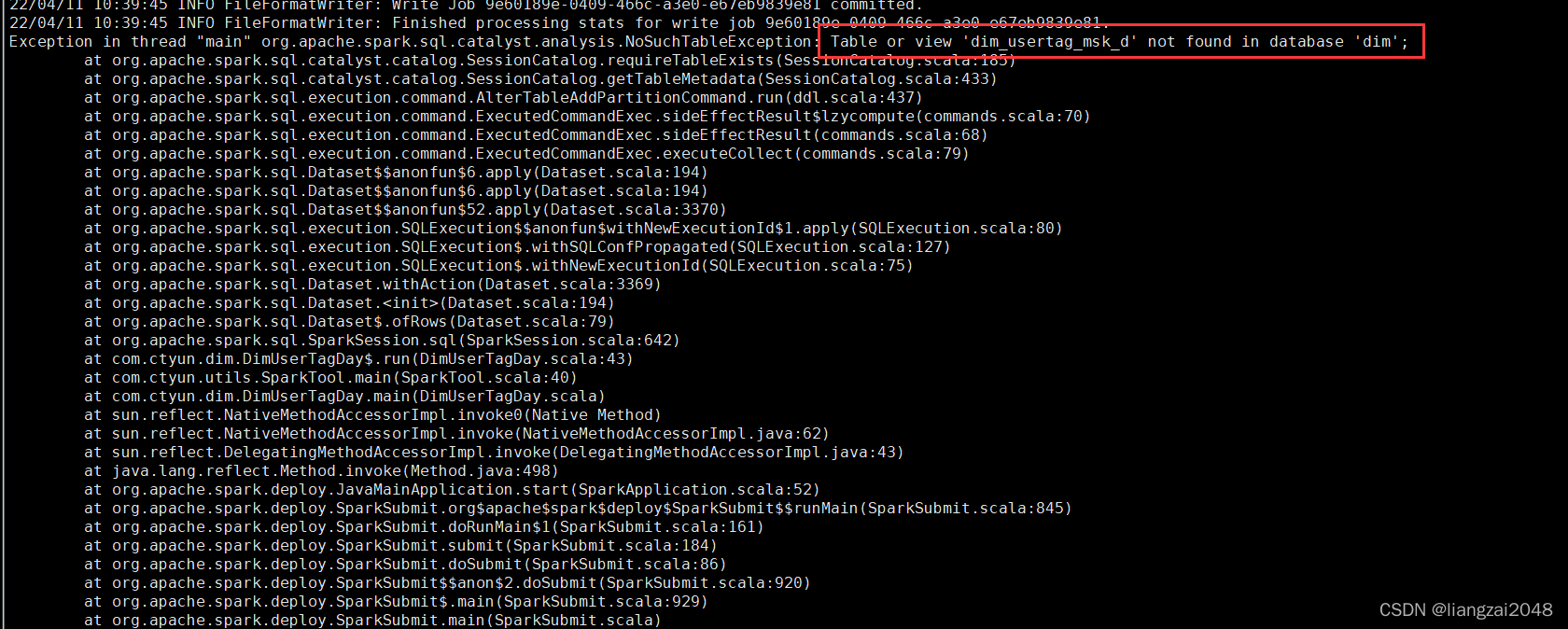

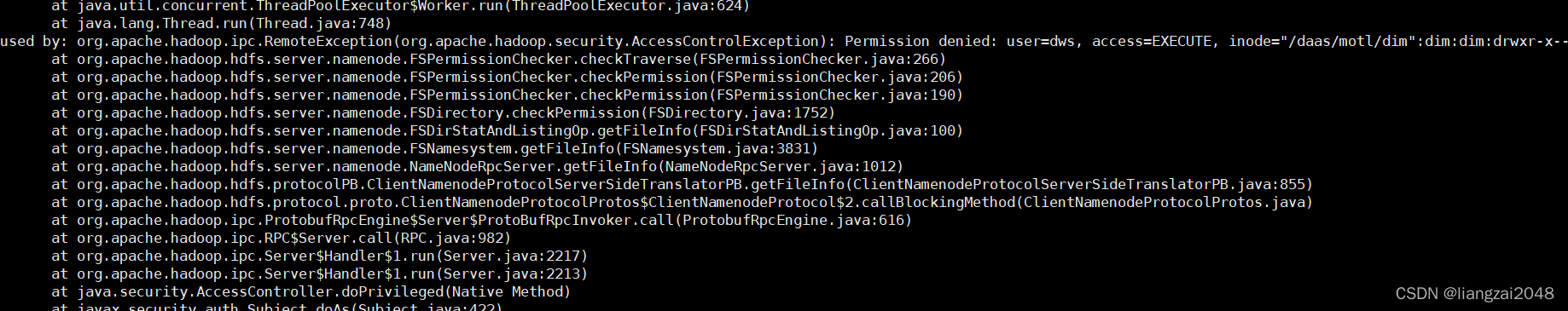

The run fails with an error; grant the permission as root

hdfs dfs -chmod 777 /user

Run the script again

It fails again; create the Hive table dim.dim_usertag_msk_d

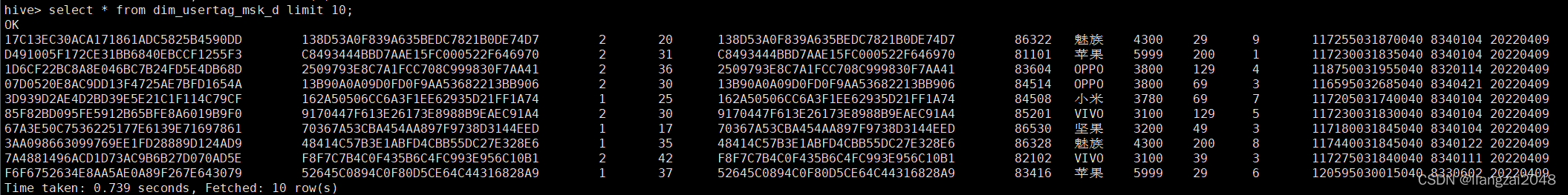

    CREATE EXTERNAL TABLE IF NOT EXISTS dim.dim_usertag_msk_d (
        mdn string comment 'phone number, upper-case MD5 hash'
        ,name string comment 'name'
        ,gender string comment 'gender, 1 male, 2 female'
        ,age string comment 'age'
        ,id_number string comment 'ID document number'
        ,number_attr string comment 'home location of the phone number'
        ,trmnl_brand string comment 'terminal brand'
        ,trmnl_price string comment 'terminal price'
        ,packg string comment 'plan'
        ,conpot string comment 'spending potential'
        ,resi_grid_id string comment 'grid of usual residence'
        ,resi_county_id string comment 'county of usual residence'
    )
    comment 'user profile table'
    PARTITIONED BY (
        day_id string comment 'month partition'
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/dim/dim_usertag_msk_d';

    alter table dim.dim_usertag_msk_d add if not exists partition(day_id='20220409');

Check the result of the user profile table job

    hive
    use dim;
    select * from dim_usertag_msk_d limit 10;

Create the dwi-res-regn-mergelocation-msk-day.sh script in the dwi module and write it

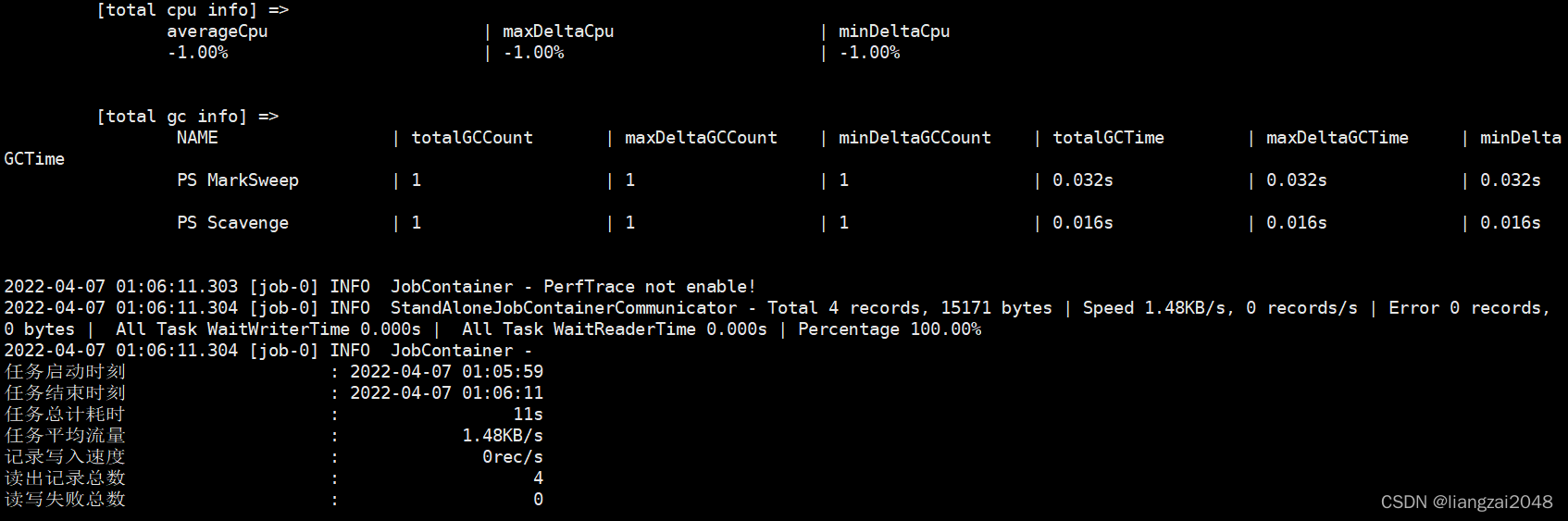

    #!/usr/bin/env bash
    #*
    # File name: dwi-res-regn-mergelocation-msk-day.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: oidd
    # Output: merged location table
    #
    # Description: merged location table
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    # Date parameter
    day_id=$1

    spark-submit \
    --master yarn-client \
    --class com.ctyun.dwi.DwiResRegnMergelocationMskDay \
    --conf spark.sql.shuffle.partitions=10 \
    --jars common-1.0.jar \
    dwi-1.0.jar $day_id

Run the merged location table script

sh dwi-res-regn-mergelocation-msk-day.sh 20220409

Create the administrative division configuration table in Hive as the dim user

    CREATE EXTERNAL TABLE IF NOT EXISTS dim.dim_admincode (
        prov_id string comment 'province id'
        ,prov_name string comment 'province name'
        ,city_id string comment 'city id'
        ,city_name string comment 'city name'
        ,county_id string comment 'county id'
        ,county_name string comment 'county name'
        ,city_level string comment 'city tier: 1 for first tier, 2 for second tier, and so on'
        ,economic_belt string comment 'BJ = capital economic belt, ZSJ = Pearl River Delta, CSJ = Yangtze River Delta, DB = Northeast, HZ = Central China, HB = North China, HD = East China, HN = South China, XB = Northwest, XN = Southwest'
        ,city_feature1 string comment 'NL = inland, YH = coastal'
    )
    comment 'administrative division configuration table'
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/dim/dim_admincode';

Create the dim-admincode.sh script in the dim module and write it

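The SparkTool class requires a date parameter, which the admincode load does not need, so no Spark code is written here; a plain script is enough.

The script loads the data of ods_admincode into the dim_admincode table.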

    #!/usr/bin/env bash
    #*
    # File name: dim-admincode.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: ods.ods_admincode
    # Output: dim.dim_admincode
    #
    # Description: administrative division configuration table
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    spark-sql \
    --master yarn-client \
    -e "insert into dim.dim_admincode select * from ods.ods_admincode "

No packaging is needed; upload the script and run it

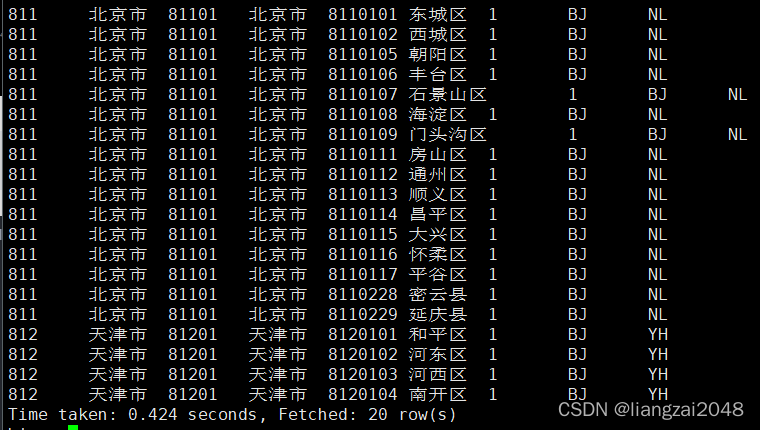

Run the dim-admincode.sh script

sh dim-admincode.sh

Check the result of the dim-admincode.sh script

Create the scenic area configuration table as the dim user

    CREATE EXTERNAL TABLE IF NOT EXISTS dim.dim_scenic_boundary (
        scenic_id string comment 'scenic area id'
        ,scenic_name string comment 'scenic area name'
        ,boundary string comment 'scenic area boundary'
    )
    comment 'scenic area configuration table'
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/dim/dim_scenic_boundary';

Create the dim-scenic-boundary.sh script in the dim module and write it

    #!/usr/bin/env bash
    #*
    # File name: dim-scenic-boundary.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: scenic area configuration table
    # Output: scenic area configuration table
    #
    # Description: scenic area configuration table
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    spark-sql \
    --master yarn-client \
    -e "insert into dim.dim_scenic_boundary select * from ods.ods_scenic_boundary "

Upload and run the dim-scenic-boundary.sh script

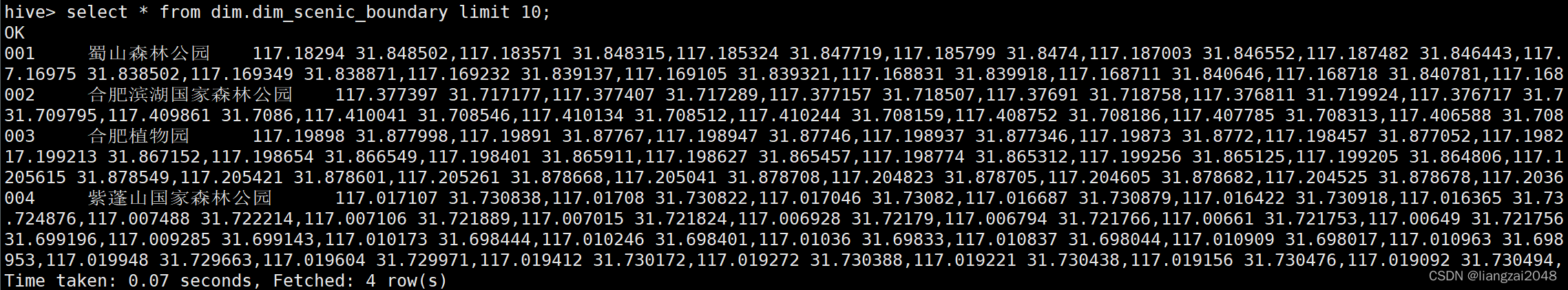

sh dim-scenic-boundary.sh

Check the result of the dim-scenic-boundary.sh script

select * from dim.dim_scenic_boundary limit 10;

Write the ods layer scripts

Write the datax-crm-admin-code-mysql-to-hive.sh script

    #!/usr/bin/env bash
    #*
    # File name: datax-crm-admin-code-mysql-to-hive.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: admin code data in the crm MySQL database
    # Output: Hive table in the ods layer
    #
    # Description: DataX load of the admin code data from MySQL to Hive
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    datax.py datax-crm-admin-code-mysql-to-hive.json

Write the datax-crm-scenic_boundary-mysql-to-hive.sh script

    #!/usr/bin/env bash
    #*
    # File name: datax-crm-scenic_boundary-mysql-to-hive.sh
    # Created: 2022-04-10
    # Author: qinxiao
    # Input: scenic boundary data in the crm MySQL database
    # Output: Hive table in the ods layer
    #
    # Description: DataX load of the scenic boundary data from MySQL to Hive
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    datax.py datax-crm-scenic_boundary-mysql-to-hive.json

Write the datax-crm-usertag-mysql-to-hive.sh script

    #!/usr/bin/env bash
    #*
    # File name: datax-crm-usertag-mysql-to-hive.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: user tag data in the crm MySQL database
    # Output: ods.ods_usertag_d
    #
    # Description: DataX load of the user tag data from MySQL to Hive
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    day_id=$1

    hive -e "alter table ods.ods_usertag_d add if not exists partition(day_id='$day_id') ;"

    datax.py -p "-Dday_id=$day_id" datax-crm-usertag-mysql-to-hive.json

Write the flume-oss-oidd-to-hdfs.sh script

    #!/usr/bin/env bash
    #*
    # File name: flume-oss-oidd-to-hdfs.sh
    # Created: 2022-04-10
    # Author: liangzai
    # Input: flume data collection
    # Output: flume data collection
    #
    # Description: flume data collection
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #==Modified on==|===Modified by===|======================================================|
    #
    #*

    flume-ng agent -n a -f ./flume-oss-oidd-to-hdfs.properties -Dflume.root.logger=DEBUG,console

Chapter 5

Spatio-temporal companions

Deciding whether a person is a visitor to a scenic area

- Time filter

- Space filter

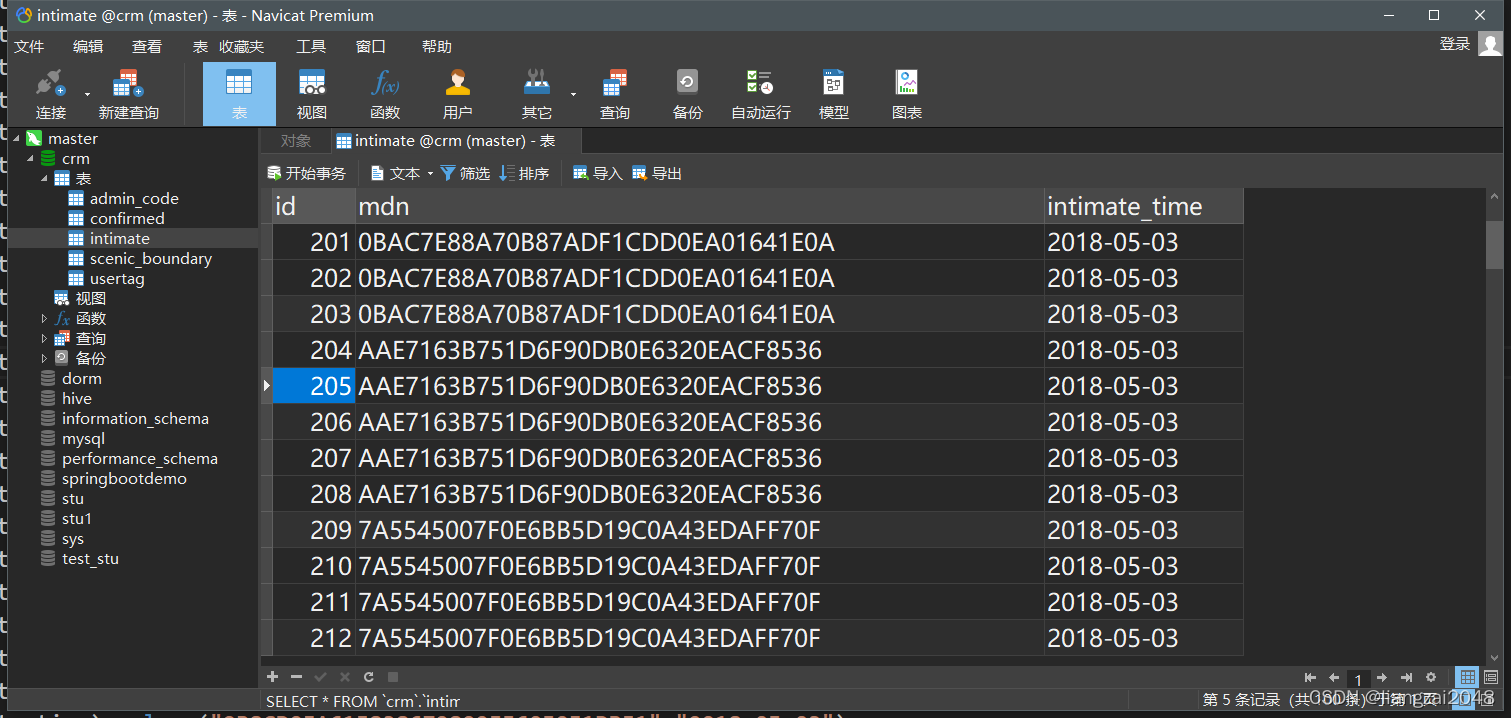
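The two filters can be sketched as a single check on one stay record. This is only an illustration under assumptions the text does not spell out: the space filter is taken to be equality of grid ids, the time filter is taken to be an overlap between the stay and a target time window, and timestamps use the `yyyyMMddHHmmss` layout seen in the merged location table. The function name `matches_place_and_time` is hypothetical.

```shell
#!/usr/bin/env bash
# matches_place_and_time is a hypothetical helper, not part of the project.
# Arguments: grid start end target_grid window_start window_end
# Timestamps are 14-digit yyyyMMddHHmmss values, compared as integers.
matches_place_and_time() {
  local grid=$1 start=$2 end=$3 target_grid=$4 win_start=$5 win_end=$6
  # Space filter (assumption): the stay must be in the target grid.
  [ "$grid" = "$target_grid" ] || return 1
  # Time filter: two intervals overlap when each one starts
  # no later than the other one ends.
  [ "$start" -le "$win_end" ] && [ "$win_start" -le "$end" ]
}
```

In the warehouse the same condition would more naturally be a join predicate between two sets of stay records; the shell form only makes the logic easy to try on a few sample rows.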

Create the intimate table in MySQL (close contacts in the epidemic)

Insert data into the intimate table

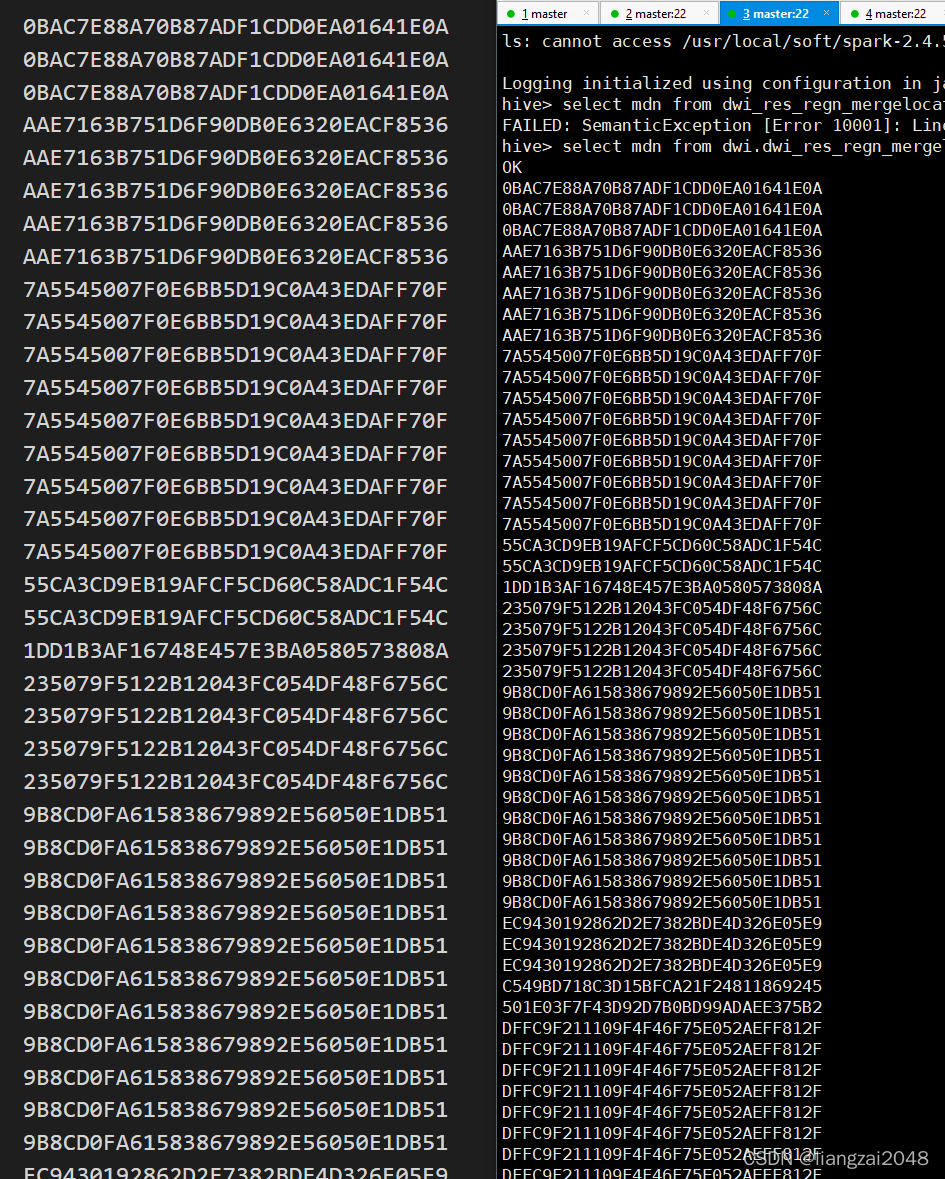

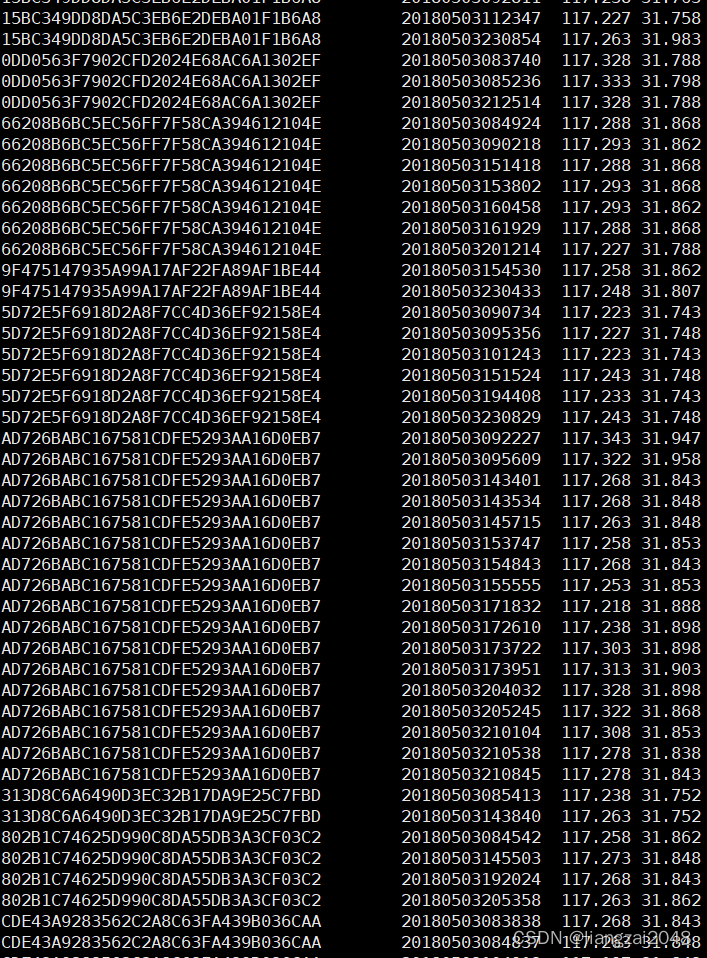

Open Hive as the dwi user

select mdn from dwi.dwi_res_regn_mergelocation_msk_d limit 100;

Copy the returned phone numbers into Visual Studio Code and turn them into insert statements

insert into intimate (mdn,intimate_time) values("0BAC7E88A70B87ADF1CDD0EA01641E0A","2018-05-03");insert into intimate (mdn,intimate_time) values("0BAC7E88A70B87ADF1CDD0EA01641E0A","2018-05-03");insert into intimate (mdn,intimate_time) values("0BAC7E88A70B87ADF1CDD0EA01641E0A","2018-05-03");insert into intimate (mdn,intimate_time) values("AAE7163B751D6F90DB0E6320EACF8536","2018-05-03");insert into intimate (mdn,intimate_time) values("AAE7163B751D6F90DB0E6320EACF8536","2018-05-03");insert into intimate (mdn,intimate_time) values("AAE7163B751D6F90DB0E6320EACF8536","2018-05-03");insert into intimate (mdn,intimate_time) values("AAE7163B751D6F90DB0E6320EACF8536","2018-05-03");insert into intimate (mdn,intimate_time) values("AAE7163B751D6F90DB0E6320EACF8536","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("7A5545007F0E6BB5D19C0A43EDAFF70F","2018-05-03");insert into intimate (mdn,intimate_time) values("55CA3CD9EB19AFCF5CD60C58ADC1F54C","2018-05-03");insert into intimate (mdn,intimate_time) values("55CA3CD9EB19AFCF5CD60C58ADC1F54C","2018-05-03");insert into intimate (mdn,intimate_time) values("1DD1B3AF16748E457E3BA0580573808A","2018-05-03");insert into intimate (mdn,intimate_time) 
values("235079F5122B12043FC054DF48F6756C","2018-05-03");insert into intimate (mdn,intimate_time) values("235079F5122B12043FC054DF48F6756C","2018-05-03");insert into intimate (mdn,intimate_time) values("235079F5122B12043FC054DF48F6756C","2018-05-03");insert into intimate (mdn,intimate_time) values("235079F5122B12043FC054DF48F6756C","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("9B8CD0FA615838679892E56050E1DB51","2018-05-03");insert into intimate (mdn,intimate_time) values("EC9430192862D2E7382BDE4D326E05E9","2018-05-03");insert into intimate (mdn,intimate_time) values("EC9430192862D2E7382BDE4D326E05E9","2018-05-03");insert into intimate (mdn,intimate_time) values("EC9430192862D2E7382BDE4D326E05E9","2018-05-03");insert into intimate (mdn,intimate_time) values("C549BD718C3D15BFCA21F24811869245","2018-05-03");insert into intimate (mdn,intimate_time) values("501E03F7F43D92D7B0BD99ADAEE375B2","2018-05-03");insert into intimate (mdn,intimate_time) 
values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("DFFC9F211109F4F46F75E052AEFF812F","2018-05-03");insert into intimate (mdn,intimate_time) values("5183424C72FB8853C60E946FA79FA9BB","2018-05-03");insert into intimate (mdn,intimate_time) values("5183424C72FB8853C60E946FA79FA9BB","2018-05-03");insert into intimate (mdn,intimate_time) values("5183424C72FB8853C60E946FA79FA9BB","2018-05-03");insert into intimate (mdn,intimate_time) values("B6C09D637B26DFC39D75D462669FBB4F","2018-05-03");insert into intimate (mdn,intimate_time) values("CC3FB0A6EB61421CA35870465C53D578","2018-05-03");insert into intimate (mdn,intimate_time) values("CC3FB0A6EB61421CA35870465C53D578","2018-05-03");insert into intimate (mdn,intimate_time) 
values("CC3FB0A6EB61421CA35870465C53D578","2018-05-03");insert into intimate (mdn,intimate_time) values("4BFB2FE788D22D33CA36CEFF87C05C43","2018-05-03");insert into intimate (mdn,intimate_time) values("4BFB2FE788D22D33CA36CEFF87C05C43","2018-05-03");insert into intimate (mdn,intimate_time) values("4BFB2FE788D22D33CA36CEFF87C05C43","2018-05-03");insert into intimate (mdn,intimate_time) values("4BFB2FE788D22D33CA36CEFF87C05C43","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("DE0C5768F2549C3D803F4DB6E2A8378E","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) 
values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("D2C75DD8D4B2ED05C4BA3CE09E2DD996","2018-05-03");insert into intimate (mdn,intimate_time) values("BF965887C70EB829977EE31B3310D1BA","2018-05-03");insert into intimate (mdn,intimate_time) values("95DA579000B57B59B1E9AB1748BFC817","2018-05-03");insert into intimate (mdn,intimate_time) values("95DA579000B57B59B1E9AB1748BFC817","2018-05-03");insert into intimate (mdn,intimate_time) values("17E67FB53C07E07FDBDAF7917CEAE6F9","2018-05-03");insert into intimate (mdn,intimate_time) values("33FE139A0FECB13E118DE4CC66DFC410","2018-05-03");insert into intimate (mdn,intimate_time) values("33FE139A0FECB13E118DE4CC66DFC410","2018-05-03");insert into intimate (mdn,intimate_time) values("33FE139A0FECB13E118DE4CC66DFC410","2018-05-03");insert into intimate (mdn,intimate_time) values("33FE139A0FECB13E118DE4CC66DFC410","2018-05-03");insert into intimate (mdn,intimate_time) values("F0EE065223D8AA495916559DB2BAD4DE","2018-05-03");insert into intimate (mdn,intimate_time) values("F0EE065223D8AA495916559DB2BAD4DE","2018-05-03");insert into intimate (mdn,intimate_time) values("F0EE065223D8AA495916559DB2BAD4DE","2018-05-03");insert into intimate (mdn,intimate_time) values("F0EE065223D8AA495916559DB2BAD4DE","2018-05-03");insert into intimate (mdn,intimate_time) values("F0EE065223D8AA495916559DB2BAD4DE","2018-05-03");insert into intimate (mdn,intimate_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");

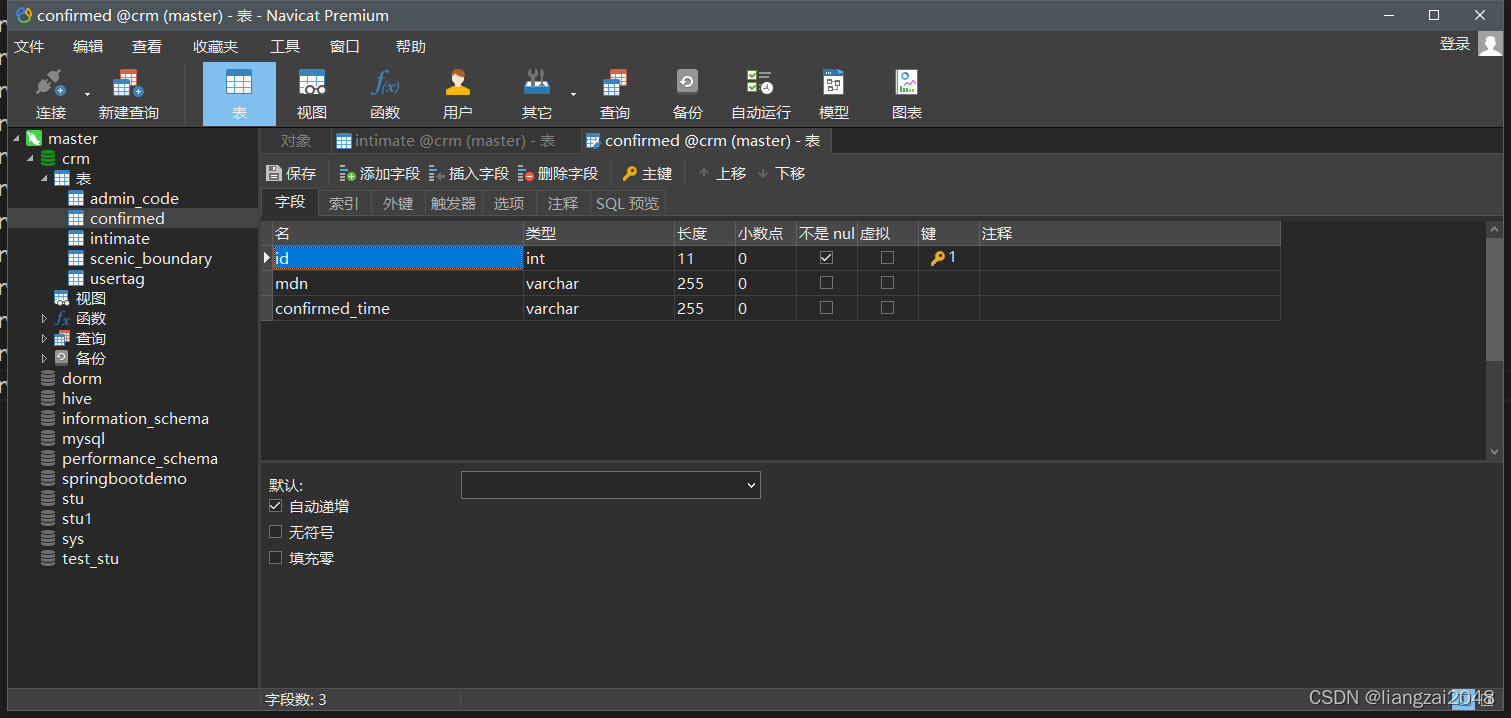

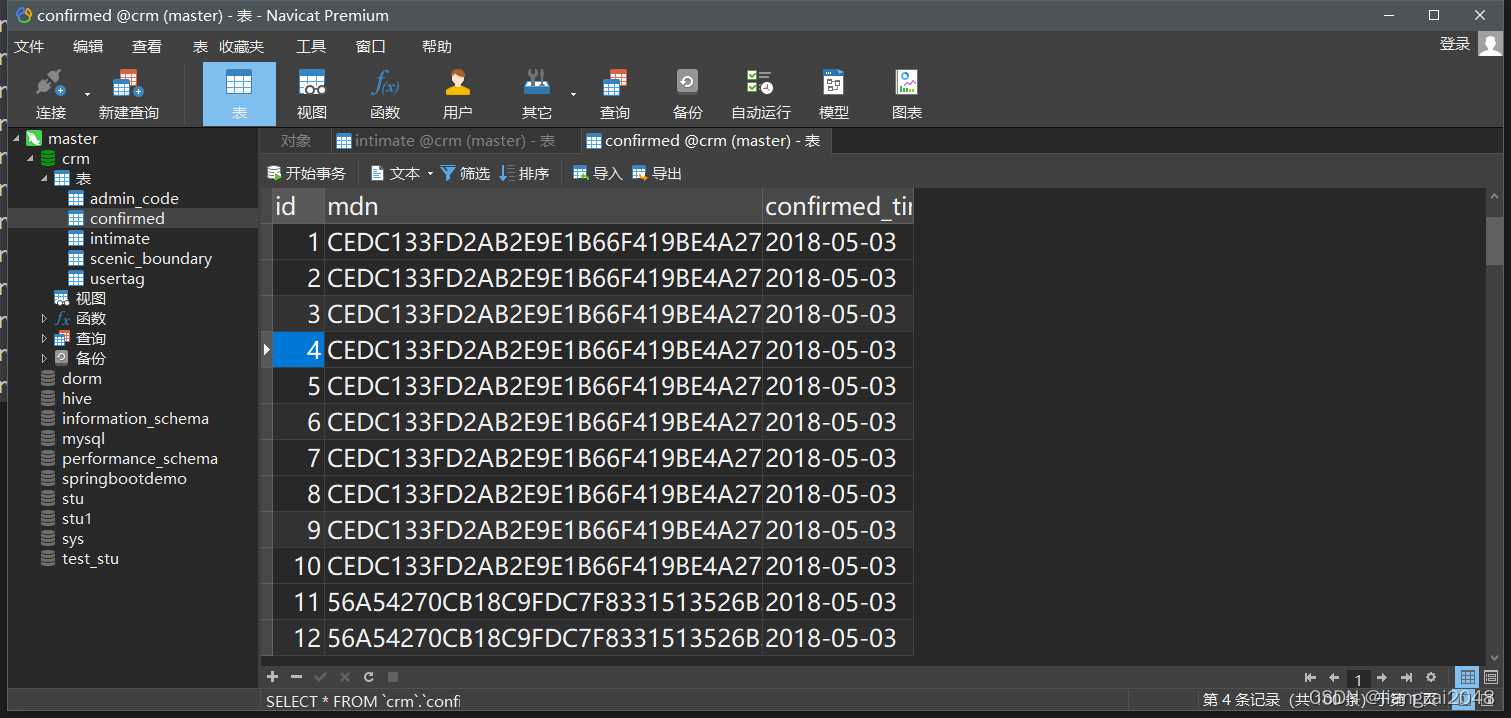

Create the confirmed table in MySQL (confirmed cases in the epidemic)

Insert data into the confirmed table

insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("CEDC133FD2AB2E9E1B66F419BE4A27FB","2018-05-03");insert into confirmed (mdn,confirmed_time) values("56A54270CB18C9FDC7F8331513526B3F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("56A54270CB18C9FDC7F8331513526B3F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("56A54270CB18C9FDC7F8331513526B3F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("63D1926013BE71837415DD735C5DE59C","2018-05-03");insert into confirmed (mdn,confirmed_time) values("63D1926013BE71837415DD735C5DE59C","2018-05-03");insert into confirmed (mdn,confirmed_time) values("63D1926013BE71837415DD735C5DE59C","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into 
confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B27CF1A7FED8650F601A6DB3708A5F10","2018-05-03");insert into confirmed (mdn,confirmed_time) values("3B725237D68D29843A036E5E14ABF129","2018-05-03");insert into confirmed (mdn,confirmed_time) values("3B725237D68D29843A036E5E14ABF129","2018-05-03");insert into confirmed (mdn,confirmed_time) values("3B725237D68D29843A036E5E14ABF129","2018-05-03");insert into confirmed (mdn,confirmed_time) values("3B725237D68D29843A036E5E14ABF129","2018-05-03");insert into confirmed (mdn,confirmed_time) values("92F5DFD7BBC8C9A691FB78F590C0CC49","2018-05-03");insert into confirmed (mdn,confirmed_time) values("8985508EC4F1125B9255C3C266BCF92E","2018-05-03");insert into confirmed (mdn,confirmed_time) values("8985508EC4F1125B9255C3C266BCF92E","2018-05-03");insert into confirmed 
(mdn,confirmed_time) values("9A756E56B7861780D8180A1D508A84F3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("9A756E56B7861780D8180A1D508A84F3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("9A756E56B7861780D8180A1D508A84F3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("9A756E56B7861780D8180A1D508A84F3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C7D5730F0F655987C02A0E8281F1BA29","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C7D5730F0F655987C02A0E8281F1BA29","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C7D5730F0F655987C02A0E8281F1BA29","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B8BF953999758B9E4D92BA8F5C6DC899","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B8BF953999758B9E4D92BA8F5C6DC899","2018-05-03");insert into confirmed (mdn,confirmed_time) values("B8BF953999758B9E4D92BA8F5C6DC899","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F4B24D0D2CB102F72B93D69D68DBC8C6","2018-05-03");insert into confirmed (mdn,confirmed_time) values("217487EA19A42DC0A150E4275F8897B9","2018-05-03");insert into confirmed (mdn,confirmed_time) values("217487EA19A42DC0A150E4275F8897B9","2018-05-03");insert into confirmed 
(mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F5223784F4FB35D129ABBD8570C487B3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("F3AA00F1D22668EE461B21A61A2A45E3","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A89D32C5629CA1B038539D7B9A1BCE7F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A89D32C5629CA1B038539D7B9A1BCE7F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A89D32C5629CA1B038539D7B9A1BCE7F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A9EFA156D6CB1C217AAC87F3A70DB0F7","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A9EFA156D6CB1C217AAC87F3A70DB0F7","2018-05-03");insert into confirmed (mdn,confirmed_time) values("A9EFA156D6CB1C217AAC87F3A70DB0F7","2018-05-03");insert into confirmed (mdn,confirmed_time) values("06C01770754A2AF2B796CCF158BA0049","2018-05-03");insert into confirmed (mdn,confirmed_time) values("252B7A074A59FDEF26E72484F02AA915","2018-05-03");insert into confirmed (mdn,confirmed_time) values("252B7A074A59FDEF26E72484F02AA915","2018-05-03");insert into confirmed (mdn,confirmed_time) values("1F085F951C3B4E783C1373431E09FD0F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("1F085F951C3B4E783C1373431E09FD0F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("1F085F951C3B4E783C1373431E09FD0F","2018-05-03");insert into confirmed (mdn,confirmed_time) values("D5C8CBEB8762FEB815E7709B32EB5E82","2018-05-03");insert into confirmed 
(mdn,confirmed_time) values("D5C8CBEB8762FEB815E7709B32EB5E82","2018-05-03");insert into confirmed (mdn,confirmed_time) values("D5C8CBEB8762FEB815E7709B32EB5E82","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C309E7CFBEE0635CB081C56A25A3B816","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");insert into confirmed (mdn,confirmed_time) values("C33E6AB1DA0E28E02380DFD05D486ADA","2018-05-03");

Spatio-temporal companion calculation

Create the dws module

Create the com.ctyun.dws package

Import the Spark dependencies in the dws module

    <dependencies>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-compiler</artifactId>
        </dependency>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-reflect</artifactId>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_${scala.library.version}</artifactId>
        </dependency>
        <dependency>
            <groupId>com.ctyun</groupId>
            <artifactId>common</artifactId>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
            </plugin>
            <plugin>
                <groupId>org.scala-tools</groupId>
                <artifactId>maven-scala-plugin</artifactId>
            </plugin>
        </plugins>
    </build>

Create the SpacetimeAdjoint class

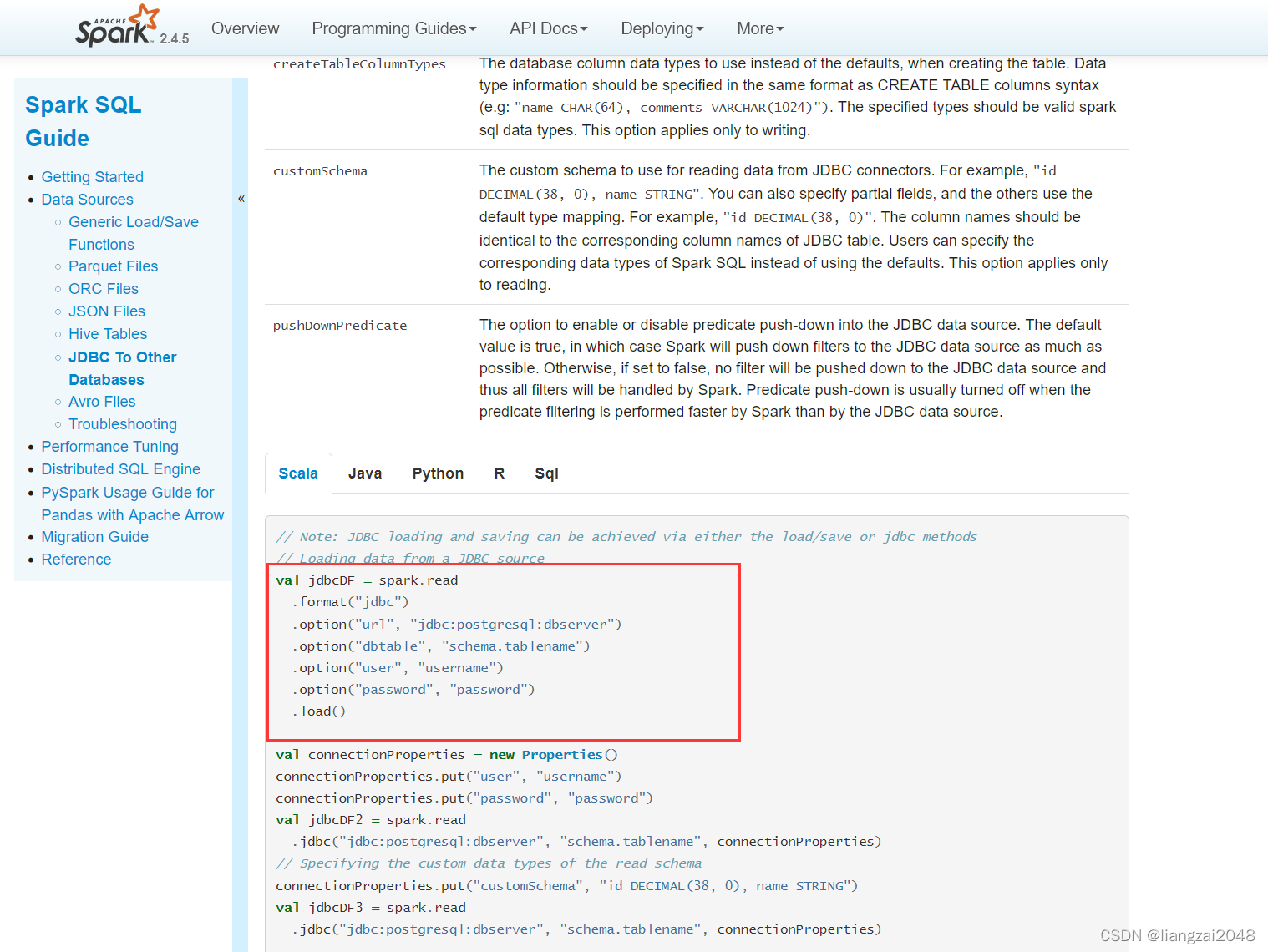

Visit the official Spark website and take the JDBC connection code from there

Create and edit DateUtil in common

    package com.ctyun.utils

    import java.text.SimpleDateFormat

    object DateUtil {

      /** Absolute difference between two yyyyMMddHHmmss time strings, in seconds. */
      def diff(date1: String, date2: String): Long = {
        // Parser for the time strings
        val format = new SimpleDateFormat("yyyyMMddHHmmss")

        // Timestamps in milliseconds
        val time1: Long = format.parse(date1).getTime
        val time2: Long = format.parse(date2).getTime

        Math.abs(time1 - time2) / 1000
      }

      def main(args: Array[String]): Unit = {
        println(diff("20180503100029", "20180503100050"))
      }
    }

Code optimization

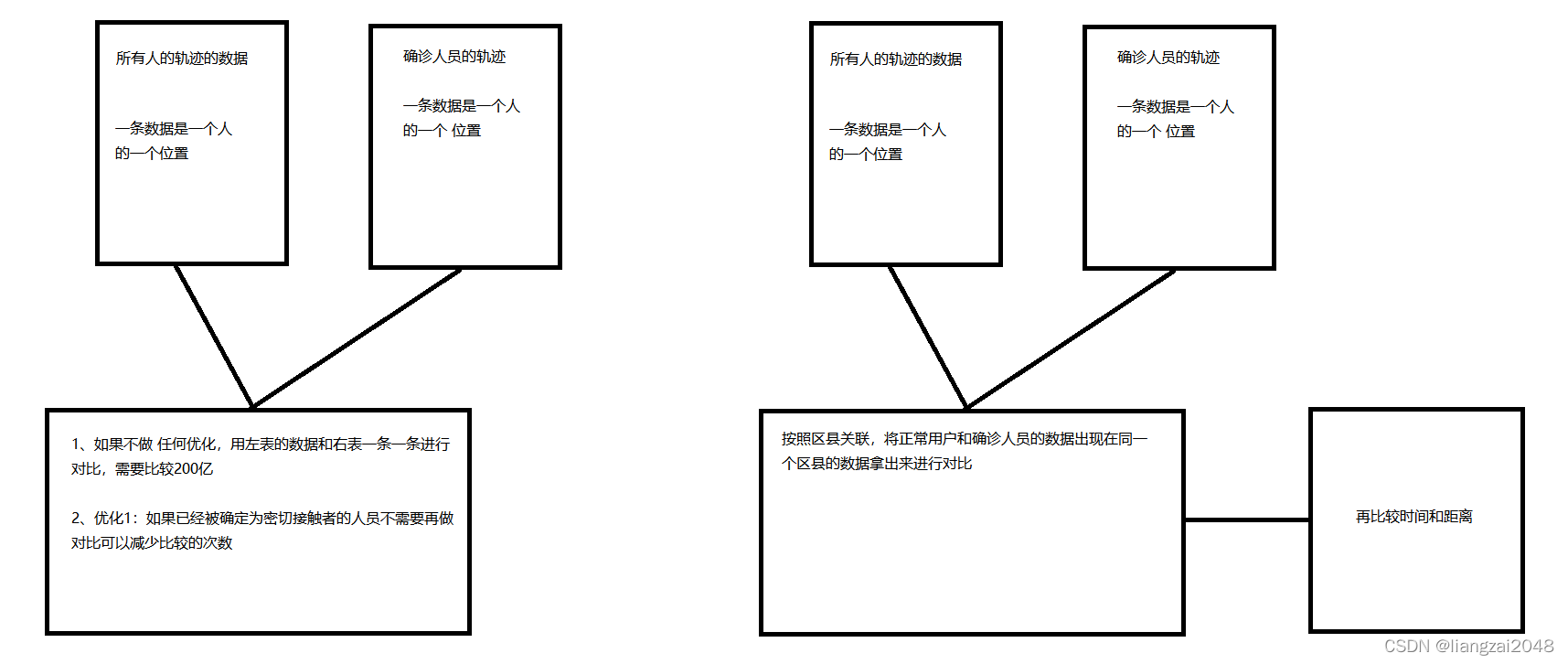

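The code here performs a Cartesian product and needs to be optimized.

    # If the job runs too slowly, kill it with:
    yarn application -kill application_1649504042939_0009

The job has finished running here, but it needs further tuning

Edit the SpacetimeAdjoint class (optimized)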

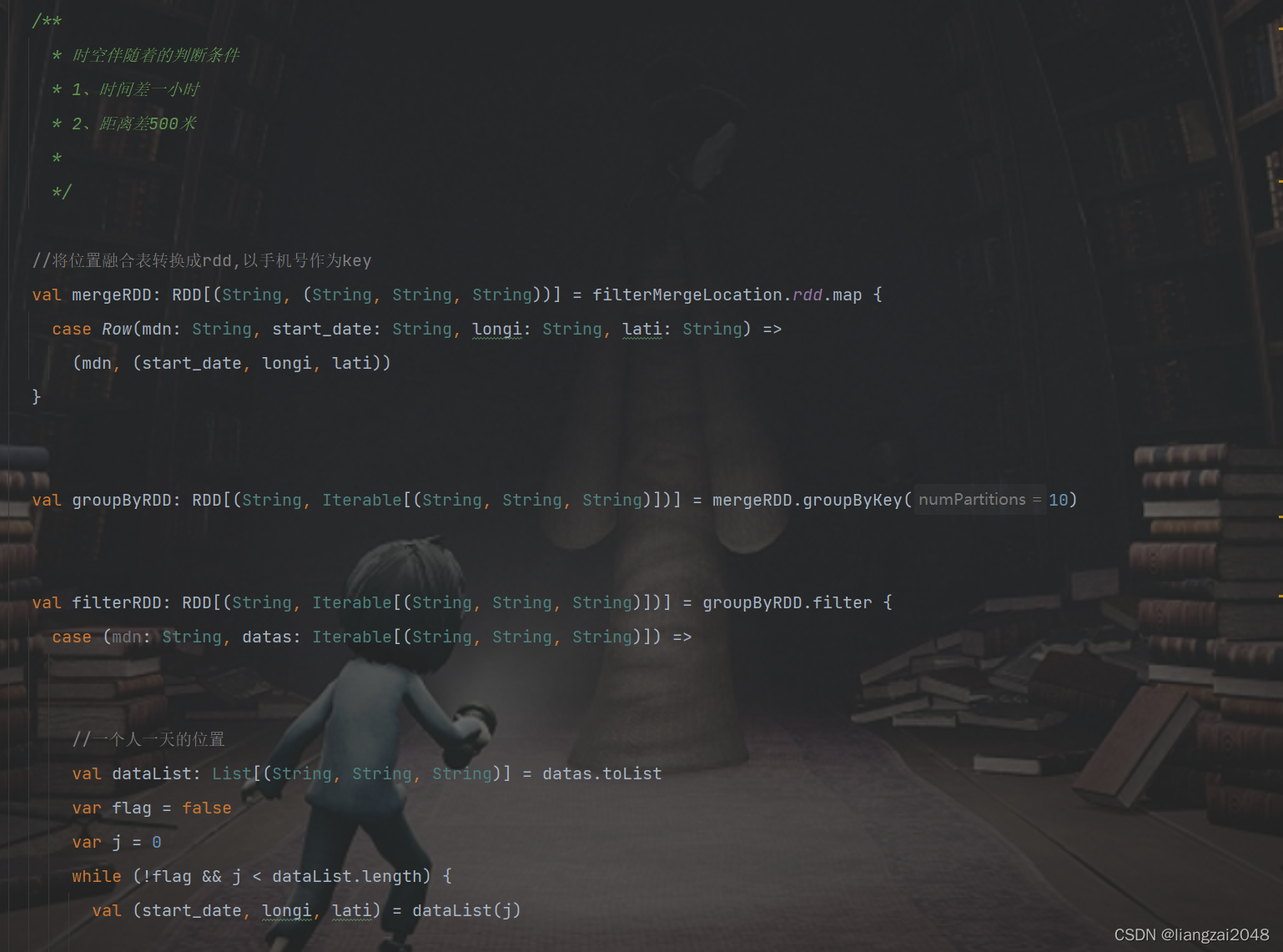

    package com.ctyun.dws

    import com.ctyun.utils.{DateUtil, Geography, SparkTool}
    import org.apache.spark.broadcast.Broadcast
    import org.apache.spark.rdd.RDD
    import org.apache.spark.sql._
    import org.apache.spark.storage.StorageLevel

    object SpacetimeAdjoint extends SparkTool {
      override def run(spark: SparkSession): Unit = {
        import spark.implicits._

        // 1. Read the merged location table
        val mergeLocation: DataFrame = spark
          .table("dwi.dwi_res_regn_mergelocation_msk_d")
          .where($"day_id" === day_id)

        // 2. Read the confirmed-case list and the close-contact list from MySQL over JDBC
        // Confirmed-case list
        val confirmed: DataFrame = spark
          .read
          .format("jdbc")
          .option("url", "jdbc:mysql://master:3306")
          .option("dbtable", "crm.confirmed")
          .option("user", "root")
          .option("password", "123456")
          .load()

        // Close-contact list
        val intimate: DataFrame = spark
          .read
          .format("jdbc")
          .option("url", "jdbc:mysql://master:3306")
          .option("dbtable", "crm.intimate")
          .option("user", "root")
          .option("password", "123456")
          .load()

        /**
         * 1. Get the movement trajectories of the confirmed cases
         */
        val confirmedLocation: DataFrame = mergeLocation.join(confirmed, "mdn")

        // Trajectory points of the confirmed cases, collected to the driver
        val confirmedLocationArray: Array[(String, String, String, String)] = confirmedLocation
          .select($"mdn", $"start_date", $"longi", $"lati")
          .as[(String, String, String, String)]
          .collect()

        // Broadcast the confirmed trajectories to the executors
        val confirmedLocationArraybro: Broadcast[Array[(String, String, String, String)]] =
          spark.sparkContext.broadcast(confirmedLocationArray)

        // Phone numbers of the confirmed cases
        val confirmedMdns: Array[String] = confirmed.select($"mdn").as[String].collect()

        // Filter out people who are already confirmed
        val filterMergeLocation: DataFrame = mergeLocation
          .select($"mdn", $"start_date", $"longi", $"lati")
          .filter(row => {
            val mdn: String = row.getAs[String]("mdn")
            !confirmedMdns.contains(mdn)
          })

        /**
         * Conditions for a spatio-temporal companion:
         * 1. time difference under one hour
         * 2. distance under 500 meters
         */

        // Convert the merged location table to an RDD keyed by phone number
        val mergeRDD: RDD[(String, (String, String, String))] = filterMergeLocation.rdd.map {
          case Row(mdn: String, start_date: String, longi: String, lati: String) =>
            (mdn, (start_date, longi, lati))
        }

        val groupByRDD: RDD[(String, Iterable[(String, String, String)])] = mergeRDD.groupByKey(10)

        val filterRDD: RDD[(String, Iterable[(String, String, String)])] = groupByRDD.filter {
          case (mdn: String, datas: Iterable[(String, String, String)]) =>
            // All positions of one person for one day
            val dataList: List[(String, String, String)] = datas.toList
            val confirmedLocationArray: Array[(String, String, String, String)] = confirmedLocationArraybro.value

            var flag = false
            var j = 0
            // Stop at the first point that matches any confirmed point
            while (!flag && j < dataList.length) {
              val (start_date, longi, lati) = dataList(j)
              var i = 0
              while (!flag && i < confirmedLocationArray.length) {
                val (confirmedMdn, confirmedStart_date, confirmedLongi, confirmedLati) = confirmedLocationArray(i)

                // 1. Time difference in seconds
                val diff: Long = DateUtil.diff(start_date, confirmedStart_date)
                if (diff < 3600) {
                  // 2. Distance between the two points
                  val length: Double = Geography.calculateLength(
                    longi.toDouble, lati.toDouble, confirmedLongi.toDouble, confirmedLati.toDouble)
                  if (length < 500) {
                    flag = true
                  }
                }
                i += 1
              }
              j += 1
            }
            // Keep only people with at least one matching point
            flag
        }

        // Expand the grouped data back into one row per point
        val resultRDD: RDD[(String, String, String, String)] = filterRDD.flatMap {
          case (mdn: String, datas: Iterable[(String, String, String)]) =>
            datas.map {
              case (start_date, longi, lati) => (mdn, start_date, longi, lati)
            }
        }

        val filterDF: DataFrame = resultRDD.toDF("mdn", "start_date", "longi", "lati")

        //filterDF.persist(StorageLevel.MEMORY_AND_DISK_SER)

        // Save the close-contact trajectory records
        filterDF
          .write
          .format("csv")
          .mode(SaveMode.Overwrite)
          .option("sep", "\t")
          .save(s"/daas/motl/dws/spacetime_adjoint/day_id=$day_id")

        // Extract the phone numbers of the close contacts
        // filterDF
        //   .select("mdn")
        //   .distinct()
        //   .write
        //   .format("csv")
        //   .mode(SaveMode.Overwrite)
        //   .option("sep", "\t")
        //   .save(s"/daas/motl/dws/spacetime_adjoint_mdns/day_id=$day_id")
      }
    }

Write the spacetime-adjoint.sh script in the dws layer

    #!/usr/bin/env bash
    #*
    # File name:   spacetime-adjoint.sh
    # Created:     2022-04-10
    # Author:      liangzai
    # Input:       oidd
    # Output:      spatio-temporal companions
    #
    # Description: spatio-temporal companion calculation
    # Processing:
    # Copyright(c) 2016 TianYi Cloud Technologies (China), Inc.
    # All Rights Reserved.
    #*
    #*
    #== Modified on ==|=== Modified by ===|======================================================|
    #
    #*

    # Date parameter
    day_id=$1

    spark-submit \
      --master yarn-client \
      --num-executors 1 \
      --executor-cores 4 \
      --executor-memory 6G \
      --conf spark.sql.shuffle.partitions=10 \
      --class com.ctyun.dws.SpacetimeAdjoint \
      --jars common-1.0.jar \
      dws-1.0.jar $day_id

Grant the dws user access to the dwi layer

    hdfs dfs -setfacl -R -m user:dws:r-x /daas/motl/dwi/

Upload the packages and run the spacetime-adjoint.sh script

    sh spacetime-adjoint.sh 20220409

View the result of the spacetime-adjoint.sh run

hadoop dfs -cat /daas/motl/dws/spacetime_adjoint/day_id=20220409/part-00000-a7bb9404-4d01-4410-a583-e07b0cce2e68-c000.csv
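The matching rule inside SpacetimeAdjoint (time difference under one hour, distance under 500 meters) can be tried out without a cluster. The following is only a minimal sketch in plain Scala: `CompanionRule`, `Point`, `isCompanion` and `touchesAny` are hypothetical names, and `distanceMeters` is a haversine formula assumed as a stand-in for `Geography.calculateLength`, whose implementation is not shown in this section.

```scala
import java.time.{Duration, LocalDateTime}
import java.time.format.DateTimeFormatter

object CompanionRule {
  // One trajectory point: phone-number hash, start time (yyyyMMddHHmmss), longitude, latitude.
  final case class Point(mdn: String, startDate: String, longi: Double, lati: Double)

  private val fmt = DateTimeFormatter.ofPattern("yyyyMMddHHmmss")

  // Absolute difference between two time strings, in seconds.
  def diffSeconds(a: String, b: String): Long =
    math.abs(Duration.between(LocalDateTime.parse(a, fmt), LocalDateTime.parse(b, fmt)).getSeconds)

  // Haversine great-circle distance in meters.
  // Assumption: used here in place of Geography.calculateLength.
  def distanceMeters(lon1: Double, lat1: Double, lon2: Double, lat2: Double): Double = {
    val earthRadius = 6371000.0 // mean Earth radius in meters
    val dLat = math.toRadians(lat2 - lat1)
    val dLon = math.toRadians(lon2 - lon1)
    val a = math.pow(math.sin(dLat / 2), 2) +
      math.cos(math.toRadians(lat1)) * math.cos(math.toRadians(lat2)) *
        math.pow(math.sin(dLon / 2), 2)
    2 * earthRadius * math.asin(math.sqrt(a))
  }

  // Rule from the comments in SpacetimeAdjoint: under 3600 s apart and under 500 m apart.
  def isCompanion(p: Point, c: Point): Boolean =
    diffSeconds(p.startDate, c.startDate) < 3600 &&
      distanceMeters(p.longi, p.lati, c.longi, c.lati) < 500

  // True as soon as one point of the track matches one confirmed point;
  // `exists` stops early, like the `flag` variable in the while loops.
  def touchesAny(track: Seq[Point], confirmed: Seq[Point]): Boolean =
    track.exists(p => confirmed.exists(c => isCompanion(p, c)))
}
```

For example, `CompanionRule.diffSeconds("20180503100029", "20180503100050")` gives 21, the same pair of timestamps used in `DateUtil.main`.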

Further optimization

Add a code block to SparkTool

    // Imports needed at the top of SparkTool
    import org.apache.spark.sql.expressions.UserDefinedFunction
    import org.apache.spark.sql.functions.udf

    /**
     * Spark user-defined functions
     */
    // Distance between two longitude/latitude points
    val calculateLength: UserDefinedFunction = udf((longi: String, lati: String, last_longi: String, last_lati: String) => {
      Geography.calculateLength(longi.toDouble, lati.toDouble, last_longi.toDouble, last_lati.toDouble)
    })

The optimized SpacetimeAdjoint code

    package com.ctyun.dws

    import com.ctyun.utils.{DateUtil, Geography, SparkTool}
    import org.apache.spark.broadcast.Broadcast
    import org.apache.spark.rdd.RDD
    import org.apache.spark.sql._
    import org.apache.spark.storage.StorageLevel

    object SpacetimeAdjoint extends SparkTool {
      override def run(spark: SparkSession): Unit = {
        import spark.implicits._
        import org.apache.spark.sql.functions._

        // 1. Read the merged location table
        val mergeLocation: DataFrame = spark
          .table("dwi.dwi_res_regn_mergelocation_msk_d")
          .where($"day_id" === day_id)

        // 2. Read the confirmed-case list from MySQL over JDBC
        val confirmed: DataFrame = spark
          .read
          .format("jdbc")
          .option("url", "jdbc:mysql://master:3306")
          .option("dbtable", "crm.confirmed")
          .option("user", "root")
          .option("password", "123456")
          .load()

        /**
         * 1. Get the movement trajectories of the confirmed cases
         */
        val confirmedLocation: DataFrame = mergeLocation
          .join(confirmed.hint("broadcast"), "mdn")
          .select(
            $"mdn" as "confirmed_mdn",
            $"start_date" as "confirmed_start_date",
            $"longi" as "confirmed_longi",
            $"lati" as "confirmed_lati",
            $"county_id")

        /**
         * Conditions for a spatio-temporal companion:
         * 1. time difference under one hour
         * 2. distance under 500 meters
         */
        // Join on county so that only points in the same county are compared
        val filterDF: DataFrame = mergeLocation
          .join(confirmedLocation.hint("broadcast"), "county_id")
          // Exclude the confirmed person's own points
          .where($"confirmed_mdn" =!= $"mdn")
          // 1. Time difference between the user's point and the confirmed point, in seconds
          .withColumn("diff", abs(unix_timestamp($"confirmed_start_date", "yyyyMMddHHmmss")
            - unix_timestamp($"start_date", "yyyyMMddHHmmss")))
          .where($"diff" < 3600)
          // 2. Distance between the two points
          .withColumn("length", calculateLength($"confirmed_longi", $"confirmed_lati", $"longi", $"lati"))
          .where($"length" < 500)
          .select($"mdn", $"start_date", $"end_date", $"county_id", $"longi", $"lati", $"bsid", $"grid_id")

        // The result is written twice below, so cache it
        filterDF.persist(StorageLevel.MEMORY_AND_DISK_SER)

        // Save the close-contact trajectory records
        filterDF
          .write
          .format("csv")
          .mode(SaveMode.Overwrite)
          .option("sep", "\t")
          .save(s"/daas/motl/dws/spacetime_adjoint/day_id=$day_id")

        // Extract the phone numbers of the close contacts
        filterDF
          .select("mdn")
          .distinct()
          .write
          .format("csv")
          .mode(SaveMode.Overwrite)
          .option("sep", "\t")
          .save(s"/daas/motl/dws/spacetime_adjoint_mdns/day_id=$day_id")
      }
    }

Package, upload, and run the spacetime-adjoint.sh script

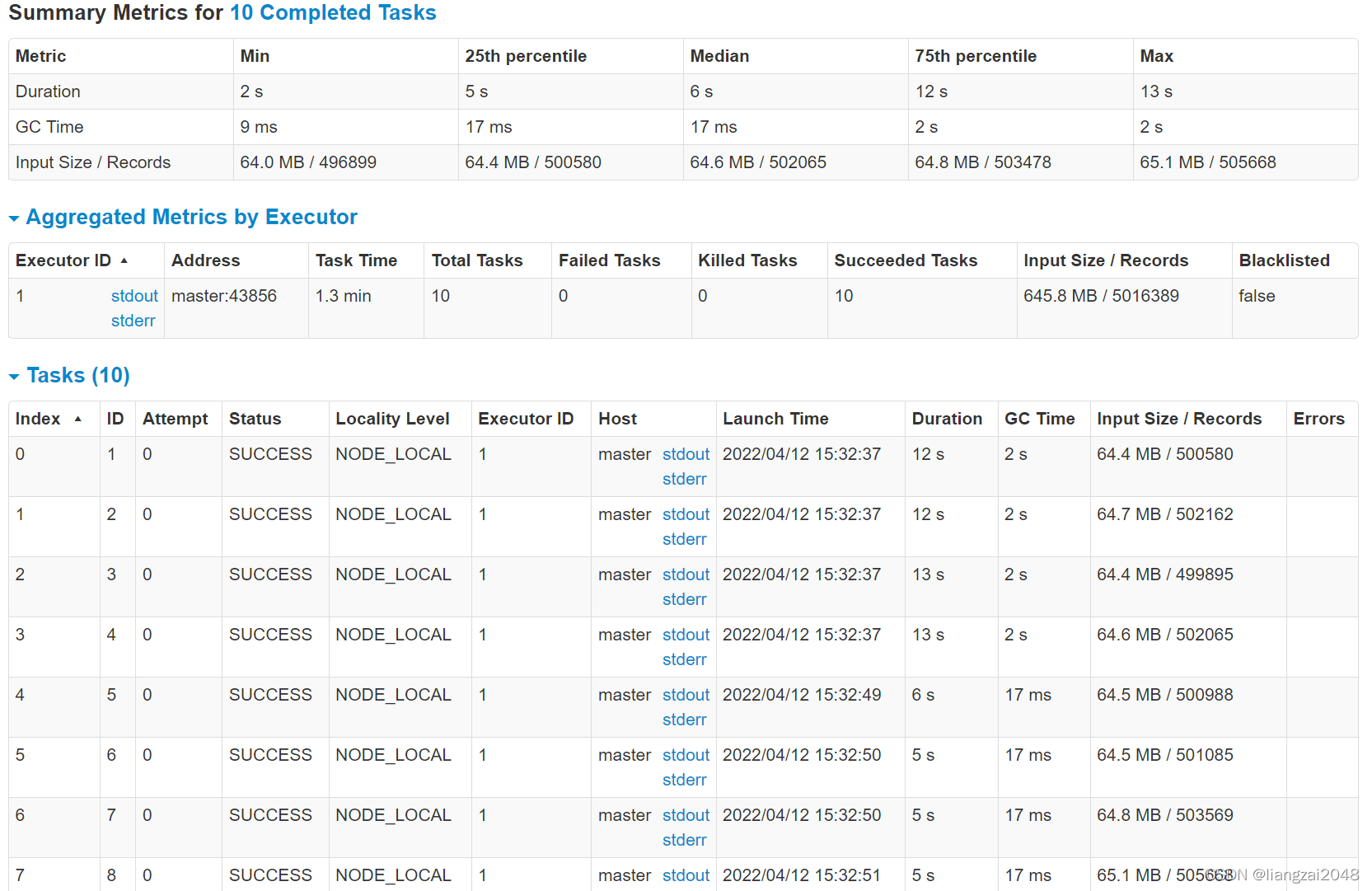

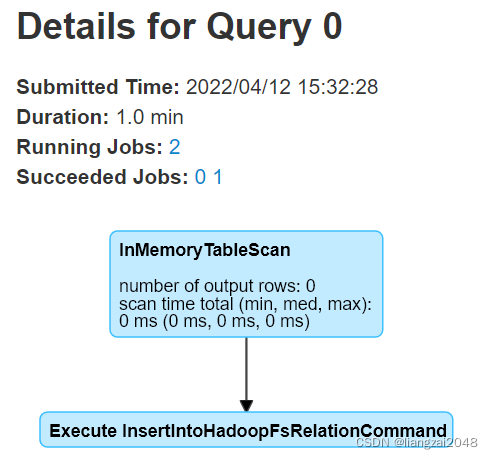

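    sh spacetime-adjoint.sh 20220409

Open master:8088 and master:4040 to check the running status

After the optimization the job runs noticeably faster, because it performs a join rather than a Cartesian product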
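Why the join on `county_id` helps can be illustrated by counting candidate pairs. This is a plain-Scala sketch rather than the Spark job itself; `CountyJoinSketch`, `Point`, `cartesianPairs` and `countyPairs` are hypothetical names introduced only for the illustration.

```scala
object CountyJoinSketch {
  // A trajectory point reduced to the two fields that matter for the pairing.
  final case class Point(mdn: String, countyId: String)

  // Cartesian pairing: every user point is paired with every confirmed point.
  def cartesianPairs(users: Seq[Point], confirmed: Seq[Point]): Seq[(Point, Point)] =
    for (u <- users; c <- confirmed) yield (u, c)

  // Keyed pairing: only points that share a county_id are paired (an equi-join).
  def countyPairs(users: Seq[Point], confirmed: Seq[Point]): Seq[(Point, Point)] = {
    val byCounty: Map[String, Seq[Point]] = confirmed.groupBy(_.countyId)
    for {
      u <- users
      c <- byCounty.getOrElse(u.countyId, Seq.empty)
    } yield (u, c)
  }
}
```

With four user points spread over two counties and two confirmed points of which one shares a county with the users, the Cartesian pairing yields 8 candidate pairs and the keyed pairing yields 2; the time and distance checks then run only on those. One consequence of keying on `county_id` is that two points on opposite sides of a county border are never compared, even when they are less than 500 meters apart.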

View the result after optimization

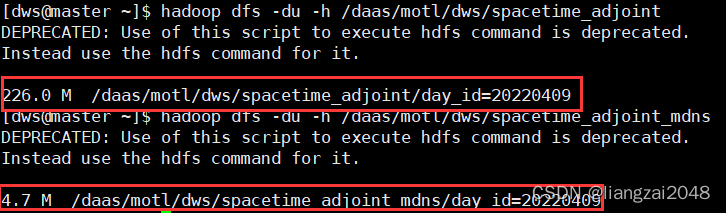

    hadoop dfs -du -h /daas/motl/dws/spacetime_adjoint
    hadoop dfs -du -h /daas/motl/dws/spacetime_adjoint_mdns
    hadoop dfs -cat /daas/motl/dws/spacetime_adjoint_mdns/day_id=20220409/part-00001-a4aa9fe8-5f8e-4a0d-b5a3-7ba98a677e47-c000.csv

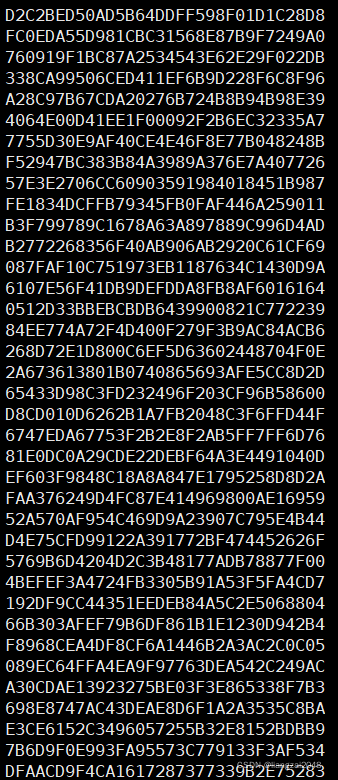

- Phone numbers of the close contacts

As can be seen, the optimized code greatly reduces the redundancy in the data

Chapter 6

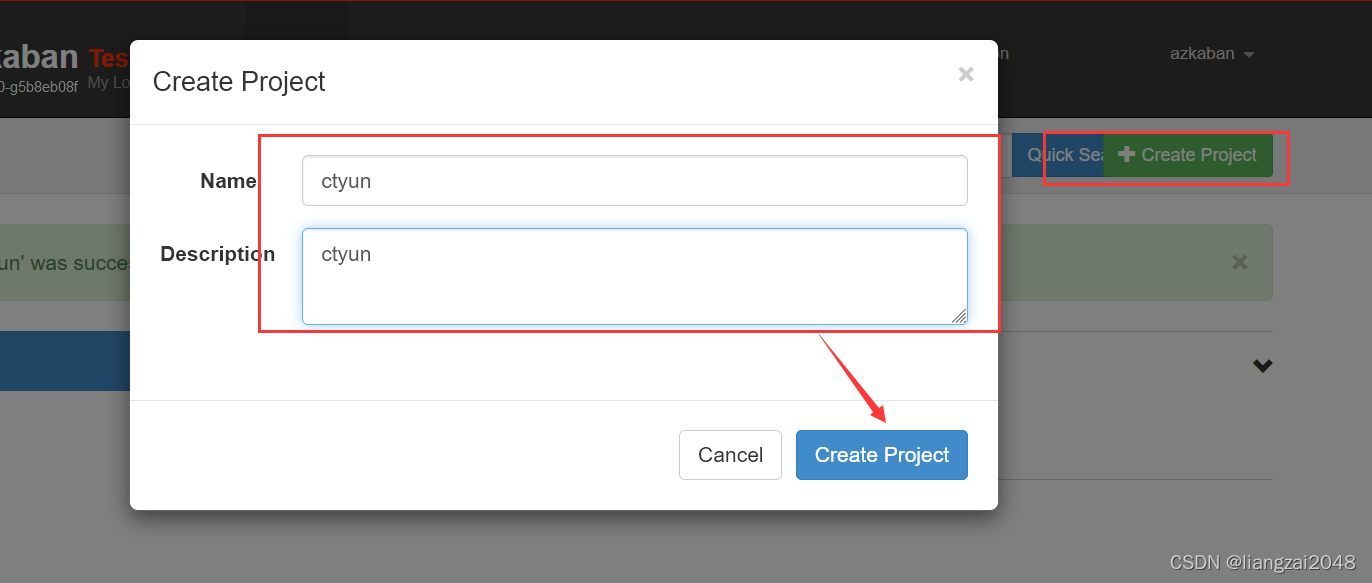

Scheduled execution with Azkaban

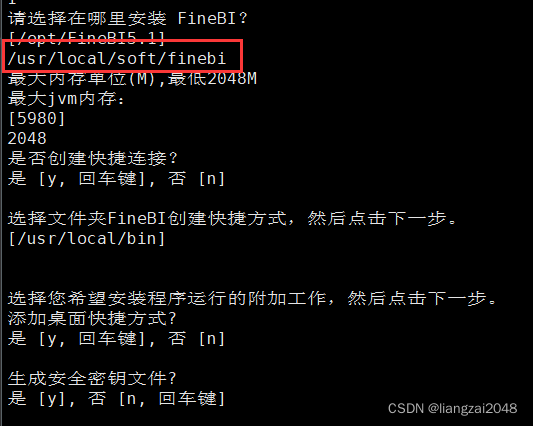

Install and set up Azkaban as root

1. Upload and unzip

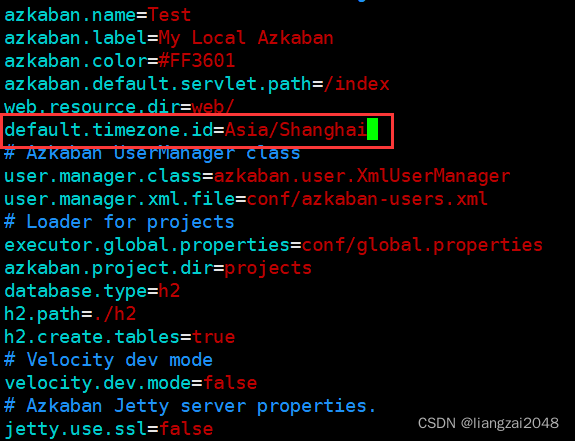

    yum install unzip    # answer y when prompted
    unzip azkaban-solo-server.zip

2. Modify the configuration file

    cd /usr/local/soft/azkaban-solo-server/conf
    vim azkaban.properties

    # Change the time zone
    default.timezone.id=Asia/Shanghai

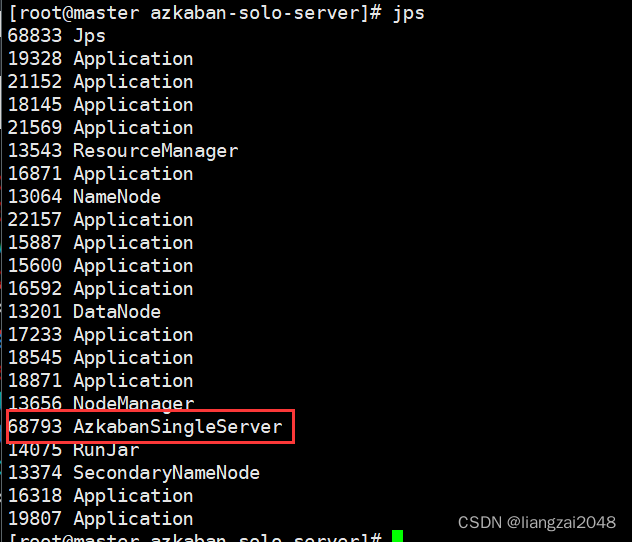

3. Start Azkaban

    cd /usr/local/soft/azkaban-solo-server
    # Start
    ./bin/start-solo.sh
    jps

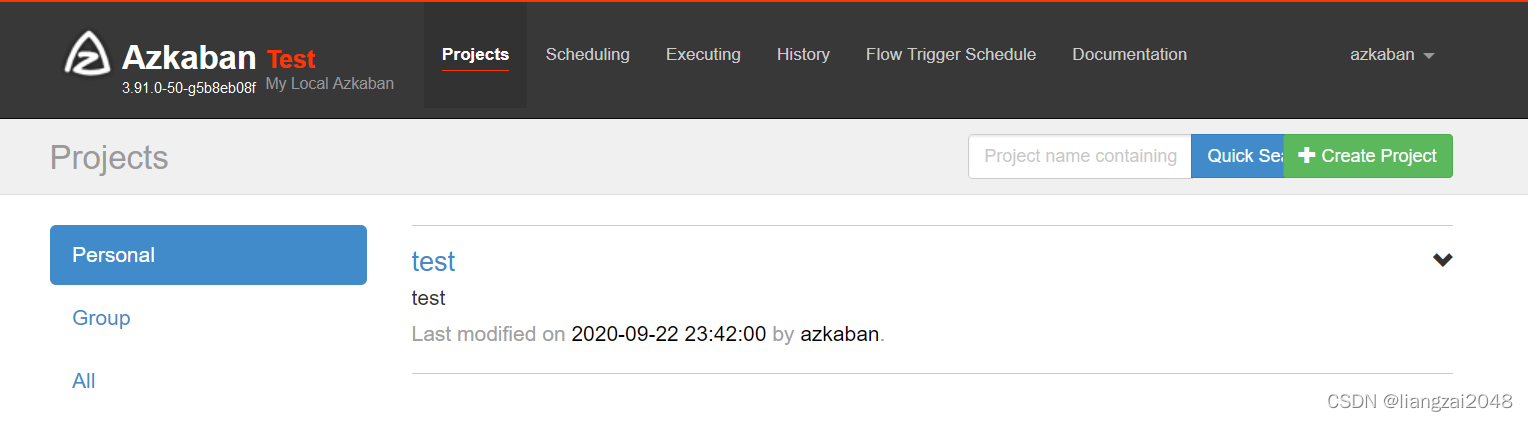

4. Access Azkaban

http://master:8081

Username and password: azkaban/azkaban

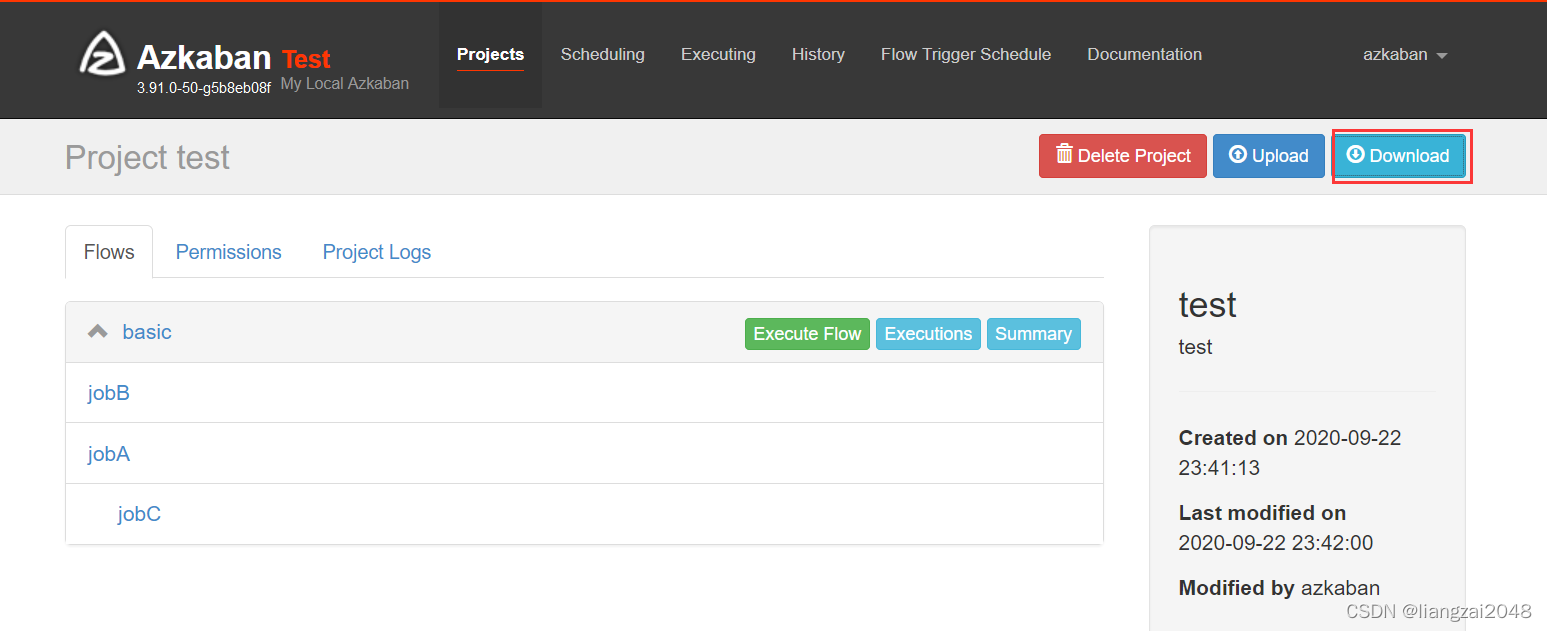

- Download the Azkaban example and look at its configuration files

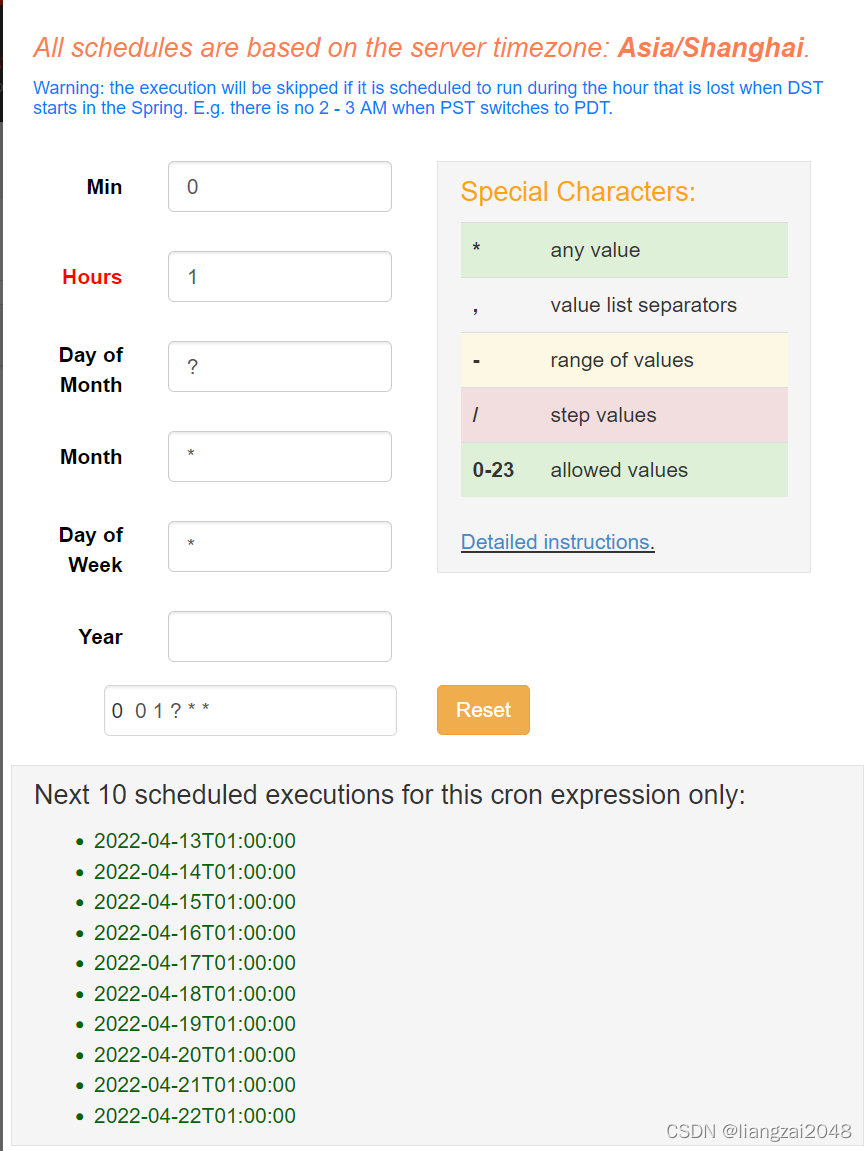

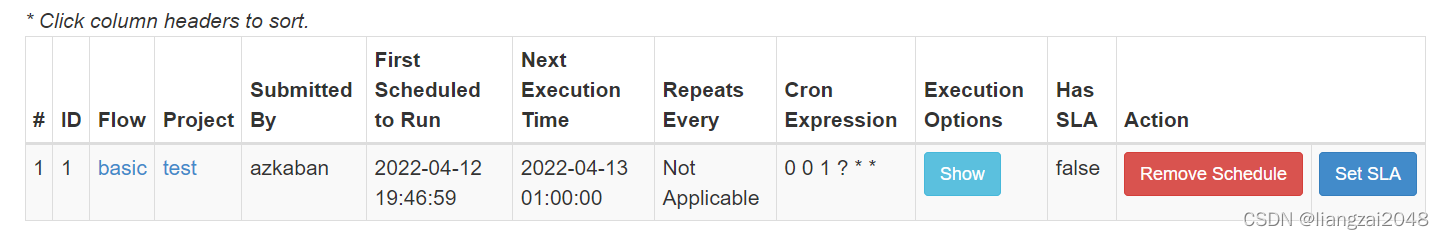

    ---
    config:
      failure.emails: noreply@foo.com

    nodes:
      - name: jobC
        type: noop
        # jobC depends on jobA and jobB
        dependsOn:
          - jobA
          - jobB

      - name: jobA
        type: command
        config:
          command: echo "This is an echoed text."

      - name: jobB
        type: command
        config:
          command: pwd

- Azkaban scheduling policy (run once a day at 01:00)

5. Configure the mail service

    vim conf/azkaban.properties

    # mail.sender    sender address
    # mail.host      address of the mail server
    # mail.user      user name
    # mail.password  authorization code

    # Add the following settings
    mail.sender=987262086@qq.com
    mail.host=smtp.qq.com
    mail.user=987262086@qq.com
    mail.password=aaaaa

6. Restart (stop, then start)

    # Restart Azkaban
    cd /usr/local/soft/azkaban-solo-server
    # Stop
    ./bin/shutdown-solo.sh
    # Start
    ./bin/start-solo.sh

Create a jobs directory under the ctyun directory in IDEA

- Unzip the downloaded Azkaban files into the jobs directory

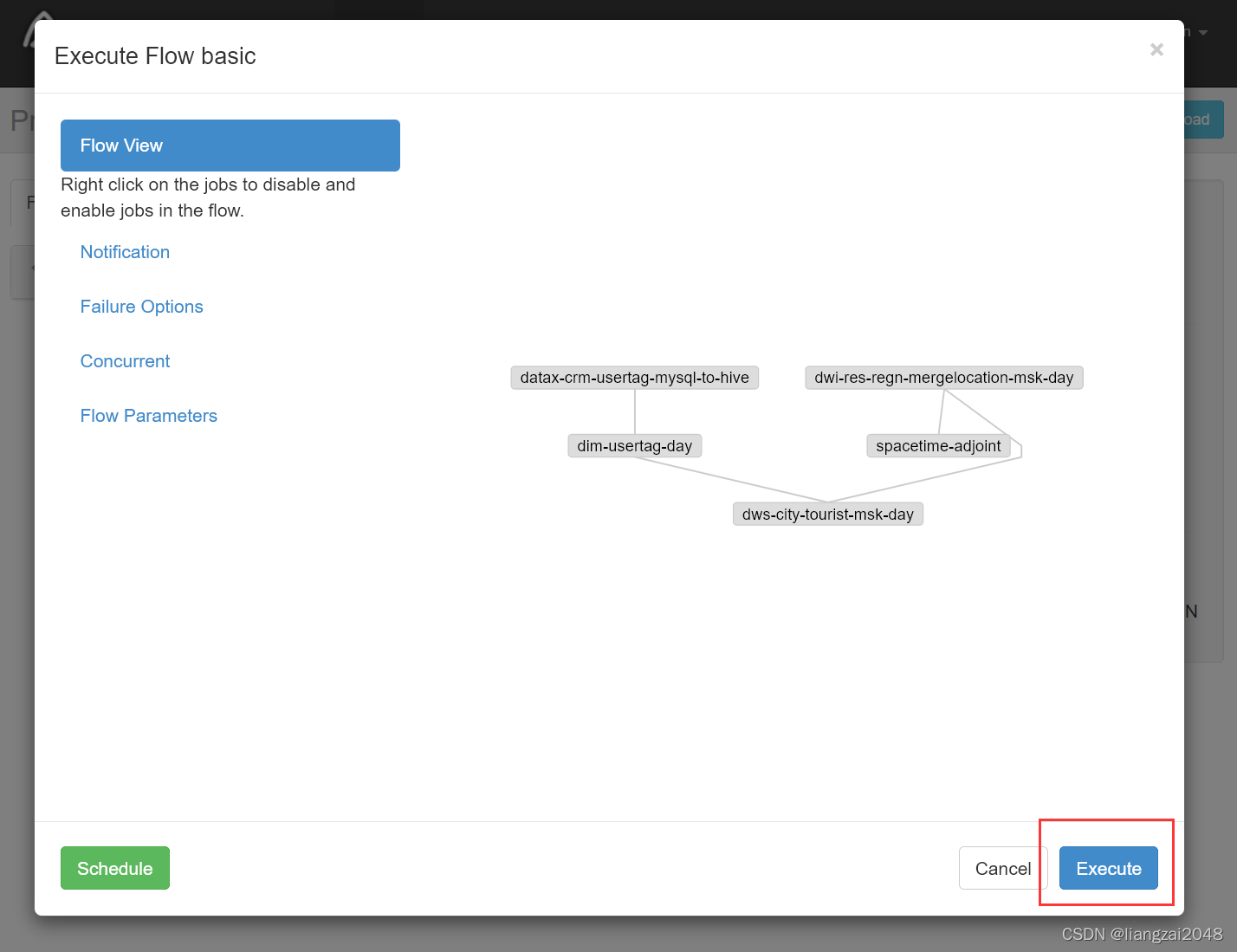

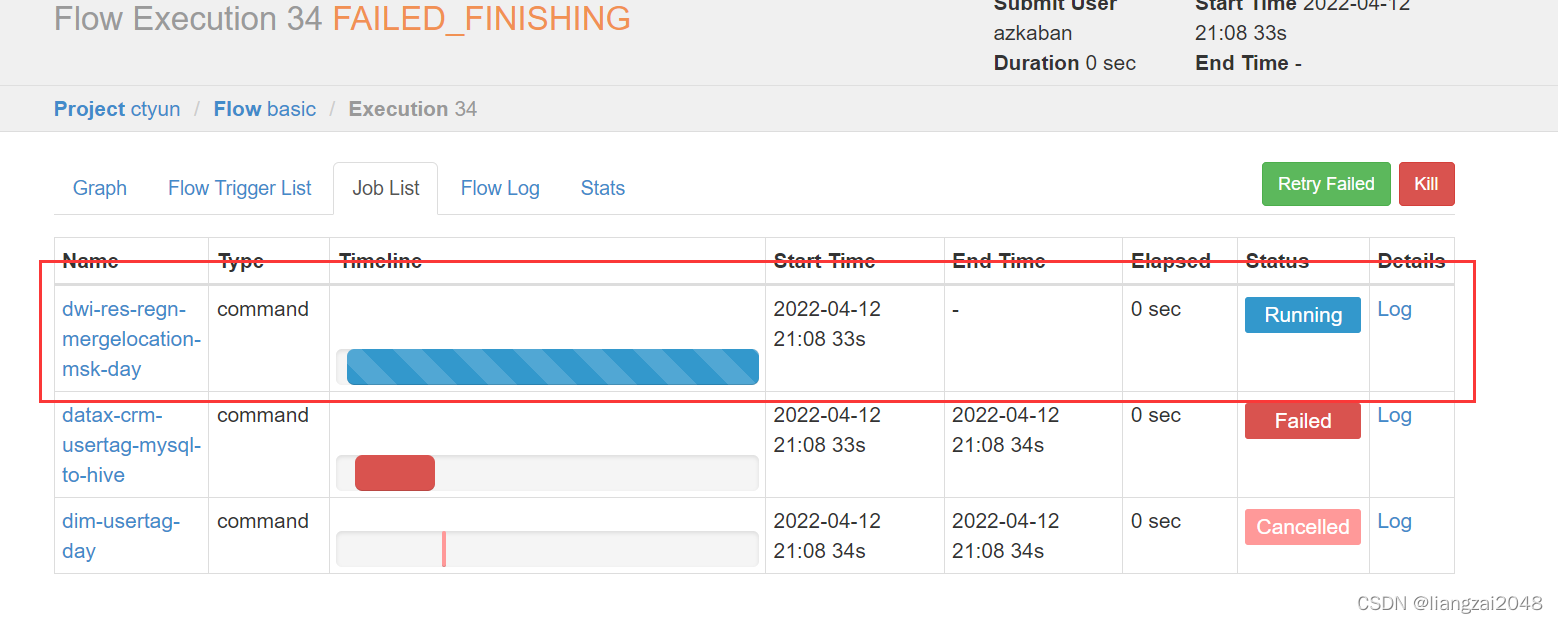

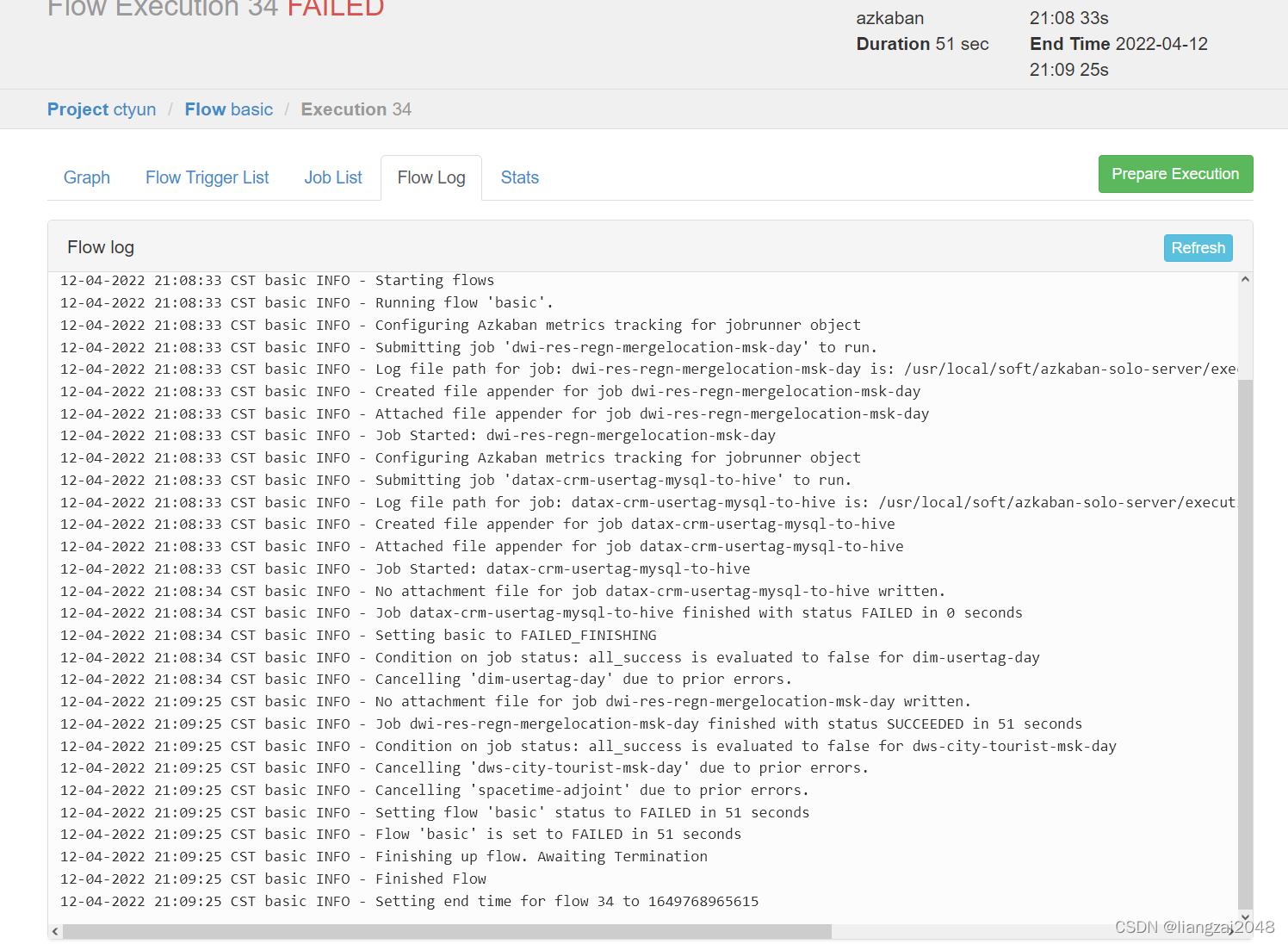

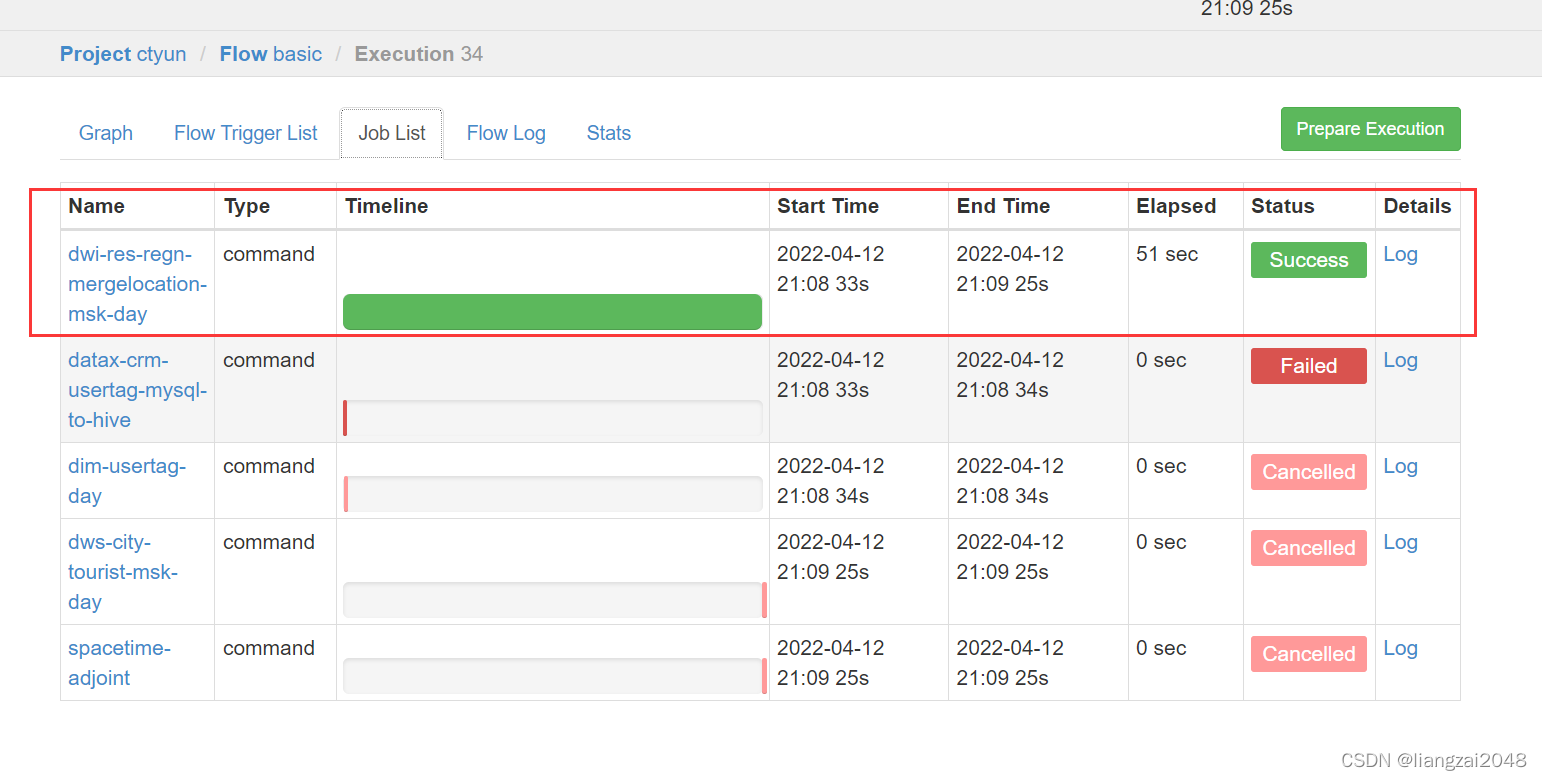

Write basic.flow

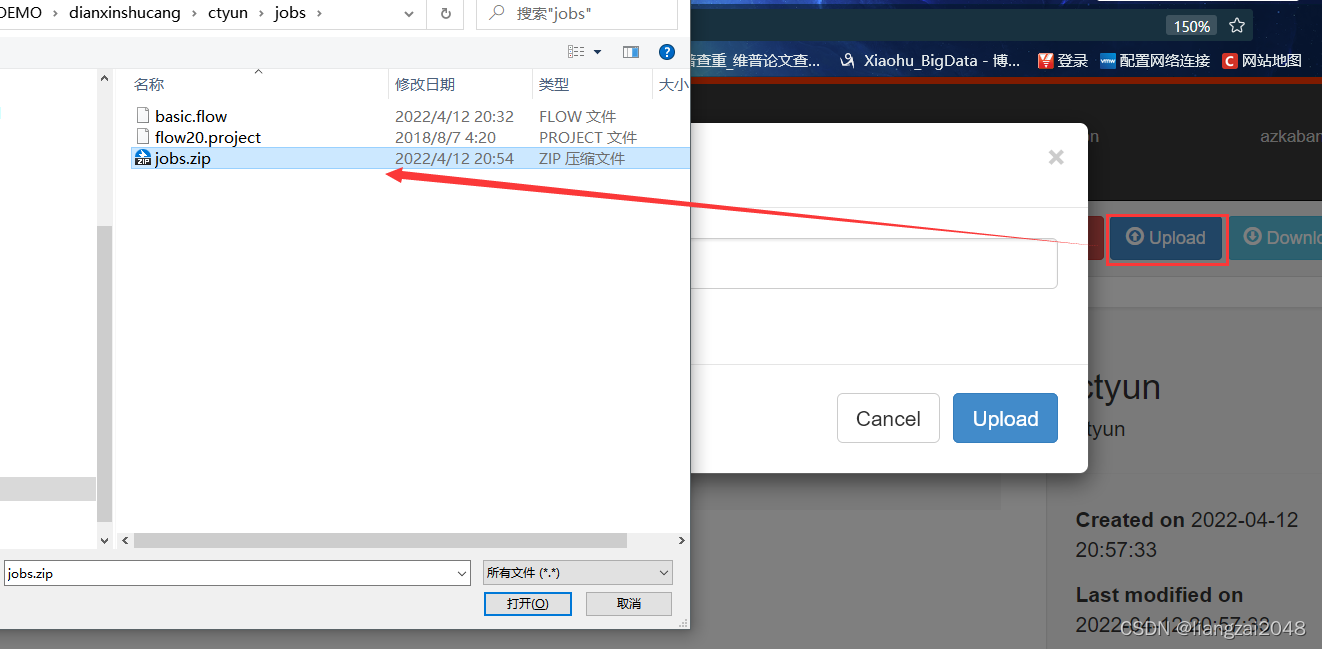

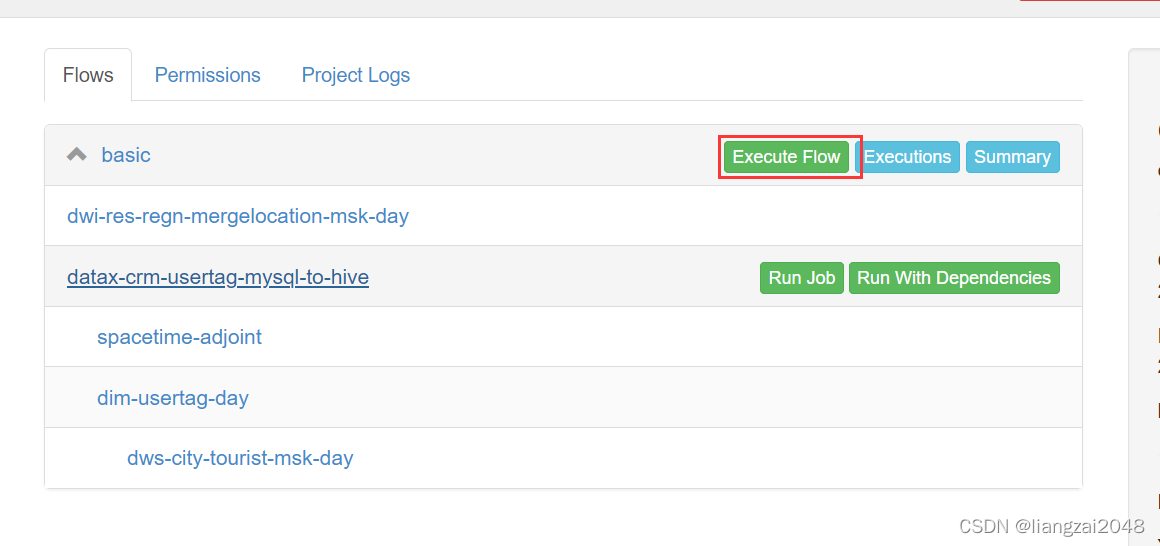

    ---
    config:
      failure.emails: noreply@foo.com
      day_id: $(new("org.joda.time.DateTime").minusDays(1).toString("yyyyMMdd"))

    nodes:
      - name: dwi-res-regn-mergelocation-msk-day
        type: command
        config:
          command: su - dwi -c "sh /home/dwi/dwi-res-regn-mergelocation-msk-day.sh ${day_id}"

      - name: spacetime-adjoint
        type: command
        # spacetime-adjoint depends on dwi-res-regn-mergelocation-msk-day
        config:
          command: su - dws -c "sh /home/dws/spacetime-adjoint.sh ${day_id}"
        dependsOn:
          - dwi-res-regn-mergelocation-msk-day

      - name: datax-crm-usertag-mysql-to-hive
        type: command
        config:
          command: su - ods -c "sh /home/ods/datax-crm-usertag-mysql-to-hive.sh ${day_id}"

      - name: dim-usertag-day
        type: command
        config:
          command: su - dim -c "sh /home/dim/dim-usertag-day.sh ${day_id}"
        dependsOn:
          - datax-crm-usertag-mysql-to-hive

Each command has to switch to the user of its layer with su. Otherwise the script runs as root, and the other users cannot read what it produces.
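The `day_id` value in basic.flow is yesterday's date formatted as yyyyMMdd, which is what each script receives as `$1`. To check by hand which partition a given run date maps to, the same calculation can be sketched in Scala with `java.time` (the flow itself uses `org.joda.time.DateTime`); `DayId` and `previousDay` are hypothetical names used only for this illustration.

```scala
import java.time.LocalDate
import java.time.format.DateTimeFormatter

object DayId {
  private val fmt = DateTimeFormatter.ofPattern("yyyyMMdd")

  // The day before `today`, formatted like the day_id partition value.
  def previousDay(today: LocalDate): String = today.minusDays(1).format(fmt)
}
```

A run on 2022-04-10 therefore processes `day_id=20220409`, the partition used throughout this section.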

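- Open the jobs directory in the file manager, compress the two files in it into jobs.zip, and upload the archive to Azkaban

- View the run log

No errors are reported

- Execution succeeded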
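The `dependsOn` entries in basic.flow determine which nodes can start together and which must wait. The sketch below derives that order in plain Scala from the four node names in the flow; it is an illustration of dependency ordering, not Azkaban's own scheduler, and `FlowOrder` and `executionWaves` are hypothetical names.

```scala
object FlowOrder {
  // Node name -> nodes it depends on, copied from basic.flow.
  val basicFlow: Map[String, Seq[String]] = Map(
    "dwi-res-regn-mergelocation-msk-day" -> Seq.empty,
    "spacetime-adjoint" -> Seq("dwi-res-regn-mergelocation-msk-day"),
    "datax-crm-usertag-mysql-to-hive" -> Seq.empty,
    "dim-usertag-day" -> Seq("datax-crm-usertag-mysql-to-hive")
  )

  // Groups nodes into waves: a node joins a wave once all of its
  // dependencies have appeared in earlier waves.
  def executionWaves(deps: Map[String, Seq[String]]): List[Set[String]] = {
    @annotation.tailrec
    def loop(remaining: Map[String, Seq[String]],
             done: Set[String],
             acc: List[Set[String]]): List[Set[String]] =
      if (remaining.isEmpty) acc.reverse
      else {
        val ready = remaining.collect { case (name, ds) if ds.forall(done) => name }.toSet
        require(ready.nonEmpty, "cycle or unknown dependency in the flow")
        loop(remaining -- ready, done ++ ready, ready :: acc)
      }
    loop(deps, Set.empty, Nil)
  }
}
```

For basic.flow this gives two waves: the dwi merge job and the DataX import first, then spacetime-adjoint and dim-usertag-day. The two branches do not depend on each other, so a failure in one does not change the ordering of the other.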

City tourist table

Determine whether a person is a tourist at a scenic area

Create the dws_city_tourist_msk_d tourist table in the dws layer

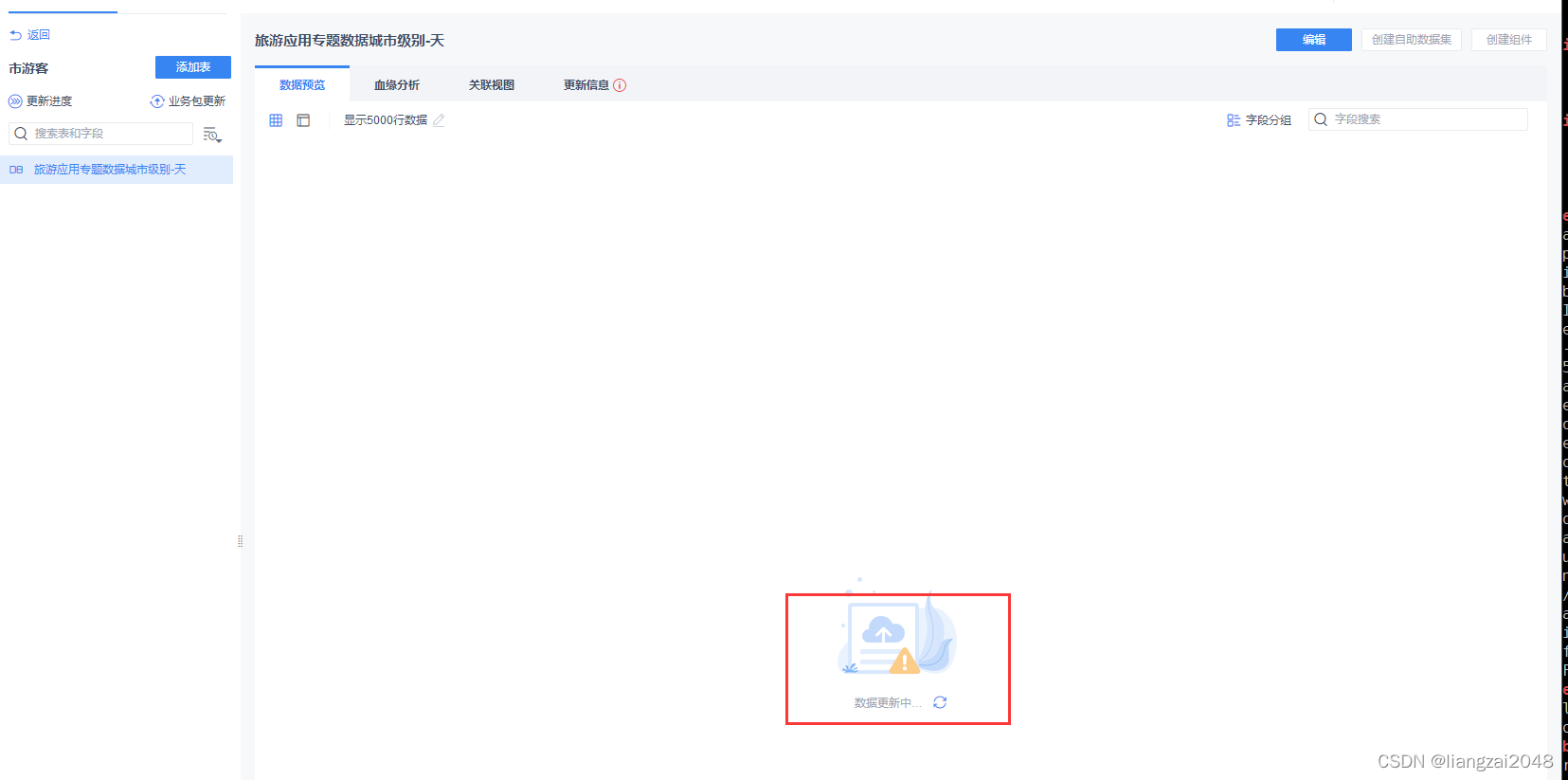

    CREATE EXTERNAL TABLE IF NOT EXISTS dws.dws_city_tourist_msk_d (
        mdn string comment 'Phone number, uppercase MD5 hash'
        ,source_county_id string comment 'County the tourist comes from'
        ,d_city_id string comment 'Code of the destination city'
        ,d_stay_time double comment 'Time the tourist stayed in the province (hours)'
        ,d_max_distance double comment 'Distance the tourist travelled on this trip'
    )
    comment 'Tourism application topic data, city level, daily'
    PARTITIONED BY (
        day_id string comment 'Day partition'
    )
    ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    location '/daas/motl/dws/dws_city_tourist_msk_d';

    alter table dws.dws_city_tourist_msk_d add if not exists partition(day_id='20220409');

Create and write the DwsCityTouristMskDay class