Istio Injection and Analysis

Istio Injection

Resource types for injection

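- Job, DaemonSet, ReplicaSet, Pod, Deployment: workloads that contain a Pod spec, which is where the sidecar is added

- Service, Secrets, ConfigMap: injection can be run against them, but it has no effect (the output is identical to the input)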
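
To make the distinction concrete, here is a minimal Python sketch, assuming injection only ever touches the Pod spec of an object. The `POD_SPEC_PATH` table and the `find_pod_spec` helper are hypothetical illustrations that cover just the kinds listed above; they are not part of istioctl.

```python
from typing import Any, Dict, Optional

# Hypothetical table: where the Pod spec lives for the workload kinds listed above.
POD_SPEC_PATH = {
    "Pod": ("spec",),
    "Deployment": ("spec", "template", "spec"),
    "ReplicaSet": ("spec", "template", "spec"),
    "DaemonSet": ("spec", "template", "spec"),
    "Job": ("spec", "template", "spec"),
}


def find_pod_spec(obj: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Return the Pod spec an injector could modify, or None if there is none."""
    path = POD_SPEC_PATH.get(obj.get("kind", ""))
    if path is None:
        return None  # Service, Secret, ConfigMap, ...: nothing to inject into
    node: Any = obj
    for key in path:
        node = node.get(key, {}) if isinstance(node, dict) else {}
    return node or None


svc = {"kind": "Service", "spec": {"selector": {"app": "nginx"}}}
deploy = {"kind": "Deployment",
          "spec": {"template": {"spec": {"containers": [{"name": "nginx"}]}}}}
print(find_pod_spec(svc))     # None
print(find_pod_spec(deploy))  # {'containers': [{'name': 'nginx'}]}
```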

```shell
# Service injection
# Deploy the test workload
[root@k8s-master-1 manual-inject.yaml]# kubectl apply -f manual-inject.yaml
[root@k8s-master-1 manual-inject.yaml]# kubectl expose deployment nginx -n istio-inject
service/nginx exposed
[root@k8s-master-1 manual-inject.yaml]# kubectl get svc -n istio-inject
NAME    TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
nginx   ClusterIP   10.0.151.39   <none>        80/TCP    46s

# Inspect the Service
[root@k8s-master-1 manual-inject.yaml]# kubectl get svc -n istio-inject nginx -o yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2022-04-17T12:23:20Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        f:ports:
          .: {}
          k:{"port":80,"protocol":"TCP"}:
            .: {}
            f:port: {}
            f:protocol: {}
            f:targetPort: {}
        f:selector:
          .: {}
          f:app: {}
        f:sessionAffinity: {}
        f:type: {}
    manager: kubectl-expose
    operation: Update
    time: "2022-04-17T12:23:20Z"
  name: nginx
  namespace: istio-inject
  resourceVersion: "806644"
  uid: 66c6e512-7113-464d-a460-cc01d7e8d9c0
spec:
  clusterIP: 10.0.151.39
  clusterIPs:
  - 10.0.151.39
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

# Run injection against the Service: the output is identical to the input, so the Service is not changed
[root@k8s-master-1 manual-inject.yaml]# kubectl get svc -n istio-inject nginx -o yaml > svc.yaml
[root@k8s-master-1 manual-inject.yaml]# istioctl kube-inject -f svc.yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2022-04-17T12:23:20Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        f:ports:
          .: {}
          k:{"port":80,"protocol":"TCP"}:
            .: {}
            f:port: {}
            f:protocol: {}
            f:targetPort: {}
        f:selector:
          .: {}
          f:app: {}
        f:sessionAffinity: {}
        f:type: {}
    manager: kubectl-expose
    operation: Update
    time: "2022-04-17T12:23:20Z"
  name: nginx
  namespace: istio-inject
  resourceVersion: "806644"
  uid: 66c6e512-7113-464d-a460-cc01d7e8d9c0
spec:
  clusterIP: 10.0.151.39
  clusterIPs:
  - 10.0.151.39
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
---
```

Automatic injection
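
Automatic injection is performed by Istio's mutating admission webhook, which only sees a Pod at the moment it is created; Pods that already exist are not modified. The toy model here uses hypothetical `Namespace` and `Pod` classes and assumes every container becomes ready, to show why a Pod has to be recreated after the namespace is labeled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    containers: list[str]


@dataclass
class Namespace:
    labels: dict[str, str] = field(default_factory=dict)
    pods: list[Pod] = field(default_factory=list)

    def create_pod(self, name: str, containers: list[str]) -> Pod:
        # Simplified admission step: it runs only here, at Pod creation time.
        if self.labels.get("istio-injection") == "enabled":
            containers = containers + ["istio-proxy"]
        pod = Pod(name, containers)
        self.pods.append(pod)
        return pod


def ready(pod: Pod) -> str:
    n = len(pod.containers)  # assumes every container is ready
    return f"{n}/{n}"


ns = Namespace()
old = ns.create_pod("nginx-a", ["nginx"])  # hypothetical pod created before labeling
ns.labels["istio-injection"] = "enabled"   # like: kubectl label namespace ... istio-injection=enabled
print(ready(old))                          # 1/1: the existing Pod is untouched
ns.pods.remove(old)                        # "kill" the Pod; its controller recreates it
new = ns.create_pod("nginx-b", ["nginx"])
print(ready(new))                          # 2/2: the new Pod got the sidecar
```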

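```shell
# Injection happens when a Pod is created. Delete the running Pod and verify that the newly created Pod
# has the sidecar: the original Pod shows READY 1/1, the Pod with the injected sidecar shows READY 2/2
kubectl label namespace default istio-injection=enabled --overwrite
```

Manual injection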
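
`istioctl kube-inject` works on the manifest before it reaches the cluster: it rewrites the Pod template and prints the result, which is then piped into `kubectl apply`. A heavily simplified sketch, assuming only the two containers visible in this article's pod events (`istio-init` and `istio-proxy`) are added; the real command also sets arguments, environment variables, volumes, annotations and more, and `kube_inject` here is a hypothetical stand-in.

```python
import copy

PROXY_IMAGE = "docker.io/istio/proxyv2:1.12.6"  # image seen in this article's pod events


def kube_inject(manifest: dict) -> dict:
    """Hypothetical, heavily simplified model of `istioctl kube-inject`."""
    if manifest.get("kind") != "Deployment":
        return manifest                  # e.g. a Namespace passes through unchanged
    out = copy.deepcopy(manifest)        # emit a new manifest, keep the input intact
    pod_spec = out["spec"]["template"]["spec"]
    pod_spec.setdefault("initContainers", []).append({"name": "istio-init", "image": PROXY_IMAGE})
    pod_spec["containers"].append({"name": "istio-proxy", "image": PROXY_IMAGE})
    return out


def ready_total(manifest: dict) -> int:
    # READY only counts regular containers; init containers run to completion first
    return len(manifest["spec"]["template"]["spec"]["containers"])


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx", "namespace": "istio-inject"},
    "spec": {
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {"containers": [{"name": "nginx", "image": "nginx"}]},
        },
    },
}
injected = kube_inject(deployment)
print(ready_total(deployment), ready_total(injected))  # 1 2
```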

```shell
# Create the test file
[root@k8s-master-1 manual-inject.yaml]# cat test.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: istio-inject
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: istio-inject
spec:
  selector:
    matchLabels:
      app: "nginx"
  template:
    metadata:
      labels:
        app: "nginx"
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent

# Check the result
[root@k8s-master-1 manual-inject.yaml]# kubectl get pods -n istio-inject
NAME                     READY   STATUS    RESTARTS   AGE
nginx-7cf7d6dbc8-rprc8   1/1     Running   0          91s

# Perform manual injection
[root@k8s-master-1 manual-inject.yaml]# istioctl kube-inject -f test.yaml | kubectl apply -f - -n istio-inject
namespace/istio-inject unchanged
deployment.apps/nginx configured
```

Injection analysis

```shell
# Test YAML
[root@k8s-master-1 manual-inject.yaml]# cat manual-inject.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: istio-inject
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: istio-inject
spec:
  selector:
    matchLabels:
      app: "nginx"
  template:
    metadata:
      labels:
        app: "nginx"
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      - name: busybox
        image: busybox:1.28
        imagePullPolicy: IfNotPresent
```

Container analysis
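
A Deployment names its ReplicaSet, and therefore its Pods, after a hash of the Pod template, so any change to the template (such as injecting a sidecar) produces new Pods with a new name prefix while the Deployment keeps its own name. A minimal sketch of that effect; the Deployment controller actually uses its own FNV-based hash, and SHA-256 over sorted JSON is only a stand-in here, as are the hypothetical `template_hash` and `replicaset_name` helpers.

```python
import copy
import hashlib
import json


def template_hash(template: dict) -> str:
    # Stand-in only: Kubernetes uses a different (FNV-based) hash and encoding.
    canonical = json.dumps(template, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()[:10]


def replicaset_name(deployment_name: str, template: dict) -> str:
    # Pods are then named <replicaset>-<random suffix>, e.g. nginx-747947f95d-hrj6r
    return f"{deployment_name}-{template_hash(template)}"


before = {"spec": {"containers": [{"name": "nginx"}, {"name": "busybox"}]}}
after = copy.deepcopy(before)
after["spec"]["containers"].append({"name": "istio-proxy"})  # what injection changes

print(replicaset_name("nginx", before))
print(replicaset_name("nginx", after))  # different prefix, same Deployment name
```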

```shell
# Check the pod
[root@k8s-master-1 manual-inject.yaml]# kubectl get pods -n istio-inject
NAME                     READY   STATUS    RESTARTS   AGE
nginx-698dbb497d-7l6xl   2/2     Running   0          33s

# Perform manual injection
[root@k8s-master-1 manual-inject.yaml]# istioctl kube-inject -f manual-inject.yaml | kubectl apply -f - -n istio-inject

# Check the pods again. The Deployment was updated in place and keeps its name, but the pod-template-hash
# part of the pod name changed (698dbb497d to 747947f95d): modifying the Pod template triggered a rolling
# update that replaced the old pod with a new one, and READY went from 2/2 to 3/3 (one more container per pod)
[root@k8s-master-1 manual-inject.yaml]# kubectl get pods -n istio-inject
NAME                     READY   STATUS        RESTARTS   AGE
nginx-698dbb497d-7l6xl   2/2     Terminating   0          3m44s
nginx-747947f95d-hrj6r   3/3     Running       0          53s

# Describe the new pod: it has 2 more containers than before. istio-init is an init container that
# initializes the pod and then exits (it is not counted in READY); istio-proxy keeps running
[root@k8s-master-1 manual-inject.yaml]# kubectl describe pods nginx-747947f95d-hrj6r -n istio-inject | tail -n 17
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  2m27s  default-scheduler  Successfully assigned istio-inject/nginx-747947f95d-hrj6r to k8s-master-1
  Normal  Pulled     2m27s  kubelet            Container image "docker.io/istio/proxyv2:1.12.6" already present on machine
  Normal  Created    2m27s  kubelet            Created container istio-init
  Normal  Started    2m26s  kubelet            Started container istio-init
  Normal  Pulling    2m26s  kubelet            Pulling image "nginx"
  Normal  Pulled     111s   kubelet            Successfully pulled image "nginx" in 34.891822213s
  Normal  Created    111s   kubelet            Created container nginx
  Normal  Started    110s   kubelet            Started container nginx
  Normal  Pulled     110s   kubelet            Container image "busybox:1.28" already present on machine
  Normal  Created    110s   kubelet            Created container busybox
  Normal  Started    110s   kubelet            Started container busybox
  Normal  Pulled     110s   kubelet            Container image "docker.io/istio/proxyv2:1.12.6" already present on machine
  Normal  Created    110s   kubelet            Created container istio-proxy
  Normal  Started    110s   kubelet            Started container istio-proxy
```

Network changes
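
The nat rules that istio-init installs can be read as a small decision function. This Python model is transcribed from the rules in this pod's istio-init log and covers TCP only: inbound traffic goes to Envoy's port 15006, outbound traffic to port 15001, and traffic owned by UID/GID 1337 (the proxy itself) is not captured again. The `TcpPacket` fields and the IP addresses are illustrative assumptions, and the model ignores everything iptables does beyond these chains.

```python
from dataclasses import dataclass

PROXY_UID = PROXY_GID = 1337                             # PROXY_UID / PROXY_GID in the istio-init log
INBOUND_RETURN_PORTS = {15008, 22, 15090, 15021, 15020}  # RETURN rules in ISTIO_INBOUND


@dataclass
class TcpPacket:
    dport: int
    src: str = "10.244.0.10"   # hypothetical pod IP
    dst: str = "10.0.151.39"   # e.g. the nginx ClusterIP
    out_if: str = "eth0"
    uid: int = 0
    gid: int = 0


def prerouting(p: TcpPacket) -> str:
    """PREROUTING -> ISTIO_INBOUND, for TCP arriving at the pod."""
    if p.dport in INBOUND_RETURN_PORTS:
        return "RETURN"                    # left alone
    return "REDIRECT 15006"                # ISTIO_IN_REDIRECT -> Envoy inbound


def output(p: TcpPacket) -> str:
    """OUTPUT -> ISTIO_OUTPUT, for TCP sent from inside the pod; first match wins."""
    lo = p.out_if == "lo"
    to_localhost = p.dst == "127.0.0.1"
    if lo and p.src == "127.0.0.6":
        return "RETURN"
    if lo and not to_localhost and p.uid == PROXY_UID:
        return "REDIRECT 15006"
    if lo and p.uid != PROXY_UID:
        return "RETURN"
    if p.uid == PROXY_UID:
        return "RETURN"                    # Envoy's own traffic is not captured again
    if lo and not to_localhost and p.gid == PROXY_GID:
        return "REDIRECT 15006"
    if lo and p.gid != PROXY_GID:
        return "RETURN"
    if p.gid == PROXY_GID:
        return "RETURN"
    if to_localhost:
        return "RETURN"
    return "REDIRECT 15001"                # ISTIO_REDIRECT -> Envoy outbound


print(prerouting(TcpPacket(dport=80)))        # REDIRECT 15006
print(prerouting(TcpPacket(dport=15021)))     # RETURN
print(output(TcpPacket(dport=80)))            # REDIRECT 15001 (app calling out)
print(output(TcpPacket(dport=80, uid=1337)))  # RETURN (Envoy's upstream call)
```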

```shell
# Check the init container's log: istio-init wrote iptables nat rules that redirect the pod's inbound TCP
# traffic to port 15006 and its outbound TCP traffic to port 15001, where istio-proxy (Envoy) listens
[root@k8s-master-1 manual-inject.yaml]# kubectl logs nginx-747947f95d-hrj6r -c istio-init -n istio-inject
2022-04-17T11:29:38.086022Z  info  Istio iptables environment:
ENVOY_PORT=
INBOUND_CAPTURE_PORT=
ISTIO_INBOUND_INTERCEPTION_MODE=
ISTIO_INBOUND_TPROXY_ROUTE_TABLE=
ISTIO_INBOUND_PORTS=
ISTIO_OUTBOUND_PORTS=
ISTIO_LOCAL_EXCLUDE_PORTS=
ISTIO_EXCLUDE_INTERFACES=
ISTIO_SERVICE_CIDR=
ISTIO_SERVICE_EXCLUDE_CIDR=
ISTIO_META_DNS_CAPTURE=
INVALID_DROP=

2022-04-17T11:29:38.086070Z  info  Istio iptables variables:
PROXY_PORT=15001
PROXY_INBOUND_CAPTURE_PORT=15006
PROXY_TUNNEL_PORT=15008
PROXY_UID=1337
PROXY_GID=1337
INBOUND_INTERCEPTION_MODE=REDIRECT
INBOUND_TPROXY_MARK=1337
INBOUND_TPROXY_ROUTE_TABLE=133
INBOUND_PORTS_INCLUDE=*
INBOUND_PORTS_EXCLUDE=15090,15021,15020
OUTBOUND_IP_RANGES_INCLUDE=*
OUTBOUND_IP_RANGES_EXCLUDE=
OUTBOUND_PORTS_INCLUDE=
OUTBOUND_PORTS_EXCLUDE=
KUBE_VIRT_INTERFACES=
ENABLE_INBOUND_IPV6=false
DNS_CAPTURE=false
DROP_INVALID=false
CAPTURE_ALL_DNS=false
DNS_SERVERS=[],[]
OUTPUT_PATH=
NETWORK_NAMESPACE=
CNI_MODE=false
EXCLUDE_INTERFACES=

2022-04-17T11:29:38.086706Z  info  Writing following contents to rules file: /tmp/iptables-rules-1650194978086430069.txt2863691863
* nat
-N ISTIO_INBOUND
-N ISTIO_REDIRECT
-N ISTIO_IN_REDIRECT
-N ISTIO_OUTPUT
-A ISTIO_INBOUND -p tcp --dport 15008 -j RETURN
-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
-A PREROUTING -p tcp -j ISTIO_INBOUND
-A ISTIO_INBOUND -p tcp --dport 22 -j RETURN
-A ISTIO_INBOUND -p tcp --dport 15090 -j RETURN
-A ISTIO_INBOUND -p tcp --dport 15021 -j RETURN
-A ISTIO_INBOUND -p tcp --dport 15020 -j RETURN
-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
-A OUTPUT -p tcp -j ISTIO_OUTPUT
-A ISTIO_OUTPUT -o lo -s 127.0.0.6/32 -j RETURN
-A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -o lo ! -d 127.0.0.1/32 -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
-A ISTIO_OUTPUT -j ISTIO_REDIRECT
COMMIT

2022-04-17T11:29:38.086773Z  info  Running command: iptables-restore --noflush /tmp/iptables-rules-1650194978086430069.txt2863691863
2022-04-17T11:29:38.096650Z  info  Writing following contents to rules file: /tmp/ip6tables-rules-1650194978096589774.txt1119038460

2022-04-17T11:29:38.096703Z  info  Running command: ip6tables-restore --noflush /tmp/ip6tables-rules-1650194978096589774.txt1119038460
2022-04-17T11:29:38.098130Z  info  Running command: iptables-save
2022-04-17T11:29:38.101882Z  info  Command output:
# Generated by iptables-save v1.8.4 on Sun Apr 17 11:29:38 2022
*nat
:PREROUTING ACCEPT [0:0]
:INPUT ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
:POSTROUTING ACCEPT [0:0]
:ISTIO_INBOUND - [0:0]
:ISTIO_IN_REDIRECT - [0:0]
:ISTIO_OUTPUT - [0:0]
:ISTIO_REDIRECT - [0:0]
-A PREROUTING -p tcp -j ISTIO_INBOUND
-A OUTPUT -p tcp -j ISTIO_OUTPUT
-A ISTIO_INBOUND -p tcp -m tcp --dport 15008 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 22 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15090 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15021 -j RETURN
-A ISTIO_INBOUND -p tcp -m tcp --dport 15020 -j RETURN
-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT
-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006
-A ISTIO_OUTPUT -s 127.0.0.6/32 -o lo -j RETURN
-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --uid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --uid-owner 1337 -j RETURN
-A ISTIO_OUTPUT ! -d 127.0.0.1/32 -o lo -m owner --gid-owner 1337 -j ISTIO_IN_REDIRECT
-A ISTIO_OUTPUT -o lo -m owner ! --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -m owner --gid-owner 1337 -j RETURN
-A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN
-A ISTIO_OUTPUT -j ISTIO_REDIRECT
-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001
COMMIT
# Completed on Sun Apr 17 11:29:38 2022
```

Process analysis
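
Each sidecar port has a documented role. This sketch groups `netstat -tunlp` listeners by owning program and labels them; the `PORT_ROLES` labels are paraphrased from Istio's "Ports used by Istio" page, the sample text is an excerpt of this pod's output, and `listeners_by_program` is a hypothetical helper that assumes netstat's default column layout.

```python
from collections import defaultdict
from typing import Dict, Set

NETSTAT = """\
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 127.0.0.1:15000         0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 127.0.0.1:15004         0.0.0.0:*               LISTEN      1/pilot-agent
tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      14/envoy
tcp6       0      0 :::15020                :::*                    LISTEN      1/pilot-agent
tcp6       0      0 :::80                   :::*                    LISTEN      -
"""

PORT_ROLES = {
    15000: "Envoy admin interface",
    15001: "Envoy outbound (iptables target)",
    15004: "pilot-agent debug",
    15006: "Envoy inbound (iptables target)",
    15020: "merged Prometheus telemetry (pilot-agent)",
    15021: "health checks",
    15090: "Envoy Prometheus telemetry",
}


def listeners_by_program(text: str) -> Dict[str, Set[int]]:
    result: Dict[str, Set[int]] = defaultdict(set)
    for line in text.splitlines():
        fields = line.split()
        # Listener rows: proto recv-q send-q local foreign LISTEN pid/program
        if len(fields) < 7 or fields[5] != "LISTEN":
            continue
        port = int(fields[3].rsplit(":", 1)[1])  # handles 0.0.0.0:80 and :::80
        result[fields[6]].add(port)              # "-": process in another container
    return dict(result)


for program, ports in sorted(listeners_by_program(NETSTAT).items()):
    for port in sorted(ports):
        print(f"{program:15} {port:6} {PORT_ROLES.get(port, 'application (nginx)')}")
```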

```shell
# Check the listening ports. One would expect only nginx on port 80, but there are many more listeners,
# owned by envoy and pilot-agent. Port 80 shows "-" because nginx runs in another container: the
# containers share the pod's network namespace but not its PID namespace
[root@k8s-master-1 manual-inject.yaml]# kubectl exec -it nginx-747947f95d-hrj6r -n istio-inject -c istio-proxy -- netstat -tunlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15021           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      -
tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15090           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 127.0.0.1:15000         0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15001           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 127.0.0.1:15004         0.0.0.0:*               LISTEN      1/pilot-agent
tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      14/envoy
tcp        0      0 0.0.0.0:15006           0.0.0.0:*               LISTEN      14/envoy
tcp6       0      0 :::15020                :::*                    LISTEN      1/pilot-agent
tcp6       0      0 :::80                   :::*                    LISTEN      -

# View the processes
[root@k8s-master-1 manual-inject.yaml]# kubectl exec -it nginx-747947f95d-hrj6r -n istio-inject -c istio-proxy -- ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
istio-p+     1     0  0 11:30 ?        00:00:00 /usr/local/bin/pilot-agent pro
istio-p+    14     1  0 11:30 ?        00:00:03 /usr/local/bin/envoy -c etc/is
istio-p+    67     0  0 11:45 pts/0    00:00:00 ps -ef
```

pilot-agent
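
Whether envoy really is a child of pilot-agent can be checked mechanically from `ps -ef`. A minimal parsing sketch over the output captured from this pod; `parse_ps` and `parent_exe` are hypothetical helpers that assume the default `ps -ef` layout, with the command in the last column.

```python
from typing import Dict, Optional

PS_EF = """\
UID        PID  PPID  C STIME TTY          TIME CMD
istio-p+     1     0  0 11:30 ?        00:00:00 /usr/local/bin/pilot-agent pro
istio-p+    14     1  0 11:30 ?        00:00:03 /usr/local/bin/envoy -c etc/is
istio-p+    67     0  0 11:45 pts/0    00:00:00 ps -ef
"""


def parse_ps(text: str) -> Dict[int, dict]:
    procs: Dict[int, dict] = {}
    for line in text.splitlines()[1:]:  # skip the header row
        _uid, pid, ppid, _c, _stime, _tty, _time, cmd = line.split(None, 7)
        procs[int(pid)] = {"ppid": int(ppid), "exe": cmd.split()[0]}
    return procs


def parent_exe(procs: Dict[int, dict], exe_suffix: str) -> Optional[str]:
    """Executable of the parent of the first process whose executable ends with exe_suffix."""
    for info in procs.values():
        if info["exe"].endswith(exe_suffix):
            parent = procs.get(info["ppid"])
            return parent["exe"] if parent else None
    return None


procs = parse_ps(PS_EF)
print(parent_exe(procs, "/envoy"))  # /usr/local/bin/pilot-agent
```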

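- Generates the Envoy bootstrap configuration

- Starts Envoy: in the ps -ef output above, envoy (PID 14) has PPID 1, i.e. it is a child process of pilot-agent (PID 1)

- Monitors and manages Envoy at runtime, for example restarting it when it fails and reloading it when its configuration changes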

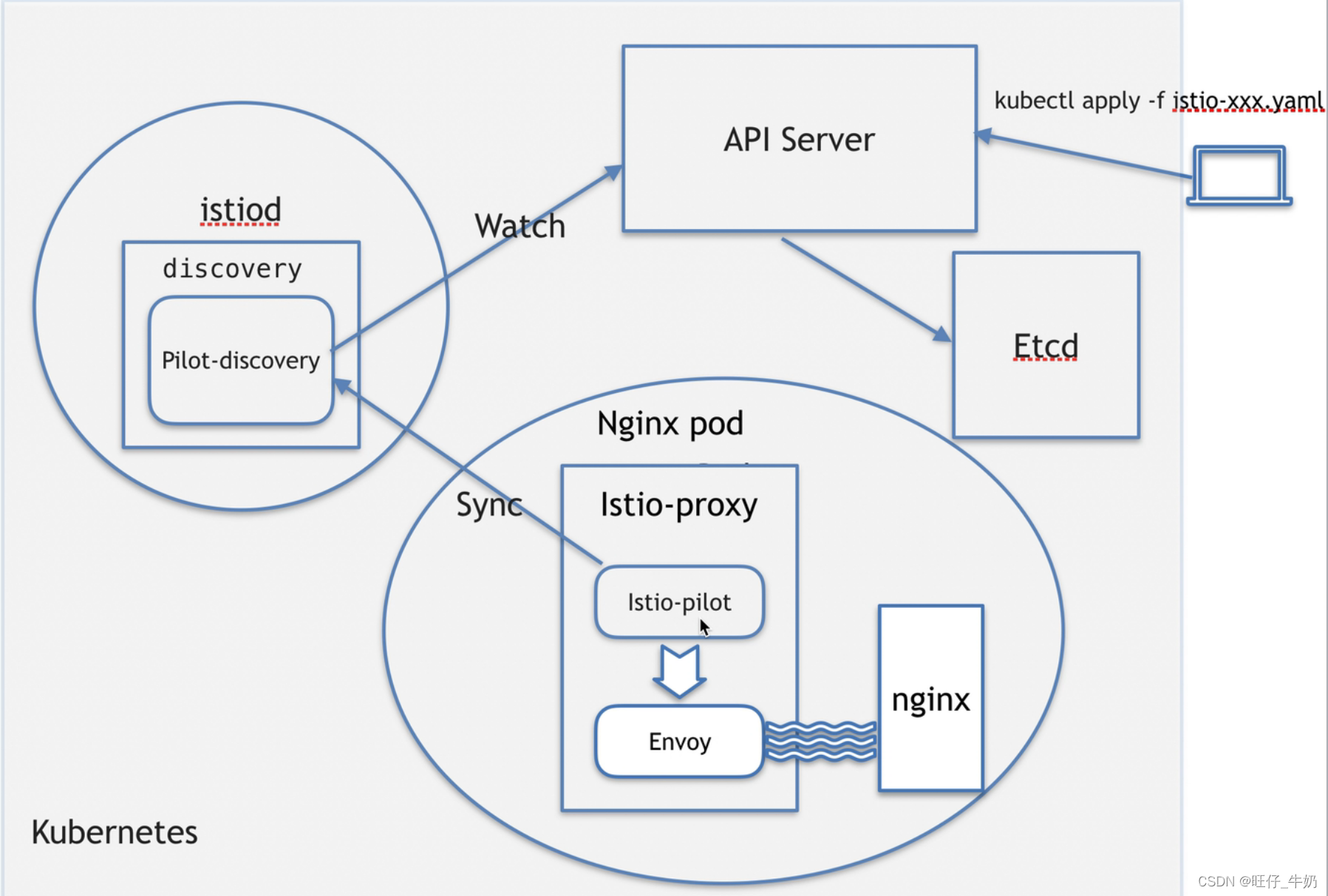
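
The duties listed above can be pictured as a supervision loop. This is a toy model, not pilot-agent's code: `generate_config` and `run_proxy` are hypothetical callables standing in for writing the bootstrap file and running Envoy, and only the restart-on-failure path is modeled.

```python
from typing import Callable, List


def supervise(generate_config: Callable[[int], str],
              run_proxy: Callable[[str], int],
              max_restarts: int = 3) -> List[str]:
    events: List[str] = []
    for attempt in range(max_restarts + 1):
        config = generate_config(attempt)            # duty 1: produce the bootstrap config
        events.append(f"start #{attempt} with {config}")
        code = run_proxy(config)                     # duty 2: launch the proxy and wait for it
        if code == 0:
            events.append("proxy exited cleanly")
            return events
        events.append(f"proxy exited with {code}")   # duty 3: notice the failure, loop to restart
    events.append("restart budget exhausted")
    return events


# Usage with fakes: the "proxy" crashes once, then exits cleanly.
exit_codes = iter([1, 0])
log = supervise(lambda n: f"bootstrap-{n}.json", lambda cfg: next(exit_codes))
print(log)
```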

```shell
# View the istiod processes. pilot-discovery is effectively the server side for pilot-agent:
# it serves configuration to the sidecars and continuously watches the apiserver
[root@k8s-master-1 manual-inject.yaml]# kubectl exec -it -n istio-system istiod-7b78cd84bb-dq7xx -- ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
istio-p+     1     0  0 09:22 ?        00:00:28 /usr/local/bin/pilot-discovery discovery --monitoringAddr=:15014 --log_output_level=default:info -
istio-p+    15     0  0 11:51 pts/0    00:00:00 ps -ef
```
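
The watch-and-push relationship between pilot-discovery and the sidecars can be sketched as a publisher that turns watch events into configuration snapshots. This is a hypothetical toy: in Istio the proxies receive configuration over xDS (gRPC), whereas here a plain callback stands in for that connection, and the service and endpoint data are made up.

```python
from typing import Callable, Dict, List

Snapshot = Dict[str, List[str]]  # service name -> endpoint IPs


class ToyDiscoveryServer:
    """Hypothetical toy: watch cluster state, push full snapshots to connected proxies."""

    def __init__(self) -> None:
        self.state: Snapshot = {}
        self.proxies: List[Callable[[Snapshot], None]] = []

    def connect(self, push: Callable[[Snapshot], None]) -> None:
        self.proxies.append(push)
        push(dict(self.state))  # a newly connected proxy first gets the current view

    def on_watch_event(self, service: str, endpoints: List[str]) -> None:
        self.state[service] = list(endpoints)  # what a watch on the API server would report
        for push in self.proxies:
            push(dict(self.state))


received: List[Snapshot] = []
server = ToyDiscoveryServer()
server.connect(received.append)
server.on_watch_event("nginx", ["10.244.0.5"])  # hypothetical pod IP
print(received)  # [{}, {'nginx': ['10.244.0.5']}]
```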