Creating a Kubernetes cluster with kubeadm on CentOS 7.8 (complete guide)

I. Preparation (run on all machines)

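- Change the hostname

      # On VM a, set the hostname of the master01 node
      hostnamectl set-hostname master01
      bash                 # start a new shell so the new name shows immediately
      cat /etc/hostname    # should print master01
      # reboot             # optional: hostnamectl already writes /etc/hostname, so the name persists

      # Likewise on VM b, set the hostname of the node01 node
      hostnamectl set-hostname node01
      bash                 # start a new shell so the new name shows immediately
      cat /etc/hostname    # should print node01
      # reboot             # optional, as above

- Disable the firewall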

      systemctl stop firewalld
      systemctl disable firewalld

- Allow iptables to see bridged traffic

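      cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
      br_netfilter
      EOF
      # modules-load.d only applies at boot, so load the module now as well
      sudo modprobe br_netfilter

      cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
      net.bridge.bridge-nf-call-ip6tables = 1
      net.bridge.bridge-nf-call-iptables = 1
      EOF
      sudo sysctl --system

- Set SELinux to permissive mode (effectively disabling it)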

      sudo setenforce 0
      sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

- Disable swap (Kubernetes expects swap to be off, which also keeps performance predictable)

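      swapoff -a                             # turn swap off for the running system
      sed -ri 's/.*swap.*/#&/' /etc/fstab    # comment out the swap entries so swap stays off after a reboot

- Configure /etc/hosts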

      # Master and node IPs; adjust to your own environment.
      # The names match the hostnames set in the first step.
      cat >> /etc/hosts << EOF
      172.28.12.148 master01
      172.28.12.149 node01
      EOF

6.1 Install Docker

    # Remove any previous Docker versions
    sudo yum remove docker \
        docker-client \
        docker-client-latest \
        docker-common \
        docker-latest \
        docker-latest-logrotate \
        docker-logrotate \
        docker-engine

    # Install Docker
    sudo yum install -y yum-utils
    sudo yum-config-manager \
        --add-repo \
        http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    sudo yum makecache fast
    sudo yum install docker-ce docker-ce-cli containerd.io -y
    sudo systemctl start docker
    sudo systemctl enable docker
    sudo systemctl status docker

6.2 Change the Docker cgroup driver

When running kubeadm init to initialize the cluster, you may see this warning:

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".

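    # Create the Docker daemon config file
    # (this overwrites the file; if it already exists, merge the setting in by hand)
    cat > /etc/docker/daemon.json << EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF

    # Restart Docker
    systemctl restart docker
    systemctl status docker

- Configure the Aliyun Kubernetes package repository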

Error: [Errno -1] repomd.xml signature could not be verified for kubernetes Trying other mirror.

Reference: https://github.com/kubernetes/kubernetes/issues/60134

Fix: set repo_gpgcheck=0

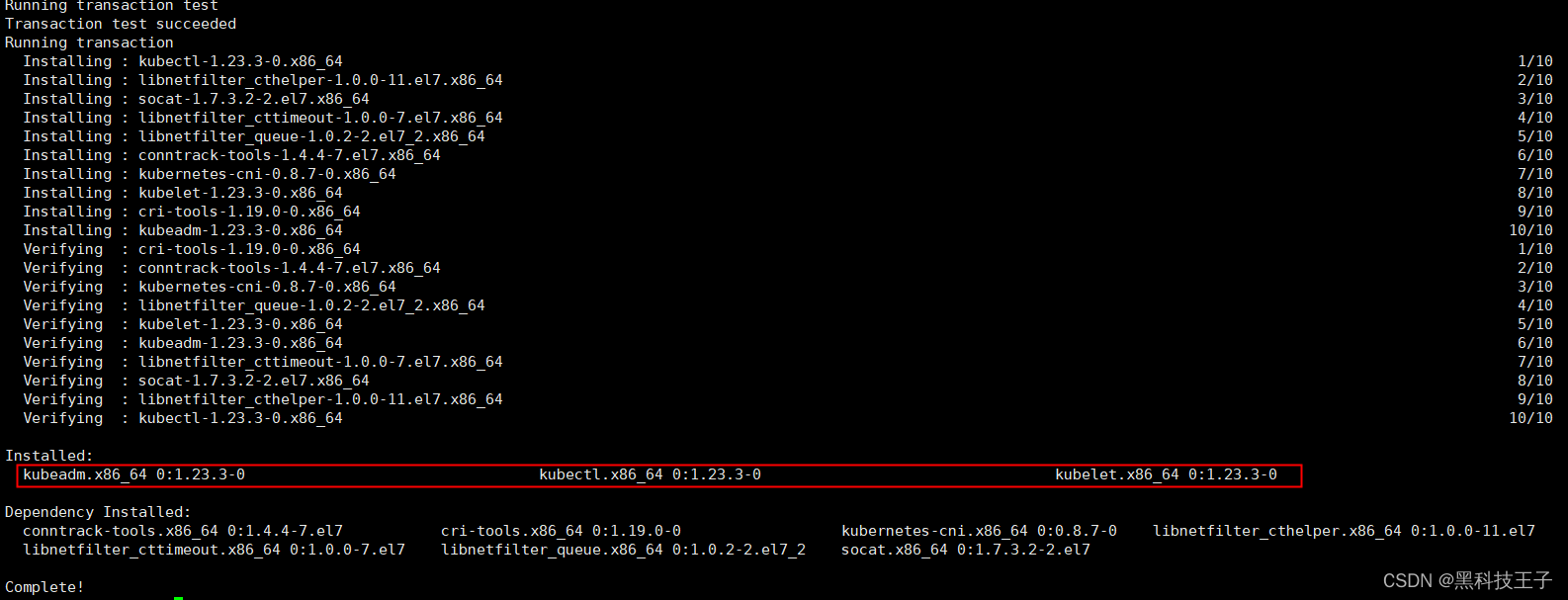

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    #repo_gpgcheck=1
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

- Install kubelet, kubeadm, and kubectl

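      sudo yum update -y    # needed after setting repo_gpgcheck=0

      # Optionally pin a version from the GitHub releases:
      # sudo yum install -y kubelet-1.19.4 kubeadm-1.19.4 kubectl-1.19.4
      # sudo yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

      # Preferably leave the version unpinned; the latest one in the repository is installed.
      # (The output later in this guide shows v1.23.3. From 1.24 on, dockershim is gone,
      # so Docker Engine additionally needs cri-dockerd.)
      sudo yum install -y kubelet kubeadm kubectl
      sudo systemctl enable --now kubelet
      sudo systemctl start kubelet

      # sudo systemctl status kubelet
      # kubelet is not healthy yet at this point: it becomes ready on the master after
      # kubeadm init, and on each worker after that node has joined the cluster.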
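Before moving on, it can help to confirm that the preparation steps took effect. The following is a minimal sketch of such a check and not part of the original procedure: the function names (`has_active_swap_entry`, `selinux_config_mode`, `uses_systemd_cgroup`) are made up for illustration, and each takes a file path as its argument so it can be tried on a copy instead of the live file.

```shell
# Hypothetical helper functions for checking the preparation steps.

# Succeed if FILE ($1, fstab format) still has an uncommented swap entry.
has_active_swap_entry() {
    grep -Eq '^[^#]*[[:space:]]swap[[:space:]]' "$1"
}

# Print the mode set by the SELINUX= line in FILE ($1).
selinux_config_mode() {
    sed -n 's/^SELINUX=\(.*\)$/\1/p' "$1"
}

# Succeed if FILE ($1, Docker daemon.json) requests the systemd cgroup driver.
uses_systemd_cgroup() {
    grep -Fq 'native.cgroupdriver=systemd' "$1"
}
```

On a machine prepared as described, `has_active_swap_entry /etc/fstab` would be expected to fail, `selinux_config_mode /etc/selinux/config` to print `permissive` (if the file said `enforcing` before), and `uses_systemd_cgroup /etc/docker/daemon.json` to succeed.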

- Check that the tools are installed

      yum list installed | grep kubelet
      yum list installed | grep kubeadm
      yum list installed | grep kubectl
      kubelet --version    # check the version; output here: Kubernetes v1.23.3

II. Creating the cluster with kubeadm

- Initialize the cluster with kubeadm (run on the master)

- Be sure to set --apiserver-advertise-address to the master's IP address (172.28.12.148 in this guide)

      # --apiserver-advertise-address 172.28.12.148 is the master node's IP; change it to your own master IP
      kubeadm init --apiserver-advertise-address 172.28.12.148 \
          --image-repository registry.aliyuncs.com/google_containers \
          --pod-network-cidr 10.244.0.0/16 \
          --service-cidr 10.96.0.0/12
      # Optional extra flag; when omitted, kubeadm picks the latest version it supports by default:
      #   --kubernetes-version v1.23.3

- If this step fails, the master IP passed to --apiserver-advertise-address (172.28.12.148 here) may be wrong or missing

      kubeadm reset

- Then enter: y

- Once the reset has finished, rerun the kubeadm init … command above

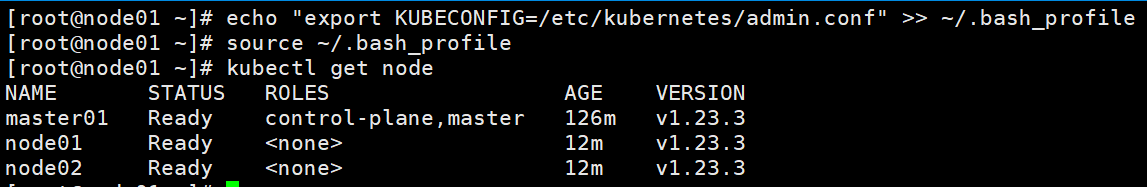
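The `--pod-network-cidr` given to `kubeadm init` has to agree with the `Network` value in flannel's `net-conf.json`; both are 10.244.0.0/16 in this guide. As a hedged sketch, the hypothetical helpers below read that value out of a downloaded `kube-flannel.yml` and compare it with a CIDR. `flannel_network` and `cidr_matches_flannel` are illustrative names, not part of kubeadm or flannel.

```shell
# Print the "Network" value found in a flannel manifest ($1), first match only.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed when the CIDR ($1) equals the Network value in the manifest ($2).
cidr_matches_flannel() {
    [ "$(flannel_network "$2")" = "$1" ]
}
```

Once the manifest is on disk, `cidr_matches_flannel 10.244.0.0/16 kube-flannel.yml || echo "CIDR mismatch"` is one way to catch a mismatch before applying it.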

    # Give the kubectl client access to the cluster
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run on the worker node:

    # Join the worker node to the cluster: run on worker node node1
    # This command is generated by kubeadm init; keep a copy of it
    kubeadm join 172.28.12.148:6443 --token bofh8w.5r6qwmvargj3d0do \
        --discovery-token-ca-cert-hash sha256:0724d03bf5ca008808b4dc9c68643c90e54d36733a487dc7d730dca35a952b89

    [root@iZ0jlhvtxignmaozy30vffZ ~]# kubectl get nodes
    NAME                      STATUS     ROLES    AGE     VERSION
    iz0jlhvtxignmaozy30vffz   NotReady   master   8m19s   v1.19.4
    iz0jlhvtxignmaozy30vfgz   NotReady   <none>   21s     v1.19.4

- Add the flannel pod network (run on the master node)

      wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

- If the URL above is slow or unresponsive, copy the content below into kube-flannel.yml

- cat kube-flannel.yml

      ---
      apiVersion: policy/v1beta1
      kind: PodSecurityPolicy
      metadata:
        name: psp.flannel.unprivileged
        annotations:
          seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
          seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
          apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
          apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
      spec:
        privileged: false
        volumes:
        - configMap
        - secret
        - emptyDir
        - hostPath
        allowedHostPaths:
        - pathPrefix: "/etc/cni/net.d"
        - pathPrefix: "/etc/kube-flannel"
        - pathPrefix: "/run/flannel"
        readOnlyRootFilesystem: false
        # Users and groups
        runAsUser:
          rule: RunAsAny
        supplementalGroups:
          rule: RunAsAny
        fsGroup:
          rule: RunAsAny
        # Privilege Escalation
        allowPrivilegeEscalation: false
        defaultAllowPrivilegeEscalation: false
        # Capabilities
        allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
        defaultAddCapabilities: []
        requiredDropCapabilities: []
        # Host namespaces
        hostPID: false
        hostIPC: false
        hostNetwork: true
        hostPorts:
        - min: 0
          max: 65535
        # SELinux
        seLinux:
          # SELinux is unused in CaaSP
          rule: 'RunAsAny'
      ---
      kind: ClusterRole
      apiVersion: rbac.authorization.k8s.io/v1
      metadata:
        name: flannel
      rules:
      - apiGroups: ['extensions']
        resources: ['podsecuritypolicies']
        verbs: ['use']
        resourceNames: ['psp.flannel.unprivileged']
      - apiGroups:
        - ""
        resources:
        - pods
        verbs:
        - get
      - apiGroups:
        - ""
        resources:
        - nodes
        verbs:
        - list
        - watch
      - apiGroups:
        - ""
        resources:
        - nodes/status
        verbs:
        - patch
      ---
      kind: ClusterRoleBinding
      apiVersion: rbac.authorization.k8s.io/v1
      metadata:
        name: flannel
      roleRef:
        apiGroup: rbac.authorization.k8s.io
        kind: ClusterRole
        name: flannel
      subjects:
      - kind: ServiceAccount
        name: flannel
        namespace: kube-system
      ---
      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: flannel
        namespace: kube-system
      ---
      kind: ConfigMap
      apiVersion: v1
      metadata:
        name: kube-flannel-cfg
        namespace: kube-system
        labels:
          tier: node
          app: flannel
      data:
        cni-conf.json: |
          {
            "name": "cbr0",
            "cniVersion": "0.3.1",
            "plugins": [
              {
                "type": "flannel",
                "delegate": {
                  "hairpinMode": true,
                  "isDefaultGateway": true
                }
              },
              {
                "type": "portmap",
                "capabilities": {
                  "portMappings": true
                }
              }
            ]
          }
        net-conf.json: |
          {
            "Network": "10.244.0.0/16",
            "Backend": {
              "Type": "vxlan"
            }
          }
      ---
      apiVersion: apps/v1
      kind: DaemonSet
      metadata:
        name: kube-flannel-ds
        namespace: kube-system
        labels:
          tier: node
          app: flannel
      spec:
        selector:
          matchLabels:
            app: flannel
        template:
          metadata:
            labels:
              tier: node
              app: flannel
          spec:
            affinity:
              nodeAffinity:
                requiredDuringSchedulingIgnoredDuringExecution:
                  nodeSelectorTerms:
                  - matchExpressions:
                    - key: kubernetes.io/os
                      operator: In
                      values:
                      - linux
            hostNetwork: true
            priorityClassName: system-node-critical
            tolerations:
            - operator: Exists
              effect: NoSchedule
            serviceAccountName: flannel
            initContainers:
            - name: install-cni-plugin
              #image: flannelcni/flannel-cni-plugin:v1.0.1 for ppc64le and mips64le (dockerhub limitations may apply)
              image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1
              command:
              - cp
              args:
              - -f
              - /flannel
              - /opt/cni/bin/flannel
              volumeMounts:
              - name: cni-plugin
                mountPath: /opt/cni/bin
            - name: install-cni
              #image: flannelcni/flannel:v0.16.3 for ppc64le and mips64le (dockerhub limitations may apply)
              image: rancher/mirrored-flannelcni-flannel:v0.16.3
              command:
              - cp
              args:
              - -f
              - /etc/kube-flannel/cni-conf.json
              - /etc/cni/net.d/10-flannel.conflist
              volumeMounts:
              - name: cni
                mountPath: /etc/cni/net.d
              - name: flannel-cfg
                mountPath: /etc/kube-flannel/
            containers:
            - name: kube-flannel
              #image: flannelcni/flannel:v0.16.3 for ppc64le and mips64le (dockerhub limitations may apply)
              image: rancher/mirrored-flannelcni-flannel:v0.16.3
              command:
              - /opt/bin/flanneld
              args:
              - --ip-masq
              - --kube-subnet-mgr
              resources:
                requests:
                  cpu: "100m"
                  memory: "50Mi"
                limits:
                  cpu: "100m"
                  memory: "50Mi"
              securityContext:
                privileged: false
                capabilities:
                  add: ["NET_ADMIN", "NET_RAW"]
              env:
              - name: POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
              volumeMounts:
              - name: run
                mountPath: /run/flannel
              - name: flannel-cfg
                mountPath: /etc/kube-flannel/
              - name: xtables-lock
                mountPath: /run/xtables.lock
            volumes:
            - name: run
              hostPath:
                path: /run/flannel
            - name: cni-plugin
              hostPath:
                path: /opt/cni/bin
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
            - name: xtables-lock
              hostPath:
                path: /run/xtables.lock
                type: FileOrCreate

- Apply the manifest; the node status then changes from NotReady to Ready

      kubectl apply -f ./kube-flannel.yml

12. View the cluster from the control-plane node master1

        [root@iZ0jlhvtxignmaozy30vffZ ~]# kubectl get nodes
        NAME                      STATUS   ROLES    AGE     VERSION
        iz0jlhvtxignmaozy30vffz   Ready    master   11m     v1.19.4
        iz0jlhvtxignmaozy30vfgz   Ready    <none>   3m14s   v1.19.4
        [root@iZ0jlhvtxignmaozy30vffZ ~]# kubectl get pod -A
        NAMESPACE     NAME                                              READY   STATUS    RESTARTS   AGE
        kube-system   coredns-6d56c8448f-n7f9k                          1/1     Running   0          20m
        kube-system   coredns-6d56c8448f-tz6m4                          1/1     Running   0          20m
        kube-system   etcd-iz0jlhvtxignmaozy30vffz                      1/1     Running   0          20m
        kube-system   kube-apiserver-iz0jlhvtxignmaozy30vffz            1/1     Running   0          20m
        kube-system   kube-controller-manager-iz0jlhvtxignmaozy30vffz   1/1     Running   0          20m
        kube-system   kube-flannel-ds-7j7jn                             1/1     Running   0          9m51s
        kube-system   kube-flannel-ds-fdkbv                             1/1     Running   0          9m51s
        kube-system   kube-proxy-fdz49                                  1/1     Running   0          12m
        kube-system   kube-proxy-kgzcp                                  1/1     Running   0          20m
        kube-system   kube-scheduler-iz0jlhvtxignmaozy30vffz            1/1     Running   0          20m

13. View the cluster from the worker node node1

        # On master1, copy the kubeconfig to node1; 172.28.12.149 is the node1 (worker) address
        scp -r /etc/kubernetes/admin.conf root@172.28.12.149:/etc/kubernetes/

        # On node1, point kubectl at the copied kubeconfig
        echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
        source ~/.bash_profile
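To confirm from a script that every node has reached the Ready state shown in step 12, the `kubectl get nodes` table can be filtered on its STATUS column. The function below is a small sketch under that assumption; `not_ready_nodes` is a hypothetical name, and it treats any status other than exactly `Ready` (for example `Ready,SchedulingDisabled`) as not ready.

```shell
# Read `kubectl get nodes` output on stdin and print the names of all nodes
# whose STATUS column is anything other than "Ready". The header row is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Used as `kubectl get nodes | not_ready_nodes`, it prints nothing once all nodes are Ready.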

III. Adding a worker node

14. Carry out steps 0-9 in order

15. Join the new worker node to the cluster

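        # On the new worker node (<master-ip> is the master's address, 172.28.12.148 in this guide)
        kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>

        # On the master: get <token> if the cluster was created less than 24 hours ago
        kubeadm token list
        # On the master: get a new <token> if the cluster is more than 24 hours old
        kubeadm token create
        # On the master: get <hash>
        openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
            openssl dgst -sha256 -hex | sed 's/^.* //'

16. Configure the kubectl client on the new node

Carry out step 13.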
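When the join command from step 15 is assembled by hand, a pasted token or hash that is truncated or contains stray characters is an easy mistake. The sketch below checks both before printing the command. It assumes the usual formats: a bootstrap token of 6 characters, a dot, and 16 characters (lowercase letters and digits), and a CA hash of `sha256:` followed by 64 hex digits. The function names are hypothetical and not part of kubeadm.

```shell
# Succeed if $1 looks like a kubeadm bootstrap token, e.g. abcdef.0123456789abcdef
valid_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Succeed if $1 looks like sha256:<64 hex digits>
valid_ca_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Print a join command for endpoint ($1), token ($2) and CA hash ($3),
# or fail with a message on stderr if the token or hash is malformed.
build_join_command() {
    valid_token "$2" || { echo "malformed token: $2" >&2; return 1; }
    valid_ca_hash "$3" || { echo "malformed CA cert hash: $3" >&2; return 1; }
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' "$1" "$2" "$3"
}
```

For example, `build_join_command 172.28.12.148:6443 "$token" "sha256:$hash"` prints the command to run on the new worker, with `$token` and `$hash` taken from the commands in step 15. `kubeadm token create --print-join-command` is an alternative that has kubeadm print a complete join command itself.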