Pytorch神经网络实战学习笔记_35 【实战】带W散度的WGAN-div模型生成Fashon-MNST模拟数据

1 WGAN-div 简介

W散度的损失函数GAN-dv模型使用了W散度来替换W距离的计算方式,将原有的真假样本采样操作换为基于分布层面的计算。

2 代码实现

在WGAN-gp的基础上稍加改动来实现,重写损失函数的实现。

2.1 代码实战:引入模块并载入样本----WGAN_div_241.py(第1部分)

import torchimport torchvisionfrom torchvision import transformsfrom torch.utils.data import DataLoaderfrom torch import nnimport torch.autograd as autogradimport matplotlib.pyplot as pltimport numpy as npimport matplotlibimport osos.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"# 1.1 引入模块并载入样本:定义基本函数,加载FashionMNIST数据集def to_img(x): x = 0.5 * (x+1) x = x.clamp(0,1) x = x.view(x.size(0),1,28,28) return xdef imshow(img,filename = None): npimg = img.numpy() plt.axis('off') array = np.transpose(npimg,(1,2,0)) if filename != None: matplotlib.image.imsave(filename,array) else: plt.imshow(array) # plt.savefig(filename) # 保存图片 注释掉,因为会报错,暂时不知道什么原因 2022.3.26 15:20 plt.show()img_transform = transforms.Compose( [ transforms.ToTensor(), transforms.Normalize(mean=[0.5],std=[0.5]) ])data_dir = './fashion_mnist'train_dataset = torchvision.datasets.FashionMNIST(data_dir,train=True,transform=img_transform,download=True)train_loader = DataLoader(train_dataset,batch_size=1024,shuffle=True)# 测试数据集val_dataset = torchvision.datasets.FashionMNIST(data_dir,train=False,transform=img_transform)test_loader = DataLoader(val_dataset,batch_size=10,shuffle=False)# 指定设备device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")print(device)2.2 代码实战:实现生成器和判别器----WGAN_div_241.py(第2部分)

# 1.2 实现生成器和判别器 :因为复杂部分都放在loss值的计算方面了,所以生成器和判别器就会简单一些。# 生成器和判别器各自有两个卷积和两个全连接层。生成器最终输出与输入图片相同维度的数据作为模拟样本。# 判别器的输出不需要有激活函数,并且输出维度为1的数值用来表示结果。# 在GAN模型中,因判别器的输入则是具体的样本数据,要区分每个数据的分布特征,所以判别器使用实例归一化,class WGAN_D(nn.Module): # 定义判别器类D :有两个卷积和两个全连接层 def __init__(self,inputch=1): super(WGAN_D, self).__init__() self.conv1 = nn.Sequential( nn.Conv2d(inputch,64,4,2,1), # 输出形状为[batch,64,28,28] nn.LeakyReLU(0.2,True), nn.InstanceNorm2d(64,affine=True) ) self.conv2 = nn.Sequential( nn.Conv2d(64,128,4,2,1),# 输出形状为[batch,64,14,14] nn.LeakyReLU(0.2,True), nn.InstanceNorm2d(128,affine=True) ) self.fc = nn.Sequential( nn.Linear(128*7*7,1024), nn.LeakyReLU(0.2,True) ) self.fc2 = nn.Sequential( nn.InstanceNorm1d(1,affine=True), nn.Flatten(), nn.Linear(1024,1) ) def forward(self,x,*arg): # 正向传播 x = self.conv1(x) x = self.conv2(x) x = x.view(x.size(0),-1) x = self.fc(x) x = x.reshape(x.size(0),1,-1) x = self.fc2(x) return x.view(-1,1).squeeze(1)# 在GAN模型中,因生成器的初始输入是随机值,所以生成器使用批量归一化。class WGAN_G(nn.Module): # 定义生成器类G:有两个卷积和两个全连接层 def __init__(self,input_size,input_n=1): super(WGAN_G, self).__init__() self.fc1 = nn.Sequential( nn.Linear(input_size * input_n,1024), nn.ReLU(True), nn.BatchNorm1d(1024) ) self.fc2 = nn.Sequential( nn.Linear(1024,7*7*128), nn.ReLU(True), nn.BatchNorm1d(7*7*128) ) self.upsample1 = nn.Sequential( nn.ConvTranspose2d(128,64,4,2,padding=1,bias=False), # 输出形状为[batch,64,14,14] nn.ReLU(True), nn.BatchNorm2d(64) ) self.upsample2 = nn.Sequential( nn.ConvTranspose2d(64,1,4,2,padding=1,bias=False), # 输出形状为[batch,64,28,28] nn.Tanh() ) def forward(self,x,*arg): # 正向传播 x = self.fc1(x) x = self.fc2(x) x = x.view(x.size(0),128,7,7) x = self.upsample1(x) img = self.upsample2(x) return img2.3 代码实战:计算w散度(WGAN-gp基础新增)----WGAN_div_241.py(第3部分)

# 1.3 计算w散度:返回值充当WGAN-gp中的惩罚项,用于计算判别器的损失def compute_w_div(real_samples,real_out,fake_samples,fake_out): # 定义参数 k = 2 p = 6 # 计算真实空间的梯度 weight = torch.full((real_samples.size(0),),1,device=device) real_grad = autograd.grad(outputs=real_out, inputs=real_samples, grad_outputs=weight, create_graph=True, retain_graph=True, only_inputs=True)[0] # L2范数 real_grad_norm = real_grad.view(real_grad.size(0),-1).pow(2).sum(1) # 计算模拟空间的梯度 fake_grad = autograd.grad(outputs=fake_out, inputs=fake_samples, grad_outputs=weight, create_graph=True, retain_graph=True, only_inputs=True)[0] # L2范数 fake_grad_norm = fake_grad.view(fake_grad.size(0),-1).pow(2).sum(1) # 计算W散度距离 div_gp = torch.mean(real_grad_norm (p/2)+fake_grad_norm(p/2))*k/2 return div_gp2.4 代码实战:定义模型的训练函数(WGAN-gp基础修改)----WGAN_div_241.py(第4部分)

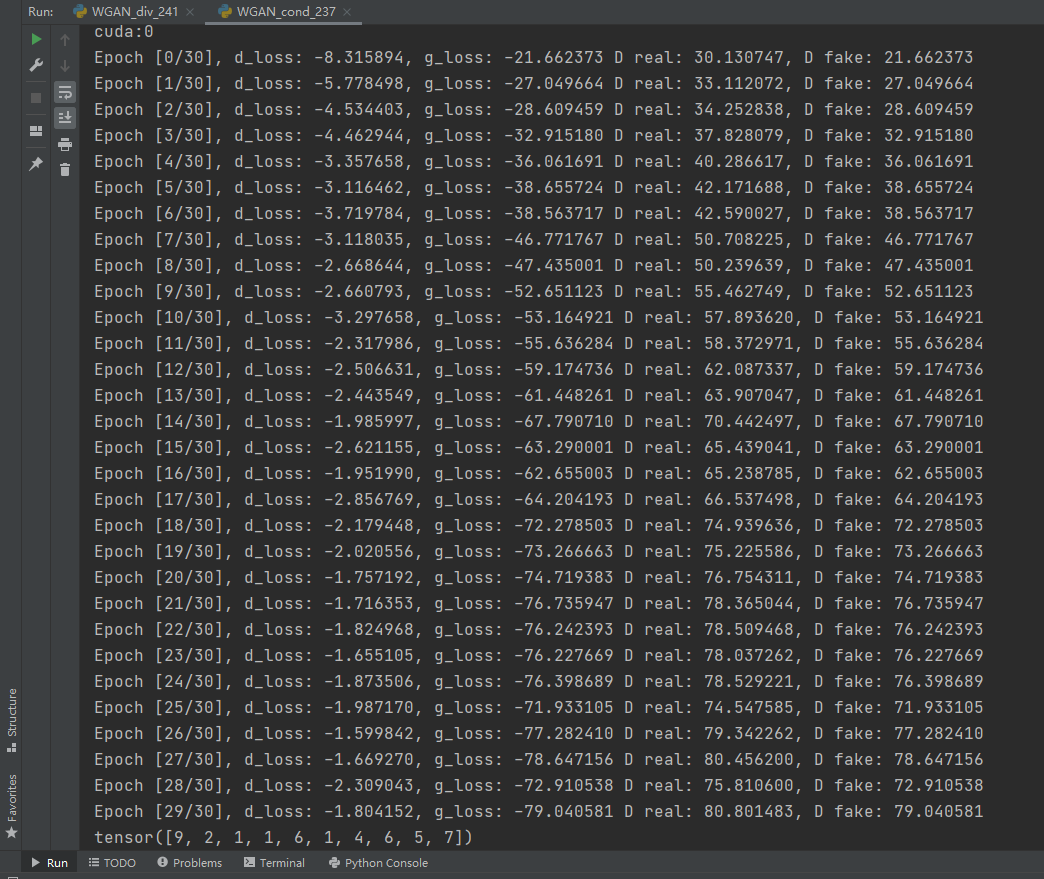

## 1.4 定义模型的训练函数# 定义函数train(),实现模型的训练过程。# 在函数train()中,按照对抗神经网络专题(一)中的式(8-24)实现模型的损失函数。# 判别器的loss为D(fake_samples)-D(real_samples)再加上联合分布样本的梯度惩罚项gradient_penalties,其中fake_samples为生成的模拟数据,real_Samples为真实数据,# 生成器的loss为-D(fake_samples)。def train(D,G,outdir,z_dimension,num_epochs=30): d_optimizer = torch.optim.Adam(D.parameters(),lr=0.001) # 定义优化器 g_optimizer = torch.optim.Adam(G.parameters(),lr=0.001) os.makedirs(outdir,exist_ok=True) # 创建输出文件夹 # 在函数train()中,判别器和生成器是分开训练的。让判别器学习的次数多一些,判别器每训练5次,生成器优化1次。 # WGAN_gp不会因为判别器准确率太高而引起生成器梯度消失的问题,所以好的判别器会让生成器有更好的模拟效果。 for epoch in range(num_epochs): for i,(img,lab) in enumerate(train_loader): num_img = img.size(0) # 训练判别器 real_img = img.to(device) y_one_hot = torch.zeros(lab.shape[0],10).scatter_(1,lab.view(lab.shape[0],1),1).to(device) for ii in range(5): # 循环训练5次 d_optimizer.zero_grad() # 梯度清零 real_img = real_img.requires_grad_(True) # 在WGAN-gp基础上新增,将输入参数real_img设置为可导 # 对real_img进行判别 real_out = D(real_img, y_one_hot) # 生成随机值 z = torch.randn(num_img, z_dimension).to(device) fake_img = G(z, y_one_hot) # 生成fake_img fake_out = D(fake_img, y_one_hot) # 对fake_img进行判别 # 计算梯度惩罚项 gradient_penalty_div = compute_w_div(real_img, real_out, fake_img, fake_out) # 使用gradient_penalty_div()求梯度 # 计算判别器的loss d_loss = -torch.mean(real_out) + torch.mean(fake_out) + gradient_penalty_div d_loss.backward() d_optimizer.step() # 训练生成器 for ii in range(1): g_optimizer.zero_grad() # 梯度清0 z = torch.randn(num_img, z_dimension).to(device) fake_img = G(z, y_one_hot) fake_out = D(fake_img, y_one_hot) g_loss = -torch.mean(fake_out) g_loss.backward() g_optimizer.step() # 输出可视化结果 fake_images = to_img(fake_img.cpu().data) real_images = to_img(real_img.cpu().data) rel = torch.cat([to_img(real_images[:10]), fake_images[:10]], axis=0) imshow(torchvision.utils.make_grid(rel, nrow=10), os.path.join(outdir, 'fake_images-{}.png'.format(epoch + 1))) # 输出训练结果 print('Epoch [{}/{}], d_loss: {:.6f}, g_loss: {:.6f} ''D real: {:.6f}, D fake: {:.6f}'.format(epoch, num_epochs, d_loss.data, g_loss.data, real_out.data.mean(), fake_out.data.mean())) # 保存训练模型 torch.save(G.state_dict(), os.path.join(outdir, 'div-generator.pth')) torch.save(D.state_dict(), os.path.join(outdir, 'div-discriminator.pth'))2.5 代码实战:实现可视化模型结果----WGAN_div_241.py(第5部分)

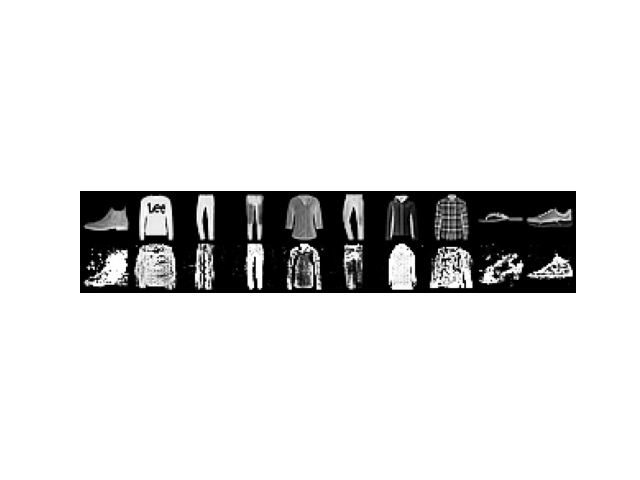

# 1.5 定义函数,实现可视化模型结果:获取一部分测试数据,显示由模型生成的模拟数据。def displayAndTest(D,G,z_dimension): # 可视化结果 sample = iter(test_loader) images, labels = sample.next() y_one_hot = torch.zeros(labels.shape[0], 10).scatter_(1,labels.view(labels.shape[0], 1), 1).to(device) num_img = images.size(0) # 获取样本个数 with torch.no_grad(): z = torch.randn(num_img, z_dimension).to(device) # 生成随机数 fake_img = G(z, y_one_hot) fake_images = to_img(fake_img.cpu().data) # 生成模拟样本 rel = torch.cat([to_img(images[:10]), fake_images[:10]], axis=0) imshow(torchvision.utils.make_grid(rel, nrow=10)) print(labels[:10])2.6 代码实战:调用函数并训练模型----WGAN_div_241.py(第6部分)

# 1.6 调用函数并训练模型:实例化判别器和生成器模型,并调用函数进行训练if __name__ == '__main__': z_dimension = 40 # 设置输入随机数的维度 D = WGAN_D().to(device) # 实例化判别器 G = WGAN_G(z_dimension).to(device) # 实例化生成器 train(D, G, './w_img', z_dimension) # 训练模型 displayAndTest(D, G, z_dimension) # 输出可视化结果:

WGAN-dⅳ模型也会输出非常清晰的模拟样本。在有关WGAN-div的论文中,曾拿WGAN-div模型与WGAN-gp模型进行比较,发现WGAN-diⅳ模型的FID分数更高一些(FID是评价GAN生成图片质量的一种指标)。

3 代码总览(WGAN_div_241.py)

import torchimport torchvisionfrom torchvision import transformsfrom torch.utils.data import DataLoaderfrom torch import nnimport torch.autograd as autogradimport matplotlib.pyplot as pltimport numpy as npimport matplotlibimport osos.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"# 1.1 引入模块并载入样本:定义基本函数,加载FashionMNIST数据集def to_img(x): x = 0.5 * (x+1) x = x.clamp(0,1) x = x.view(x.size(0),1,28,28) return xdef imshow(img,filename = None): npimg = img.numpy() plt.axis('off') array = np.transpose(npimg,(1,2,0)) if filename != None: matplotlib.image.imsave(filename,array) else: plt.imshow(array) # plt.savefig(filename) # 保存图片 注释掉,因为会报错,暂时不知道什么原因 2022.3.26 15:20 plt.show()img_transform = transforms.Compose( [ transforms.ToTensor(), transforms.Normalize(mean=[0.5],std=[0.5]) ])data_dir = './fashion_mnist'train_dataset = torchvision.datasets.FashionMNIST(data_dir,train=True,transform=img_transform,download=True)train_loader = DataLoader(train_dataset,batch_size=1024,shuffle=True)# 测试数据集val_dataset = torchvision.datasets.FashionMNIST(data_dir,train=False,transform=img_transform)test_loader = DataLoader(val_dataset,batch_size=10,shuffle=False)# 指定设备device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")print(device)# 1.2 实现生成器和判别器 :因为复杂部分都放在loss值的计算方面了,所以生成器和判别器就会简单一些。# 生成器和判别器各自有两个卷积和两个全连接层。生成器最终输出与输入图片相同维度的数据作为模拟样本。# 判别器的输出不需要有激活函数,并且输出维度为1的数值用来表示结果。# 在GAN模型中,因判别器的输入则是具体的样本数据,要区分每个数据的分布特征,所以判别器使用实例归一化,class WGAN_D(nn.Module): # 定义判别器类D :有两个卷积和两个全连接层 def __init__(self,inputch=1): super(WGAN_D, self).__init__() self.conv1 = nn.Sequential( nn.Conv2d(inputch,64,4,2,1), # 输出形状为[batch,64,28,28] nn.LeakyReLU(0.2,True), nn.InstanceNorm2d(64,affine=True) ) self.conv2 = nn.Sequential( nn.Conv2d(64,128,4,2,1),# 输出形状为[batch,64,14,14] nn.LeakyReLU(0.2,True), nn.InstanceNorm2d(128,affine=True) ) self.fc = nn.Sequential( nn.Linear(128*7*7,1024), nn.LeakyReLU(0.2,True) ) self.fc2 = nn.Sequential( nn.InstanceNorm1d(1,affine=True), nn.Flatten(), nn.Linear(1024,1) ) def forward(self,x,*arg): # 正向传播 x = self.conv1(x) x = self.conv2(x) x = x.view(x.size(0),-1) x = self.fc(x) x = x.reshape(x.size(0),1,-1) x = self.fc2(x) return x.view(-1,1).squeeze(1)# 在GAN模型中,因生成器的初始输入是随机值,所以生成器使用批量归一化。class WGAN_G(nn.Module): # 定义生成器类G:有两个卷积和两个全连接层 def __init__(self,input_size,input_n=1): super(WGAN_G, self).__init__() self.fc1 = nn.Sequential( nn.Linear(input_size * input_n,1024), nn.ReLU(True), nn.BatchNorm1d(1024) ) self.fc2 = nn.Sequential( nn.Linear(1024,7*7*128), nn.ReLU(True), nn.BatchNorm1d(7*7*128) ) self.upsample1 = nn.Sequential( nn.ConvTranspose2d(128,64,4,2,padding=1,bias=False), # 输出形状为[batch,64,14,14] nn.ReLU(True), nn.BatchNorm2d(64) ) self.upsample2 = nn.Sequential( nn.ConvTranspose2d(64,1,4,2,padding=1,bias=False), # 输出形状为[batch,64,28,28] nn.Tanh() ) def forward(self,x,*arg): # 正向传播 x = self.fc1(x) x = self.fc2(x) x = x.view(x.size(0),128,7,7) x = self.upsample1(x) img = self.upsample2(x) return img# 1.3 计算w散度:返回值充当WGAN-gp中的惩罚项,用于计算判别器的损失def compute_w_div(real_samples,real_out,fake_samples,fake_out): # 定义参数 k = 2 p = 6 # 计算真实空间的梯度 weight = torch.full((real_samples.size(0),),1,device=device) real_grad = autograd.grad(outputs=real_out, inputs=real_samples, grad_outputs=weight, create_graph=True, retain_graph=True, only_inputs=True)[0] # L2范数 real_grad_norm = real_grad.view(real_grad.size(0),-1).pow(2).sum(1) # 计算模拟空间的梯度 fake_grad = autograd.grad(outputs=fake_out, inputs=fake_samples, grad_outputs=weight, create_graph=True, retain_graph=True, only_inputs=True)[0] # L2范数 fake_grad_norm = fake_grad.view(fake_grad.size(0),-1).pow(2).sum(1) # 计算W散度距离 div_gp = torch.mean(real_grad_norm (p/2)+fake_grad_norm(p/2))*k/2 return div_gp## 1.4 定义模型的训练函数# 定义函数train(),实现模型的训练过程。# 在函数train()中,按照对抗神经网络专题(一)中的式(8-24)实现模型的损失函数。# 判别器的loss为D(fake_samples)-D(real_samples)再加上联合分布样本的梯度惩罚项gradient_penalties,其中fake_samples为生成的模拟数据,real_Samples为真实数据,# 生成器的loss为-D(fake_samples)。def train(D,G,outdir,z_dimension,num_epochs=30): d_optimizer = torch.optim.Adam(D.parameters(),lr=0.001) # 定义优化器 g_optimizer = torch.optim.Adam(G.parameters(),lr=0.001) os.makedirs(outdir,exist_ok=True) # 创建输出文件夹 # 在函数train()中,判别器和生成器是分开训练的。让判别器学习的次数多一些,判别器每训练5次,生成器优化1次。 # WGAN_gp不会因为判别器准确率太高而引起生成器梯度消失的问题,所以好的判别器会让生成器有更好的模拟效果。 for epoch in range(num_epochs): for i,(img,lab) in enumerate(train_loader): num_img = img.size(0) # 训练判别器 real_img = img.to(device) y_one_hot = torch.zeros(lab.shape[0],10).scatter_(1,lab.view(lab.shape[0],1),1).to(device) for ii in range(5): # 循环训练5次 d_optimizer.zero_grad() # 梯度清零 real_img = real_img.requires_grad_(True) # 在WGAN-gp基础上新增,将输入参数real_img设置为可导 # 对real_img进行判别 real_out = D(real_img, y_one_hot) # 生成随机值 z = torch.randn(num_img, z_dimension).to(device) fake_img = G(z, y_one_hot) # 生成fake_img fake_out = D(fake_img, y_one_hot) # 对fake_img进行判别 # 计算梯度惩罚项 gradient_penalty_div = compute_w_div(real_img, real_out, fake_img, fake_out) # 求梯度 # 计算判别器的loss d_loss = -torch.mean(real_out) + torch.mean(fake_out) + gradient_penalty_div d_loss.backward() d_optimizer.step() # 训练生成器 for ii in range(1): g_optimizer.zero_grad() # 梯度清0 z = torch.randn(num_img, z_dimension).to(device) fake_img = G(z, y_one_hot) fake_out = D(fake_img, y_one_hot) g_loss = -torch.mean(fake_out) g_loss.backward() g_optimizer.step() # 输出可视化结果 fake_images = to_img(fake_img.cpu().data) real_images = to_img(real_img.cpu().data) rel = torch.cat([to_img(real_images[:10]), fake_images[:10]], axis=0) imshow(torchvision.utils.make_grid(rel, nrow=10), os.path.join(outdir, 'fake_images-{}.png'.format(epoch + 1))) # 输出训练结果 print('Epoch [{}/{}], d_loss: {:.6f}, g_loss: {:.6f} ''D real: {:.6f}, D fake: {:.6f}'.format(epoch, num_epochs, d_loss.data, g_loss.data, real_out.data.mean(), fake_out.data.mean())) # 保存训练模型 torch.save(G.state_dict(), os.path.join(outdir, 'div-generator.pth')) torch.save(D.state_dict(), os.path.join(outdir, 'div-discriminator.pth'))# 1.5 定义函数,实现可视化模型结果:获取一部分测试数据,显示由模型生成的模拟数据。def displayAndTest(D,G,z_dimension): # 可视化结果 sample = iter(test_loader) images, labels = sample.next() y_one_hot = torch.zeros(labels.shape[0], 10).scatter_(1,labels.view(labels.shape[0], 1), 1).to(device) num_img = images.size(0) # 获取样本个数 with torch.no_grad(): z = torch.randn(num_img, z_dimension).to(device) # 生成随机数 fake_img = G(z, y_one_hot) fake_images = to_img(fake_img.cpu().data) # 生成模拟样本 rel = torch.cat([to_img(images[:10]), fake_images[:10]], axis=0) imshow(torchvision.utils.make_grid(rel, nrow=10)) print(labels[:10])# 1.6 调用函数并训练模型:实例化判别器和生成器模型,并调用函数进行训练if __name__ == '__main__': z_dimension = 40 # 设置输入随机数的维度 D = WGAN_D().to(device) # 实例化判别器 G = WGAN_G(z_dimension).to(device) # 实例化生成器 train(D, G, './w_img', z_dimension) # 训练模型 displayAndTest(D, G, z_dimension) # 输出可视化