C语言---数据结构(1)--时间复杂和空间复杂度计算_c语言时间复杂度计算

1.1算法效率

算法效率分为时间效率和空间效率

时间效率被称为时间复杂度,而空间效率被称作空间复杂度。 时间复杂度主要衡量的是一个算法的运行速度,而空间复杂度主要衡量一个算法所需要的额外空间,在计算机发展的早期,计算机的存储容量很小。所以对空间复杂度很是在乎。但是经过计算机行业的迅速发展,计算机的存储容量已经达到了很高的程度。所以我们如今已经不需要再特别关注一个算法的空间复杂度,现在主要关注的是空间效率

1.2时间复杂度的概念

时间复杂度的定义:在计算机科学中,算法的时间复杂度是一个函数,它定量描述了该算法的运

行时间。一个算法执行所耗费的时间,从理论上说,是不能算出来的,只有你把你的程序放在机

器上跑起来,才能知道。但是我们需要每个算法都上机测试吗?是可以都上机测试,但是这很麻

烦,所以才有了时间复杂度这个分析方式。一个算法所花费的时间与其中语句的执行次数成正比

例,算法中的基本操作的执行次数,为算法的时间复杂度。

1.3 空间复杂度的概念

空间复杂度是对一个算法在运行过程中临时占用存储空间大小的量度 。空间复杂度不是程序占用

了多少bytes的空间,因为这个也没太大意义,所以空间复杂度算的是变量的个数。空间复杂度计

算规则基本跟实践复杂度类似,也使用大O渐进表示法。

2.如何计算常见算法的时间复杂度和空间复杂度

时间复杂度不算时间,算次数,空间复杂度不算空间,算变量个数

时间复杂度的计算

实际中我们在计算时间复杂度时,我们其实并不一定要计算精确的执行次数,只需要大概执行次数,那么我们这里就使用大O的渐进表示法

大O符号:是用于描述函数渐进行为的数学符号

推导大O阶方法:

1、用常数1取代运行时间中的所有加法常数。

2、在修改后的运行次数函数中,只保留最高阶项。

3、如果最高阶项存在且不是1,则去除与这个项目相乘的常数。得到的结果就是大O阶。

使用大O的渐进表示法以后,

另外有些算法的时间复杂度存在最好、平均和最坏情况:

最坏情况:任意输入规模的最大运行次数(上界)

平均情况:任意输入规模的期望运行次数

最好情况:任意输入规模的最小运行次数(下界)

例如:在一个长度为N数组中搜索一个数据x

最好情况:1次找到

最坏情况:N次找到

平均情况:N/2次找到

在实际中一般情况关注的是算法的最坏运行情况,所以数组中搜索数据时间复杂度为O(N)

比如后面的计算strchr的时间复杂度

// 请计算一下Func1基本操作执行了多少次?void Func1(int N){ int count = 0; for (int i = 0; i < N; ++i) { for (int j = 0; j < N; ++j) { ++count; } } //对于这个循环来说,因为这是一个嵌套的循环,两层循环,外面执行N次,里面执行N次 //所以这个循环总共执行N*N次 for (int k = 0; k < 2 * N; ++k) { ++count; } //这个循环的循环次数是2*N int M = 10; while (M--) { ++count; } printf(\"%d\\n\", count);}//因为M被初始化为10,那么这个while循环会执行10次int main(){ int n=0; scanf(\"%d\", &n); Func1(n); return 0;}//所以看来这个准确的次数是:N*N+2*N+10//F(N)=N^2+2*N+10//N=10F(N)=130//N=100F(N)=10210//N=1000F(N)=1002010//随着N的增大,这个表达式中N^2对结果的影响是最大的//时间复杂度是一个估算,是去看表达式中影响最大的那一项//时间复杂度用到一个//大O的渐进表示法,估算//时间复杂度:O(N^2)Func1的时间复杂度为:O(N6^2)

我们会发现大O的渐进表示法去掉了那些对结果影响不大的项,简洁明了的表达了代码执行的次数

// 计算Func2的时间复杂度?void Func2(int N){ int count = 0; for (int k = 0; k < 2 * N; ++k) { ++count; } int M = 10; while (M--) { ++count; } printf(\"%d\\n\", count);}//2*N+10//时间复杂度就是O(N)//那么为什么不是O(2N)呢?//根据大O阶推导的方法三中提到://3、如果最高阶项存在且不是1,则去除与这个项目相乘的常数。得到的结果就是大O阶。//使用大O的渐进表示法以后,//因为我们的N逐渐变大,前面的2对结果的影响不大//所以这个题目的时间复杂度就是O(N)我们要求去掉N前面的2,随着N的变大,前面的2对结果的影响不是很大,所以去掉

那么这个代码的时间复杂度就是O(N)

// 计算Func3的时间复杂度?void Func3(int N, int M){ int count = 0; for (int k = 0; k < M; ++k) { ++count; } for (int k = 0; k < N; ++k) { ++count; } printf(\"%d\\n\", count);}//这个代码的时间复杂度就是O(N+M)//因为我们现在不知道N和M的大小,现在对于我都是未知数//所以我们在计算时间复杂度的时候,可能遇到的不止一个未知数,可能有多个未知数//一般情况下只有1个未知数的//但是这个题如果有个条件:M远大于N,那么这个代码的时间复杂度就是O(M)//假设M和N差不多大,那么时间复杂度就是O(M)或者O(N)这个代码存在多个未知的值,M和N,那么这个复杂度就要根据这个M和N的大小进行比较了

// 计算Func4的时间复杂度?void Func4(int N)//这个N我们没有用到{ int count = 0; for (int k = 0; k < 100; ++k) { ++count; } printf(\"%d\\n\", count);}//因为这个代码给的N我们没有用到//那么这个代码的时间复杂度是多少呢?//是O(1)//为什么呢/*根据推导大O阶方法1我,们可以知道1、用常数1取代运行时间中的所有加法常数。因为这个循环的次数是100,是常数,那么我们的这个时间复杂度就用1来替换了//那么我们最终就得到了O(1)所以我们在以后遇到的常数次都是O(1)不管这个常数次是多大,N永远都是不变的,N的效率是固定的*/因为这个代码是没有用到未知数的,循环的次数仅仅只是一个常数,那么这个题的时间复杂度我们就用O(1)表示,将常量用1来替换

// 计算strchr的时间复杂度?const char* strchr(const char* str, char character){ //遍历字符串中\'\\0\'之前的字符, while (*str != \'\\0\') { if (*str == character)//将这个指针指向的字符和传过来的字符进行判断 //如果是一样的字符,那么我们就返回这个字符的位置 return str; ++str;//指针++,换下一个字符进行判断 } return NULL;//走到最后都没找到,我们就直接返回一个空指针}//这个函数是我们在字符串里面找到我们想要的字符//那么这个题的时间复杂度怎么说呢?/** 假设这个字符串的长度是N那么就存在三种可能性:最好、最坏、平均最坏:就是运行N次才找到 O(N)平均:进行到一半才找到 O(N/2)最好:一个常数下,假如5次、1次就找到了 O(1)但是在实际中一般情况关注的是算法的最坏运行情况所以数组中搜索数据的这个题的时间复杂度是O(N)我们做出最坏的打算,但是这里也是最靠谱的*//*做出拓展1.我们现在给出一个字符串,是长还是短,这个字符串的长度都是不变的那么这个题时间复杂度就是O(1),效率是不变的2.如果时间复杂度是O(N)的话,是表达的什么呢?N就是字符串的长度,随着字符串的变长,这个题目的效率也线性的变长了*/这里我们用到了最坏运行情况,在这个字符串中找了N次才找到我们想要的字符

// 计算BubbleSort的时间复杂度?void BubbleSort(int* a, int n){ assert(a); for (size_t end = n; end > 0; --end) { int exchange = 0; for (size_t i = 1; i a[i]) { Swap(&a[i - 1], &a[i]); exchange = 1; } } if (exchange == 0) break; }}/*我们先来归纳一下冒泡排序的思想:在这个数组中,如果第一个数大于第二个数,那么就将这两个数的位置进行交换将大的数字放后面,小的数字放前面第一趟冒泡N第二趟冒泡N-1第三趟冒泡N-2第N趟冒泡1那么这里的次数就是首项+尾项乘以项数除以二(N+1)*N/2=(N*N+N)/2上面就是这道题准确的次数但对于结果影响最大的一项是N^2,因为上面将表达式分解了,所以最大影响的是N方那么表达就是O(N^2)这个是阶乘,我们将阶乘的每一项加起来就是这个准确次数了*/这个是阶乘,我们将阶乘的每一项加起来就是这个准确次数了,

不是说一层循环就是O(N),两层循环就是O(N^2)

具体看程序,最好通过过程去分析

// 计算BinarySearch的时间复杂度?//二分查找//二分查找的前提是数组是有序的int BinarySearch(int* a, int n, int x){ assert(a); int begin = 0; int end = n; while (begin > 1); if (a[mid] x) end = mid; else return mid; } return -1;}/*最好的情况:O(1)假设找了x次1*2*2*2*2*2.....找了多少次就乘了多少次2最后的结果就是这整个数组的长度2^x=N所以次数x就是log2N--以2为底N的对数那么对于这个题的话算法的复杂度计算,喜欢省略写成logN,将2省略掉,因为很多地方不好写底数有些书本,或者网上资料会写成O(lgN),严格来说这个是不对的,因为这个底数就是10,不符合那么时间复杂度就是O(logN)*/// 计算阶乘递归Factorial的时间复杂度?//这道题实现阶乘递归long long Factorial(size_t N){ return N < 2 ? N : Factorial(N - 1) * N;}/*整个次数就是1*2*3*4*5*6*7*8*9*10就是10的阶乘那么这个时间复杂度是多少呢?递归调用了N次,每次递归运算是O(1)那么整体就是O(N)*/常见的时间复杂度:O(N^2) O(N) O(logN) O(1)

复杂度对比

O(1)就是随着这个数量的增加,他是一直都是不变的

二分查找,在一个中国有14亿人中找一个人,最多找几次

31次就行 2的30次方是10亿,那么2的31次方就是20亿人

空间复杂度的计算

空间复杂度是对一个算法在运行过程中临时占用存储空间大小的量度 。空间复杂度不是程序占用

了多少bytes的空间,因为这个也没太大意义,所以空间复杂度算的是变量的个数。空间复杂度计

算规则基本跟实践复杂度类似,也使用大O渐进表示法,类似时间复杂度的方式,也是估算

总结:时间复杂度不算时间,算次数,空间复杂度不算空间,算变量个数

// 计算BubbleSort的空间复杂度?void BubbleSort(int* a, int n){ assert(a); for (size_t end = n; end > 0; --end) { int exchange = 0; for (size_t i = 1; i a[i]) { Swap(&a[i - 1], &a[i]); exchange = 1; } } if (exchange == 0) break; }}/*size_t end = nint exchange = 0size_t i = 1三个变量因为这里的3是常数,那么我们就用1将3代替那么空间复杂度就是O(1)循环走了N次,重复利用的是一个空间*/// 计算Fibonacci的空间复杂度?long long* Fibonacci(size_t n){ if (n == 0) return NULL; long long* fibArray = (long long*)malloc((n + 1) * sizeof(long long)); fibArray[0] = 0; fibArray[1] = 1; for (int i = 2; i <= n; ++i) { fibArray[i] = fibArray[i - 1] + fibArray[i - 2]; } return fibArray;}/*size_t nfibArrayfibArray[0] = 0;fibArray[1] = 1;i这里是5个变量,但是那里有一个malloc,开辟了一个N+1个元素的数组,有n+1个所以总共是N+6个,但是随着N的增大,6对结果的影响不大所以这里的空间复杂度就是O(N)大多数情况之下我们遇到的空间复杂度都是O(1)因为变量都是常数个*/这里就不同了,因为我们使用malloc开辟了一个n+1个元素的空间,原本的变量就有5个,所以总共的变量就是N+6,但是6对结果的影响不大,所以这个题的空间复杂度就是O(N)

// 计算阶乘递归Factorial的空间复杂度?long long Factorial(size_t N){ return N < 2 ? N : Factorial(N - 1) * N;}/*递归调用了N层,每次调用简历一个栈帧使用了常数个空间,那么每一层就是O(1)有N个栈帧,空间复杂度就是O(N)空间复杂度看的是我们最多的时候占了多少空间,也就是看最坏的情况之最大空间是多少对于递归,调用时建立栈帧,返回时就会销毁栈帧*/空间复杂度看的是我们最多的时候占了多少空间,也就是看最坏的情况的时候我们用了最大空间是多少

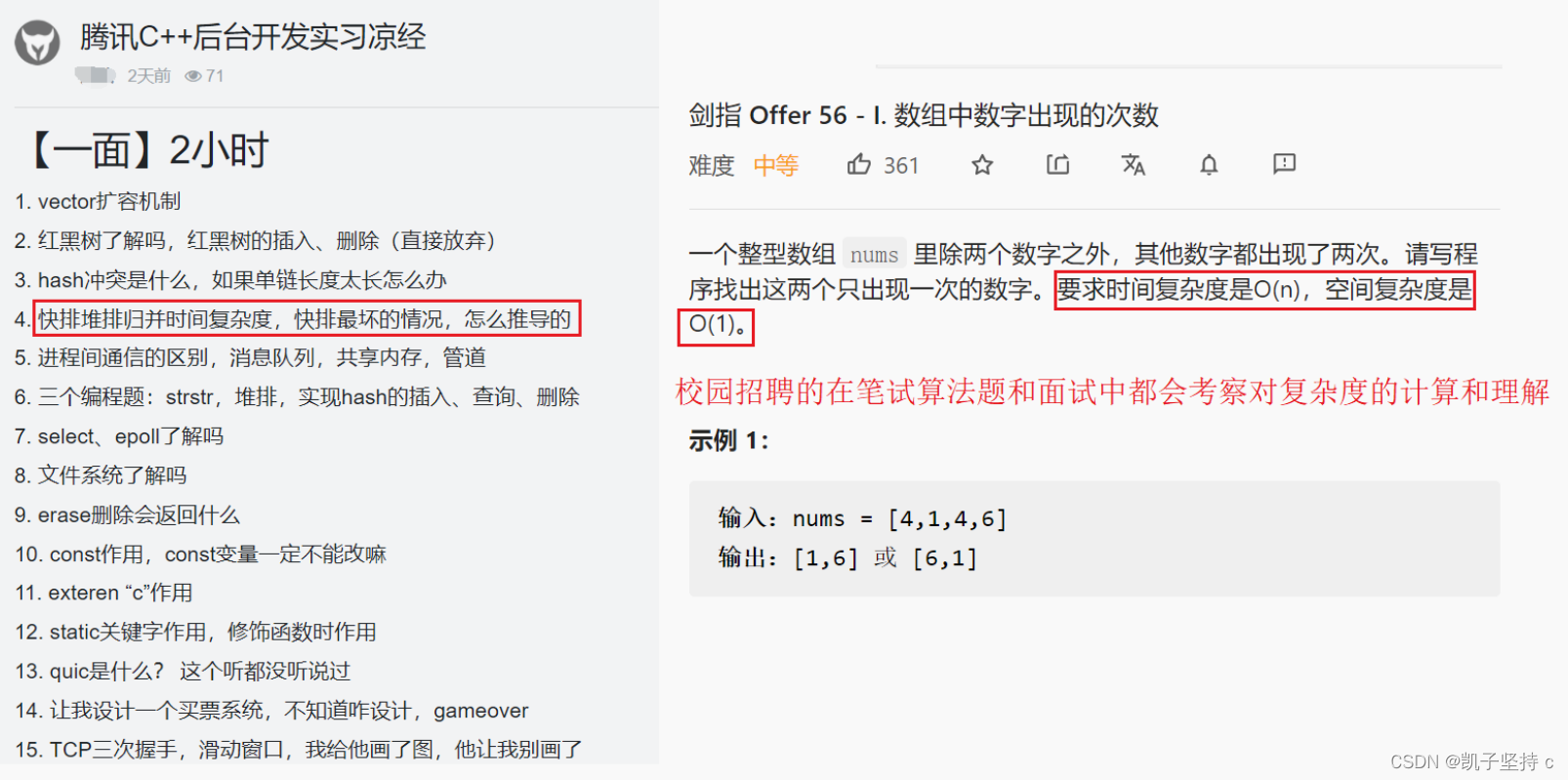

复杂度计算在算法中的意义

3.有复杂度要求的算法题练习

找到消失的数字--类似单身狗问题

重点:0跟任何数异或那么得到的就是任何数

//数组nums包含从0到n的所有整数,但其中缺了一个。请编写代码找出那个缺失的整数。你有办法在O(n)时间内完成吗?/*思路一:给数组排序,我们现将数组排成有序数列,再进行判断,看看两个数之间相差的大小是不是1但是不符合时间复杂度,存在问题,用快速排序的时间复杂度都是O(N*logN),那么其他的排序的时间复杂度更大了排序直接将时间都消耗完了,所以这个题目排序是不行的思路二:将原本不缺少数字的数组内的数字相加得到S1,再将缺少了一个数字的数字内的元素全加,得到了S2那么S2-S1得到的就是缺少的数字思路三:使用异或*(相同就为0,相异为1)2^3000000100000001100000001---异或的结果相同的数异或就是0 1^1=0 2^2=0那么我们将两个数组内的数依次进行异或一个数组不差数字一个数组差一个数字两个数组内的元素进行异或,最后出现两次的数就抵消为0,那么最后的结果就是只出现一个的那个数*/int missingNumber(int*nums,int numsSize)//numsSize是这个却数字的数组的元素个数{ int x=0;//创建变量x并赋值为0,因为0跟任何数异或得到的都是任何数 for(int i=0;i<numsSize;i++) { x^=nums[i];//将数组内的值进行累异或 } for(int j=0;j<numsSize+1;j++)//因为上面传的是一个差了一个数的数组,那么我们现在要将不差数字的那个数组进行累异或操作,所以这个数组的大小是nmusSize+1 { //这里的x最开始是上面缺数字的那个数组累异或的结果 x^=j;//从0开始累异或,0和任何数异或得到的就是那个任何数, } return x;}旋转数组

/*给定一个整数数组 nums,将数组中的元素向右轮转 k 个位置,其中 k 是非负数。输入: nums = [1,2,3,4,5,6,7], k = 3输出: [5,6,7,1,2,3,4]解释:向右轮转 1 步: [7,1,2,3,4,5,6]向右轮转 2 步: [6,7,1,2,3,4,5]向右轮转 3 步: [5,6,7,1,2,3,4]尽可能想出更多的解决方案,至少有 三种 不同的方法可以解决这个问题。你可以使用空间复杂度为 O(1) 的 原地 算法解决这个问题吗?要求空间复杂度为O(1)*/第一种方法:

跑不过去,存在漏洞,不符合题目要求,测试用例中给了一个非常大的数字

/*一次右旋就是将数组中最后的一个数保留,再将前面的数字往后挪一位,再把最后的那个数放到第一位*/void rotate(int* nums, int numsSize, int k){ //当前算法时间复杂度是:O(N*K),内循环走了N次,外循环走了k次,那么总的次数就是N*K while(k--) { int tmp=nums[numsSize-1];//创建一个临时变量将数组中最后一个元素进行保存,总共numsSize个数,那么最后一个数下标就是numsSize-1 for(int end=numsSize-2;end>=0;--end)//创建临时变量end,让end指向这个数组的倒数第二个元素的位置,下标就是numsSize-2 {//循环从这个数组的倒数第二个元素开始,我们每次将数组中两个相邻的数字中的后面一位上的数字赋值为前面的数字 //就是相当于将前面的数字往后挪了一位 nums[end+1]=nums[end]; } nums[0]=tmp;//将数组内的元素整体向后移了一位,那么第一位的数字我们就将之前赋值上的最后一位数组放过去 //那么现在第一位上面就是上次右旋最后一位数字了 //那么上面的代码就是第一次旋转的代码了,如果像旋转k次的话,那我们就将这个旋转一次的代码放到while循环里面去 }}//但是这个方法对于这个题是跑不过的,所以我们得换方法第二种方法:

以空间换时间

/*思路二:以空间换时间将数组中的后k个放到前面开,将前面的剩下的N-K个放到后面去这种的话时间复杂度是O(N),空间复杂度是O(N),但是这样就不满足题目的要求了,题目要求空间复杂度为O(1)*/void rotate(int* nums, int numsSize, int k){}#include void rotate(int nums[], int n, int k) { // 计算实际需要轮转的位数,因为 k 可能大于数组长度 k = k % n; int temp[k]; // 创建临时数组存储 k 个元素 // 将数组的后 k 个元素存储到临时数组 for (int i = 0; i = k; i--) { nums[i] = nums[i - k]; } // 将临时数组中的元素复制到数组的前 k 个位置 for (int i = 0; i < k; i++) { nums[i] = temp[i]; }}第三种方法:

将后K个逆置,前N-K个逆置,再整体逆置

创建逆置的函数,我们再将具体的区间传上去进行逆置

/*思路三:将后K个逆置,前N-K个逆置,再整体逆置1 2 3 4 5 6 71 2 3 4 7 6 54 3 2 1 7 6 5 将4 5 对换位置,6 3对换位置 2 7对换位置5 6 7 1 2 3 4----这就是旋转3步的结果*//*假设k=3假设数组是1 2 3 4 5 6 7我们现在要旋转k次,那么我们先旋转数组后K个最后一个元素7的下表是N-1,那么5的下标就是N-K将N-K到N-1这个范围的元素先进行逆置我们再将前面剩下的N-K个元素进行逆置数组首元素的下标是0,4的下标是N-K-1最后我们就将整体进行逆置就好了*///有可能K大于这个数组的大小,那么就会存在越界的情况,/*假如k是13,但是数组元素是7,那么我们应该怎么办呢?k是13相当于我们旋转了6次,因为旋转7次相当于不变,因为旋转了7次的结果和原来是一样的,那么我们就要进行判断,如果k>=numsSize,旋转次数大于数组元素个数的话,我们就将k的值取模*/void Reverse(int* nums, int left, int right)//对数组的一段区间进行逆置{ /* 1 2 3 4 1和4交换,2和3交换,最开始的left指向1,right指向4,交换完成之后,;left++,right--,再进行2和3的交换 */ while(left=numsSize) { k%=numsSize; } Reverse(nums,numsSize-k,numsSize-1);//先将后K个进行逆置 Reverse(nums,0,numsSize-k-1);//将剩下的N-K个元素进行逆置 Reverse(nums,0,numsSize-1);//将整体进行逆置}