[DataSophon] Integrating Zeppelin into DataSophon 1.2.1 and Configuring the Hive, Trino, and Spark Interpreters

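Contents

1. Introduction to Zeppelin

2. Implementation steps

2.1 Downloading the Zeppelin package

2.2 Worker configuration files

3. Configuring common interpreters

3.1 Configuring the Hive interpreter

3.2 Configuring the Trino interpreter

3.3 Configuring the Spark interpreter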

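1. Introduction to Zeppelin

Zeppelin is an open-source project of the Apache Software Foundation. It is a web-based notebook that provides a framework for data visualization. On the backend it can connect to many data engines, such as JDBC, Spark, and Hive, and it supports interactive data analysis in several languages, such as Scala, SQL, and Python. This article covers Zeppelin in two parts: installation and usage.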

2. Implementation steps

Official installation reference: datasophon/docs/zh/datasophon集成zeppelin.md (dev branch of datavane/datasophon on GitHub)

2.1 Downloading the Zeppelin package

https://dlcdn.apache.org/zeppelin/zeppelin-0.10.1/zeppelin-0.10.1-bin-all.tgz

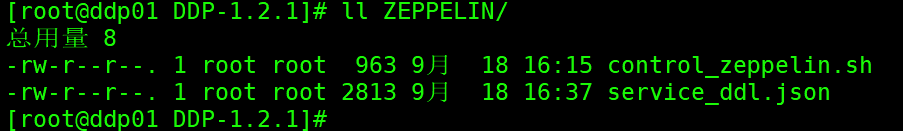

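Create the directory that holds the service configuration:

    # Create the configuration directory
    mkdir -p /opt/datasophon/DDP/packages/datasophon-manager-1.2.1/conf/meta/DDP-1.2.1/ZEPPELIN

Prepare the service JSON file and the start/stop script. The start/stop script must also be copied into the root directory of the installation package.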
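The service definition below expects an archive named zeppelin-0.10.1.tar.gz that unpacks to zeppelin-0.10.1, while the download is named zeppelin-0.10.1-bin-all.tgz. The following is a minimal sketch of the repackaging and copy step, assuming the downloaded archive contains a single top-level directory. The function name `repack_zeppelin` and its arguments are hypothetical helpers, not part of DataSophon.

```shell
#!/bin/bash
# Sketch only: repackage the downloaded archive so that it matches
# packageName / decompressPackageName in service_ddl.json.
# repack_zeppelin is a hypothetical helper, not a DataSophon command.

repack_zeppelin() {
  local src_tgz="$1"         # e.g. zeppelin-0.10.1-bin-all.tgz
  local control_script="$2"  # e.g. control_zeppelin.sh
  local out_dir="$3"         # directory that receives the new archive
  local name="zeppelin-0.10.1"
  local work_dir top

  work_dir=$(mktemp -d) || return 1

  # Unpack, then rename the top-level directory to the expected name.
  tar -xzf "$src_tgz" -C "$work_dir" || return 1
  top=$(ls "$work_dir" | head -n 1)
  if [ "$top" != "$name" ]; then
    mv "$work_dir/$top" "$work_dir/$name" || return 1
  fi

  # Put the start/stop script in the package root and make it executable.
  cp "$control_script" "$work_dir/$name/" || return 1
  chmod +x "$work_dir/$name/$(basename "$control_script")"

  # Re-create the archive under the name the service definition expects.
  mkdir -p "$out_dir" || return 1
  tar -czf "$out_dir/$name.tar.gz" -C "$work_dir" "$name" || return 1
  rm -rf "$work_dir"
}
```

A call might look like `repack_zeppelin zeppelin-0.10.1-bin-all.tgz control_zeppelin.sh /opt/datasophon/DDP/packages`, where the output directory is an assumption about where your DataSophon installation keeps its packages.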

control_zeppelin.sh

    #!/bin/bash

    SCRIPT_DIR=$(cd "$(dirname "$0")" && pwd)
    ZEPPELIN_HOME=$SCRIPT_DIR  # The directory containing this script is the Zeppelin installation directory
    ZEPPELIN_DAEMON=$ZEPPELIN_HOME/bin/zeppelin-daemon.sh

    start_zeppelin() {
        echo "Starting Zeppelin..."
        "$ZEPPELIN_DAEMON" start
    }

    stop_zeppelin() {
        echo "Stopping Zeppelin..."
        "$ZEPPELIN_DAEMON" stop
    }

    check_zeppelin_status() {
        echo "Checking Zeppelin status..."
        "$ZEPPELIN_DAEMON" status
        if [ $? -eq 0 ]; then
            echo "Zeppelin is running."
            exit 0
        else
            echo "Zeppelin is not running."
            exit 1
        fi
    }

    case "$1" in
        start)
            start_zeppelin
            ;;
        stop)
            stop_zeppelin
            ;;
        restart)
            stop_zeppelin
            sleep 5  # Wait a moment so that Zeppelin has fully stopped
            start_zeppelin
            ;;
        status)
            check_zeppelin_status
            ;;
        *)
            echo "Usage: $0 {start|stop|restart|status}"
            exit 1
            ;;
    esac

service_ddl.json

    {
      "name": "ZEPPELIN",
      "label": "ZEPPELIN",
      "description": "Interactive data analysis notebook",
      "version": "0.10.1",
      "sortNum": 1,
      "dependencies": [],
      "packageName": "zeppelin-0.10.1.tar.gz",
      "decompressPackageName": "zeppelin-0.10.1",
      "roles": [
        {
          "name": "ZeppelinServer",
          "label": "ZeppelinServer",
          "roleType": "master",
          "cardinality": "1+",
          "runAs": {},
          "logFile": "logs/zeppelin-root-${host}.log",
          "startRunner": {
            "timeout": "60",
            "program": "control_zeppelin.sh",
            "args": ["start"]
          },
          "stopRunner": {
            "timeout": "600",
            "program": "control_zeppelin.sh",
            "args": ["stop"]
          },
          "statusRunner": {
            "timeout": "60",
            "program": "control_zeppelin.sh",
            "args": ["status"]
          },
          "externalLink": {
            "name": "ZeppelinServer UI",
            "label": "ZeppelinServer UI",
            "url": "http://${host}:8889"
          }
        }
      ],
      "configWriter": {
        "generators": [
          {
            "filename": "zeppelin-env.sh",
            "configFormat": "custom",
            "outputDirectory": "conf",
            "templateName": "zeppelin-env.ftl",
            "includeParams": ["custom.zeppelin.env"]
          },
          {
            "filename": "zeppelin-site.xml",
            "configFormat": "custom",
            "outputDirectory": "conf",
            "templateName": "zeppelin-site.ftl",
            "includeParams": ["jobmanagerEnable", "custom.zeppelin.site.xml"]
          }
        ]
      },
      "parameters": [
        {
          "name": "jobmanagerEnable",
          "label": "jobmanagerEnable",
          "description": "The Job tab in zeppelin page seems not so useful instead it cost lots of memory and affect the performance. Disable it can save lots of memory",
          "configType": "map",
          "required": true,
          "type": "switch",
          "value": true,
          "configurableInWizard": true,
          "hidden": false,
          "defaultValue": true
        },
        {
          "name": "custom.zeppelin.env",
          "label": "Custom configuration: zeppelin-env.sh",
          "description": "Custom configuration",
          "configType": "custom",
          "required": false,
          "type": "multipleWithKey",
          "value": [{"HADOOP_CONF_DIR": "${HADOOP_CONF_DIR}"}],
          "configurableInWizard": true,
          "hidden": false,
          "defaultValue": [{"HADOOP_CONF_DIR": "${HADOOP_CONF_DIR}"}]
        },
        {
          "name": "custom.zeppelin.site.xml",
          "label": "Custom configuration: zeppelin-site.xml",
          "description": "Custom configuration",
          "configType": "custom",
          "required": false,
          "type": "multipleWithKey",
          "value": [],
          "configurableInWizard": true,
          "hidden": false,
          "defaultValue": ""
        }
      ]
    }

2.2 Worker configuration files

The worker needs two template files: zeppelin-env.ftl and zeppelin-site.ftl.

zeppelin-env.ftl

    #!/bin/bash
    export ZEPPELIN_ADDR=0.0.0.0
    export ZEPPELIN_PORT=8889
    parent_dir=$(dirname "$(cd "$(dirname "$0")" && pwd)")
    export JAVA_HOME=$parent_dir/jdk1.8.0_333
    <#list itemList as item>
    export ${item.name}=${item.value}
    </#list>

zeppelin-site.ftl

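    <configuration>

    <property><name>zeppelin.server.addr</name><value>127.0.0.1</value><description>Server binding address</description></property>
    <property><name>zeppelin.server.port</name><value>8080</value><description>Server port.</description></property>
    <property><name>zeppelin.cluster.addr</name><value></value><description>Server cluster address, eg. 127.0.0.1:6000,127.0.0.2:6000,127.0.0.3:6000</description></property>
    <property><name>zeppelin.server.ssl.port</name><value>8443</value><description>Server ssl port. (used when ssl property is set to true)</description></property>
    <property><name>zeppelin.server.context.path</name><value>/</value><description>Context Path of the Web Application</description></property>
    <property><name>zeppelin.war.tempdir</name><value>webapps</value><description>Location of jetty temporary directory</description></property>
    <property><name>zeppelin.notebook.dir</name><value>notebook</value><description>path or URI for notebook persist</description></property>
    <property><name>zeppelin.interpreter.include</name><value></value><description>All the interpreters that you would like to include. You can only specify either 'zeppelin.interpreter.include' or 'zeppelin.interpreter.exclude'. Specifying them together is not allowed.</description></property>
    <property><name>zeppelin.interpreter.exclude</name><value></value><description>All the interpreters that you would like to exclude. You can only specify either 'zeppelin.interpreter.include' or 'zeppelin.interpreter.exclude'. Specifying them together is not allowed.</description></property>
    <property><name>zeppelin.notebook.homescreen</name><value></value><description>id of notebook to be displayed in homescreen. ex) 2A94M5J1Z Empty value displays default home screen</description></property>
    <property><name>zeppelin.notebook.homescreen.hide</name><value>false</value><description>hide homescreen notebook from list when this value set to true</description></property>
    <property><name>zeppelin.notebook.collaborative.mode.enable</name><value>true</value><description>Enable collaborative mode</description></property>

    <!--
    <property><name>zeppelin.notebook.gcs.dir</name><value></value><description>A GCS path in the form gs://bucketname/path/to/dir. Notes are stored at {zeppelin.notebook.gcs.dir}/{notebook-id}/note.json</description></property>
    <property><name>zeppelin.notebook.gcs.credentialsJsonFilePath</name><value>path/to/key.json</value><description>Path to GCS credential key file for authentication with Google Storage.</description></property>
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.GCSNotebookRepo</value><description>notebook persistence layer implementation</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.user</name><value>user</value><description>user name for s3 folder structure</description></property>
    <property><name>zeppelin.notebook.s3.bucket</name><value>zeppelin</value><description>bucket name for notebook storage</description></property>
    <property><name>zeppelin.notebook.s3.endpoint</name><value>s3.amazonaws.com</value><description>endpoint for s3 bucket</description></property>
    <property><name>zeppelin.notebook.s3.timeout</name><value>120000</value><description>s3 bucket endpoint request timeout in msec.</description></property>
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.S3NotebookRepo</value><description>notebook persistence layer implementation</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.kmsKeyID</name><value>AWS-KMS-Key-UUID</value><description>AWS KMS key ID used to encrypt notebook data in S3</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.kmsKeyRegion</name><value>us-east-1</value><description>AWS KMS key region in your AWS account</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.encryptionMaterialsProvider</name><value>provider implementation class name</value><description>Custom encryption materials provider used to encrypt notebook data in S3</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.sse</name><value>true</value><description>Server-side encryption enabled for notebooks</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.pathStyleAccess</name><value>true</value><description>Path style access for S3 bucket</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.cannedAcl</name><value>BucketOwnerFullControl</value><description>Saves notebooks in S3 with the given Canned Access Control List.</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.s3.signerOverride</name><value>S3SignerType</value><description>optional override to control which signature algorithm should be used to sign AWS requests</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.oss.bucket</name><value>zeppelin</value><description>bucket name for notebook storage</description></property>
    <property><name>zeppelin.notebook.oss.endpoint</name><value>http://oss-cn-hangzhou.aliyuncs.com</value><description>endpoint for oss bucket</description></property>
    <property><name>zeppelin.notebook.oss.accesskeyid</name><value></value><description>Access key id for your OSS account</description></property>
    <property><name>zeppelin.notebook.oss.accesskeysecret</name><value></value><description>Access key secret for your OSS account</description></property>
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.OSSNotebookRepo</value><description>notebook persistence layer implementation</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.azure.connectionString</name><value>DefaultEndpointsProtocol=https;AccountName=;AccountKey=</value><description>Azure account credentials</description></property>
    <property><name>zeppelin.notebook.azure.share</name><value>zeppelin</value><description>share name for notebook storage</description></property>
    <property><name>zeppelin.notebook.azure.user</name><value>user</value><description>optional user name for Azure folder structure</description></property>
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.AzureNotebookRepo</value><description>notebook persistence layer implementation</description></property>
    -->

    <!-- Notebook storage layer using local file system
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.VFSNotebookRepo</value><description>local notebook persistence layer implementation</description></property>
    -->

    <!-- Notebook storage layer using hadoop compatible file system
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.FileSystemNotebookRepo</value><description>Hadoop compatible file system notebook persistence layer implementation, such as local file system, hdfs, azure wasb, s3 and etc.</description></property>
    <property><name>zeppelin.server.kerberos.keytab</name><value></value><description>keytab for accessing kerberized hdfs</description></property>
    <property><name>zeppelin.server.kerberos.principal</name><value></value><description>principal for accessing kerberized hdfs</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.GitNotebookRepo, org.apache.zeppelin.notebook.repo.zeppelinhub.ZeppelinHubRepo</value><description>two notebook persistence layers (versioned local + ZeppelinHub)</description></property>
    -->

    <!--
    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.MongoNotebookRepo</value><description>notebook persistence layer implementation</description></property>
    <property><name>zeppelin.notebook.mongo.uri</name><value>mongodb://localhost</value><description>MongoDB connection URI used to connect to a MongoDB database server</description></property>
    <property><name>zeppelin.notebook.mongo.database</name><value>zeppelin</value><description>database name for notebook storage</description></property>
    <property><name>zeppelin.notebook.mongo.collection</name><value>notes</value><description>collection name for notebook storage</description></property>
    <property><name>zeppelin.notebook.mongo.autoimport</name><value>false</value><description>import local notes into MongoDB automatically on startup, reset to false after import to avoid repeated import</description></property>
    -->

    <property><name>zeppelin.notebook.storage</name><value>org.apache.zeppelin.notebook.repo.GitNotebookRepo</value><description>versioned notebook persistence layer implementation</description></property>
    <property><name>zeppelin.notebook.one.way.sync</name><value>false</value><description>If there are multiple notebook storages, should we treat the first one as the only source of truth?</description></property>
    <property><name>zeppelin.interpreter.dir</name><value>interpreter</value><description>Interpreter implementation base directory</description></property>
    <property><name>zeppelin.interpreter.localRepo</name><value>local-repo</value><description>Local repository for interpreter's additional dependency loading</description></property>
    <property><name>zeppelin.interpreter.dep.mvnRepo</name><value>https://repo1.maven.org/maven2/</value><description>Remote principal repository for interpreter's additional dependency loading</description></property>
    <property><name>zeppelin.dep.localrepo</name><value>local-repo</value><description>Local repository for dependency loader</description></property>
    <property><name>zeppelin.helium.node.installer.url</name><value>https://nodejs.org/dist/</value><description>Remote Node installer url for Helium dependency loader</description></property>
    <property><name>zeppelin.helium.npm.installer.url</name><value>https://registry.npmjs.org/</value><description>Remote Npm installer url for Helium dependency loader</description></property>
    <property><name>zeppelin.helium.yarnpkg.installer.url</name><value>https://github.com/yarnpkg/yarn/releases/download/</value><description>Remote Yarn package installer url for Helium dependency loader</description></property>

    <!--
    <property><name>zeppelin.helium.registry</name><value>helium,https://s3.amazonaws.com/helium-package/helium.json</value><description>Location of external Helium Registry</description></property>
    -->

    <property><name>zeppelin.interpreter.group.default</name><value>spark</value><description></description></property>
    <property><name>zeppelin.interpreter.connect.timeout</name><value>60000</value><description>Interpreter process connect timeout in msec.</description></property>
    <property><name>zeppelin.interpreter.output.limit</name><value>102400</value><description>Output message from interpreter exceeding the limit will be truncated</description></property>
    <property><name>zeppelin.ssl</name><value>false</value><description>Should SSL be used by the servers?</description></property>
    <property><name>zeppelin.ssl.client.auth</name><value>false</value><description>Should client authentication be used for SSL connections?</description></property>
    <property><name>zeppelin.ssl.keystore.path</name><value>keystore</value><description>Path to keystore relative to Zeppelin configuration directory</description></property>
    <property><name>zeppelin.ssl.keystore.type</name><value>JKS</value><description>The format of the given keystore (e.g. JKS or PKCS12)</description></property>
    <property><name>zeppelin.ssl.keystore.password</name><value>change me</value><description>Keystore password. Can be obfuscated by the Jetty Password tool</description></property>

    <!--
    <property><name>zeppelin.ssl.key.manager.password</name><value>change me</value><description>Key Manager password. Defaults to keystore password. Can be obfuscated.</description></property>
    -->

    <property><name>zeppelin.ssl.truststore.path</name><value>truststore</value><description>Path to truststore relative to Zeppelin configuration directory. Defaults to the keystore path</description></property>
    <property><name>zeppelin.ssl.truststore.type</name><value>JKS</value><description>The format of the given truststore (e.g. JKS or PKCS12). Defaults to the same type as the keystore type</description></property>

    <!--
    <property><name>zeppelin.ssl.truststore.password</name><value>change me</value><description>Truststore password. Can be obfuscated by the Jetty Password tool. Defaults to the keystore password</description></property>
    -->

    <!--
    <property><name>zeppelin.ssl.pem.key</name><value></value><description>This directive points to the PEM-encoded private key file for the server.</description></property>
    -->

    <!--
    <property><name>zeppelin.ssl.pem.key.password</name><value></value><description>Password of the PEM-encoded private key.</description></property>
    -->

    <!--
    <property><name>zeppelin.ssl.pem.cert</name><value></value><description>This directive points to a file with certificate data in PEM format.</description></property>
    -->

    <!--
    <property><name>zeppelin.ssl.pem.ca</name><value></value><description>This directive sets the all-in-one file where you can assemble the Certificates of Certification Authorities (CA) whose clients you deal with. These are used for Client Authentication. Such a file is simply the concatenation of the various PEM-encoded Certificate files.</description></property>
    -->

    <property><name>zeppelin.server.allowed.origins</name><value>*</value><description>Allowed sources for REST and WebSocket requests (i.e. http://onehost:8080,http://otherhost.com). If you leave * you are vulnerable to https://issues.apache.org/jira/browse/ZEPPELIN-173</description></property>
    <property><name>zeppelin.username.force.lowercase</name><value>false</value><description>Force convert username case to lower case, useful for Active Directory/LDAP. Default is not to change case</description></property>
    <property><name>zeppelin.notebook.default.owner.username</name><value></value><description>Set owner role by default</description></property>
    <property><name>zeppelin.notebook.public</name><value>true</value><description>Make notebook public by default when created, private otherwise</description></property>
    <property><name>zeppelin.websocket.max.text.message.size</name><value>10240000</value><description>Size in characters of the maximum text message to be received by websocket. Defaults to 10240000</description></property>
    <property><name>zeppelin.server.default.dir.allowed</name><value>false</value><description>Enable directory listings on server.</description></property>
    <property><name>zeppelin.interpreter.yarn.monitor.interval_secs</name><value>10</value><description>Check interval in secs for yarn apps monitors</description></property>

    <!--
    <property><name>zeppelin.interpreter.lifecyclemanager.class</name><value>org.apache.zeppelin.interpreter.lifecycle.TimeoutLifecycleManager</value><description>LifecycleManager class for managing the lifecycle of interpreters, by default interpreter will be closed after timeout</description></property>
    <property><name>zeppelin.interpreter.lifecyclemanager.timeout.checkinterval</name><value>60000</value><description>Milliseconds of the interval to checking whether interpreter is time out</description></property>
    <property><name>zeppelin.interpreter.lifecyclemanager.timeout.threshold</name><value>3600000</value><description>Milliseconds of the interpreter timeout threshold, by default it is 1 hour</description></property>
    -->

    <property><name>zeppelin.server.jetty.name</name><value> </value><description>Hardcoding Application Server name to Prevent Fingerprinting</description></property>

    <!--
    <property><name>zeppelin.server.send.jetty.name</name><value>false</value><description>If set to false, will not show the Jetty version to prevent Fingerprinting</description></property>
    -->

    <!--
    <property><name>zeppelin.server.jetty.request.header.size</name><value>8192</value><description>Http Request Header Size Limit (to prevent HTTP 413)</description></property>
    -->

    <!--
    <property><name>zeppelin.server.jetty.thread.pool.max</name><value>400</value><description>Max Thread pool number for QueuedThreadPool in Jetty Server</description></property>
    -->

    <!--
    <property><name>zeppelin.server.jetty.thread.pool.min</name><value>8</value><description>Min Thread pool number for QueuedThreadPool in Jetty Server</description></property>
    -->

    <!--
    <property><name>zeppelin.server.jetty.thread.pool.timeout</name><value>30</value><description>Timeout number for QueuedThreadPool in Jetty Server</description></property>
    -->

    <!--
    <property><name>zeppelin.server.authorization.header.clear</name><value>true</value><description>Authorization header to be cleared if server is running as authcBasic</description></property>
    -->

    <property><name>zeppelin.server.xframe.options</name><value>SAMEORIGIN</value><description>The X-Frame-Options HTTP response header can be used to indicate whether or not a browser should be allowed to render a page in a frame/iframe/object.</description></property>

    <!--
    <property><name>zeppelin.server.strict.transport</name><value>max-age=631138519</value><description>The HTTP Strict-Transport-Security response header is a security feature that lets a web site tell browsers that it should only be communicated with using HTTPS, instead of using HTTP. Enable this when Zeppelin is running on HTTPS. Value is in Seconds, the default value is equivalent to 20 years.</description></property>
    -->

    <property><name>zeppelin.server.xxss.protection</name><value>1; mode=block</value><description>The HTTP X-XSS-Protection response header is a feature of Internet Explorer, Chrome and Safari that stops pages from loading when they detect reflected cross-site scripting (XSS) attacks. When value is set to 1 and a cross-site scripting attack is detected, the browser will sanitize the page (remove the unsafe parts).</description></property>
    <property><name>zeppelin.server.xcontent.type.options</name><value>nosniff</value><description>The HTTP X-Content-Type-Options response header helps to prevent MIME type sniffing attacks. It directs the browser to honor the type specified in the Content-Type header, rather than trying to determine the type from the content itself. The default value "nosniff" is really the only meaningful value. This header is supported on all browsers except Safari and Safari on iOS.</description></property>

    <!--
    <property><name>zeppelin.server.html.body.addon</name><value><![CDATA[]]></value><description>Addon html code to be placed at the end of the html->body section in index.html delivered by zeppelin server.</description></property>
    <property><name>zeppelin.server.html.head.addon</name><value></value><description>Addon html code to be placed at the end of the html->head section in index.html delivered by zeppelin server.</description></property>
    -->

    <!--
    <property><name>zeppelin.interpreter.callback.portRange</name><value>10000:10010</value></property>
    -->

    <!--
    <property><name>zeppelin.recovery.storage.class</name><value>org.apache.zeppelin.interpreter.recovery.LocalRecoveryStorage</value><description>RecoveryStorage implementation based on java native local file system</description></property>
    <property><name>zeppelin.recovery.storage.class</name><value>org.apache.zeppelin.interpreter.recovery.FileSystemRecoveryStorage</value><description>RecoveryStorage implementation based on hadoop FileSystem</description></property>
    -->

    <!--
    <property><name>zeppelin.recovery.dir</name><value>recovery</value><description>Location where recovery metadata is stored</description></property>
    -->

    <!-- GitHub configurations
    <property><name>zeppelin.notebook.git.remote.url</name><value></value><description>remote Git repository URL</description></property>
    <property><name>zeppelin.notebook.git.remote.username</name><value>token</value><description>remote Git repository username</description></property>
    <property><name>zeppelin.notebook.git.remote.access-token</name><value></value><description>remote Git repository password</description></property>
    <property><name>zeppelin.notebook.git.remote.origin</name><value>origin</value><description>Git repository remote</description></property>
    <property><name>zeppelin.notebook.cron.enable</name><value>false</value><description>Notebook enable cron scheduler feature</description></property>
    <property><name>zeppelin.notebook.cron.folders</name><value></value><description>Notebook cron folders</description></property>
    -->

    <property><name>zeppelin.run.mode</name><value>auto</value><description>'auto|local|k8s|docker'</description></property>
    <property><name>zeppelin.k8s.portforward</name><value>false</value><description>Port forward to interpreter rpc port. Set 'true' only on local development when zeppelin.k8s.mode 'on'</description></property>
    <property><name>zeppelin.k8s.container.image</name><value>apache/zeppelin:0.9.0-SNAPSHOT</value><description>Docker image for interpreters</description></property>
    <property><name>zeppelin.k8s.spark.container.image</name><value>apache/spark:latest</value><description>Docker image for Spark executors</description></property>
    <property><name>zeppelin.k8s.template.dir</name><value>k8s</value><description>Kubernetes yaml spec files</description></property>
    <property><name>zeppelin.docker.container.image</name><value>apache/zeppelin:0.8.0</value><description>Docker image for interpreters</description></property>
    <property><name>zeppelin.search.index.rebuild</name><value>false</value><description>Whether rebuild index when zeppelin start. If true, it would read all notes and rebuild the index, this would consume lots of memory if you have large amounts of notes, so by default it is false</description></property>
    <property><name>zeppelin.search.use.disk</name><value>true</value><description>Whether using disk for storing search index, if false, memory will be used instead.</description></property>
    <property><name>zeppelin.search.index.path</name><value>/tmp/zeppelin-index</value><description>path for storing search index on disk.</description></property>
    <property><name>zeppelin.jobmanager.enable</name><value>${jobmanagerEnable}</value><description>The Job tab in zeppelin page seems not so useful instead it cost lots of memory and affect the performance. Disable it can save lots of memory</description></property>
    <property><name>zeppelin.spark.only_yarn_cluster</name><value>false</value><description>Whether only allow yarn cluster mode</description></property>
    <property><name>zeppelin.note.file.exclude.fields</name><value></value><description>fields to be excluded from being saved in note files, with Paragraph prefix mean the fields in Paragraph, e.g. Paragraph.results</description></property>

    <#list itemList as item>
    <property><name>${item.name}</name><value>${item.value}</value></property>
    </#list>

    </configuration>

After the configuration is complete, a restart is required; the worker must be restarted as well.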

    /opt/datasophon/DDP/packages/datasophon-manager-1.2.1/bin/datasophon-api.sh start api

Once the restart finishes, Zeppelin can be installed as a service and the installation is complete.

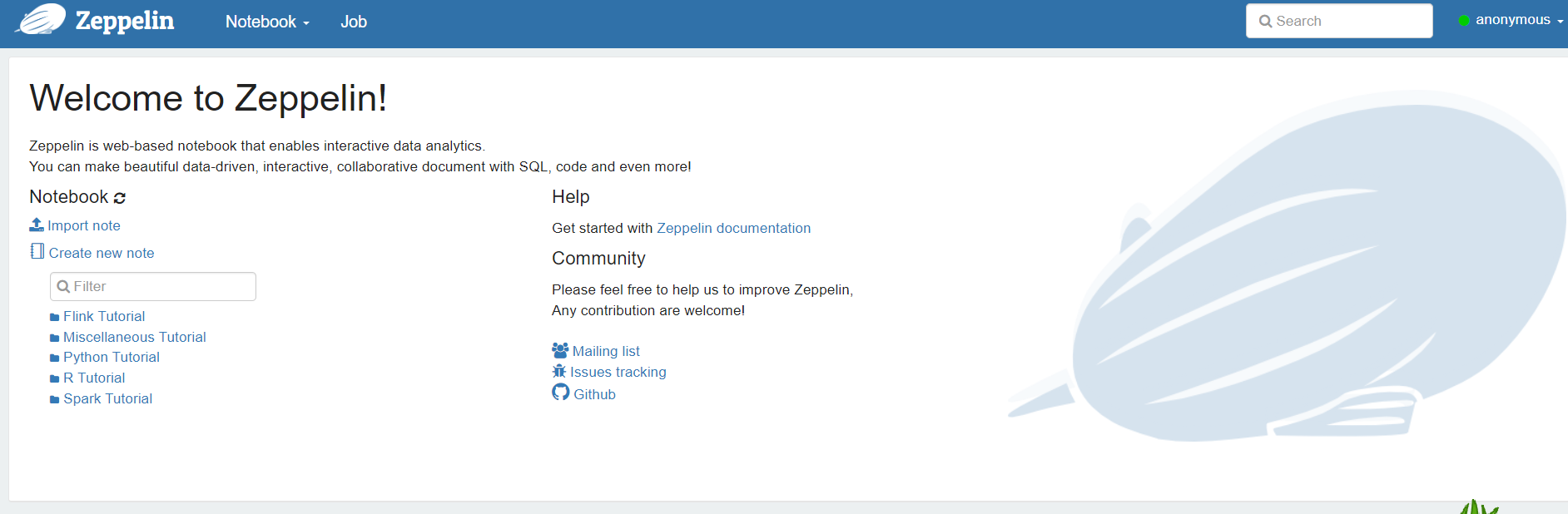

Login page: http://192.168.2.100:8889/
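Before opening the browser, it can help to confirm that the server answers on the configured port. This is a minimal sketch, assuming `curl` is installed and using Zeppelin's REST endpoint `/api/version`; the function names are hypothetical, and the host and port are the ones from this article.

```shell
#!/bin/bash
# Sketch only: check that the Zeppelin server responds over HTTP.
# zeppelin_api_url and check_zeppelin are hypothetical helper names.

# Build the URL of Zeppelin's version endpoint for a host and port.
zeppelin_api_url() {
  printf 'http://%s:%s/api/version\n' "$1" "$2"
}

# Return 0 if the endpoint answers successfully within 5 seconds.
check_zeppelin() {
  local url
  url=$(zeppelin_api_url "$1" "$2")
  if curl -sf --max-time 5 "$url" > /dev/null; then
    echo "Zeppelin is reachable at $url"
  else
    echo "Zeppelin did not respond at $url"
    return 1
  fi
}
```

For the setup in this article the call would be `check_zeppelin 192.168.2.100 8889`.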

3. Configuring common interpreters

3.1 Configuring the Hive interpreter

Prerequisite: HiveServer2 must be running.

Copy hive-site.xml into Zeppelin's conf directory:

    cp /opt/datasophon/hive-3.1.0/conf/hive-site.xml /opt/datasophon/zeppelin-0.10.1/conf/

Copy the following jars into the directory /opt/datasophon/zeppelin-0.10.1/interpreter/jdbc:

- commons-lang-2.6.jar
- curator-client-2.12.0.jar
- guava-19.0.jar
- hadoop-common-3.3.3.jar
- hive-common-3.1.0.jar
- hive-exec-3.1.0.jar
- hive-jdbc-3.1.0.jar
- hive-serde-3.1.0.jar
- hive-service-3.1.0.jar
- hive-service-rpc-3.1.0.jar
- httpclient-4.5.2.jar
- httpcore-4.4.4.jar
- libfb303-0.9.3.jar
- libthrift-0.9.3.jar
- mysql-connector-java-5.1.46-bin.jar
- mysql-connector-java.jar
- protobuf-java-2.5.0.jar
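Copying this many jars by hand is easy to get wrong, so the step can be scripted. The following is a rough sketch, not the author's procedure: `copy_jdbc_jars` is a hypothetical helper that copies each given jar into the destination directory and reports any that are missing. Where the jars live on your machine (for example a Hive or Hadoop lib directory) is an assumption you need to fill in.

```shell
#!/bin/bash
# Sketch only: copy a list of jars into Zeppelin's jdbc interpreter directory.
# copy_jdbc_jars is a hypothetical helper name.

copy_jdbc_jars() {
  local dest="$1"
  shift
  local missing=0
  local jar

  mkdir -p "$dest" || return 1
  for jar in "$@"; do
    if [ -f "$jar" ]; then
      cp "$jar" "$dest/"
    else
      # Report the missing file but keep going, so all gaps show up at once.
      echo "missing: $jar" >&2
      missing=$((missing + 1))
    fi
  done

  # Succeed only if every jar was found and copied.
  [ "$missing" -eq 0 ]
}
```

An invocation could look like `copy_jdbc_jars /opt/datasophon/zeppelin-0.10.1/interpreter/jdbc /opt/datasophon/hive-3.1.0/lib/hive-jdbc-3.1.0.jar`, followed by the remaining jar paths; the `lib` source path here is assumed, not taken from the article.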

After the jars have been copied, restart Zeppelin.

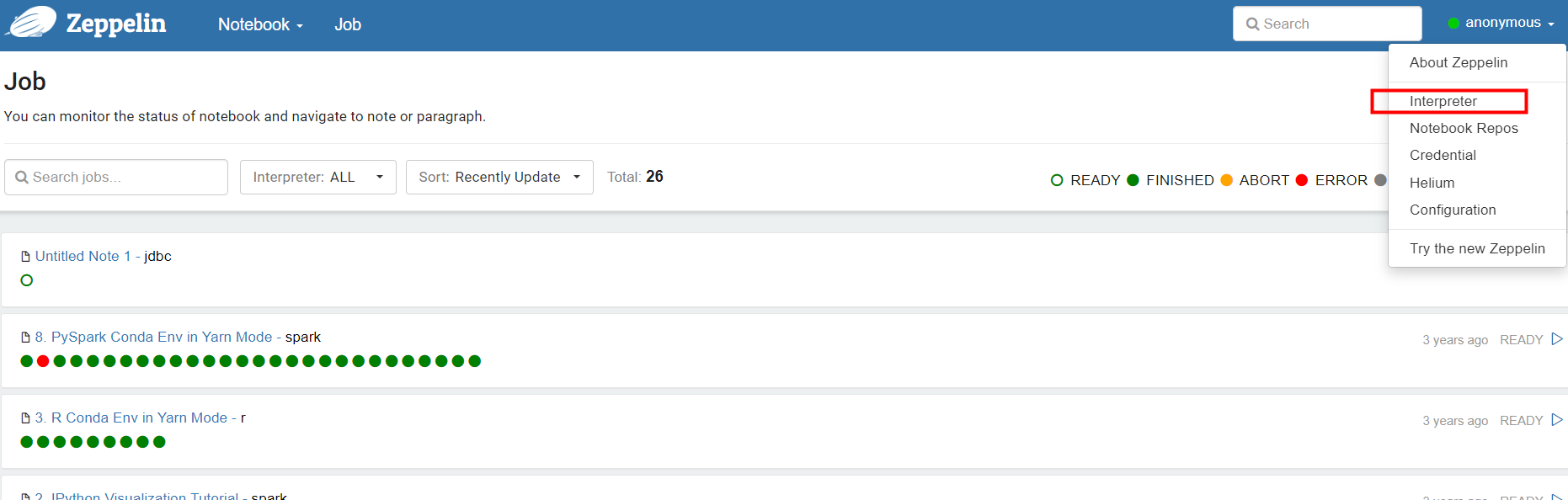

Configure the Hive integration in the web UI.

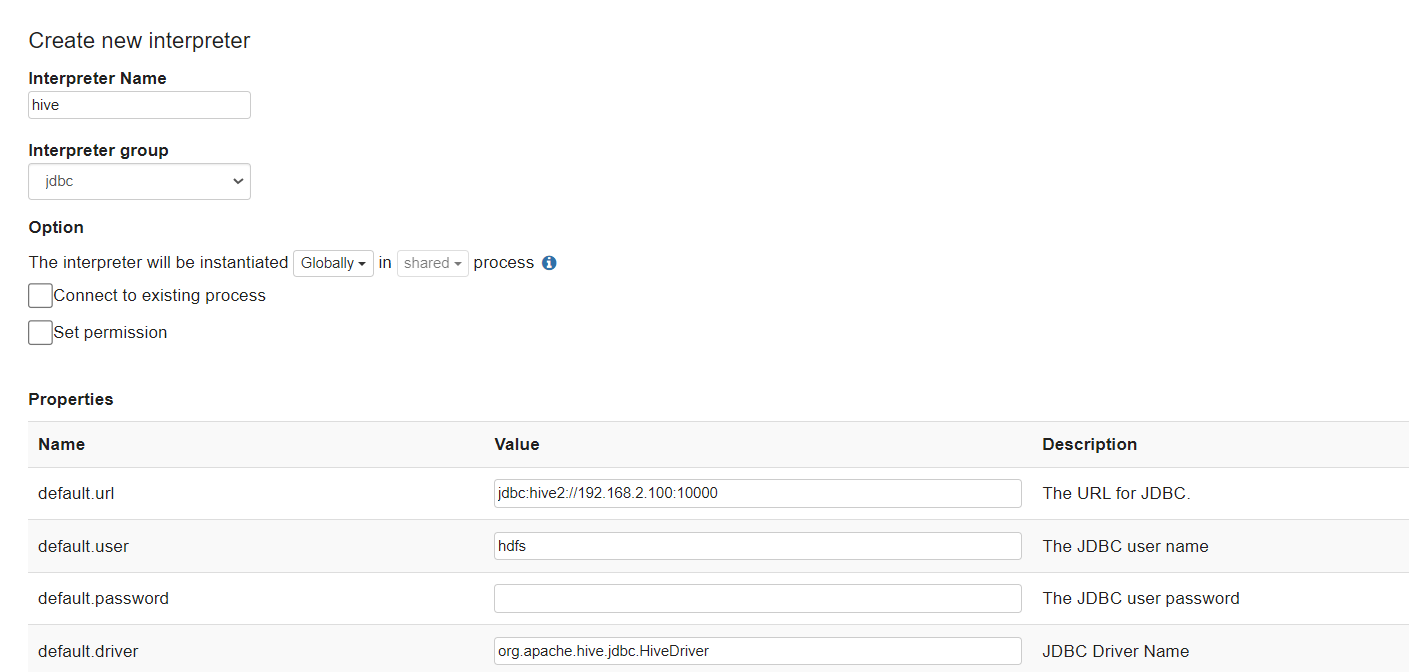

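Create a new interpreter in the jdbc group and name it hive, as shown in the screenshot.

Set the default JDBC URL and user.

My configuration is as follows:

| Property | Value |
| --- | --- |
| default.url | jdbc:hive2://192.168.21.102:10000 |
| default.user | hdfs |
| default.driver | org.apache.hive.jdbc.HiveDriver |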
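If the interpreter later fails to connect, it is worth checking the same URL and user outside Zeppelin first. This is a small sketch, assuming the `beeline` client that ships with Hive is on the PATH; the function names are hypothetical.

```shell
#!/bin/bash
# Sketch only: verify the HiveServer2 endpoint with beeline before using it in Zeppelin.
# hive_jdbc_url and check_hiveserver2 are hypothetical helper names.

# Build a HiveServer2 JDBC URL in the same form as default.url.
hive_jdbc_url() {
  printf 'jdbc:hive2://%s:%s\n' "$1" "$2"
}

# Run a trivial statement as the given user; a non-zero exit means the
# connection or the query failed.
check_hiveserver2() {
  local url
  url=$(hive_jdbc_url "$1" "$2")
  beeline -u "$url" -n "$3" -e 'show databases;'
}
```

With the values above this would be `check_hiveserver2 192.168.21.102 10000 hdfs`.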

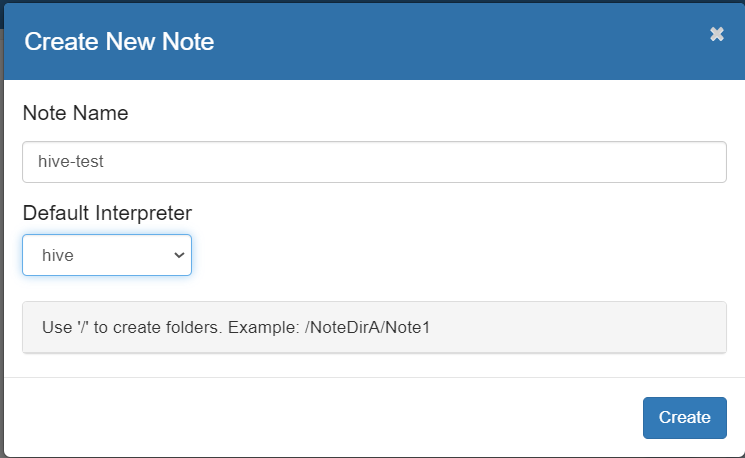

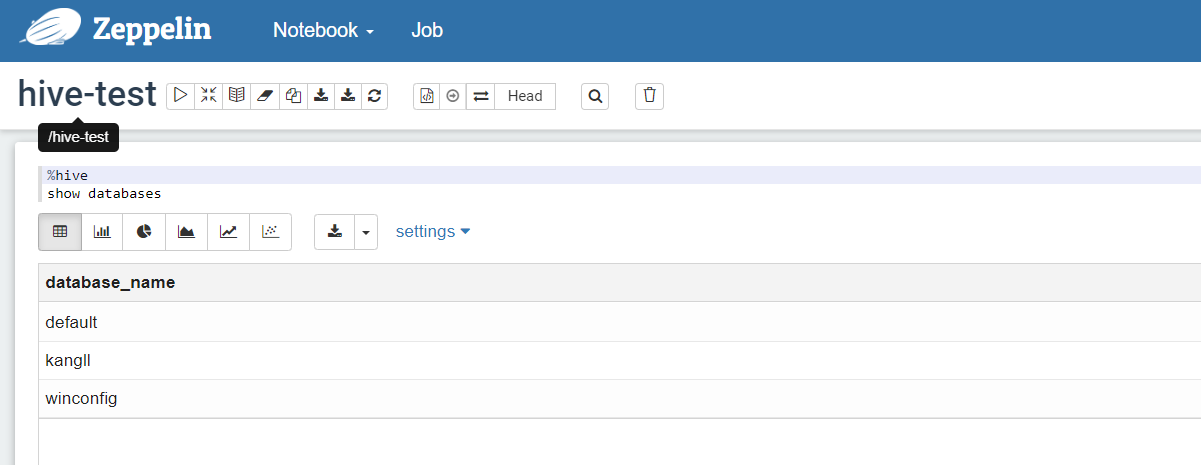

Create a new notebook.

For the interpreter, select hive.

Run a test query.

3.2 Configuring the Trino interpreter

Start the Trino service.

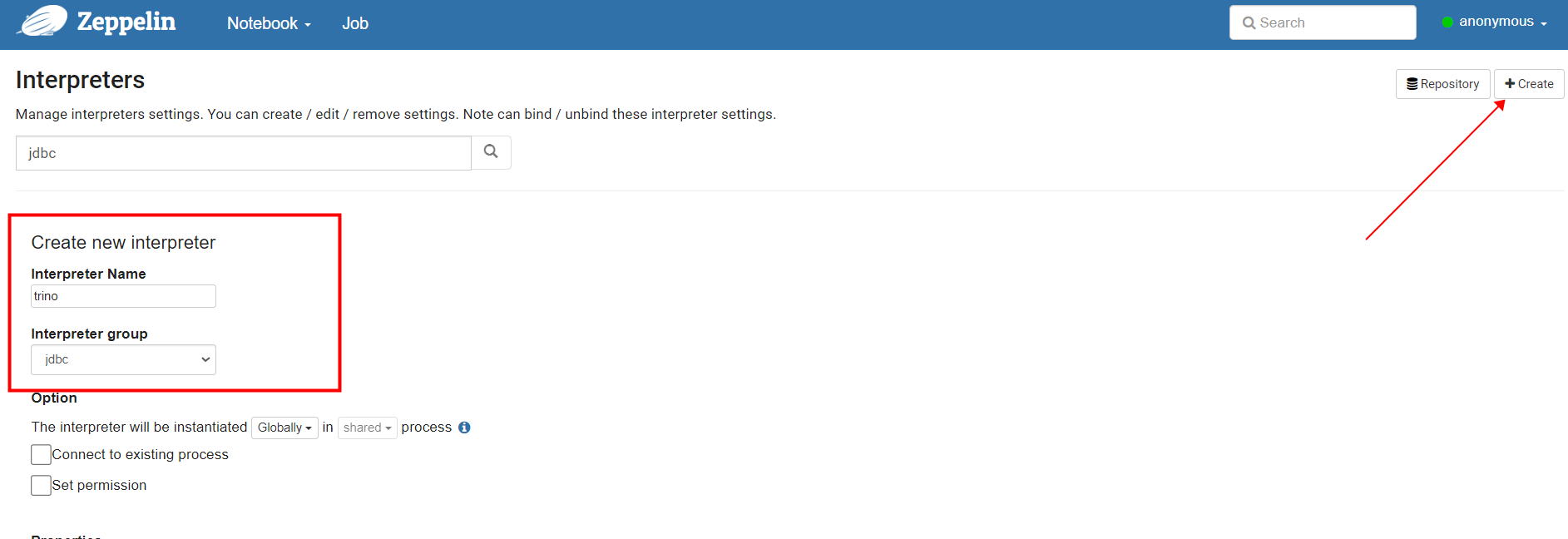

Add a new interpreter.

Name the interpreter trino and set its group to jdbc.

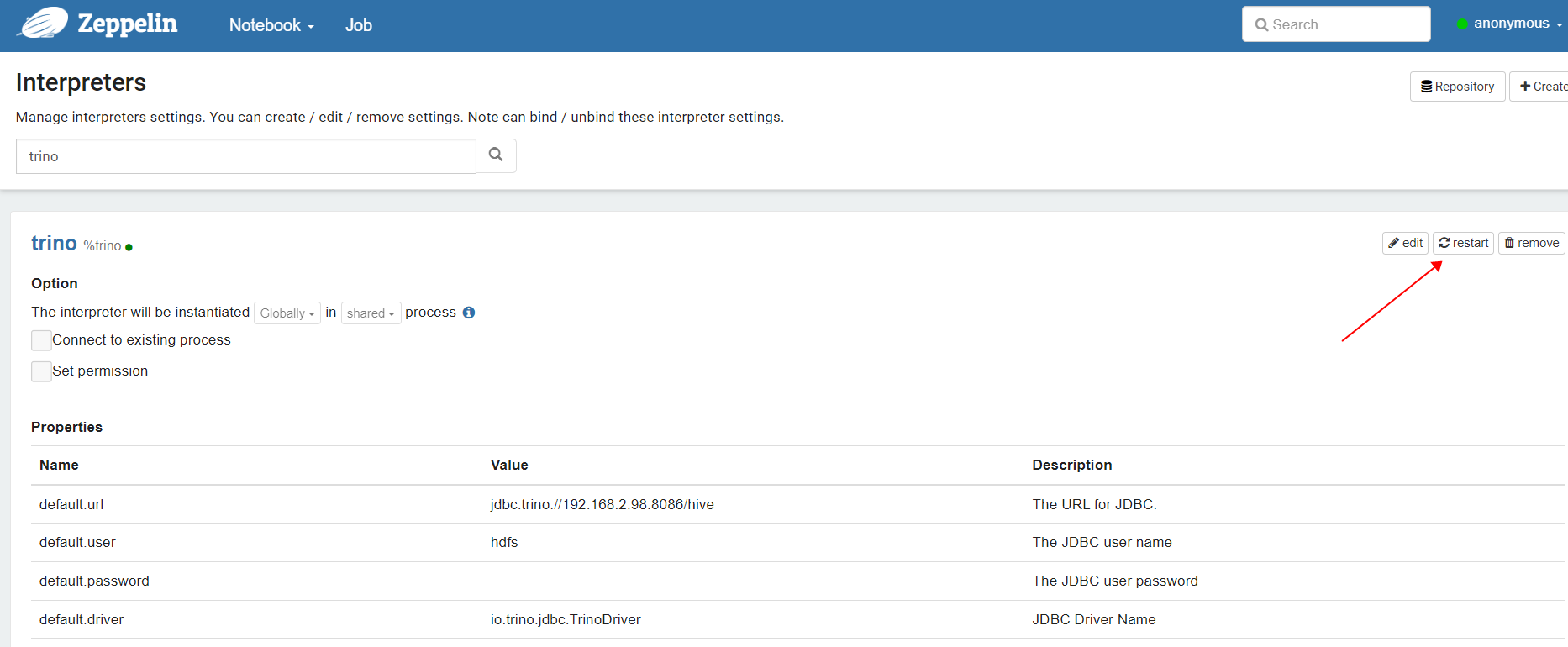

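Set the properties: add the URL and the driver. Any user name will do, because Trino does not enable user authentication by default. The URL has the form jdbc:trino://host:port/catalog.

| Property | Value |
| --- | --- |
| default.url | jdbc:trino://192.168.2.98:8086/hive |
| default.user | hdfs |
| default.driver | io.trino.jdbc.TrinoDriver |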
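As with Hive, a quick check outside Zeppelin helps separate server problems from interpreter problems. This is a minimal sketch, assuming `curl` is available and that the coordinator serves Trino's `/v1/info` REST endpoint over plain HTTP on the same host and port as the JDBC URL; the function names are hypothetical.

```shell
#!/bin/bash
# Sketch only: build the Trino URLs and probe the coordinator.
# trino_jdbc_url, trino_info_url and check_trino are hypothetical helper names.

# JDBC URL in the form used for default.url: jdbc:trino://host:port/catalog
trino_jdbc_url() {
  printf 'jdbc:trino://%s:%s/%s\n' "$1" "$2" "$3"
}

# URL of the coordinator's info endpoint.
trino_info_url() {
  printf 'http://%s:%s/v1/info\n' "$1" "$2"
}

# Print the coordinator's info document; fails if it is unreachable.
check_trino() {
  curl -sf --max-time 5 "$(trino_info_url "$1" "$2")"
}
```

For the configuration above, `check_trino 192.168.2.98 8086` should return a short JSON document if the coordinator is up.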

After saving the configuration, restart the interpreter.

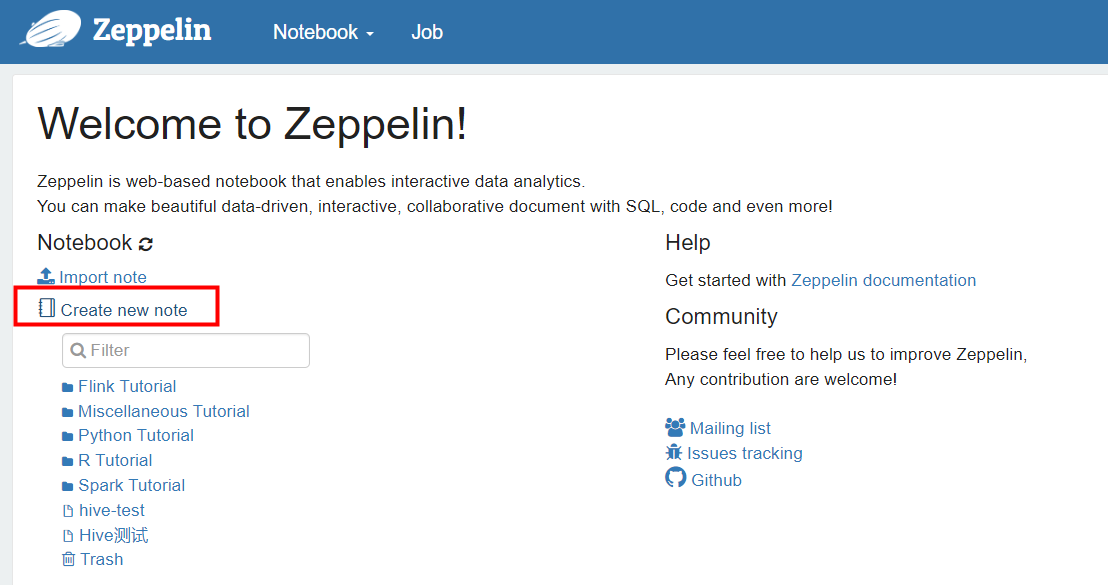

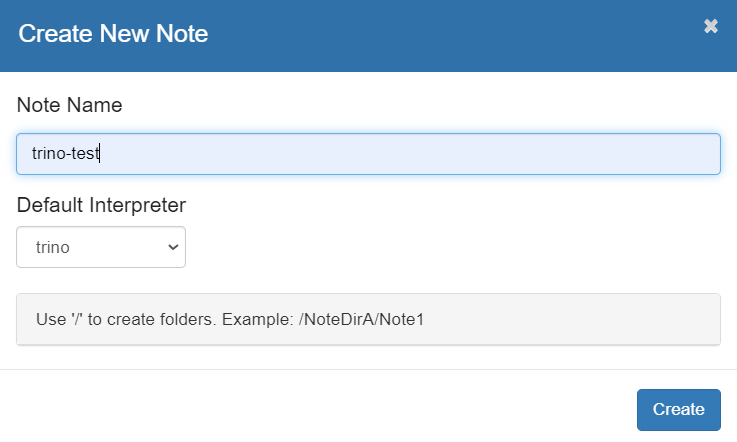

Create a new note.

For the interpreter, select trino.

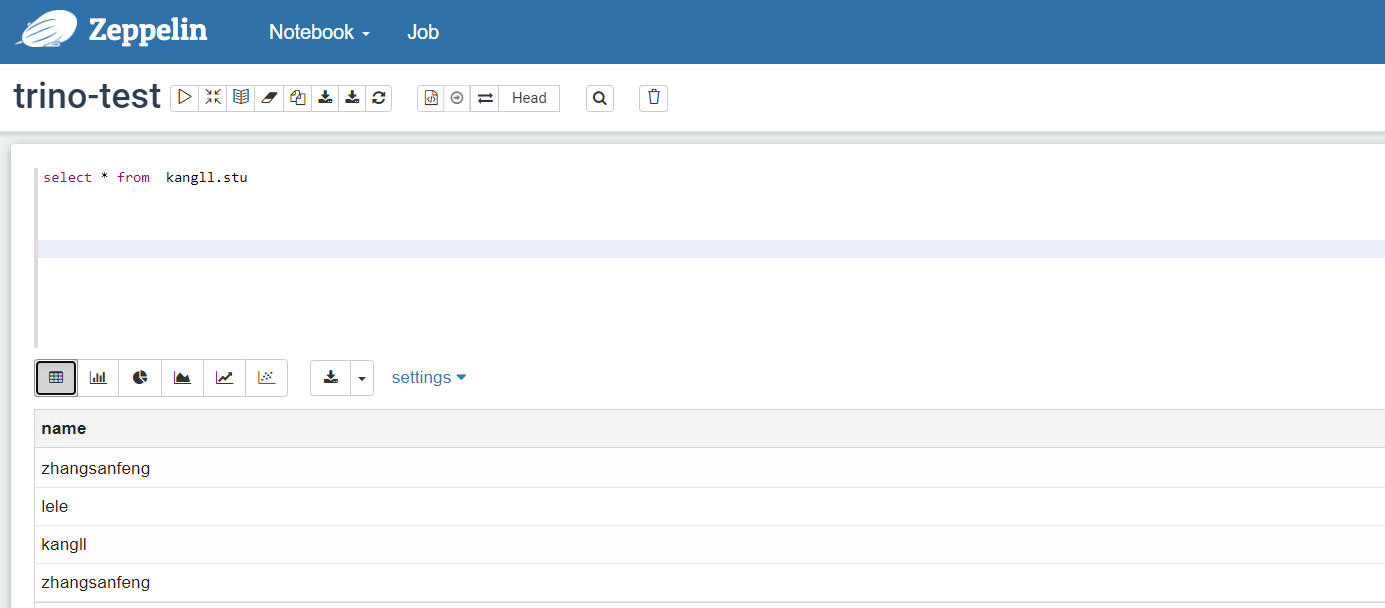

Test it by querying data from a Hive table.

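3.3 Configuring the Spark interpreter

Zeppelin's default spark interpreter group includes the sub-interpreters %spark, %sql, %dep, %pyspark, %ipyspark, and %r. In practice, open the interpreter settings page and adjust the properties to match your Spark cluster. The master defaults to local[*]; its value determines which mode Spark runs in:

- local mode: use local[*]. The value in the brackets is the number of threads, and * means as many threads as the machine has CPU cores.

- standalone mode: use spark://master:7077

- yarn mode: use yarn-client or yarn-cluster

- mesos mode: use mesos://zk://zk1:2181,zk2:2182,zk3:2181/mesos or mesos://host:5050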
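The mapping from a master string to a run mode in the list above can be written down as a small check, which is handy for validating a value before pasting it into the interpreter settings. This is an illustrative sketch only: `spark_master_mode` is a hypothetical helper, and it also accepts the plain value `yarn`, which Spark itself uses as a master URL but which the list above does not mention.

```shell
#!/bin/bash
# Sketch only: classify a spark.master value by run mode.
# spark_master_mode is a hypothetical helper name.

spark_master_mode() {
  case "$1" in
    local|local\[*\])               # local, local[*], local[4], ...
      echo "local" ;;
    spark://*)                      # standalone cluster
      echo "standalone" ;;
    yarn|yarn-client|yarn-cluster)  # "yarn" alone is an addition, see lead-in
      echo "yarn" ;;
    mesos://*)                      # mesos, with or without ZooKeeper
      echo "mesos" ;;
    *)
      echo "unknown"
      return 1 ;;
  esac
}
```

For example, `spark_master_mode 'local[*]'` prints `local`, and an unrecognized value prints `unknown` and returns a non-zero status.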

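Open the spark interpreter for editing.

Click edit and set SPARK_HOME and spark.master. See the official documentation for the meaning of each parameter.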

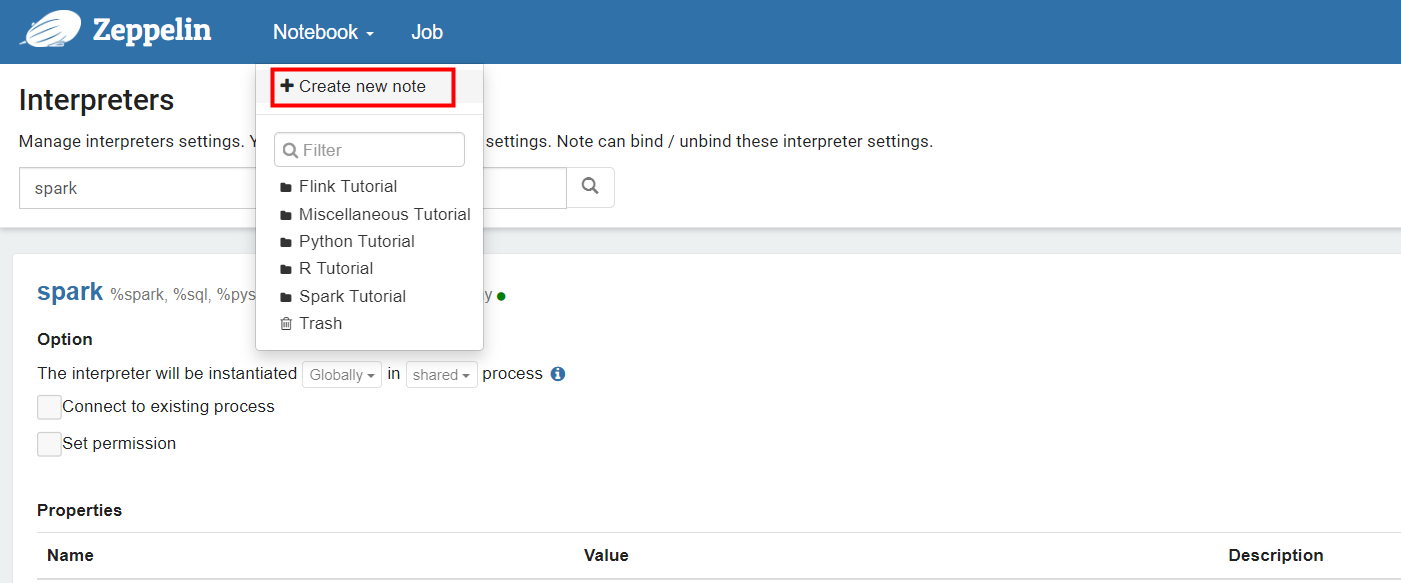

Create a note.

For the interpreter, select "spark".

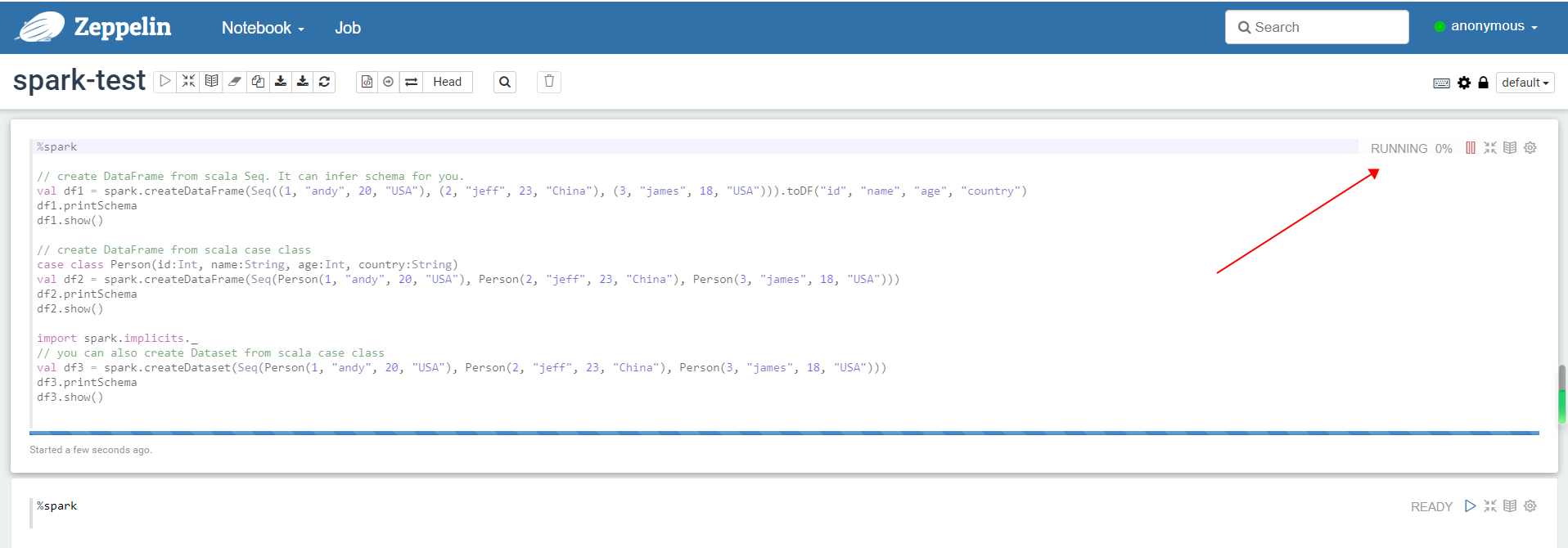

Run the note as a test:

    %spark
    // create DataFrame from scala Seq. It can infer schema for you.
    val df1 = spark.createDataFrame(Seq((1, "andy", 20, "USA"), (2, "jeff", 23, "China"), (3, "james", 18, "USA"))).toDF("id", "name", "age", "country")
    df1.printSchema
    df1.show()

    // create DataFrame from scala case class
    case class Person(id:Int, name:String, age:Int, country:String)
    val df2 = spark.createDataFrame(Seq(Person(1, "andy", 20, "USA"), Person(2, "jeff", 23, "China"), Person(3, "james", 18, "USA")))
    df2.printSchema
    df2.show()

    import spark.implicits._
    // you can also create Dataset from scala case class
    val df3 = spark.createDataset(Seq(Person(1, "andy", 20, "USA"), Person(2, "jeff", 23, "China"), Person(3, "james", 18, "USA")))
    df3.printSchema
    df3.show()

Click Run.

The run output is displayed below the paragraph.
