3D点云--配准算法详解(Registration)_深度学习 点云配准

3D点云配准(Registration)是点云处理的核心任务,旨在将多个点云对齐到统一坐标系。以下是经典算法与深度学习方法全解析,涵盖数学原理、代码实现(PCL/Open3D/PyTorch)及工程优化技巧,通过对比表格帮助选型。

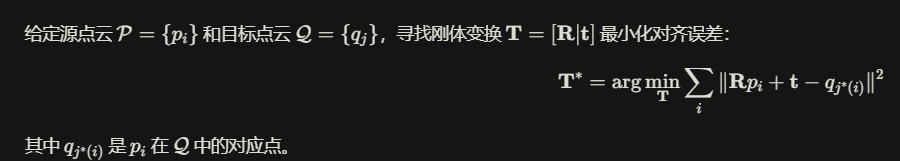

一、配准问题定义

二、经典配准算法

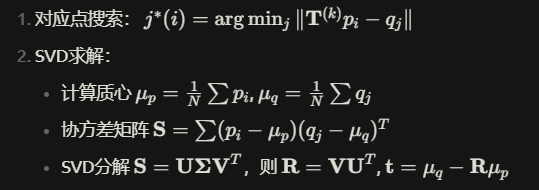

1. ICP (Iterative Closest Point)

原理:交替执行对应点搜索(最近邻)和SVD求解最优变换

数学步骤:

C++ (PCL):

#include pcl::IterativeClosestPoint<pcl::PointXYZ, pcl::PointXYZ> icp;icp.setInputSource(source_cloud);icp.setInputTarget(target_cloud);pcl::PointCloud<pcl::PointXYZ> aligned_cloud;icp.align(aligned_cloud);std::cout << \"Transform:\\n\" << icp.getFinalTransformation() << std::endl;Python (Open3D):

# 使用点对点ICPreg_result = o3d.pipelines.registration.registration_icp( source, target, max_correspondence_distance=0.05, init=initial_transform, estimation_method=o3d.pipelines.registration.TransformationEstimationPointToPoint())print(\"Fitness:\", reg_result.fitness) # 内点比例2. NDT (Normal Distributions Transform)

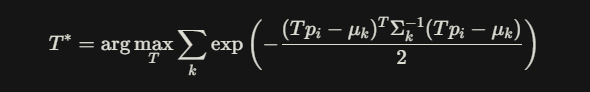

原理:将目标点云划分为网格,用多变量高斯分布描述每个单元格点分布,优化似然函数

数学形式:

Python (Open3D):

reg_result = o3d.pipelines.registration.registration_ndt( source, target, voxel_size=1.0, init=initial_transform, max_iterations=50)三、基于特征的配准

1. FPFH + RANSAC 粗配准

流程:

- 计算FPFH特征

- 使用RANSAC筛选匹配对

- 通过对应点求解变换

Python:

def fpfh_ransac(source, target, voxel_size=0.05): # 降采样 source_down = source.voxel_down_sample(voxel_size) target_down = target.voxel_down_sample(voxel_size) # 计算FPFH特征 source_fpfh = o3d.pipelines.registration.compute_fpfh_feature(source_down, o3d.geometry.KDTreeSearchParamRadius(voxel_size * 5)) target_fpfh = o3d.pipelines.registration.compute_fpfh_feature(target_down, o3d.geometry.KDTreeSearchParamRadius(voxel_size * 5)) # RANSAC配准 result = o3d.pipelines.registration.registration_ransac_based_on_feature_matching( source_down, target_down, source_fpfh, target_fpfh, True, voxel_size * 1.5, o3d.pipelines.registration.TransformationEstimationPointToPoint(False), 3, # RANSAC迭代次数 [0.01, 0.05], # 距离阈值检验对 o3d.pipelines.registration.RANSACConvergenceCriteria(100000, 0.99)) return result.transformation四、深度学习配准方法

1. PointNetLK (全局特征对齐)

架构:PointNet提取全局特征 + Lucas-Kanade优化

PyTorch实现:

class PointNetLK(nn.Module): def __init__(self): super().__init__() self.pointnet = PointNetFeat() # 全局特征提取器 self.fc = nn.Linear(1024, 6) # 6自由度位姿参数 def forward(self, src, tgt): src_feat = self.pointnet(src) tgt_feat = self.pointnet(tgt) # 用L2距离作为损失 residual = tgt_feat - src_feat pose = self.fc(residual) return se3_exp_map(pose) # 将李代数映射到SE(3)2. DCP (Deep Closest Point)

特点:利用Transformer学习匹配权重

关键代码:

# 特征提取src_embed = self.encoder(src)tgt_embed = self.encoder(tgt)# 注意力匹配scores = torch.matmul(src_embed.transpose(1,2), tgt_embed) # [B,N,M]attn = F.softmax(scores, dim=-1)# SVD求解最优变换weighted_tgt = torch.matmul(tgt, attn.transpose(1,2))src_centered = src - src.mean(dim=2, keepdim=True)tgt_centered = weighted_tgt - weighted_tgt.mean(dim=2, keepdim=True)S = torch.matmul(tgt_centered, src_centered.transpose(1,2))U, _, V = torch.svd(S)R = torch.matmul(U, V.transpose(1,2))t = tgt.mean(dim=2) - torch.matmul(R, src.mean(dim=2))五、配准算法对比表

六、工程优化技巧

-

多尺度配准:由粗到精的配准流程

# 第一层:体素降采样配准coarse_result = fpfh_ransac(source, target, voxel_size=0.1)# 第二层:ICP精配准fine_result = icp(source, target, init=coarse_result, max_dist=0.05) -

GPU加速ICP:

# 使用Cupy加速KDTreeimport cupy as cpdef gpu_icp(src, tgt, max_iter=50): src_cp = cp.asarray(src.points) tgt_cp = cp.asarray(tgt.points) # 在GPU上执行最近邻搜索和SVD ... -

异常值剔除:

// PCL中使用SAC-IA时设置距离阈值过滤sac_ia.setMaxCorrespondenceDistance(0.1);sac_ia.setInlierFraction(0.8); // 保留80%最佳匹配

七、前沿研究方向

- 非刚性配准:基于图神经网络处理变形物体

# 示例:使用DiffusionNet预测形变场deform = diffusion_net(src_points, graph_edges)warped_points = src_points + deform - 跨模态配准:LiDAR-相机联合配准(如RegNet)

- 自监督学习:通过渲染一致性损失无监督训练配准网络

完整流程示例:LiDAR里程计

def lidar_odometry(pc_seq): accumulated_cloud = pc_seq[0] trajectory = [np.eye(4)] for i in range(1, len(pc_seq)): # 粗配准(FPFH + RANSAC) init_T = fpfh_ransac(pc_seq[i], accumulated_cloud) # 精配准(多尺度ICP) result = icp_multiscale(pc_seq[i], accumulated_cloud, init_T) # 更新位姿 trajectory.append(trajectory[-1] @ result.transformation) accumulated_cloud += transform_points(pc_seq[i], result.transformation) return trajectory根据具体场景需求(如实时性/精度要求),可灵活组合上述方法。