AudioTrack in the Android Framework Layer

This article is reposted from the CSDN blog post "AudioTrack".

1. Introduction

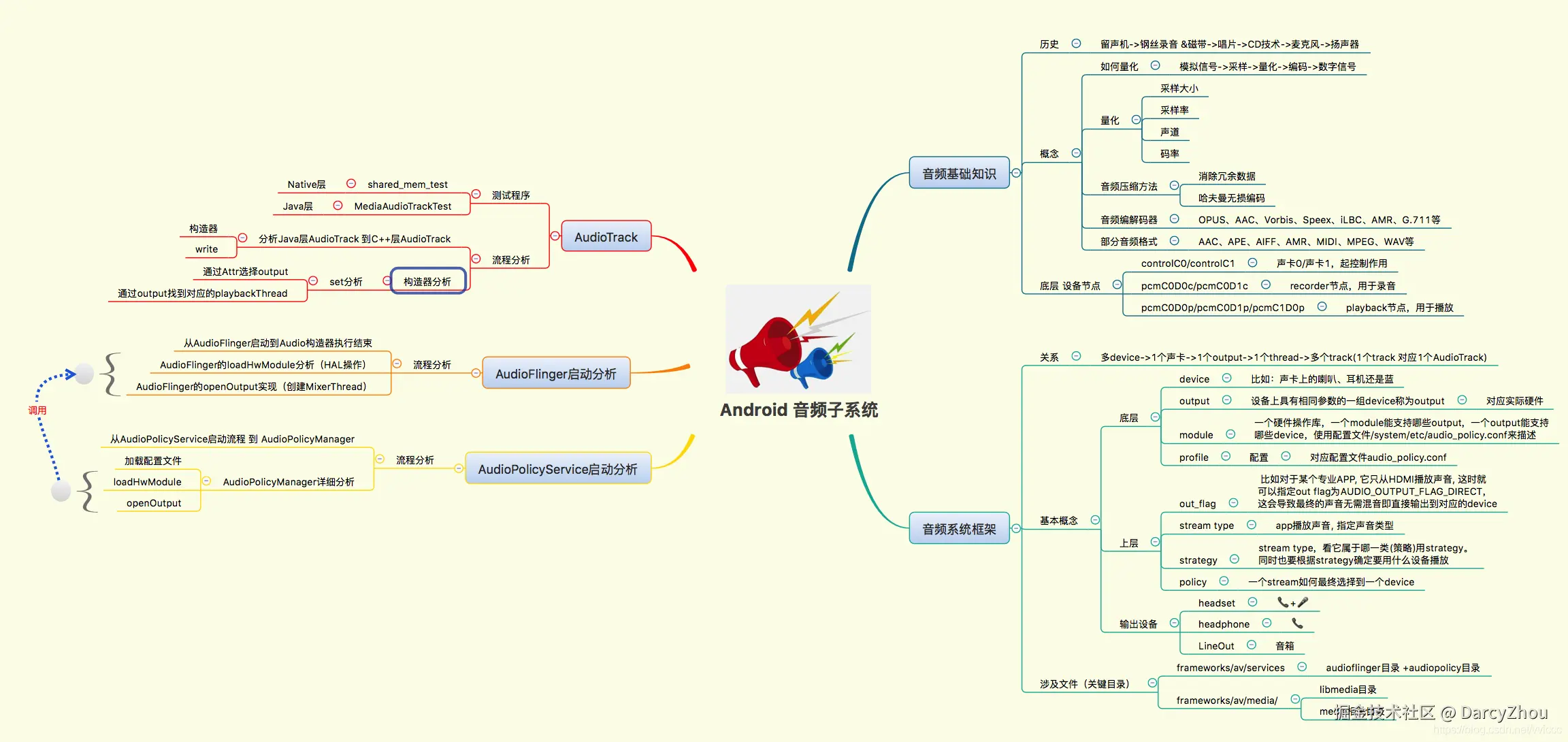

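AudioTrack is the API that the Android audio system exposes to application developers (Java/C++) for driving the audio playback data path. MediaPlayer uses it when playing music, and applications can also use AudioTrack directly to play audio. It is the most basic audio output class.

The AudioTrack.java constructor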

AudioTrack.java

    public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat,
            int bufferSizeInBytes, int mode) throws IllegalArgumentException {
        this(streamType, sampleRateInHz, channelConfig, audioFormat,
                bufferSizeInBytes, mode, AudioManager.AUDIO_SESSION_ID_GENERATE);
    }

The constructor takes the following parameters:

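- streamType: the audio stream type, which describes the playback scenario of the AudioTrack. The most common value is AudioManager.STREAM_MUSIC, the ordinary case of playing multimedia music on a phone;

- sampleRateInHz: the sample rate. A value of 48000 means each second of audio contains 48000 samples per channel; the sample rate affects the sound quality of the audio stream;

- channelConfig: the channel configuration, for example AudioFormat.CHANNEL_CONFIGURATION_STEREO for two channels (stereo);

- audioFormat: the audio encoding format, for example AudioFormat.ENCODING_PCM_16BIT;

- bufferSizeInBytes: the size of the buffer in bytes; its minimum is determined by the characteristics of the audio data. This buffer is the FIFO used to transfer data across processes to AudioFlinger;

- mode: the transfer mode for the audio data, for example AudioTrack.MODE_STREAM;

Basic steps for using AudioTrack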
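To make the sampleRateInHz, channelConfig and audioFormat parameters more concrete, the following is a minimal sketch of how they determine the PCM data rate. The class and method names (`PcmDataRate`, `bytesPerFrame`, and so on) are hypothetical helpers written for illustration; they are not part of the Android SDK.

```java
// Hypothetical helper for illustration only; not an Android API.
final class PcmDataRate {
    // One frame holds one sample for every channel.
    static int bytesPerFrame(int channelCount, int bytesPerSample) {
        return channelCount * bytesPerSample;
    }

    // Bytes of linear PCM produced or consumed per second of audio.
    static long bytesPerSecond(int sampleRateHz, int channelCount, int bytesPerSample) {
        return (long) sampleRateHz * bytesPerFrame(channelCount, bytesPerSample);
    }

    // Bytes needed to hold the given duration, rounded down to whole frames.
    static long bytesForMillis(int sampleRateHz, int channelCount, int bytesPerSample, int millis) {
        long frames = (long) sampleRateHz * millis / 1000;
        return frames * bytesPerFrame(channelCount, bytesPerSample);
    }
}
```

For 48000 Hz, stereo, 16-bit PCM this gives 192000 bytes per second, so a 20 ms chunk is 3840 bytes.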

    // 1. Get the minimum size for the bufferSizeInBytes buffer
    int bufsize = AudioTrack.getMinBufferSize(8000,
            AudioFormat.CHANNEL_CONFIGURATION_STEREO,
            AudioFormat.ENCODING_PCM_16BIT);
    // 2. Create the AudioTrack, using the same 8000 Hz sample rate
    AudioTrack track = new AudioTrack(AudioManager.STREAM_MUSIC, 8000,
            AudioFormat.CHANNEL_CONFIGURATION_STEREO,
            AudioFormat.ENCODING_PCM_16BIT, bufsize, AudioTrack.MODE_STREAM);
    // 3. Start playback
    track.play();
    // ...
    // 4. Call write() to push data into the track
    track.write(buffer, 0, length);
    // ...
    // 5. Stop playback
    track.stop();
    // 6. Release the underlying resources
    track.release();

AudioTrack is used to play back PCM audio streams, and it supports two ways of delivering data:

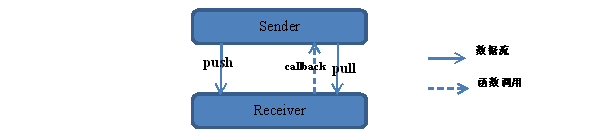

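- Call write(byte[],int,int) or write(short[],int,int) to "push" audio data into the AudioTrack.

- The counterpart is "pull"-style data acquisition, in which the receiver of the data actively requests it.

When creating an AudioTrack, we need to specify the audio stream type and the data playback mode:

Data playback modes

AudioTrack has two data playback modes, MODE_STREAM and MODE_STATIC.

MODE_STREAM: the common mode. Audio data is written to the AudioTrack through repeated write calls, and the AudioTrack plays whatever has been written.

MODE_STATIC: all of the data is passed to the AudioTrack's internal buffer in a single write call before play. The drawback is that if too much data is written at once, the system may be unable to allocate enough memory to hold it. This mode suits sounds such as ringtones, which occupy little memory and have strict latency requirements.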

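Audio stream types

Android divides system sounds into several stream types. Common ones are:

- STREAM_ALARM: alarms;

- STREAM_MUSIC: music;

- STREAM_RING: ringtones;

- STREAM_SYSTEM: system sounds, such as the low-battery alert and the screen-lock sound;

- STREAM_VOICE_CALL: phone call audio;

Note: these categories have nothing to do with the audio data itself. For example, both the MUSIC and RING types could be the same MP3 song. Streams are categorized as part of the Audio system's management policy, so that each kind of sound can be managed separately.

When creating an AudioTrack, we also need to specify the size of the bufferSizeInBytes buffer, which is obtained by calling this function:

    AudioTrack.getMinBufferSize(8000,
            AudioFormat.CHANNEL_CONFIGURATION_STEREO,
            AudioFormat.ENCODING_PCM_16BIT);

Returning to the AudioTrack constructor, the code is as follows: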

    public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat,
            int bufferSizeInBytes, int mode, int sessionId)
            throws IllegalArgumentException {
        this((new AudioAttributes.Builder())
                    .setLegacyStreamType(streamType)
                    .build(),
                (new AudioFormat.Builder())
                    .setChannelMask(channelConfig)
                    .setEncoding(audioFormat)
                    .setSampleRate(sampleRateInHz)
                    .build(),
                bufferSizeInBytes, mode, sessionId);
        deprecateStreamTypeForPlayback(streamType, "AudioTrack", "AudioTrack()");
    }

    public AudioTrack(AudioAttributes attributes, AudioFormat format, int bufferSizeInBytes,
            int mode, int sessionId) throws IllegalArgumentException {
        super(attributes, AudioPlaybackConfiguration.PLAYER_TYPE_JAM_AUDIOTRACK);
        audioParamCheck(rate, channelMask, channelIndexMask, encoding, mode);
        mStreamType = AudioSystem.STREAM_DEFAULT;
        audioBuffSizeCheck(bufferSizeInBytes);
        // Call native_setup in the native layer to perform initialization
        int initResult = native_setup(new WeakReference<AudioTrack>(this), mAttributes,
                sampleRate, mChannelMask, mChannelIndexMask, mAudioFormat,
                mNativeBufferSizeInBytes, mDataLoadMode, session, 0 /*nativeTrackInJavaObj*/);
        if (mDataLoadMode == MODE_STATIC) {
            mState = STATE_NO_STATIC_DATA;
        } else {
            mState = STATE_INITIALIZED;
        }
    }

It is easy to see that the AudioTrack.java constructor does no substantive work itself; it only converts parameters and initializes member variables.

Next, look at the native_setup() function.

android_media_AudioTrack.cpp

    static jint
    android_media_AudioTrack_setup(JNIEnv *env, jobject thiz, jobject weak_this, jobject jaa,
            jintArray jSampleRate, jint channelPositionMask, jint channelIndexMask,
            jint audioFormat, jint buffSizeInBytes, jint memoryMode, jintArray jSession,
            jlong nativeAudioTrack) {
        // Declare the native-layer AudioTrack object. It acts as the track's proxy
        // and is used for cross-process communication.
        sp<AudioTrack> lpTrack = 0;
        AudioTrackJniStorage* lpJniStorage = NULL;
        if (nativeAudioTrack == 0) {
            int* sampleRates = env->GetIntArrayElements(jSampleRate, NULL);
            int sampleRateInHertz = sampleRates[0];
            env->ReleaseIntArrayElements(jSampleRate, sampleRates, JNI_ABORT);

            audio_channel_mask_t nativeChannelMask = nativeChannelMaskFromJavaChannelMasks(
                    channelPositionMask, channelIndexMask);
            uint32_t channelCount = audio_channel_count_from_out_mask(nativeChannelMask);
            audio_format_t format = audioFormatToNative(audioFormat);

            size_t frameCount;
            if (audio_is_linear_pcm(format)) {
                const size_t bytesPerSample = audio_bytes_per_sample(format);
                frameCount = buffSizeInBytes / (channelCount * bytesPerSample);
            } else {
                frameCount = buffSizeInBytes;
            }

            lpTrack = new AudioTrack();
            lpJniStorage = new AudioTrackJniStorage();
            lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);
            // we use a weak reference so the AudioTrack object can be garbage collected.
            lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);
            lpJniStorage->mCallbackData.busy = false;

            status_t status = NO_ERROR;
            switch (memoryMode) {
            case MODE_STREAM:
                status = lpTrack->set(
                        AUDIO_STREAM_DEFAULT,   // stream type
                        sampleRateInHertz,
                        format,                 // sample precision, i.e. the encoding; usually PCM16 or PCM8
                        nativeChannelMask,
                        frameCount,
                        AUDIO_OUTPUT_FLAG_NONE,
                        audioCallback, &(lpJniStorage->mCallbackData), // callback, callback data (user)
                        0,
                        0,   // shared memory: null in STREAM mode; the actual shared memory is created by AudioFlinger
                        true,
                        sessionId,
                        AudioTrack::TRANSFER_SYNC,
                        NULL, -1, -1, paa);
                break;
            case MODE_STATIC:
                // In STATIC mode the shared memory must be created first
                if (!lpJniStorage->allocSharedMem(buffSizeInBytes)) {
                    ALOGE("Error creating AudioTrack in static mode: error creating mem heap base");
                    goto native_init_failure;
                }
                status = lpTrack->set(
                        AUDIO_STREAM_DEFAULT,
                        sampleRateInHertz,
                        format,
                        nativeChannelMask,
                        frameCount,
                        AUDIO_OUTPUT_FLAG_NONE,
                        audioCallback, &(lpJniStorage->mCallbackData),
                        0,
                        lpJniStorage->mMemBase,   // STATIC mode: the shared memory must be passed in
                        true,
                        sessionId,
                        AudioTrack::TRANSFER_SHARED,
                        NULL, -1, -1, paa);
                break;
            default:
                goto native_init_failure;
            }
        }
        // Save the pointer to the AudioTrack object created in the JNI layer into a field
        // of the Java object, which links the JNI-layer AudioTrack to the Java-layer AudioTrack.
        setAudioTrack(env, thiz, lpTrack);
        // The lpJniStorage pointer is saved into the Java object as well.
        env->SetLongField(thiz, javaAudioTrackFields.jniData, (jlong)lpJniStorage);
        return (jint) AUDIO_JAVA_SUCCESS;
    }

The main job of android_media_AudioTrack_setup is to create the native-layer AudioTrack object, initialize it, and bind it to the Java-layer AudioTrack object. The function lives up to its name: setup establishes everything that is needed to use the AudioTrack.

The AudioTrack object is set up differently depending on the data loading mode. In MODE_STATIC, the shared memory is created on the AudioTrack (client) side; in MODE_STREAM, the shared memory is created on the AudioFlinger side.
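The frameCount calculation in android_media_AudioTrack_setup can be restated as a small sketch. It is written in Java only so that it runs on its own; `FrameCountSketch` and its boolean `linearPcm` parameter are illustrative stand-ins for the native `audio_is_linear_pcm(format)` check, not real APIs.

```java
// Illustrative restatement of the JNI frameCount logic; not framework code.
final class FrameCountSketch {
    // For linear PCM, a frame is channelCount * bytesPerSample bytes, so the buffer
    // size in bytes is divided by the frame size. For other (compressed) formats the
    // JNI code shown above uses the byte count directly as the frame count.
    static long frameCount(int buffSizeInBytes, int channelCount, int bytesPerSample,
            boolean linearPcm) {
        if (linearPcm) {
            return buffSizeInBytes / (channelCount * bytesPerSample);
        }
        return buffSizeInBytes;
    }
}
```

For example, a 3200-byte buffer of 16-bit stereo PCM holds 3200 / (2 * 2) = 800 frames.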

play(): AudioTrack.java

    public void play() throws IllegalStateException {
        if (mState != STATE_INITIALIZED) {
            throw new IllegalStateException("play() called on uninitialized AudioTrack.");
        }
        final int delay = getStartDelayMs();
        if (delay == 0) {
            startImpl();
        } else {
            new Thread() {
                public void run() {
                    try {
                        Thread.sleep(delay);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    baseSetStartDelayMs(0);
                    try {
                        startImpl();
                    } catch (IllegalStateException e) {
                    }
                }
            }.start();
        }
    }

    private void startImpl() {
        synchronized(mPlayStateLock) {
            baseStart();
            native_start();
            mPlayState = PLAYSTATE_PLAYING;
        }
    }

native_start(): android_media_AudioTrack.cpp

    static void android_media_AudioTrack_start(JNIEnv *env, jobject thiz)
    {
        // Get the native-layer AudioTrack pointer stored in the Java AudioTrack object
        sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
        lpTrack->start();
    }

write(): AudioTrack.java

    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes) {
        return write(audioData, offsetInBytes, sizeInBytes, WRITE_BLOCKING);
    }

    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes,
            @WriteMode int writeMode) {
        // Call the native method native_write_byte
        int ret = native_write_byte(audioData, offsetInBytes, sizeInBytes, mAudioFormat,
                writeMode == WRITE_BLOCKING);
        // In MODE_STATIC the state becomes initialized only after a write,
        // which differs from MODE_STREAM
        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }
        return ret;
    }

native_write_byte: android_media_AudioTrack.cpp

    template <typename T>
    static jint android_media_AudioTrack_writeArray(JNIEnv *env, jobject thiz,
            T javaAudioData, jint offsetInSamples, jint sizeInSamples,
            jint javaAudioFormat, jboolean isWriteBlocking) {
        sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
        // ...
        // Call writeToTrack()
        jint samplesWritten = writeToTrack(lpTrack, javaAudioFormat, cAudioData,
                offsetInSamples, sizeInSamples, isWriteBlocking == JNI_TRUE /* blocking */);
        envReleaseArrayElements(env, javaAudioData, cAudioData, 0);
        return samplesWritten;
    }

    template <typename T>
    static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
            jint offsetInSamples, jint sizeInSamples, bool blocking) {
        ssize_t written = 0;
        size_t sizeInBytes = sizeInSamples * sizeof(T);
        // track->sharedBuffer() == 0 means the data loading mode is STREAM;
        // a non-zero value means STATIC
        if (track->sharedBuffer() == 0) {
            // STREAM mode: call write() to write the data
            written = track->write(data + offsetInSamples, sizeInBytes, blocking);
            if (written == (ssize_t) WOULD_BLOCK) {
                written = 0;
            }
        } else {
            if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
                sizeInBytes = track->sharedBuffer()->size();
            }
            // STATIC mode: memcpy the data straight into shared memory;
            // in this mode write is called first and play afterwards
            memcpy(track->sharedBuffer()->pointer(), data + offsetInSamples, sizeInBytes);
            written = sizeInBytes;
        }
        if (written >= 0) {
            return written / sizeof(T);
        }
        return interpretWriteSizeError(written);
    }

stop(): AudioTrack.java

    public void stop() throws IllegalStateException {
        if (mState != STATE_INITIALIZED) {
            throw new IllegalStateException("stop() called on uninitialized AudioTrack.");
        }
        // stop playing
        synchronized(mPlayStateLock) {
            // Call the native method
            native_stop();
            baseStop();
            mPlayState = PLAYSTATE_STOPPED;
            mAvSyncHeader = null;
            mAvSyncBytesRemaining = 0;
        }
    }

android_media_AudioTrack.cpp

    static void android_media_AudioTrack_stop(JNIEnv *env, jobject thiz)
    {
        sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
        // Call the native-layer stop method
        lpTrack->stop();
    }

release(): AudioTrack.java

    public void release() {
        try {
            // Call stop()
            stop();
        } catch(IllegalStateException ise) {
        }
        baseRelease();
        // Call the native method
        native_release();
        // Set the state to uninitialized. To use AudioTrack again, a new AudioTrack
        // must be created before the state returns to initialized.
        mState = STATE_UNINITIALIZED;
    }

android_media_AudioTrack.cpp

    static void android_media_AudioTrack_release(JNIEnv *env, jobject thiz) {
        // Release resources
        sp<AudioTrack> lpTrack = setAudioTrack(env, thiz, 0);
        // delete the JNI data
        AudioTrackJniStorage* pJniStorage = (AudioTrackJniStorage *)env->GetLongField(
                thiz, javaAudioTrackFields.jniData);
        // The pointer previously saved in the Java object must now be reset to 0
        env->SetLongField(thiz, javaAudioTrackFields.jniData, 0);
    }

The Java-layer AudioTrack simply hands its work to the native-layer AudioTrack through a middleman, android_media_AudioTrack (JNI).

Pay attention to the state of the Java-layer AudioTrack. In MODE_STREAM, mState is set to STATE_INITIALIZED when the AudioTrack is constructed. play and stop can be called only once mState is STATE_INITIALIZED, and after release mState becomes STATE_UNINITIALIZED, so the AudioTrack must be created again before it can be reused. In MODE_STATIC, mState is set to STATE_INITIALIZED only after write() has been called, so in MODE_STATIC you must write first and play afterwards.
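The mState rules described above can be modelled in a few lines. This is a simplified sketch, not the framework code: `TrackStateModel`, its enums, and the `-1` error value are assumptions made for illustration, and the real class tracks considerably more state.

```java
// Simplified, hypothetical model of the Java-layer mState transitions.
final class TrackStateModel {
    enum Mode { STREAM, STATIC }
    enum State { UNINITIALIZED, NO_STATIC_DATA, INITIALIZED }

    private final Mode mode;
    private State state;

    TrackStateModel(Mode mode) {
        this.mode = mode;
        // Mirrors the constructor: STATIC tracks start without data.
        this.state = (mode == Mode.STATIC) ? State.NO_STATIC_DATA : State.INITIALIZED;
    }

    boolean canPlay() {
        return state == State.INITIALIZED;
    }

    int write(int sizeInBytes) {
        if (state == State.UNINITIALIZED) {
            return -1; // hypothetical error value for a released track
        }
        // The first successful write completes initialization of a STATIC track.
        if (mode == Mode.STATIC && state == State.NO_STATIC_DATA && sizeInBytes > 0) {
            state = State.INITIALIZED;
        }
        return sizeInBytes;
    }

    void play() {
        if (!canPlay()) {
            throw new IllegalStateException("play() called on uninitialized track");
        }
    }

    void release() {
        state = State.UNINITIALIZED;
    }
}
```

In this model a STATIC track rejects play() until write() has succeeded once, while a STREAM track can play immediately; after release() neither can play.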

AudioTrack.cpp

When an AudioTrack is created with new in the Java layer, the corresponding native-layer AudioTrack object is created and its set method is called to initialize it. The native-layer AudioTrack lives in the frameworks/av/media/libaudioclient directory. Let's analyze it.

The no-argument constructor of AudioTrack:

AudioTrack.cpp

    AudioTrack::AudioTrack()
        : mStatus(NO_INIT),   // the initial status is NO_INIT
          mState(STATE_STOPPED),
          mPreviousPriority(ANDROID_PRIORITY_NORMAL),
          mPreviousSchedulingGroup(SP_DEFAULT),
          mPausedPosition(0),
          mSelectedDeviceId(AUDIO_PORT_HANDLE_NONE),
          mRoutedDeviceId(AUDIO_PORT_HANDLE_NONE),
          mPortId(AUDIO_PORT_HANDLE_NONE)
    {
        mAttributes.content_type = AUDIO_CONTENT_TYPE_UNKNOWN;
        mAttributes.usage = AUDIO_USAGE_UNKNOWN;
        mAttributes.flags = 0x0;
        strcpy(mAttributes.tags, "");
    }

The AudioTrack initialization method set():

    status_t AudioTrack::set(
            audio_stream_type_t streamType,
            uint32_t sampleRate,
            audio_format_t format,
            audio_channel_mask_t channelMask,
            size_t frameCount,
            audio_output_flags_t flags,
            callback_t cbf,
            void* user,
            int32_t notificationFrames,
            const sp<IMemory>& sharedBuffer,
            bool threadCanCallJava,
            audio_session_t sessionId,
            transfer_type transferType,
            const audio_offload_info_t *offloadInfo,
            uid_t uid,
            pid_t pid,
            const audio_attributes_t* pAttributes,
            bool doNotReconnect,
            float maxRequiredSpeed)
    {
        // ...
        // cbf is the callback function audioCallback passed in from the JNI layer;
        // if a callback is set, start a thread
        if (cbf != NULL) {
            mAudioTrackThread = new AudioTrackThread(*this, threadCanCallJava);
            mAudioTrackThread->run("AudioTrack", ANDROID_PRIORITY_AUDIO, 0 /*stack*/);
        }
        // ...
        // The key call
        status_t status = createTrack_l();
        // ...
        return NO_ERROR;
    }

The core call is status_t status = createTrack_l();

    status_t AudioTrack::createTrack_l()
    {
        // Get BpAudioFlinger, the Binder proxy for AudioFlinger
        const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();
        // Send a createTrack request to AudioFlinger. It returns an IAudioTrack object, and all
        // later interaction between AudioFlinger and AudioTrack revolves around that IAudioTrack.
        sp<IAudioTrack> track = audioFlinger->createTrack(streamType, mSampleRate, mFormat,
                mChannelMask, &temp, &flags, mSharedBuffer, output, mClientPid, tid,
                &mSessionId, mClientUid, &status, mPortId);
        // In STREAM mode no shared memory was created on the AudioTrack side;
        // the shared memory is ultimately created by AudioFlinger.
        // Fetch the shared memory created by AudioFlinger
        sp<IMemory> iMem = track->getCblk();
        // IMemory's pointer() returns the start address of the shared memory, as a void *
        void *iMemPointer = iMem->pointer();
        // static_cast converts the void * directly to audio_track_cblk_t *, which shows that
        // an audio_track_cblk_t object sits at the head of this memory
        audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);
        // ...
    }

The core call in createTrack_l() is audioFlinger->createTrack(), which returns an IAudioTrack object. The IAudioTrack carries a block of shared memory whose header is an audio_track_cblk_t object, followed by the cross-process shared buffer (the FIFO). The audio_track_cblk_t object is declared in AudioTrackShared.h and defined in AudioTrack.cpp; it coordinates and manages the pace of data transfer between AudioTrack and AudioFlinger. AudioTrack queries audio_track_cblk_t for the free space it can write into, and AudioFlinger queries audio_track_cblk_t for the amount of data available to read. Together, AudioTrack (the producer) and AudioFlinger (the consumer) form a producer-consumer model.
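The producer-consumer relationship around the control block can be sketched with a small ring buffer. This is a single-process model written under stated assumptions: `SharedFifoSketch` and its `front`/`rear` counters are illustrative names, and ordinary Java synchronization stands in for the cross-process coordination that audio_track_cblk_t provides.

```java
// Hypothetical single-process model of a FIFO guarded by read/write positions.
final class SharedFifoSketch {
    private final byte[] data;
    private long rear;   // total bytes ever written by the producer (AudioTrack side)
    private long front;  // total bytes ever read by the consumer (AudioFlinger side)

    SharedFifoSketch(int capacity) {
        data = new byte[capacity];
    }

    // Producer's question: how much free space can be written into?
    synchronized int availableToWrite() {
        return data.length - (int) (rear - front);
    }

    // Consumer's question: how much data is ready to be read?
    synchronized int availableToRead() {
        return (int) (rear - front);
    }

    // Copies at most 'length' bytes in, limited by the free space; returns bytes written.
    synchronized int write(byte[] src, int offset, int length) {
        int n = Math.min(length, availableToWrite());
        for (int i = 0; i < n; i++) {
            data[(int) ((rear + i) % data.length)] = src[offset + i];
        }
        rear += n; // publish the new write position
        return n;
    }

    // Copies at most 'length' bytes out, limited by the queued data; returns bytes read.
    synchronized int read(byte[] dst, int offset, int length) {
        int n = Math.min(length, availableToRead());
        for (int i = 0; i < n; i++) {
            dst[offset + i] = data[(int) ((front + i) % data.length)];
        }
        front += n; // publish the new read position
        return n;
    }
}
```

The writer never overwrites unread data and the reader never reads past what was written, because each side only consults the two positions before touching the buffer.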

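mAudioTrackThread = new AudioTrackThread(*this, threadCanCallJava) serves the pull-style data transfer.

This thread is tied to how audio data is transferred. AudioTrack supports two transfer styles:

Push: the user actively calls write to write data, which amounts to pushing data into the AudioTrack;

Pull: when the JNI-layer code constructs the AudioTrack, it passes in a callback function, audioCallback. In the pull style, AudioTrackThread calls this callback to actively pull data from the user.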
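The difference between the two styles can be illustrated with a toy sink. Everything here is a hypothetical sketch: `PushPullSketch` and `DataCallback` are invented names, and the pull loop runs on the calling thread, whereas the real AudioTrackThread is a separate thread.

```java
// Toy illustration of push versus pull delivery; not the framework implementation.
final class PushPullSketch {
    // Hypothetical callback: fill 'buffer' and return the number of bytes provided,
    // or 0 when there is no more data.
    interface DataCallback {
        int onMoreData(byte[] buffer);
    }

    private final java.io.ByteArrayOutputStream sink = new java.io.ByteArrayOutputStream();

    // Push: the application decides when to hand data over.
    int write(byte[] data, int offset, int length) {
        sink.write(data, offset, length);
        return length;
    }

    // Pull: the track side repeatedly asks the callback for the next chunk.
    int pullAll(DataCallback callback, int chunkSize) {
        byte[] chunk = new byte[chunkSize];
        int total = 0;
        int n;
        while ((n = callback.onMoreData(chunk)) > 0) {
            sink.write(chunk, 0, n);
            total += n;
        }
        return total;
    }

    // Everything delivered so far, in order.
    byte[] played() {
        return sink.toByteArray();
    }
}
```

With push, the caller drives the timing through write; with pull, the consumer drives it by invoking the callback whenever it wants more data.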

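write()

    ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
    {
        size_t written = 0;
        // Buffer abstracts a readable/writable region of a given size within the shared FIFO
        Buffer audioBuffer;
        while (userSize >= mFrameSize) {
            // Work in units of frames
            audioBuffer.frameCount = userSize / mFrameSize;
            // Obtain a free region of the FIFO
            status_t err = obtainBuffer(&audioBuffer,
                    blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
            // The free region has size audioBuffer.size and starts at audioBuffer.i8;
            // the data is transferred with memcpy
            size_t toWrite = audioBuffer.size;
            memcpy(audioBuffer.i8, buffer, toWrite);
            buffer = ((const char *) buffer) + toWrite;
            userSize -= toWrite;
            written += toWrite;
            // Release the buffer obtained from the FIFO and update the FIFO's read/write pointers
            releaseBuffer(&audioBuffer);
        }
        if (written > 0) {
            // Useful when diagnosing audio/video sync problems: the total amount of data
            // AudioTrack has written to AudioFlinger so far
            mFramesWritten += written / mFrameSize;
        }
        return written;
    }

The write function is essentially a memcpy, but the synchronization between the reading process (AudioFlinger) and the writing process (AudioTrack) is handled by obtainBuffer and releaseBuffer. obtainBuffer gets a writable free region from the data buffer managed by cblk and blocks if none is available; releaseBuffer updates the write pointer once that region has been filled.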
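The frame-granular loop in write() can be sketched as follows. This is a non-blocking simplification under stated assumptions: `WriteLoopSketch` uses a linear buffer that is never drained, and the "obtain" and "release" steps are plain arithmetic rather than the real obtainBuffer and releaseBuffer calls.

```java
// Hypothetical, non-blocking model of the write loop; whole frames only.
final class WriteLoopSketch {
    private final byte[] fifo;    // stand-in for the shared buffer
    private final int frameSize;  // bytes per frame (channels * bytes per sample)
    private int used;             // bytes currently queued

    WriteLoopSketch(int capacityBytes, int frameSize) {
        this.fifo = new byte[capacityBytes];
        this.frameSize = frameSize;
    }

    // Copies as many whole frames as fit; returns the number of bytes written.
    int write(byte[] src) {
        int offset = 0;
        int remaining = src.length;
        int written = 0;
        while (remaining >= frameSize) {
            int wantedFrames = remaining / frameSize;
            // "Obtain": the FIFO may grant fewer frames than requested.
            int grantedFrames = Math.min(wantedFrames, (fifo.length - used) / frameSize);
            if (grantedFrames == 0) {
                break; // no space left; a blocking write would wait here instead
            }
            int toWrite = grantedFrames * frameSize;
            System.arraycopy(src, offset, fifo, used, toWrite);
            used += toWrite; // "release": publish the newly written region
            offset += toWrite;
            remaining -= toWrite;
            written += toWrite;
        }
        return written;
    }

    int framesQueued() {
        return used / frameSize;
    }
}
```

Note that trailing bytes smaller than one frame are left unwritten, which matches the `while (userSize >= mFrameSize)` condition in the listing above.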

stop()

    void AudioTrack::stop()
    {
        // mAudioTrack is of type IAudioTrack; tell the track on the AudioFlinger side to stop.
        mAudioTrack->stop();
        sp<AudioTrackThread> t = mAudioTrackThread;
        if (t != 0) {
            if (!isOffloaded_l()) {
                // Pause the AudioTrackThread
                t->pause();
            }
        } else {
            setpriority(PRIO_PROCESS, 0, mPreviousPriority);
            set_sched_policy(0, mPreviousSchedulingGroup);
        }
    }

This calls stop on the IAudioTrack and pauses the callback thread.

start()

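    status_t AudioTrack::start()
    {
        // ...
        status = mAudioTrack->start();
        // ...
    }

Starting playback is likewise communicated to the AudioFlinger side through the IAudioTrack.

Summary:

- The Java-layer AudioTrack is only the Java-language API (a shell company, so to speak); every call we make on the Java-layer AudioTrack API is passed down to the native-layer AudioTrack for execution;

- When the native-layer AudioTrack is initialized, it makes a cross-process call to AudioFlinger's createTrack method, which returns an IAudioTrack object; the native-layer AudioTrack and AudioFlinger interact through this object;

- Once the IAudioTrack object is obtained, the native-layer AudioTrack's start(), stop() and similar methods are ultimately passed to AudioFlinger for execution;

- The native-layer AudioTrack writes data into memory, and AudioFlinger shares that same memory with AudioTrack, which completes the data transfer. Key point to remember: cblk encapsulates the FIFO operations, and that is the data path. The control path goes directly through IAudioTrack.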