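Container Orchestration with K8s

K8s overview

Advantages of container deployment: easy to deploy, independent of the underlying environment, and upgrades are done by switching to a new image.

- K8s is essentially a container orchestration tool, developed in Go.

Master (control-plane) node: kube-apiserver (the API entry point that handles all requests), kube-scheduler (the scheduler), kube-controller-manager (runs the controllers), and etcd (the distributed key-value database that stores cluster data).

Worker node: kubelet (the node agent that makes sure containers are running in Pods) and kube-proxy (the network proxy that provides a single access point, a Service, for a group of Pods).

In Kubernetes, the core component that manages the container lifecycle on each node is the kubelet.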

Installing and deploying K8s

1. Install from source packages

2. Deploy the cluster with kubeadm

Reference: Creating a cluster with kubeadm | Kubernetes
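Before you run `kubeadm init` below, check the CIDRs. The first walkthrough passes `--service-cidr=172.10.0.0/12`, which is not aligned to a /12 boundary. The network it actually denotes is 172.0.0.0/12, which is why the apiserver certificate in its log lists 172.0.0.1, the first address of the Service CIDR. That range also falls outside the RFC 1918 private block 172.16.0.0/12.

Below is a minimal POSIX-shell sketch of such a check. `cidr_network` and `is_rfc1918` are hypothetical helper names, not part of kubeadm.

```sh
# Hypothetical pre-flight helpers (not part of kubeadm), using POSIX sh arithmetic.

# Print the network address of an IPv4 CIDR: 172.10.0.0/12 -> 172.0.0.0/12.
cidr_network() {
    cn_ip=${1%/*}
    cn_len=${1#*/}
    cn_old_ifs=$IFS
    IFS=.
    set -- $cn_ip          # split the address into its four octets
    IFS=$cn_old_ifs
    cn_addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    cn_mask=$(( (4294967295 << (32 - cn_len)) & 4294967295 ))
    cn_net=$(( cn_addr & cn_mask ))
    echo "$(( (cn_net >> 24) & 255 )).$(( (cn_net >> 16) & 255 )).$(( (cn_net >> 8) & 255 )).$(( cn_net & 255 ))/$cn_len"
}

# Succeed if the CIDR lies inside one of the RFC 1918 private ranges.
is_rfc1918() {
    ir_ip=${1%/*}
    ir_len=${1#*/}
    for ir_range in 10.0.0.0/8 172.16.0.0/12 192.168.0.0/16; do
        ir_rlen=${ir_range#*/}
        if [ "$ir_len" -ge "$ir_rlen" ] &&
           [ "$(cidr_network "$ir_ip/$ir_rlen")" = "$ir_range" ]; then
            return 0
        fi
    done
    return 1
}
```

For example, `cidr_network 172.10.0.0/12` prints `172.0.0.0/12`. The second walkthrough's `10.96.0.0/12` and `10.244.0.0/16` come back unchanged and pass `is_rfc1918`.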

CentOS 7.9. Reference: https://kubernetes.io/zh-cn/docs/reference/setup-tools/kubeadm/kubeadm-init/

Configure the base environment on all hosts (the master is used as the example here):

```bash
[root@node1 ~]# cat <<EOF
> overlay
> br_netfilter
> EOF
[root@master ~]#
[root@master ~]# sudo modprobe overlay
[root@master ~]# sudo modprobe br_netfilter
## Set the required sysctl parameters; they persist across reboots
[root@master ~]# cat <<EOF
> net.bridge.bridge-nf-call-iptables = 1
> net.bridge.bridge-nf-call-ip6tables = 1
> net.ipv4.ip_forward = 1
> EOF
## Apply the sysctl parameters without rebooting
[root@master ~]# sudo sysctl --system
[root@master ~]# lsmod | grep br_netfilter
br_netfilter           22256  0
bridge                155432  1 br_netfilter
[root@master ~]# lsmod | grep overlay
overlay                91659  0
## Check the OS version
[root@master ~]# cat /etc/redhat-release
CentOS Linux release 7.9.2009 (Core)
## Add the container repository (Aliyun mirror for CentOS)
[root@master ~]# yum install -y yum-utils
[root@master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@master ~]# ls /etc/yum.repos.d/
CentOS-Base.repo  docker-ce.repo  epel.repo
## Install the container runtime
[root@master ~]# yum install containerd.io -y
[root@master ~]# containerd config default > /etc/containerd/config.toml
[root@master ~]# vim /etc/containerd/config.toml
# Comment out the sandbox image line and point it at the Aliyun mirror
#    sandbox_image = "registry.k8s.io/pause:3.6"
    sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"
# Find this line and set it to true
            SystemdCgroup = true
[root@master ~]# systemctl enable containerd --now
## The service must be shown as started successfully
[root@master ~]# systemctl status containerd
[root@master ~]# cat <<EOF
> [kubernetes]
> name=Kubernetes
> baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/
> enabled=1
> gpgcheck=1
> gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/repodata/repomd.xml.key
> EOF
## Check the base environment (swap off, no swap entry in fstab, port 6443 free, SELinux disabled)
[root@master ~]# free -h
              total        used        free      shared  buff/cache   available
Mem:           7.4G        237M        6.0G        492K        1.1G        6.9G
Swap:            0B          0B          0B
[root@master ~]# cat /etc/fstab

#
# /etc/fstab
# Created by anaconda on Fri Jun 28 04:16:23 2024
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
UUID=c8b5b2da-5565-4dc1-b002-2a8b07573e22 /                       ext4    defaults        1 1
[root@master ~]# netstat -tunlp | grep 6443
[root@master ~]# getenforce
Disabled
## Install kubeadm
[root@master ~]# yum install -y kubelet kubeadm kubectl
[root@node1 ~]# systemctl enable kubelet --now
```

Run on the master only:

```bash
## Initialize the cluster (--apiserver-advertise-address is the master's IP address)
[root@master ~]# kubeadm init \
> --apiserver-advertise-address=192.168.88.1 \
> --image-repository registry.aliyuncs.com/google_containers \
> --service-cidr=172.10.0.0/12 \
> --pod-network-cidr=10.10.0.0/16 \
> --ignore-preflight-errors=all
I0722 15:36:54.413254   12545 version.go:256] remote version is much newer: v1.33.3; falling back to: stable-1.28
[init] Using Kubernetes version: v1.28.15
[preflight] Running pre-flight checks
        [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
W0722 15:37:11.464233   12545 checks.go:835] detected that the sandbox image "registry.aliyuncs.com/google_containers/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.aliyuncs.com/google_containers/pause:3.9" as the CRI sandbox image.
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [172.0.0.1 192.168.88.1]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.88.1 127.0.0.1 ::1]
... "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
...
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.88.1:6443 --token 91kaxu.trpl8qwjaumnc910 \
        --discovery-token-ca-cert-hash sha256:fdd6b2c0f3e0ec81b3d792c34d925b3c688147d7a87b0993de050460f19adec5
[root@master ~]# mkdir -p $HOME/.kube
[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

On each worker node, join the cluster through the master:

```bash
[root@node1 ~]# kubeadm join 192.168.88.1:6443 --token 91kaxu.trpl8qwjaumnc910 \
> --discovery-token-ca-cert-hash sha256:fdd6b2c0f3e0ec81b3d792c34d925b3c688147d7a87b0993de050460f19adec5
[preflight] Running pre-flight checks
        [WARNING Hostname]: hostname "node1" could not be reached
        [WARNING Hostname]: hostname "node1": lookup node1 on 100.100.2.138:53: no such host
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node1 ~]#
```

Check the status on the master:

```bash
[root@master ~]# kubectl get node
NAME     STATUS     ROLES           AGE   VERSION
master   NotReady   control-plane   96s   v1.28.15
node1    NotReady   <none>          22s   v1.28.15
node2    NotReady   <none>          7s    v1.28.15
## Upload the network plugin manifest
[root@master ~]# ls
20250621calico.yaml
## Move the network plugin manifest to its own directory
[root@master ~]# mkdir k8s/calico -p
[root@master ~]# cd k8s/calico/
[root@master calico]# mv /root/20250621calico.yaml .
[root@master calico]# ls
20250621calico.yaml
[root@master calico]# kubectl create -f 20250621calico.yaml
## Check the node status
[root@master ~]# kubectl get node
NAME     STATUS   ROLES           AGE     VERSION
master   Ready    control-plane   5m46s   v1.28.15
node1    Ready    <none>          4m32s   v1.28.15
node2    Ready    <none>          4m17s   v1.28.15
## List the Pods in the system namespace
[root@master calico]# watch kubectl get pod -n kube-system
# ... the output looks like this:
Every 2.0s: kubectl get pod -n kube-system                Tue Jul 22 22:18:26 2025

NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-6fcd5cd66f-gcv2q   1/1     Running   0          6h36m
calico-node-bbqnz                          1/1     Running   0          6h36m
calico-node-ls7gm                          1/1     Running   0          6h36m
calico-node-n6fz5                          1/1     Running   0          6h36m
coredns-66f779496c-jnc4h                   1/1     Running   0          6h40m
coredns-66f779496c-x79tt                   1/1     Running   0          6h40m
etcd-master                                1/1     Running   0          6h40m
kube-apiserver-master                      1/1     Running   0          6h40m
kube-controller-manager-master             1/1     Running   0          6h40m
kube-proxy-6jpfs                           1/1     Running   0          6h39m
kube-proxy-6mxx6                           1/1     Running   0          6h40m
kube-proxy-cn26w                           1/1     Running   0          6h39m
kube-scheduler-master                      1/1     Running   0          6h40m
## Show detailed information, useful for troubleshooting
[root@master ~]# kubectl describe pod kube-proxy-vfdmh -n kube-system
[root@master ~]# # Once all nodes are Ready, the cluster installation is complete!
[root@master ~]#
[root@master ~]# # End of notes:
```
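The `cat <<EOF` heredocs in the base-environment steps only survive a reboot if their output is written to a file, and config.toml is edited by hand in vim. Below is a minimal non-interactive sketch under these assumptions:

- The file locations `/etc/modules-load.d/k8s.conf` and `/etc/sysctl.d/k8s.conf` are the ones used in the official Kubernetes container-runtime prerequisites guide.
- GNU `sed` is available, as on CentOS 7.9.
- The function names are hypothetical.

Because the `kubeadm init` warning above recommends pause:3.9 for this version, the pause image is a parameter.

```sh
# Hypothetical helper: write the kernel-module and sysctl files under root dir $1
# ("" for the real system). Paths follow the official container-runtime prerequisites guide.
write_k8s_prereqs() {
    wp_root=$1
    mkdir -p "$wp_root/etc/modules-load.d" "$wp_root/etc/sysctl.d" || return 1
    # Loaded at boot; `modprobe overlay` and `modprobe br_netfilter` load them right away
    cat > "$wp_root/etc/modules-load.d/k8s.conf" <<'EOF'
overlay
br_netfilter
EOF
    # Persistent sysctl settings; `sysctl --system` applies them without a reboot
    cat > "$wp_root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF
}

# Apply the two config.toml edits non-interactively (GNU sed -i):
# point sandbox_image at the given pause image and enable SystemdCgroup.
patch_containerd_config() {
    pc_file=$1
    pc_pause=${2:-registry.aliyuncs.com/google_containers/pause:3.6}
    sed -i \
        -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"$pc_pause\"#" \
        -e 's#^\([[:space:]]*SystemdCgroup = \)false#\1true#' \
        "$pc_file"
}
```

On a real node this would run as `write_k8s_prereqs "" && modprobe overlay && modprobe br_netfilter && sysctl --system`, followed by `patch_containerd_config /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.9 && systemctl restart containerd`. Unlike the vim edit, the sed rewrites the sandbox_image line in place instead of keeping the old value as a comment.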

```bash
## Initialize the cluster (run on the master node only)
[root@master containerd]# kubeadm init \
--apiserver-advertise-address=10.38.102.71 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.26.3 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16 \
--ignore-preflight-errors=all
## Note: replace --apiserver-advertise-address=10.38.102.71 with your own master's address
## This line means initialization succeeded:
Your Kubernetes control-plane has initialized successfully!
[root@master containerd]# kubectl get nodes
E0702 02:26:32.034057    8125 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp [::1]:8080: connect: connection refused
## Export the environment variable as the init output instructs
[root@master containerd]# export KUBECONFIG=/etc/kubernetes/admin.conf
## Make it persistent so it is set at every login
[root@master containerd]# vim /etc/profile
[root@master containerd]# tail -1 /etc/profile
export KUBECONFIG=/etc/kubernetes/admin.conf
## Check the nodes
[root@master containerd]# kubectl get nodes
NAME     STATUS     ROLES           AGE     VERSION
master   NotReady   control-plane   2m35s   v1.26.3
## After a successful init, the kubelet service is started by default
[root@master containerd]# systemctl status kubelet.service
● kubelet.service - kubelet: The Kubernetes Node Agent
   Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
  Drop-In: /usr/lib/systemd/system/kubelet.service.d
           └─10-kubeadm.conf
   Active: active (running) since Wed 2025-07-02 02:24:44 EDT; 4min 19s ago
     Docs: https://kubernetes.io/docs/
## List the Pods in the system namespace (kube-system)
[root@master containerd]# kubectl get pod -n kube-system
NAME                             READY   STATUS    RESTARTS   AGE
coredns-5bbd96d687-hpnxw         0/1     Pending   0          5m24s
coredns-5bbd96d687-rfq5n         0/1     Pending   0          5m24s
etcd-master                      1/1     Running   0          5m38s
kube-apiserver-master            1/1     Running   0          5m38s
kube-controller-manager-master   1/1     Running   0          5m38s
kube-proxy-dhsn5                 1/1     Running   0          5m24s
kube-scheduler-master            1/1     Running   0          5m38s
## Install a network plugin (calico or flannel)
[root@master containerd]# wget http://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/k8s/download/calico.yaml
# A successful download looks like this:
...
HTTP request sent, awaiting response... 200 OK
Length: 239997 (234K) [text/yaml]
Saving to: ‘calico.yaml’

100%[==========================>] 239,997     --.-K/s   in 0.06s

2025-07-02 02:36:42 (4.03 MB/s) - ‘calico.yaml’ saved [239997/239997]

[root@master containerd]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# A successful download looks like this:
...
HTTP request sent, awaiting response... 200 OK
Length: 4415 (4.3K) [text/plain]
Saving to: ‘kube-flannel.yml’

100%[==========================>] 4,415        235B/s   in 19s

2025-07-02 02:38:53 (235 B/s) - ‘kube-flannel.yml’ saved [4415/4415]

[root@master containerd]# ls
calico.yaml  config.toml  config.toml.bak  kube-flannel.yml
[root@master containerd]# mv *.yml /root
[root@master containerd]# mv *.yaml /root
[root@master containerd]# ll
total 12
-rw-r--r--. 1 root root 7074 Jul  2 02:18 config.toml
-rw-r--r--. 1 root root  886 Jun  5  2024 config.toml.bak
## Apply the manifests (a cluster normally runs only one CNI plugin, Calico or flannel, not both)
[root@master ~]# kubectl apply -f calico.yaml
[root@master ~]# kubectl apply -f kube-flannel.yml
## List the Pods in the system namespace
[root@master ~]# kubectl get pod -n kube-system
NAME                                       READY   STATUS              RESTARTS   AGE
calico-kube-controllers-6bd6b69df9-gpt7z   0/1     ContainerCreating   0          80s
calico-node-5cnrq                          0/1     Init:2/3            0          81s
calico-typha-77fc8866f5-v764n              0/1     Pending             0          80s
coredns-5bbd96d687-hpnxw                   0/1     ContainerCreating   0          17m
coredns-5bbd96d687-rfq5n                   0/1     ContainerCreating   0          17m
etcd-master                                1/1     Running             0          17m
kube-apiserver-master                      1/1     Running             0          17m
kube-controller-manager-master             1/1     Running             0          17m
kube-proxy-dhsn5                           1/1     Running             0          17m
kube-scheduler-master                      1/1     Running             0          17m
## Check the nodes
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES           AGE   VERSION
master   Ready    control-plane   18m   v1.26.3
```

Cluster management commands
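Every `kubectl` command in this section needs a kubeconfig. The setup above exported `KUBECONFIG=/etc/kubernetes/admin.conf` and appended the same line to /etc/profile with vim.

Below is a minimal, idempotent sketch of that step that can be re-run without duplicating the line. The function name `persist_kubeconfig` is hypothetical.

```sh
# Hypothetical helper: append "export KUBECONFIG=..." to profile file $1 only if
# that exact line is not already there, so re-running the setup is harmless.
persist_kubeconfig() {
    pk_profile=$1
    pk_line="export KUBECONFIG=${2:-/etc/kubernetes/admin.conf}"
    # -x: match the whole line, -F: treat it as a fixed string, not a regex
    if ! grep -qxF "$pk_line" "$pk_profile" 2>/dev/null; then
        printf '%s\n' "$pk_line" >> "$pk_profile"
    fi
}
```

On the master this would be `persist_kubeconfig /etc/profile && . /etc/profile`. For a regular (non-root) user, the `kubeadm init` output instead recommends copying admin.conf to `$HOME/.kube/config`.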

```bash
## Show node information
[root@master ~]# kubectl get node
NAME     STATUS   ROLES           AGE    VERSION
master   Ready    control-plane   106m   v1.26.3
node1    Ready    <none>          53m    v1.26.3
node2    Ready    <none>          49m    v1.26.3
## Show node details
[root@master ~]# kubectl get nodes -o wide
NAME     STATUS   ROLES           AGE    VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
master   Ready    control-plane   108m   v1.26.3   10.38.102.71   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.33
node1    Ready    <none>          55m    v1.26.3   10.38.102.72   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.33
node2    Ready    <none>          51m    v1.26.3   10.38.102.73   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.33
## Show help for get
[root@master ~]# kubectl get -h
## Show help for describe
[root@master ~]# kubectl describe -h
## Describe a specific node
[root@master ~]# kubectl describe node master
## List all Pods in the system namespace
[root@master ~]# kubectl get pod -n kube-system
## List the Pods running in all namespaces
[root@master ~]# kubectl get pod -A
## List the Pods running in all namespaces, with details
[root@master ~]# kubectl get pod -A -o wide
```

Core cluster concepts

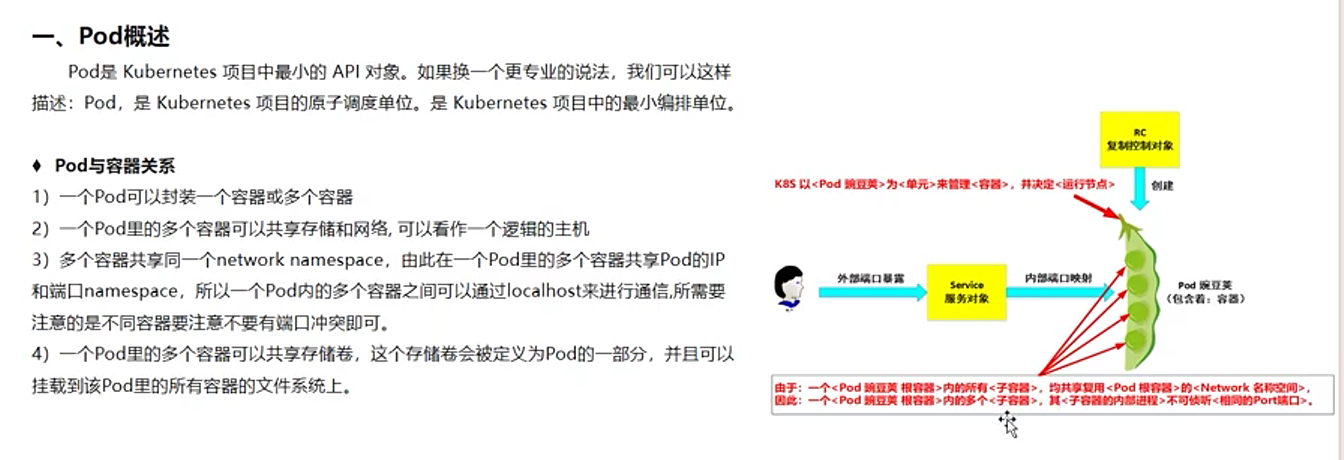

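- Pod: the smallest unit of scheduling and management (a Pod holds one or more containers).

- Service: keeps track of the Pods it fronts, load-balances traffic across them, and gives users a single access point to reach them. (Pods can be reached by IP, but Pod IPs change, so a Service provides a stable interface for a group of Pods.)

- Label: a tag attached to a K8s resource object.

- Label selector: selects resources by their labels (for example, a Service uses one to choose its Pods).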
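Kubernetes supports two kinds of label selector requirements:

- Equality-based: `=`, `==`, `!=`.
- Set-based: `in`, `notin`, and bare key existence.

A selector matches only if all of its requirements match. The POSIX-shell sketch below makes those semantics concrete. It evaluates requirements against a label list in the `k=v,...` format printed by `kubectl get ... --show-labels`. The helpers are hypothetical and cover only a subset of the real grammar.

```sh
# Hypothetical helpers (not part of kubectl) for a "k1=v1,k2=v2" label list.

# Print the value of key $2 in label list $1; return 1 if the key is absent.
label_value() {
    lv_old_ifs=$IFS
    IFS=,
    for lv_pair in $1; do
        if [ "${lv_pair%%=*}" = "$2" ]; then
            IFS=$lv_old_ifs
            printf '%s\n' "${lv_pair#*=}"
            return 0
        fi
    done
    IFS=$lv_old_ifs
    return 1
}

# Succeed if value $1 is in the comma-separated list $2 (spaces ignored).
in_list() {
    il_list=$(printf '%s' "$2" | tr -d ' ')
    case ",$il_list," in
        *",$1,"*) return 0 ;;
    esac
    return 1
}

# Check one requirement: key=value, key==value, key!=value,
# "key in (a,b)", "key notin (a,b)", key (exists), !key (does not exist).
match_requirement() {
    mr_labels=$1
    mr_req=$2
    case $mr_req in
        *' notin ('*)
            mr_key=${mr_req%%" notin ("*}
            mr_vals=${mr_req#*"("}; mr_vals=${mr_vals%")"}
            # a missing key satisfies notin
            mr_val=$(label_value "$mr_labels" "$mr_key") || return 0
            if in_list "$mr_val" "$mr_vals"; then return 1; fi
            return 0 ;;
        *' in ('*)
            mr_key=${mr_req%%" in ("*}
            mr_vals=${mr_req#*"("}; mr_vals=${mr_vals%")"}
            mr_val=$(label_value "$mr_labels" "$mr_key") || return 1
            in_list "$mr_val" "$mr_vals" ;;
        *'!='*)
            mr_key=${mr_req%%"!="*}
            mr_want=${mr_req#*"!="}
            # a missing key satisfies !=
            mr_val=$(label_value "$mr_labels" "$mr_key") || return 0
            [ "$mr_val" != "$mr_want" ] ;;
        *=*)
            mr_key=${mr_req%%=*}
            mr_want=${mr_req##*=}
            mr_val=$(label_value "$mr_labels" "$mr_key") || return 1
            [ "$mr_val" = "$mr_want" ] ;;
        '!'*)
            mr_key=${mr_req#"!"}
            ! label_value "$mr_labels" "$mr_key" >/dev/null ;;
        *)
            label_value "$mr_labels" "$mr_req" >/dev/null ;;
    esac
}

# Succeed only if every requirement matches (requirements are ANDed).
labels_match() {
    lm_labels=$1
    shift
    for lm_req in "$@"; do
        match_requirement "$lm_labels" "$lm_req" || return 1
    done
    return 0
}
```

For example, `labels_match "$node2_labels" 'bussiness in (game,ad)'` mirrors `kubectl get node -l "bussiness in (game,ad)"`. Note that `!=` and `notin` also match objects that lack the key entirely.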

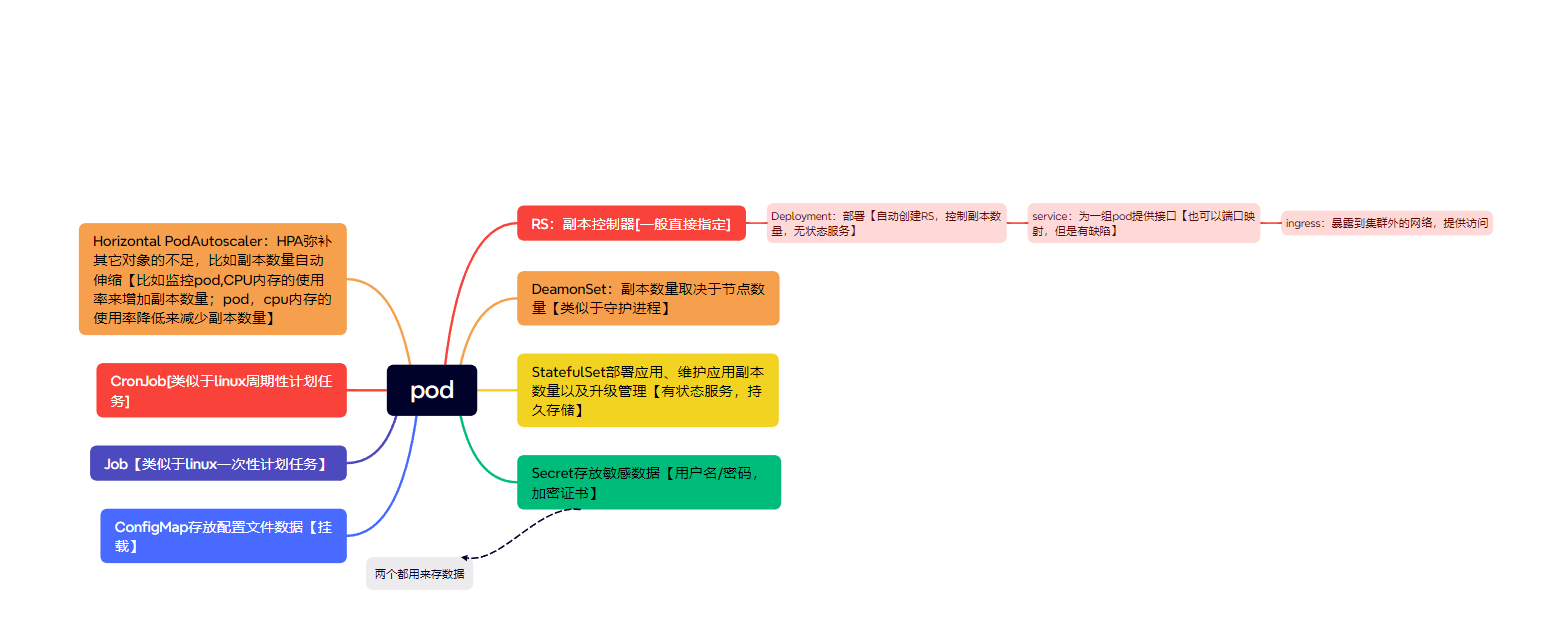

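- Replication controller: a replica controller that continuously keeps the number of Pods at the count the user desires (it controls how many Pods run).

- Replication controller manager (in practice, kube-controller-manager): the management component that runs and watches the various controllers.

- Scheduler: watches the API server for new Pods and assigns each one to a K8s node (it decides which node a Pod runs on).

- DNS: resolves the names of resources inside the cluster so they can be reached by name (in-cluster name resolution).

- Namespace: a very important K8s resource used to isolate resources between multiple environments or multiple tenants; most common resource objects must be placed in a namespace.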
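Namespaces, the last concept above, can be created from a YAML manifest as well as with `kubectl create ns`. Below is a minimal sketch. `namespace_manifest` and `valid_ns_name` are hypothetical helpers, and the name check encodes the RFC 1123 DNS-label rule that Kubernetes applies to namespace names.

```sh
# Hypothetical helper: succeed if $1 is a valid namespace name, i.e. an RFC 1123
# DNS label: lowercase letters, digits and '-', at most 63 characters,
# starting and ending with an alphanumeric character.
valid_ns_name() {
    [ -n "$1" ] && [ "${#1}" -le 63 ] || return 1
    case $1 in
        -*|*-|*[!a-z0-9-]*) return 1 ;;
    esac
    return 0
}

# Hypothetical helper: print a Namespace manifest for the given name.
namespace_manifest() {
    valid_ns_name "$1" || { echo "invalid namespace name: $1" >&2; return 1; }
    cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: $1
EOF
}
```

`namespace_manifest wll | kubectl apply -f -` has the same effect as `kubectl create ns wll`.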

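Resource objects

Stateless services: all instances are equivalent and interchangeable.

Stateful services: instances have distinct identities (for example primary and replica) and need persistent data storage.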

Core concept: namespaces

```bash
## List all namespaces
# The system namespace (kube-system) holds the system Pods; if no namespace is given, the default namespace is used
[root@master ~]# kubectl get namespace
NAME              STATUS   AGE
default           Active   100m
kube-flannel      Active   83m
kube-node-lease   Active   100m
kube-public       Active   100m
kube-system       Active   100m
# Short form: list all namespaces
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   101m
kube-flannel      Active   84m
kube-node-lease   Active   101m
kube-public       Active   101m
kube-system       Active   101m
## Create a namespace named wll (a YAML file can also be used)
[root@master ~]# kubectl create ns wll
namespace/wll created
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   102m
kube-flannel      Active   85m
kube-node-lease   Active   102m
kube-public       Active   102m
kube-system       Active   102m
wll               Active   2s
## Delete the namespace named wll
[root@master ~]# kubectl delete ns wll
namespace "wll" deleted
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   103m
kube-flannel      Active   86m
kube-node-lease   Active   103m
kube-public       Active   103m
kube-system       Active   103m
```

Core concept: labels

A label is a key/value pair attached to a K8s resource, and labels can describe a resource along multiple dimensions. On a single resource object each key must be unique.

```bash
## Show node labels
[root@master ~]# kubectl get nodes --show-labels
NAME     STATUS   ROLES           AGE    VERSION   LABELS
master   Ready    control-plane   112m   v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
node1    Ready    <none>          59m    v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux
node2    Ready    <none>          55m    v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux
## Add a label to node2 (the output confirms that node2 was labeled)
[root@master ~]# kubectl label node node2 env=test
node/node2 labeled
## Show the labels of a specific node
[root@master ~]# kubectl get nodes node2 --show-labels
NAME    STATUS   ROLES    AGE   VERSION   LABELS
node2   Ready    <none>   63m   v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,env=test,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/node2=test,kubernetes.io/os=linux
## Show only the given label, as an extra column
[root@master ~]# kubectl get nodes -L env
NAME     STATUS   ROLES           AGE    VERSION   ENV
master   Ready    control-plane   121m   v1.26.3
node1    Ready    <none>          68m    v1.26.3
node2    Ready    <none>          64m    v1.26.3   test
## Find the nodes that carry a given label
[root@master ~]# kubectl get nodes -l env=test
NAME    STATUS   ROLES    AGE   VERSION
node2   Ready    <none>   66m   v1.26.3
## Change a label's value
# --overwrite=true allows an existing value to be replaced
[root@master ~]# kubectl label node node2 env=dev --overwrite=true
node/node2 not labeled
## Show the labels of a specific node
[root@master ~]# kubectl get nodes node2 --show-labels
NAME    STATUS   ROLES    AGE   VERSION   LABELS
node2   Ready    <none>   70m   v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,env=dev,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/node2=test,kubernetes.io/os=linux
## Remove a label (append "-" to the key)
[root@master ~]# kubectl label node node2 env-
node/node2 unlabeled
[root@master ~]# kubectl get nodes node2 --show-labels
NAME    STATUS   ROLES    AGE   VERSION   LABELS
node2   Ready    <none>   72m   v1.26.3   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/node2=test,kubernetes.io/os=linux
## There are two kinds of label selectors:
##   equality-based: =, !=
##   set-based:      key in (value1,value2,...)
## Define labels
[root@master ~]# kubectl label node node2 bussiness=game
node/node2 labeled
[root@master ~]# kubectl label node node1 bussiness=ad
node/node1 labeled
## Select with a set
[root@master ~]# kubectl get node -l "bussiness in (game,ad)"
NAME    STATUS   ROLES    AGE   VERSION
node1   Ready    <none>   80m   v1.26.3
node2   Ready    <none>   76m   v1.26.3
```

Resource object: annotations (Annotation)

Annotations are handy during upgrades: they make it easy to see what changed when you roll back.

Pod basics

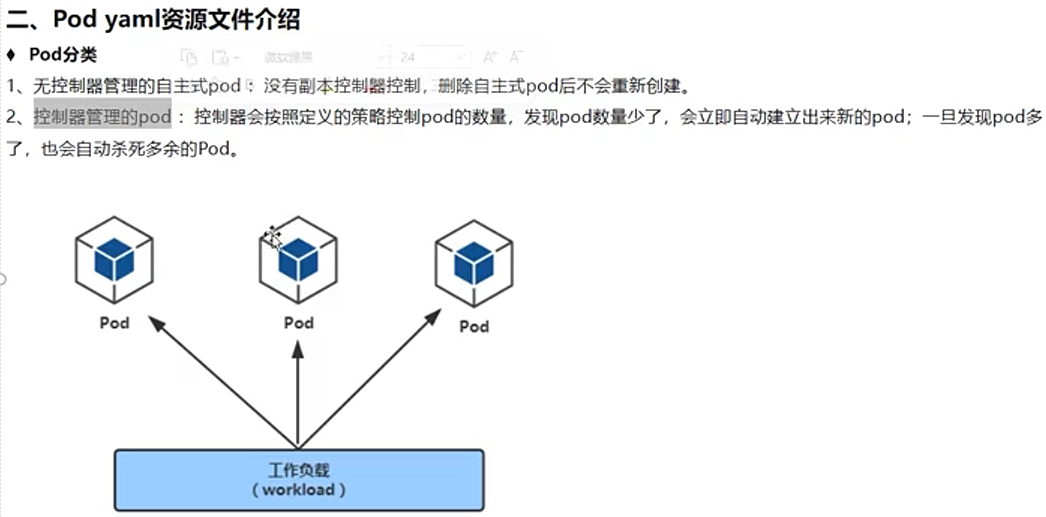

Pod types

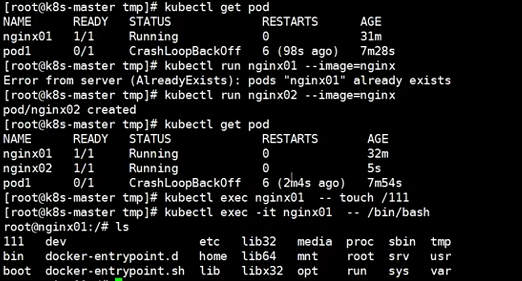

Pod YAML file

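```yaml
apiVersion:          # API version
kind:                # resource type
metadata:            # metadata
  name:              # name
  namespace:         # namespace the Pod belongs to; defaults to "default"
  labels:            # custom labels
    name:            # a custom label
  annotations:       # list of annotations
spec:                # detailed (desired) definition
  containers:        # list of containers
  - name:            # container name
    image:           # image name
    imagePullPolicy: # Always: always pull; Never: never pull; IfNotPresent: use the local image, pull only if it is missing
    command:         # container start command (see also args and workingDir)
    volumeMounts:    # volumes mounted inside the container
    env:             # environment variables
    resources:       # resource constraints; without them, resources are allocated as needed
      limits:        # upper limit
      requests:      # requested amount (lower bound)
    livenessProbe:   # health check; initialDelaySeconds delays the first probe
  restartPolicy:     # restart policy
```

```bash
## Show the top-level fields of a Pod (see FIELDS:)
[root@master ~]# kubectl explain pod
## Show the fields under a given attribute (see FIELDS:)
[root@master ~]# kubectl explain pod.metadata
## List the API versions
[root@master ~]# kubectl api-versions
## Show resource details (short name, API version, whether namespaced, kind)
[root@master ~]# kubectl api-resources | grep pod
pods          po          v1          true          Pod
## Create a Pod
[root@master tmp]# vim pod1.yml
[root@master tmp]# kubectl apply -f pod1.yml
pod/nginx created
[root@master tmp]# cat pod1.yml
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    name: ng01
spec:
  containers:
  - name: nginx
    image: nginx:1.20
    ports:
    - name: webport
      containerPort: 80
## List Pods in the default namespace
[root@master tmp]# kubectl get pod
NAME    READY   STATUS              RESTARTS   AGE
nginx   0/1     ContainerCreating   0          70s
[root@master tmp]# kubectl get pod -n default
NAME    READY   STATUS              RESTARTS   AGE
nginx   0/1     ContainerCreating   0          109s
## Show details of the nginx Pod
[root@master tmp]# kubectl describe pod nginx
## Delete the Pod
[root@master tmp]# kubectl delete pod nginx
```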
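The field outline above lists several optional container fields that pod1.yml does not use. Below is a minimal sketch of a generator that adds them to the same nginx Pod. `pod_manifest` is a hypothetical helper, and the CPU/memory sizes and probe timings are placeholder values chosen for illustration only.

```sh
# Hypothetical helper: print an nginx Pod manifest extending pod1.yml with the
# optional fields from the outline. Resource sizes and probe timings are placeholders.
pod_manifest() {
    pm_name=$1
    pm_image=$2
    pm_pull=${3:-IfNotPresent}
    # imagePullPolicy only accepts these three values
    case $pm_pull in
        Always|Never|IfNotPresent) ;;
        *) echo "invalid imagePullPolicy: $pm_pull" >&2; return 1 ;;
    esac
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $pm_name
  namespace: default
  labels:
    name: ng01
spec:
  restartPolicy: Always        # Always | OnFailure | Never
  containers:
  - name: nginx
    image: $pm_image
    imagePullPolicy: $pm_pull
    ports:
    - name: webport
      containerPort: 80
    resources:
      requests:                # amount used by the scheduler to place the Pod
        cpu: 100m
        memory: 64Mi
      limits:                  # upper bound enforced at runtime
        cpu: 500m
        memory: 128Mi
    livenessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 10  # wait before the first probe
      periodSeconds: 5
EOF
}
```

`pod_manifest nginx nginx:1.20 | kubectl apply -f -` would create the Pod. `kubectl explain pod.spec.containers.livenessProbe` lists the full set of probe fields.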

The Deployment controller resource

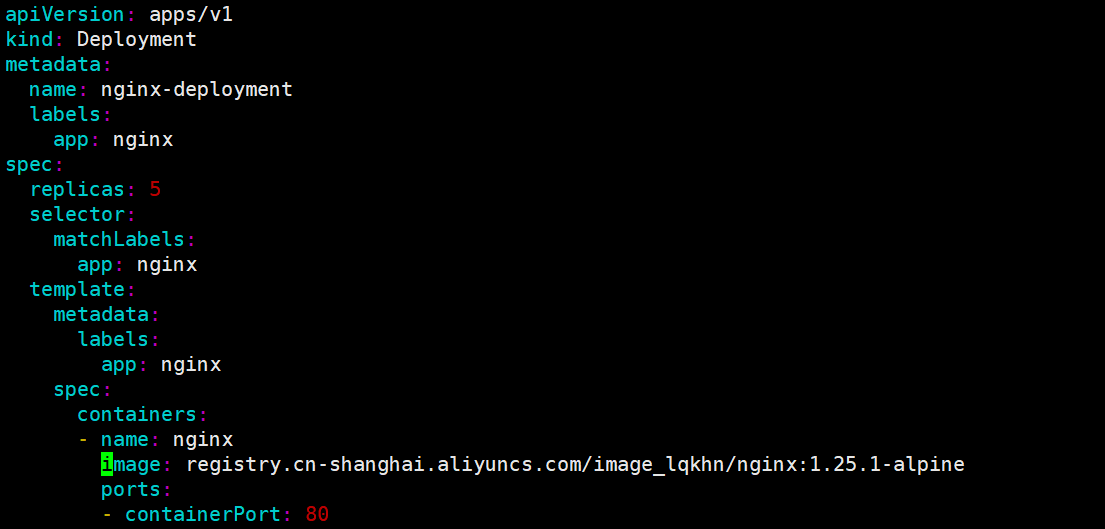
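In the deploy.yaml used below, `spec.selector.matchLabels` must match `spec.template.metadata.labels`. The API server rejects a Deployment whose selector does not match its Pod template labels.

Below is a minimal sketch of a generator that derives all three `app:` labels from one variable so they cannot drift apart. `deployment_manifest` is a hypothetical helper, and its output is meant to be piped to `kubectl apply -f -`.

```sh
# Hypothetical helper: print a Deployment manifest.
# Arguments: name, image, replicas (default 1), app label (default nginx).
deployment_manifest() {
    dm_name=$1
    dm_image=$2
    dm_replicas=${3:-1}
    dm_app=${4:-nginx}
    case $dm_replicas in
        ''|*[!0-9]*) echo "replicas must be a non-negative integer" >&2; return 1 ;;
    esac
    # The same $dm_app feeds metadata.labels, selector.matchLabels and template labels
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $dm_name
  labels:
    app: $dm_app
spec:
  replicas: $dm_replicas
  selector:
    matchLabels:          # must equal spec.template.metadata.labels
      app: $dm_app
  template:
    metadata:
      labels:
        app: $dm_app
    spec:
      containers:
      - name: $dm_app
        image: $dm_image
        ports:
        - containerPort: 80
EOF
}
```

For example: `deployment_manifest nginx-deployment registry.cn-shanghai.aliyuncs.com/image_lqkhn/nginx:1.25.1-alpine 5 | kubectl apply -f -`.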

Create Pod replicas with a Deployment:

```bash
[root@master ~]# vim deploy.yaml
[root@master tmp]# cat deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:          ## Note: this spec is at the same level as template.metadata; keep the indentation consistent
      containers:
      - name: nginx
        image: registry.cn-shanghai.aliyuncs.com/image_lqkhn/nginx:1.25.1-alpine
        ports:
        - containerPort: 80
## List the Pods
[root@master ~]# kubectl get pod
No resources found in default namespace.
# Create the Pods through the Deployment
[root@master tmp]# kubectl apply -f deploy.yaml
deployment.apps/nginx-deployment created
[root@master tmp]# kubectl get deploy
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   5/5     5            5           11s
[root@master tmp]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-74bd6d8c48-4wlkz   1/1     Running   0          15s
nginx-deployment-74bd6d8c48-6zdmq   1/1     Running   0          15s
nginx-deployment-74bd6d8c48-mlsq4   1/1     Running   0          15s
nginx-deployment-74bd6d8c48-vcmnk   1/1     Running   0          15s
nginx-deployment-74bd6d8c48-xvn82   1/1     Running   0          15s
[root@master tmp]# kubectl get rs
NAME                          DESIRED   CURRENT   READY   AGE
nginx-deployment-74bd6d8c48   5         5         5       20s
## Deleting a Deployment automatically cascades to all of its ReplicaSets and Pods
## View the resources
[root@master tmp]# kubectl get deployment
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deployment   0/5     5            0           13h
[root@master tmp]# kubectl get pod
NAME                               READY   STATUS             RESTARTS   AGE
nginx-deployment-584f64656-44p4s   0/1     ImagePullBackOff   0          13h
nginx-deployment-584f64656-kh4bj   0/1     ImagePullBackOff   0          13h
nginx-deployment-584f64656-nrb9m   0/1     ImagePullBackOff   0          13h
nginx-deployment-584f64656-zc4xx   0/1     ErrImagePull       0          13h
nginx-deployment-584f64656-zdqx2   0/1     ImagePullBackOff   0          13h
[root@master tmp]# kubectl get rs
NAME                         DESIRED   CURRENT   READY   AGE
nginx-deployment-584f64656   5         5         0       13h
## Delete the controller resource
[root@master tmp]# kubectl delete deployment nginx-deployment
deployment.apps "nginx-deployment" deleted
## Verify
[root@master tmp]# kubectl get deployments,pods,replicasets
No resources found in default namespace.
```

Horizontal scaling

A Deployment integrates rolling updates, management of the Pod replica count and other features; it contains and uses ReplicaSets (RS).

1. Horizontally scaling the Pod replicas of a Deployment

```bash
## Method 1: edit the live resource directly
[root@master tmp]# kubectl edit deploy nginx-deployment
#...
#spec:
#  progressDeadlineSeconds: 600
#  replicas: 3        ## change the replica count to 3, then save and exit
#...
deployment.apps/nginx-deployment edited
## List the Pods; the change takes effect immediately
[root@master tmp]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-74bd6d8c48-4wlkz   1/1     Running   0          28m
nginx-deployment-74bd6d8c48-mlsq4   1/1     Running   0          28m
nginx-deployment-74bd6d8c48-vcmnk   1/1     Running   0          28m
## Method 2: edit deploy.yaml; the change only takes effect after the file is re-applied with kubectl apply -f deploy.yaml
## Method 3: scale from the command line
[root@master tmp]# kubectl scale --replicas=2 deploy/nginx-deployment
deployment.apps/nginx-deployment scaled
[root@master tmp]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-74bd6d8c48-mlsq4   1/1     Running   0          30m
nginx-deployment-74bd6d8c48-vcmnk   1/1     Running   0          30m
```

Updating a Deployment
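Scaling method 2 above and the image update in this section both come down to editing deploy.yaml and re-applying it. Below is a minimal sketch of making those edits non-interactively with GNU `sed`. `set_replicas` and `set_image` are hypothetical helpers that assume a manifest shaped like deploy.yaml: a single two-space-indented `replicas:` line and a single container with an indented `image:` line.

To change only the image, `kubectl set image deployment/nginx-deployment nginx=<new image>` updates the live object without touching the file.

```sh
# Hypothetical helpers for a manifest shaped like deploy.yaml (GNU sed -i).

# Set spec.replicas in file $1 to $2.
set_replicas() {
    case $2 in
        ''|*[!0-9]*) echo "replicas must be a non-negative integer" >&2; return 1 ;;
    esac
    sed -i "s/^  replicas: [0-9][0-9]*\$/  replicas: $2/" "$1"
}

# Replace the container image in file $1 with $2, keeping the indentation.
set_image() {
    sed -i "s#^\([[:space:]]*\)image: .*#\1image: $2#" "$1"
}
```

For example: `set_image deploy.yaml registry.cn-shanghai.aliyuncs.com/aliyun_lqkhn/nginx:1.27.1 && kubectl apply -f deploy.yaml`.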

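- Update process

- Before the update: the Deployment manages the RS whose name starts with "74bd6d", with five Pod replicas.

- After the update: a new RS whose name starts with "5b55b" has been created, with five Pod replicas.

- During the update: the old RS is scaled down and its Pods are terminated while the new RS is scaled up.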
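During such an update, how far the old and new ReplicaSets may overlap is bounded by the RollingUpdateStrategy shown in the `kubectl describe deployment` output below: 25% max unavailable and 25% max surge by default. Kubernetes converts a maxSurge percentage to a Pod count by rounding up and a maxUnavailable percentage by rounding down.

Below is a small sketch of that arithmetic. The helper name `rolling_bounds` is hypothetical.

```sh
# Hypothetical helper: print "<min available> <max total>" Pod counts for a
# RollingUpdate. Arguments: replicas, maxSurge %, maxUnavailable % (default 25 each).
rolling_bounds() {
    rb_replicas=$1
    rb_surge_pct=${2:-25}
    rb_unavail_pct=${3:-25}
    rb_surge=$(( (rb_replicas * rb_surge_pct + 99) / 100 ))   # maxSurge: round up
    rb_unavail=$(( rb_replicas * rb_unavail_pct / 100 ))      # maxUnavailable: round down
    echo "$(( rb_replicas - rb_unavail )) $(( rb_replicas + rb_surge ))"
}
```

`rolling_bounds 5` prints `4 7`: with 5 desired replicas, at least 4 Pods stay available and at most 7 exist at once. This matches the events below, where the new ReplicaSet reaches 2 while the old one is still at 5. `rolling_bounds 8` prints `6 10`.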

A rollout is triggered only when the Pod template changes, for example its labels or its container image.

```bash
## Method 1: edit the YAML file directly
## Update the image version to 1.27
[root@master tmp]# vim deploy.yaml
[root@master tmp]# cat deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-shanghai.aliyuncs.com/aliyun_lqkhn/nginx:1.27.1
        ports:
        - containerPort: 80
## Watch the update; this update strategy is called a rolling update (the prefixes 5b55b and 74bd6 are the RS names)
[root@master tmp]# kubectl apply -f deploy.yaml
deployment.apps/nginx-deployment configured
[root@master tmp]# kubectl get pod
NAME                                READY   STATUS              RESTARTS   AGE
nginx-deployment-5b55b95f79-9vjl8   0/1     ContainerCreating   0          9s
nginx-deployment-5b55b95f79-b9wqx   0/1     ContainerCreating   0          9s
nginx-deployment-5b55b95f79-jfkhj   0/1     ContainerCreating   0          9s
nginx-deployment-74bd6d8c48-5kzhx   1/1     Running             0          9s
nginx-deployment-74bd6d8c48-6g4rd   1/1     Running             0          9s
nginx-deployment-74bd6d8c48-mlsq4   1/1     Running             0          35m
nginx-deployment-74bd6d8c48-vcmnk   1/1     Running             0          35m
## All running Pods are now the new version
[root@master tmp]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-5b55b95f79-77c9r   1/1     Running   0          43s
nginx-deployment-5b55b95f79-9vjl8   1/1     Running   0          55s
nginx-deployment-5b55b95f79-b9wqx   1/1     Running   0          55s
nginx-deployment-5b55b95f79-jfkhj   1/1     Running   0          55s
nginx-deployment-5b55b95f79-pgx6n   1/1     Running   0          43s
## Show the details of one Pod, including its image version
[root@master tmp]# kubectl describe pod nginx-deployment-5b55b95f79-pgx6n
...
Containers:
  nginx:
    Container ID:   containerd://707406779de1a7a08f11c9fa22123d662ff88caf2b9ceea9f4b57a7d619b84e5
    Image:          registry.cn-shanghai.aliyuncs.com/aliyun_lqkhn/nginx:1.27.1
## Show the Deployment details to follow the update process
[root@master tmp]# kubectl describe deployment
...
Events:
  Type    Reason             Age                From                   Message
  ----    ------             ----               ----                   -------
  Normal  ScalingReplicaSet  50m                deployment-controller  Scaled up replica set nginx-deployment-74bd6d8c48 to 5
  Normal  ScalingReplicaSet  24m                deployment-controller  Scaled down replica set nginx-deployment-74bd6d8c48 to 3 from 5
  Normal  ScalingReplicaSet  15m                deployment-controller  Scaled up replica set nginx-deployment-74bd6d8c48 to 5 from 2
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled up replica set nginx-deployment-5b55b95f79 to 2
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled down replica set nginx-deployment-74bd6d8c48 to 4 from 5   ## old Pods: 5 -> 4
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled up replica set nginx-deployment-5b55b95f79 to 3 from 2     ## new Pods: 2 -> 3
  Normal  ScalingReplicaSet  14m (x2 over 19m)  deployment-controller  Scaled down replica set nginx-deployment-74bd6d8c48 to 2 from 3
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled down replica set nginx-deployment-74bd6d8c48 to 3 from 4
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled up replica set nginx-deployment-5b55b95f79 to 4 from 3
  Normal  ScalingReplicaSet  14m                deployment-controller  Scaled up replica set nginx-deployment-5b55b95f79 to 5 from 4
  Normal  ScalingReplicaSet  14m (x2 over 14m)  deployment-controller  (combined from similar events): Scaled down replica set nginx-deployment-74bd6d8c48 to 0 from 1
## Show the Deployment
[root@master tmp]# kubectl describe deployment
Name:                   nginx-deployment
Namespace:              default
CreationTimestamp:      Wed, 09 Jul 2025 09:03:18 +0800
Labels:                 app=nginx
Annotations:            deployment.kubernetes.io/revision: 2
Selector:               app=nginx
Replicas:               5 desired | 5 updated | 5 total | 5 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
```

"25% max unavailable, 25% max surge" means that at least 75% of the desired Pods stay available during the update, and by default the total number of Pods may exceed the desired count by up to 25%. For example, with a desired count of 8 the Pod count stays between 6 and 10. With the desired count of 5 above, max unavailable rounds down to 1 Pod and max surge rounds up to 2 Pods. The rollout therefore keeps at least 4 Pods available and runs at most 7 Pods at a time. The pace of the update can be tuned with the Deployment's `strategy.rollingUpdate.maxUnavailable` and `maxSurge` settings, shown above as RollingUpdateStrategy.

Deployment rollback

##Show the revision history[root@master tmp]# kubectl rollout history deployment nginx-deploymentdeployment.apps/nginx-deployment REVISION CHANGE-CAUSE1 2 ##Show the details of a recorded revision[root@master tmp]# kubectl rollout history deployment nginx-deployment --revision=1deployment.apps/nginx-deployment with revision #1Pod Template: Labels:app=nginxpod-template-hash=74bd6d8c48 Containers: ngin