Detailed Guide to Deploying Kubernetes v1.32.6

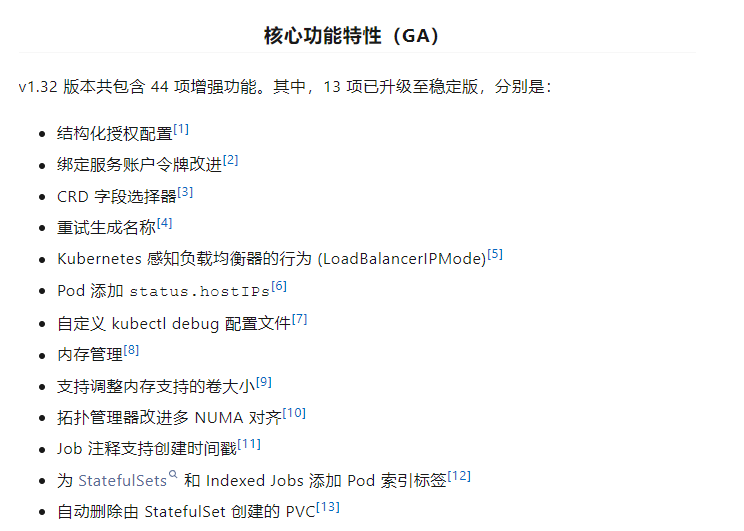

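As with previous releases, Kubernetes v1.32 introduces new stable, beta, and alpha features. The consistent delivery of high-quality releases underscores the strength of our development cycle and the vitality of the community. This release contains 44 enhancements in total: 13 graduated to stable, 12 entered beta, and 19 entered alpha.

Release theme and logo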

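The release theme of Kubernetes v1.32 is Penelope. If Kubernetes is Ancient Greek for "helmsman", in this release we start from that origin and look back on what Kubernetes has achieved over the past 10 years: every release cycle is a journey, and every release adds new features and removes some old ones, but with a much clearer purpose, which is to keep improving Kubernetes. With v1.32 being the last release of the year in which Kubernetes marks its tenth anniversary, we want to honor everyone who has been part of the global Kubernetes crew, sailing the cloud-native seas through difficulties and challenges: may we continue to weave the future of Kubernetes together.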

Host configuration

Set the hostname. Run the matching command on each machine separately:

    hostnamectl set-hostname k8s-master
    hostnamectl set-hostname k8s-node1
    hostnamectl set-hostname k8s-node2
    hostnamectl set-hostname k8s-node3

Every machine needs the following hosts entries
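The hosts step appends to /etc/hosts with `>>`, so running it twice leaves duplicate entries. As a hedged sketch only, a helper like the one below adds an entry just once. The function name `add_host_entry` and its file argument are assumptions for illustration and are not part of the original procedure.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless a non-comment line already maps NAME.
# FILE is a parameter so the helper can be tried on a scratch copy first.
add_host_entry() {
    if awk -v n="$3" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit found ? 0 : 1 }' "$1" 2>/dev/null; then
        return 0    # NAME is already present, nothing to do
    fi
    printf '%s %s\n' "$2" "$3" >> "$1"
}

# Example (as root, on every machine):
#   add_host_entry /etc/hosts 172.16.10.130 k8s-master
#   add_host_entry /etc/hosts 172.16.10.131 k8s-node1
```

The check compares whole fields, so k8s-node1 does not match a hypothetical k8s-node10.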

    cat >> /etc/hosts << EOF
    172.16.10.130 k8s-master
    172.16.10.131 k8s-node1
    172.16.10.132 k8s-node2
    172.16.10.133 k8s-node3
    EOF

Configure passwordless SSH login (run on k8s-master only)

    [root@k8s-master ~]# ssh-keygen -f ~/.ssh/id_rsa -N '' -q

Copy the key to the other three nodes

    [root@k8s-master ~]# ssh-copy-id k8s-node1
    [root@k8s-master ~]# ssh-copy-id k8s-node2
    [root@k8s-master ~]# ssh-copy-id k8s-node3

Firewall and SELinux

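    # Disable the firewall
    systemctl disable --now firewalld
    # Disable SELinux
    sed -i '/^SELINUX=/ c SELINUX=disabled' /etc/selinux/config
    # The change above only takes effect after a reboot, so switch to permissive mode for now
    setenforce 0

Time synchronization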

    # Install the time service package
    dnf install -y chrony
    # Change the sync server
    sed -i '/^pool/ c pool ntp1.aliyun.com iburst' /etc/chrony.conf
    systemctl restart chronyd
    systemctl enable chronyd
    chronyc sources

Configure kernel forwarding and bridge filtering

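    # Add the bridge-filtering and kernel-forwarding configuration file
    # (net.ipv4.ip_forward itself is set later, in k8s_better.conf)
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    vm.swappiness = 0
    EOF
    # Load the br_netfilter module
    modprobe br_netfilter

Apply the newly added configuration file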

    sysctl -p /etc/sysctl.d/k8s.conf

Disable swap

Check the current swap usage (for example with free -h or swapon --show), then disable swap
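The text asks to check swap first, but no check command is shown. The following is a minimal sketch of two helpers, both hypothetical names: `active_swap_count` counts the entries in /proc/swaps, and `comment_out_swap` prints an fstab with swap lines commented out without stacking extra `#` characters on a rerun. It matches lines that have a field equal to swap, which is slightly narrower than a plain pattern match on the word.

```shell
# active_swap_count [FILE]
# Prints the number of active swap areas listed in FILE (default /proc/swaps).
# The first line of /proc/swaps is a header, so it is skipped.
active_swap_count() {
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# comment_out_swap FILE
# Prints FILE with every uncommented line that has a "swap" field commented out.
# Lines that are already comments are passed through unchanged.
comment_out_swap() {
    awk '
        /^[ \t]*#/ { print; next }
        {
            for (i = 1; i <= NF; i++)
                if ($i == "swap") { print "#" $0; next }
            print
        }' "$1"
}

# Example:
#   active_swap_count                    # 0 means no swap is active
#   comment_out_swap /etc/fstab | diff /etc/fstab -   # preview the change
```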

    # Disable swap for the running system
    swapoff -a
    # Disable the swap partition permanently
    sed -i 's/.*swap.*/#&/' /etc/fstab

Enable IPVS

    cat >> /etc/modules-load.d/ipvs.conf << EOF
    br_netfilter
    ip_conntrack
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    ip_vs_sh
    nf_conntrack
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip
    EOF
    # Install dependencies
    dnf install ipvsadm ipset sysstat conntrack libseccomp -y

Restart the module-loading service

    systemctl restart systemd-modules-load.service

Check the loaded modules
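Grepping lsmod shows what is loaded, but not which requested modules failed to load. As a rough sketch, the hypothetical helper `missing_modules` below compares a module list against /proc/modules. Two caveats apply: modules compiled into the kernel never appear in /proc/modules, and some older names (ip_conntrack, for instance) may not exist on newer kernels, so a reported name is a hint rather than an error.

```shell
# missing_modules LIST_FILE [LOADED_FILE]
# Prints every module named in LIST_FILE that is absent from LOADED_FILE
# (default /proc/modules, whose first column is the module name).
missing_modules() {
    awk '
        NR == FNR { loaded[$1] = 1; next }              # first file: loaded modules
        NF && $1 !~ /^#/ && !($1 in loaded) { print $1 } # second file: requested list
    ' "${2:-/proc/modules}" "$1"
}

# Example:
#   missing_modules /etc/modules-load.d/ipvs.conf
```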

    lsmod | grep -e ip_vs -e nf_conntrack

Raise the file handle limits
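The kernel does not accept a soft limit above the corresponding hard limit, so the soft and hard nofile values written to limits.conf need to be consistent. The sketch below is one possible way to check a limits file before relying on it. The helper name `check_nofile_limits` is an assumption, and it only looks at nofile entries.

```shell
# check_nofile_limits FILE
# Prints each domain whose soft nofile value exceeds its hard nofile value
# and returns 1 if any such domain is found, 0 otherwise.
check_nofile_limits() {
    awk '
        $1 ~ /^#/ || NF < 4 { next }
        $3 == "nofile" && $2 == "soft" { soft[$1] = $4 }
        $3 == "nofile" && $2 == "hard" { hard[$1] = $4 }
        END {
            for (d in soft)
                if ((d in hard) && soft[d] + 0 > hard[d] + 0) {
                    printf "%s: soft nofile %s exceeds hard nofile %s\n", d, soft[d], hard[d]
                    bad = 1
                }
            exit bad + 0
        }' "$1"
}

# Example:
#   check_nofile_limits /etc/security/limits.conf
```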

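    # Raise the limit for the current shell
    ulimit -SHn 65535
    # The hard nofile value is set equal to the soft value here:
    # a soft limit cannot exceed the hard limit (the original listed hard nofile as 131072)
    cat >> /etc/security/limits.conf <<EOF
    * soft nofile 655360
    * hard nofile 655360
    * soft nproc 655350
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited
    EOF
    # Check the result
    ulimit -a

System tuning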
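After sysctl -p has been run in this step, it can be useful to confirm that the running kernel actually holds the values from the file. This is a small sketch under the assumption that every key is single-valued. The three function names are hypothetical, and keys whose module is not loaded (the net.bridge ones need br_netfilter) show up as unreadable.

```shell
# sysctl_key_to_path KEY
# Maps a sysctl key such as net.ipv4.ip_forward to its /proc/sys path.
sysctl_key_to_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# list_sysctl_settings FILE
# Prints "key value" pairs from a sysctl.d-style file, skipping comments and
# blank lines and tolerating spaces around "=".
list_sysctl_settings() {
    awk -F= '!/^[ \t]*[#;]/ && NF >= 2 {
        k = $1; v = $2
        gsub(/[ \t]/, "", k)
        gsub(/^[ \t]+|[ \t]+$/, "", v)
        print k, v
    }' "$1"
}

# report_sysctl_drift FILE
# Prints one line for each key whose live value differs from the file.
report_sysctl_drift() {
    list_sysctl_settings "$1" | while read -r key want; do
        have=$(cat "$(sysctl_key_to_path "$key")" 2>/dev/null) || have=""
        [ "$have" = "$want" ] ||
            printf '%s: want %s, have %s\n' "$key" "$want" "${have:-<unreadable>}"
    done
}

# Example:
#   report_sysctl_drift /etc/sysctl.d/k8s_better.conf
```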

    cat > /etc/sysctl.d/k8s_better.conf << EOF
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.ipv4.ip_forward=1
    vm.swappiness=0
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_instances=8192
    fs.inotify.max_user_watches=1048576
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv6.conf.all.disable_ipv6=1
    net.netfilter.nf_conntrack_max=2310720
    EOF
    modprobe br_netfilter
    lsmod | grep conntrack
    modprobe ip_conntrack
    sysctl -p /etc/sysctl.d/k8s_better.conf

Install Docker

    # Step 1: install dependencies
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Step 2: add the package repository
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/rhel/docker-ce.repo
    # Step 3: install Docker CE
    yum -y install docker-ce
    docker -v
    # Docker version 27.5.1, build 9f9e405

    # Configure domestic (China) registry mirrors
    # ('>' is used so that an existing daemon.json is replaced;
    #  appending with '>>' to an existing file would produce invalid JSON)
    mkdir -p /etc/docker/
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": [
        "https://p3kgr6db.mirror.aliyuncs.com",
        "https://docker.m.daocloud.io",
        "https://your_id.mirror.aliyuncs.com",
        "https://docker.nju.edu.cn/",
        "https://docker.anyhub.us.kg",
        "https://dockerhub.jobcher.com",
        "https://dockerhub.icu",
        "https://docker.ckyl.me",
        "https://cr.console.aliyun.com"
      ],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    # Enable Docker at boot and start it now
    systemctl enable --now docker
    # Check the Docker version
    docker version

Install cri-dockerd

Download page: Releases · Mirantis/cri-dockerd (github.com)

    https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.16/cri-dockerd-0.3.16-3.fc35.x86_64.rpm

Install cri-dockerd

    # Download the RPM packages
    wget -c https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.16/cri-dockerd-0.3.16-3.fc35.x86_64.rpm
    wget -c https://rpmfind.net/linux/almalinux/8.10/BaseOS/x86_64/os/Packages/libcgroup-0.41-19.el8.x86_64.rpm
    # Install the RPM packages
    yum install libcgroup-0.41-19.el8.x86_64.rpm
    yum install cri-dockerd-0.3.16-3.fc35.x86_64.rpm

Enable the cri-docker service at boot

    systemctl enable cri-docker

Configure a domestic (China) image mirror for cri-docker
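This step changes the ExecStart line of the unit file by hand in vim. For repeatable setups, the same change could be scripted. The sketch below assumes the unit file has a single line starting with ExecStart= and uses GNU sed. The function name `set_cri_dockerd_execstart` is hypothetical, and the default image is the one given in this guide.

```shell
# set_cri_dockerd_execstart FILE [PAUSE_IMAGE]
# Rewrites the ExecStart= line of a cri-docker.service unit file in place so
# that cri-dockerd pulls the pause image from PAUSE_IMAGE.
set_cri_dockerd_execstart() {
    pause_image=${2:-registry.aliyuncs.com/google_containers/pause:3.9}
    sed -i "s|^ExecStart=.*|ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=${pause_image} --container-runtime-endpoint fd://|" "$1"
}

# Example (as root), followed by the reload and restart shown in this step:
#   set_cri_dockerd_execstart /usr/lib/systemd/system/cri-docker.service
```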

    # Edit the service file
    vim /usr/lib/systemd/system/cri-docker.service
    # Change line 10 of the file to:
    ------------------
    ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://
    -----------------------------------
    # Restart the Docker components
    systemctl daemon-reload && systemctl restart docker cri-docker.socket cri-docker
    # Check the status of the Docker components
    systemctl status docker cri-docker.socket cri-docker

Install the Kubernetes packages

    # 1. Configure the Kubernetes repository
    cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/rpm/
    enabled=1
    gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.32/rpm/repodata/repomd.xml.key
    EOF
    # 2. List all available versions
    yum list kubelet --showduplicates | sort -r | grep 1.32
    # 3. Install kubelet, kubeadm, kubectl and kubernetes-cni
    yum install -y kubelet kubeadm kubectl kubernetes-cni
    # 4. Configure the cgroup driver. To keep the cgroup driver used by Docker
    #    consistent with the one used by kubelet, edit the following file
    #    (set this on every node)
    vim /etc/sysconfig/kubelet
    ---------------------
    KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
    ---------------------
    # 5. Only enable kubelet at boot. No configuration file exists yet,
    #    so it starts automatically once the cluster has been initialized
    systemctl enable kubelet

Initialize the Kubernetes cluster
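The edits to kubeadm-init.yaml described in this step are done by hand. As an illustrative sketch only, they could also be applied with sed. The helper name `patch_kubeadm_config` is hypothetical, GNU sed is assumed, and the patterns assume the field names printed by kubeadm config print init-defaults as quoted in this guide. Check the resulting file before running kubeadm init.

```shell
# patch_kubeadm_config FILE MASTER_IP NODE_NAME
# Applies the edits listed in this step to a kubeadm init-defaults file and
# appends the kube-proxy IPVS section if it is not already present.
patch_kubeadm_config() {
    sed -i \
        -e "s|^\( *advertiseAddress:\).*|\1 $2|" \
        -e 's|^\( *criSocket:\).*|\1 unix:///var/run/cri-dockerd.sock|' \
        -e "s|^\( *name:\) node\$|\1 $3|" \
        -e 's|^imageRepository:.*|imageRepository: registry.aliyuncs.com/google_containers|' \
        -e 's|^kubernetesVersion:.*|kubernetesVersion: 1.32.6|' \
        "$1"
    # Append the KubeProxyConfiguration document only once
    grep -q '^kind: KubeProxyConfiguration' "$1" || cat >> "$1" <<'EOF'
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Example:
#   kubeadm config print init-defaults > kubeadm-init.yaml
#   patch_kubeadm_config kubeadm-init.yaml 192.168.1.30 k8s-master
```

Running the helper twice leaves the file unchanged the second time, which makes it safe to use in a provisioning script.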

    # Run on the k8s-master node only
    [root@localhost ~]# kubeadm config print init-defaults > kubeadm-init.yaml

    # Edit kubeadm-init.yaml and change the following settings:
    # - advertiseAddress: the control-plane address (the master host's IP).
    #   Use the IP of your own master; this example uses 192.168.1.30, while the
    #   hosts file earlier in this guide lists k8s-master as 172.16.10.130.
    #     advertiseAddress: 1.2.3.4
    #   change to
    #     advertiseAddress: 192.168.1.30
    # - criSocket: the container runtime socket (containerd by default); point it at cri-dockerd
    #     criSocket: unix:///var/run/containerd/containerd.sock
    #   change to
    #     criSocket: unix:///var/run/cri-dockerd.sock
    # - name: change node to k8s-master
    #     name: node
    #   change to
    #     name: k8s-master
    # - imageRepository: use the Aliyun mirror, otherwise pulling the images fails
    #     imageRepository: registry.k8s.io
    #   change to
    #     imageRepository: registry.aliyuncs.com/google_containers
    # - kubernetesVersion: the Kubernetes version
    #     kubernetesVersion: 1.32.0
    #   change to
    #     kubernetesVersion: 1.32.6

    # Append the following to the end of the file to enable IPVS
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    mode: ipvs

    # Initialize the cluster from the configuration file
    $ kubeadm init --config=kubeadm-init.yaml --upload-certs --v=6

Output:

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.1.30:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:9d25c16abfec6ff6832ed2260c6c998d3fa6fedef61529d88520d3038bdbdde5

Join the worker nodes to the cluster
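The bootstrap token in the default kubeadm configuration has a limited lifetime (ttl: 24h0m0s), so the join command from the init output may no longer work when a node is added later. On the master, kubeadm token create --print-join-command prints a fresh one. The sketch below, with the hypothetical helper name `with_cri_socket`, appends the cri-dockerd socket flag that this setup needs.

```shell
# with_cri_socket JOIN_COMMAND...
# Prints the given join command with the cri-dockerd socket flag appended,
# unless the command already carries a --cri-socket flag.
with_cri_socket() {
    case "$*" in
        *--cri-socket*) printf '%s\n' "$*" ;;
        *) printf '%s --cri-socket unix:///var/run/cri-dockerd.sock\n' "$*" ;;
    esac
}

# Example (on k8s-master), then run the printed command on the worker as root:
#   with_cri_socket "$(kubeadm token create --print-join-command)"
```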

    # Note: when joining the cluster, add --cri-socket unix:///var/run/cri-dockerd.sock
    kubeadm join 192.168.1.30:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:9d25c16abfec6ff6832ed2260c6c998d3fa6fedef61529d88520d3038bdbdde5 \
        --cri-socket unix:///var/run/cri-dockerd.sock

Install the cluster network plugin
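The CALICO_IPV4POOL_CIDR setting in this step is edited by hand in vim. A scripted variant might look like the sketch below. It assumes the downloaded manifest ships the two lines commented out with the default value 192.168.0.0/16 and that GNU sed is used. If the manifest differs, the file is left unchanged, so inspect the result afterwards. The function name `set_calico_pool_cidr` is hypothetical.

```shell
# set_calico_pool_cidr FILE CIDR
# Uncomments the CALICO_IPV4POOL_CIDR entry in a Calico manifest and sets its
# value to CIDR, keeping the indentation of the surrounding env list.
set_calico_pool_cidr() {
    sed -i \
        -e 's|^\( *\)# \(- name: CALICO_IPV4POOL_CIDR\)|\1\2|' \
        -e "s|^\( *\)#   value: \"192.168.0.0/16\"|\1  value: \"$2\"|" \
        "$1"
}

# Example:
#   set_calico_pool_cidr calico.yaml 10.244.0.0/16
#   grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```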

    # Download the Calico manifest
    wget --no-check-certificate https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/calico.yaml
    # Edit the Calico manifest and set the pod CIDR
    vim calico.yaml
    - name: CALICO_IPV4POOL_CIDR
      value: "10.244.0.0/16"
    # The images can be pulled in advance. If the official registry is unreachable,
    # try downloading the images manually from quay.io, a public image registry.
    docker pull calico/cni:v3.28.0
    docker pull calico/node:v3.28.0
    docker pull calico/kube-controllers:v3.28.0
    # Apply the Calico manifest
    kubectl apply -f calico.yaml

kubectl command auto-completion

    yum -y install bash-completion
    source /usr/share/bash-completion/bash_completion
    source <(kubectl completion bash)
    echo "source <(kubectl completion bash)" >> ~/.bashrc

Install Helm v3.16.3

    wget https://get.helm.sh/helm-v3.16.3-linux-amd64.tar.gz
    tar xf helm-v3.16.3-linux-amd64.tar.gz
    cd linux-amd64/
    mv helm /usr/local/bin
    helm version

Deploy dynamic StorageClass (NFS) storage

    # Run on the k8s-master node
    yum -y install nfs-utils
    echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
    mkdir -p /nfs/data/
    chmod 777 -R /nfs/data/
    systemctl enable rpcbind
    systemctl enable nfs-server
    systemctl start rpcbind
    systemctl start nfs-server
    exportfs -v

Create the nfs-provisioner

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner        # ServiceAccount name; must match the one used in the Deployment
      namespace: kube-system              # namespace
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1   # cluster-wide role
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get","list","watch","create","delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get","list","watch","update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get","list","watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create","update","patch"]
    ---
    kind: ClusterRoleBinding              # binds the ServiceAccount to the ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount              # subject type is a ServiceAccount
        name: nfs-client-provisioner      # must match the ServiceAccount name
        namespace: kube-system            # same namespace the provisioner is installed in
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: kube-system              # same namespace the provisioner is installed in
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get","list","watch","create","update","patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: kube-system              # same namespace the provisioner is installed in
    subjects:
      - kind: ServiceAccount              # subject type is a ServiceAccount
        name: nfs-client-provisioner      # must match the ServiceAccount name
        namespace: kube-system            # same namespace the provisioner is installed in
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      namespace: kube-system              # deploy into this namespace
    spec:
      replicas: 1                         # replica count; an odd number is recommended [1,3,5,7,9]
      strategy:
        type: Recreate                    # use the Recreate upgrade strategy
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner   # ServiceAccount name, defined in nfs-rbac.yaml
          containers:
            - name: nfs-client-provisioner             # container name
              image: k8s.m.daocloud.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2   # image address; a private registry is used here
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes        # mount directory inside the container
              env:
                - name: PROVISIONER_NAME               # name under which storage is provided
                  value: nfsnas                        # provisioner name; the StorageClass must use the same value
                - name: NFS_SERVER                     # address of the NFS server
                  value: 192.168.1.254                 # replace with your NFS server address
                - name: NFS_PATH                       # exported directory on the NFS server
                  value: /volume1/服务/K8s-NFS          # PVs provisioned dynamically are created under this directory; it must be writable (777)
          volumes:
            - name: nfs-client-root                    # volume name
              nfs:
                server: 192.168.1.254                  # NFS server address
                path: /volume1/服务/K8s-NFS             # exported NFS directory; it must be writable (777)
    ---
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfsnas
      annotations:
        storageclass.kubernetes.io/is-default-class: "true"   # make this the default StorageClass
    provisioner: nfsnas                   # must match PROVISIONER_NAME in the Deployment
    parameters:
      archiveOnDelete: "false"            # "true" archives the data (renames the directory) when the PVC is deleted
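To check that the StorageClass above provisions volumes, a small test claim can be created against it. The following is only a sketch: the helper name `print_test_pvc`, the claim name test-claim, and the 1Gi size are assumptions for illustration. The nfsnas class name comes from the manifest above.

```shell
# print_test_pvc [NAME] [SIZE]
# Prints a PersistentVolumeClaim manifest that requests storage from the
# nfsnas StorageClass defined above.
print_test_pvc() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${1:-test-claim}
spec:
  storageClassName: nfsnas
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${2:-1Gi}
EOF
}

# Example (on k8s-master, after the provisioner pod is running):
#   print_test_pvc | kubectl apply -f -
#   kubectl get pvc test-claim      # STATUS should become Bound
#   kubectl delete pvc test-claim
```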