Running YOLO video detection in Qt with C++ (May 2025)

Environment

Qt: 5.15.2

Ubuntu: 20.04

OpenCV: 4.6.0

I. Install YOLOv8

Download it to your local machine:

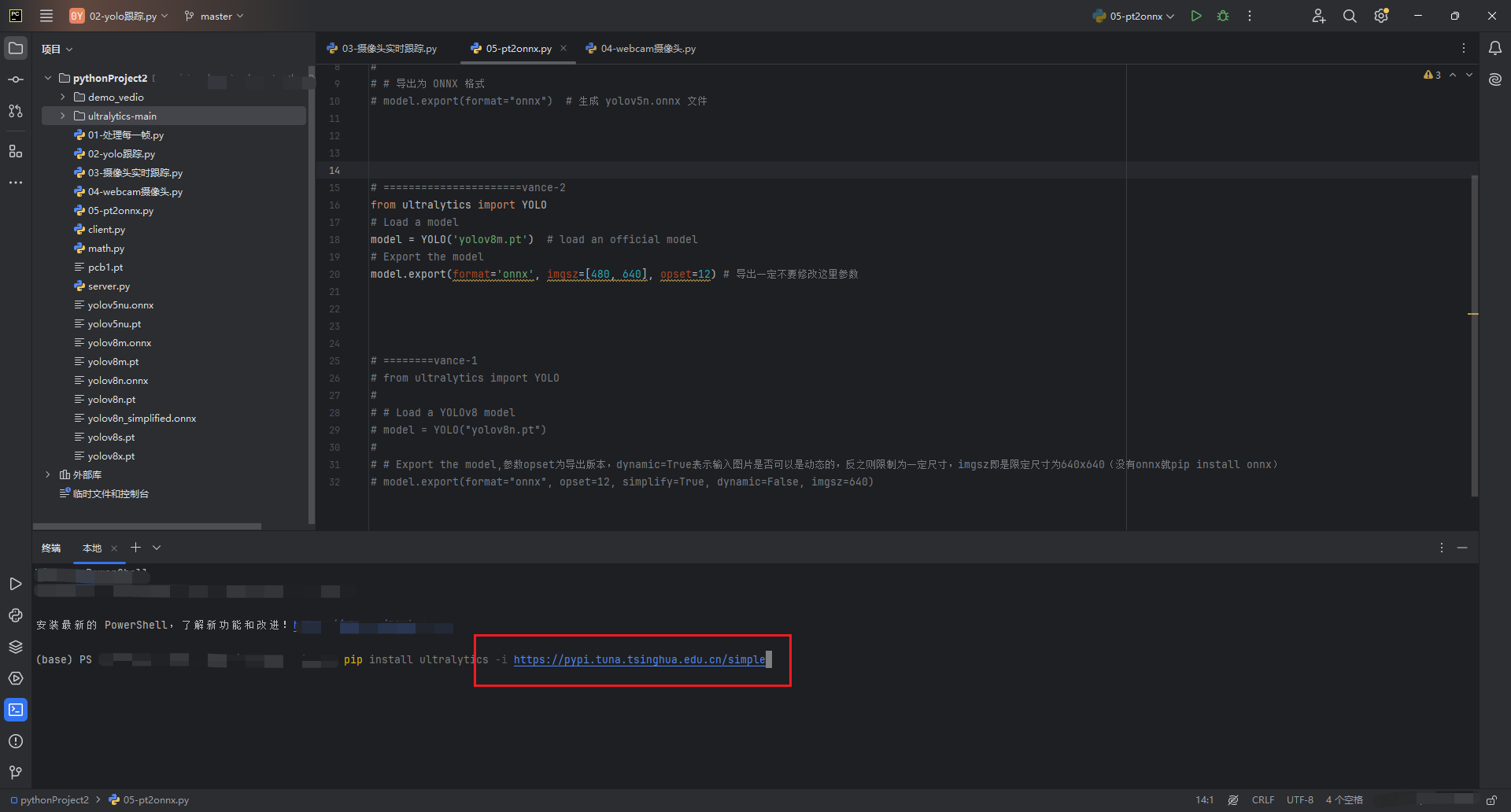

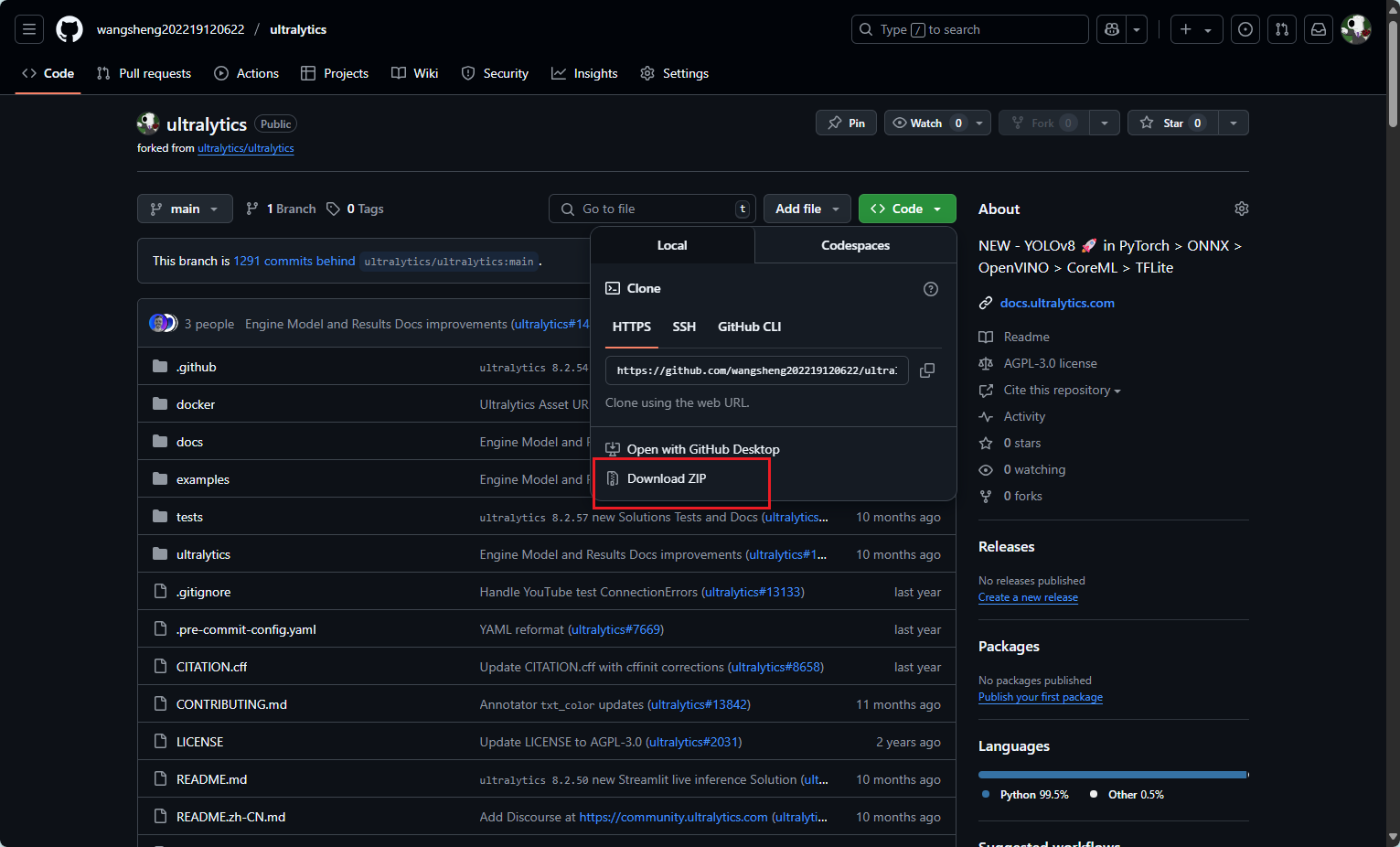

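Install it with pip (this command uses the Tsinghua PyPI mirror):

```bash
pip install ultralytics -i https://pypi.tuna.tsinghua.edu.cn/simple
```

or download it from GitHub:

https://github.com/wangsheng202219120622/ultralytics

II. Export to ONNX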

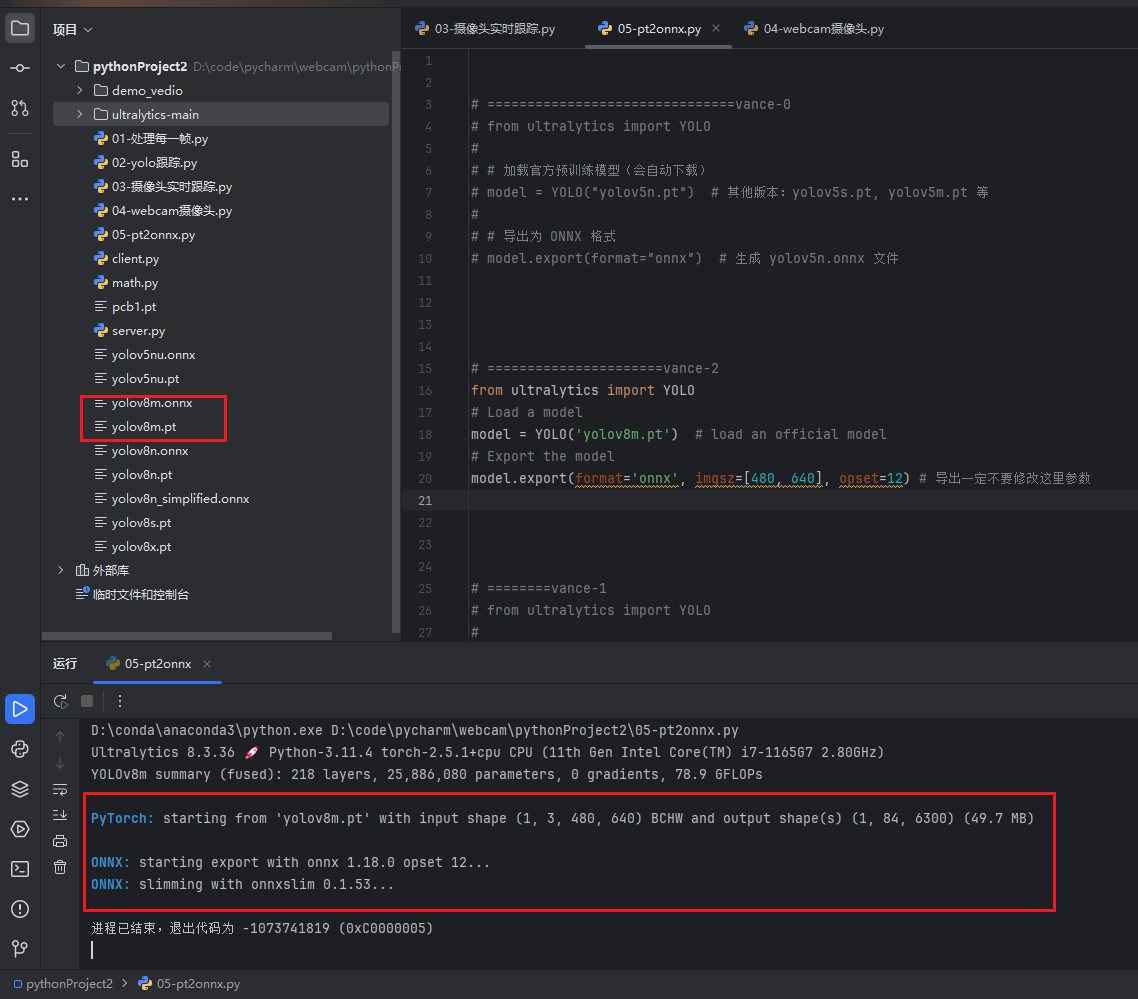

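When you export the ONNX model, note the following. If you trained your own model, replace yolov8n.pt in the code below with your model, for example best.pt. If you use the default model from the code and it is not on your machine yet, it is downloaded automatically. Create the script below and place it in the YOLOv8 root directory.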

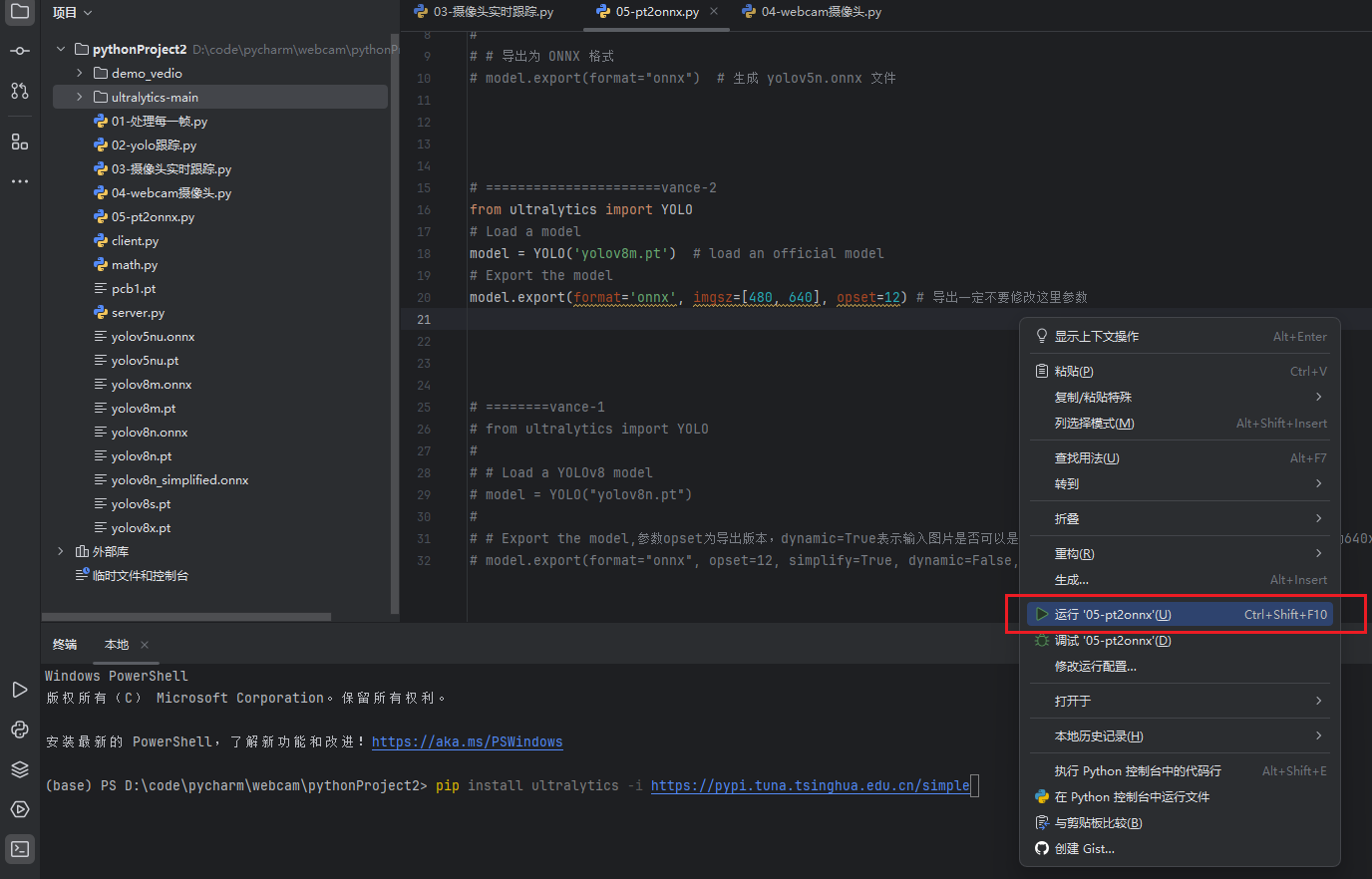

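The script, pt2onnx.py:

```python
from ultralytics import YOLO

# Load a model
model = YOLO('yolov8n.pt')  # load an official model

# Export the model
# imgsz is [height, width]; do not change these export parameters
model.export(format='onnx', imgsz=[480, 640], opset=12)
```

Run it from your IDE (right-click the file and choose Run).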

Running the script generates the .onnx model file.

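III. Running ONNX inference with OpenCV

Reference article: [OpenCV] Setting up an OpenCV development environment on Ubuntu (CSDN blog)

(1) Installing OpenCV in the virtual machine

1. Install dependencies

First install the required build tools and libraries. Open a terminal in the VM: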

```bash
sudo apt-get update                          # refresh the package index
sudo apt-get install build-essential         # compiler toolchain
sudo apt-get install cmake pkg-config git    # build tools
sudo apt-get install libgtk2.0-dev libavcodec-dev libavformat-dev libswscale-dev \
    python3-dev python3-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev \
    libdc1394-22-dev libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev
```

On Ubuntu 20.04, libjasper-dev is not available in the default repositories, so it is not installed here. Likewise, the Python headers and NumPy come from the Python 3 packages.

2. Download OpenCV

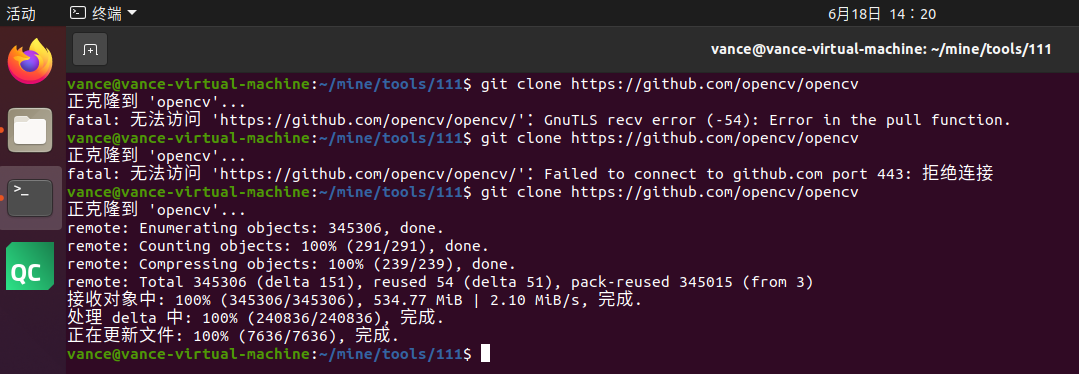

Download the OpenCV source with Git (if Git is not installed, run sudo apt install -y git first):

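```bash
git clone -b 4.6.0 https://github.com/opencv/opencv
```

The -b 4.6.0 option checks out the 4.6.0 release used in this article. Without it you get the latest development code. The clone may fail because of network problems. If it does, retry a few times, or see the CSDN post "Downloading GitHub projects with git in a virtual machine".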

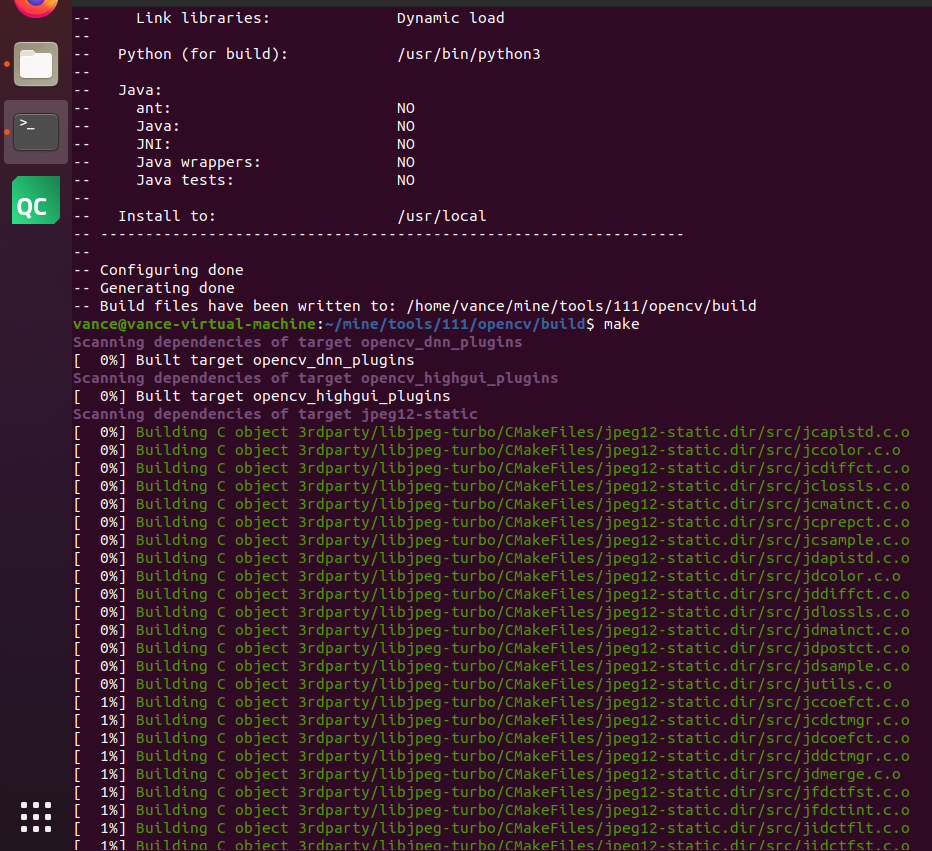

3. Build and install OpenCV

Create a build directory and configure CMake:

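```bash
cd opencv
mkdir build
cd build
cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local ..
make              # make -j$(nproc) uses all CPU cores and is much faster
sudo make install
```

Wait for the configure, build and install steps to finish. Optionally, add -D OPENCV_GENERATE_PKGCONFIG=ON to the cmake command. OpenCV 4 then installs an opencv4.pc file, which you can query with pkg-config --modversion opencv4.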

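4. Configure the environment and verify the installation

1) Add the library path

Open a terminal in the VM:

```bash
sudo vi /etc/ld.so.conf.d/opencv.conf
```

Enter the following line, then save and quit:

```
/usr/local/lib
```

Then refresh the dynamic linker cache so the new path takes effect:

```bash
sudo ldconfig
```

2) Add environment variables

```bash
sudo vi /etc/profile
```

Append the following at the end of the file:

```bash
export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig:$PKG_CONFIG_PATH
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib
```

3) Reload the environment variables

```bash
source /etc/profile
```

source is a shell builtin, so it cannot be run through sudo. It only affects the current terminal.

4) Reboot, then open a terminal in the VM

Run: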

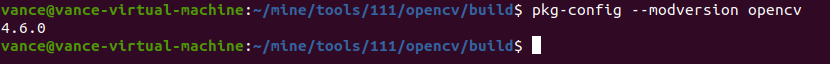

```bash
pkg-config --modversion opencv
```

If it prints the version number without an error, the installation succeeded.

Note 1: pkg-config may report that the opencv package cannot be found, with this error:

```
Package opencv was not found in the pkg-config search path.
Perhaps you should add the directory containing `opencv.pc'
to the PKG_CONFIG_PATH environment variable
No package 'opencv' found
```

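This error means the opencv.pc configuration file is missing. To fix it, create the file and add its directory to the PKG_CONFIG_PATH environment variable, as follows: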

1. First create opencv.pc (pay attention to its location):

```bash
cd /usr/local/lib
sudo mkdir -p pkgconfig
cd pkgconfig
sudo touch opencv.pc
```

2. Then add the following to opencv.pc. These values must match the paths of your own OpenCV installation:

```
prefix=/usr/local
exec_prefix=${prefix}
includedir=${prefix}/include
libdir=${exec_prefix}/lib

Name: opencv
Description: The opencv library
Version: 4.6.0
Cflags: -I${includedir}/opencv4
Libs: -L${libdir} -lopencv_stitching -lopencv_objdetect -lopencv_calib3d -lopencv_features2d -lopencv_highgui -lopencv_videoio -lopencv_imgcodecs -lopencv_video -lopencv_photo -lopencv_ml -lopencv_imgproc -lopencv_flann -lopencv_dnn -lopencv_core
```

The shape, superres and videostab modules belong to opencv_contrib in OpenCV 4. A default build does not produce them, so they are not listed.

3. Save and quit. Then, in a VM terminal, add the directory to the environment variable:

```bash
export PKG_CONFIG_PATH=/usr/local/lib/pkgconfig
```

opencv.pc is now set up. Run pkg-config --modversion opencv again to check.

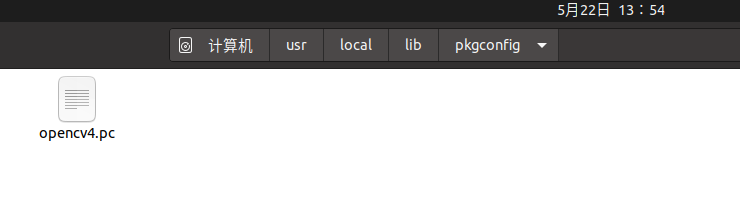

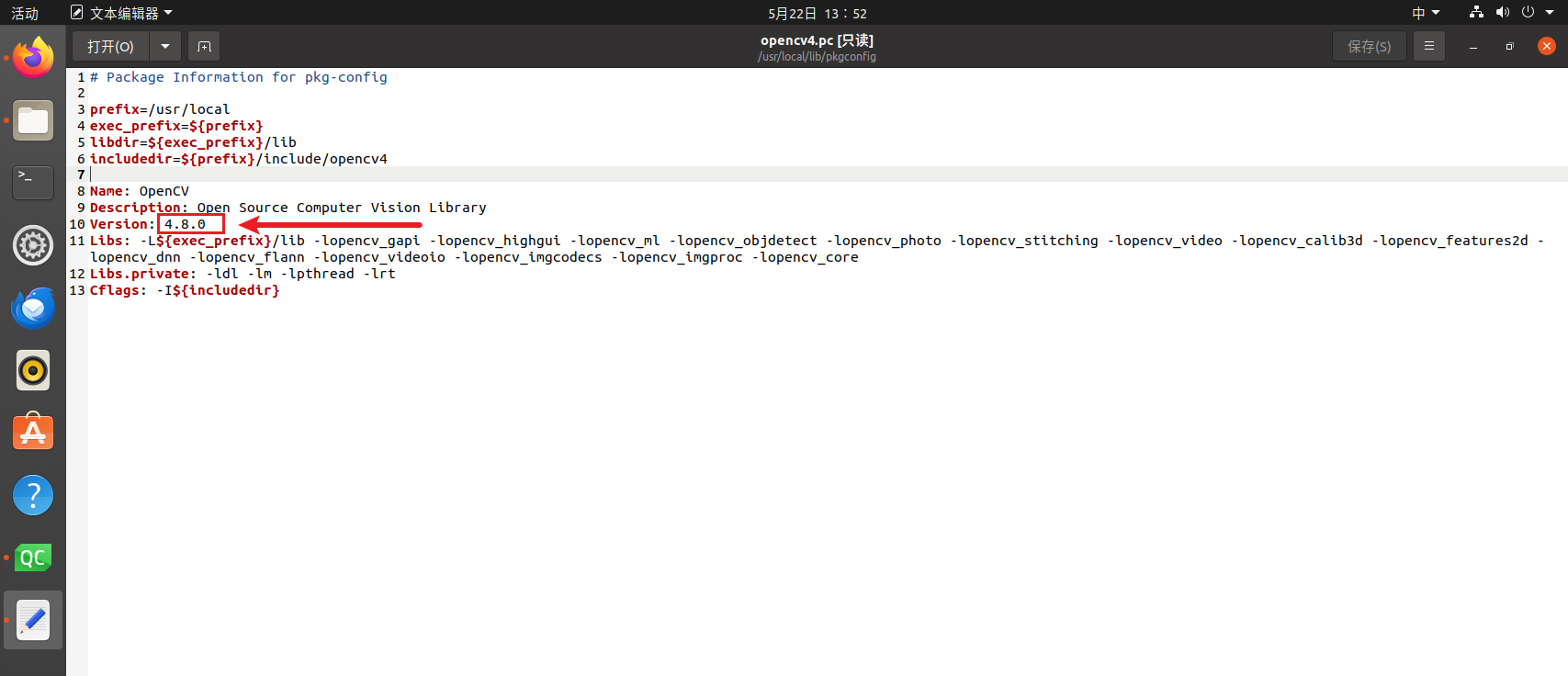

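Note 2 (readers of this article can skip this one): you may have installed opencv4 or another version, and pkg-config still reports the old version although the files have been updated. In that case the .pc file (for example opencv4.pc) was probably not updated. The .pc files are usually in /usr/local/lib/pkgconfig/ or /usr/lib/pkgconfig/.

Open the file with sudo and edit it.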

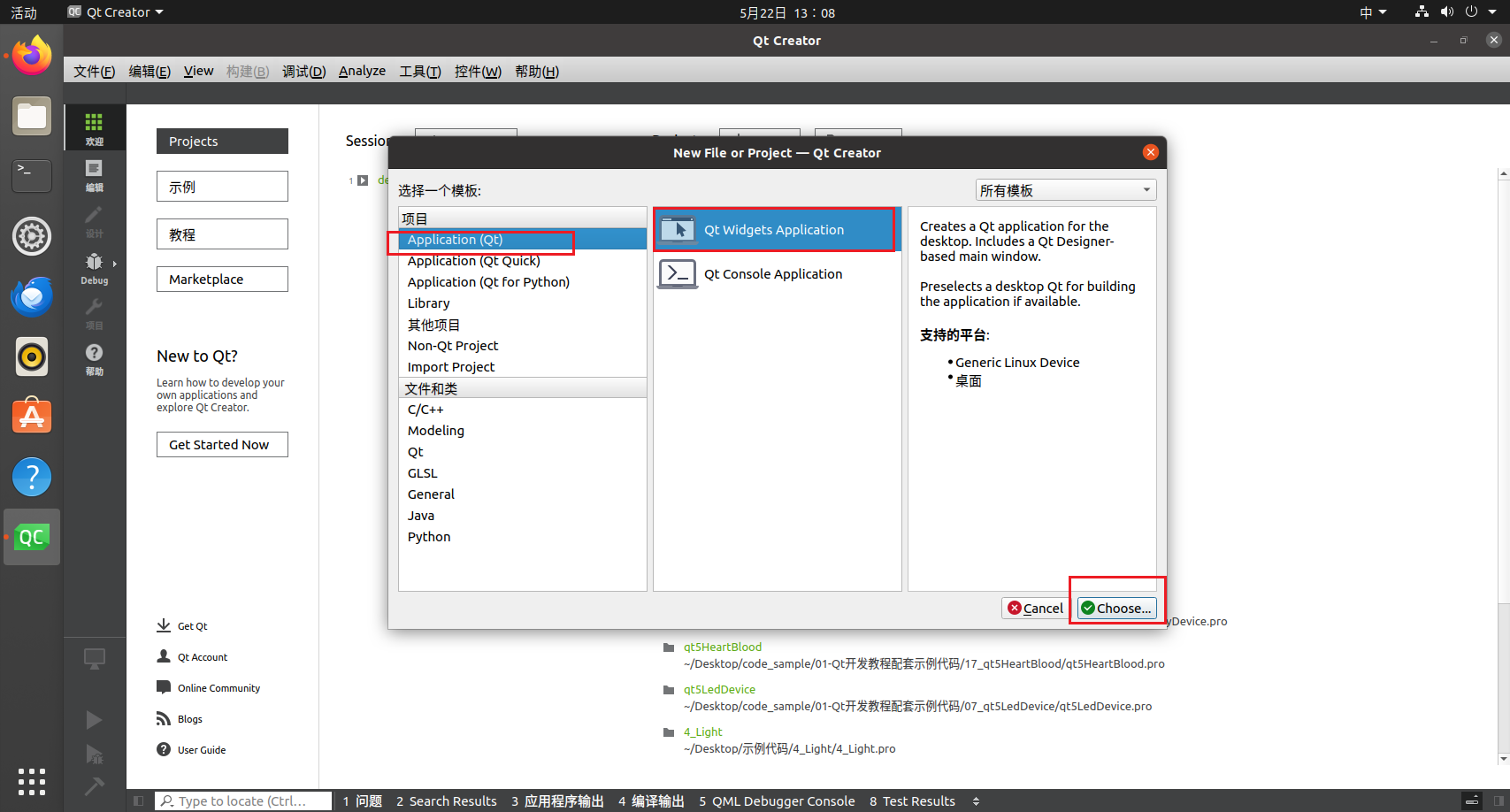

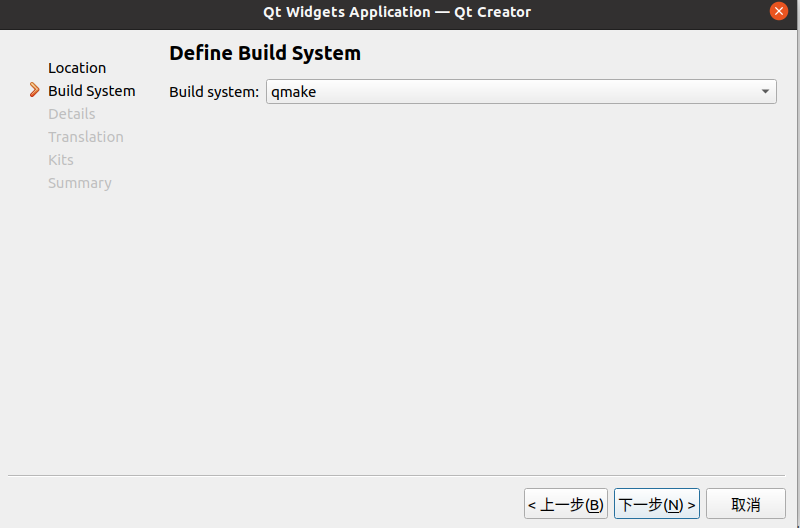

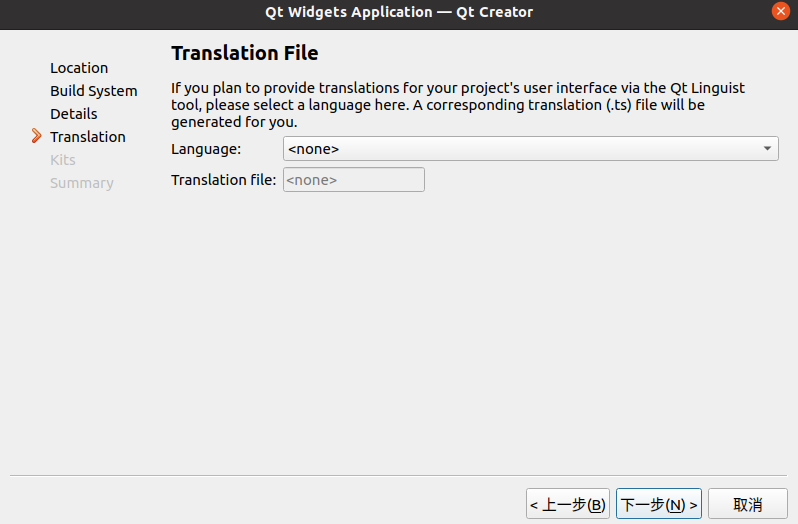

(2) Create the Qt project

To download and install Qt, see: "Installing Qt 5.15 on Ubuntu 20.04 (latest, very detailed)" (CSDN blog)

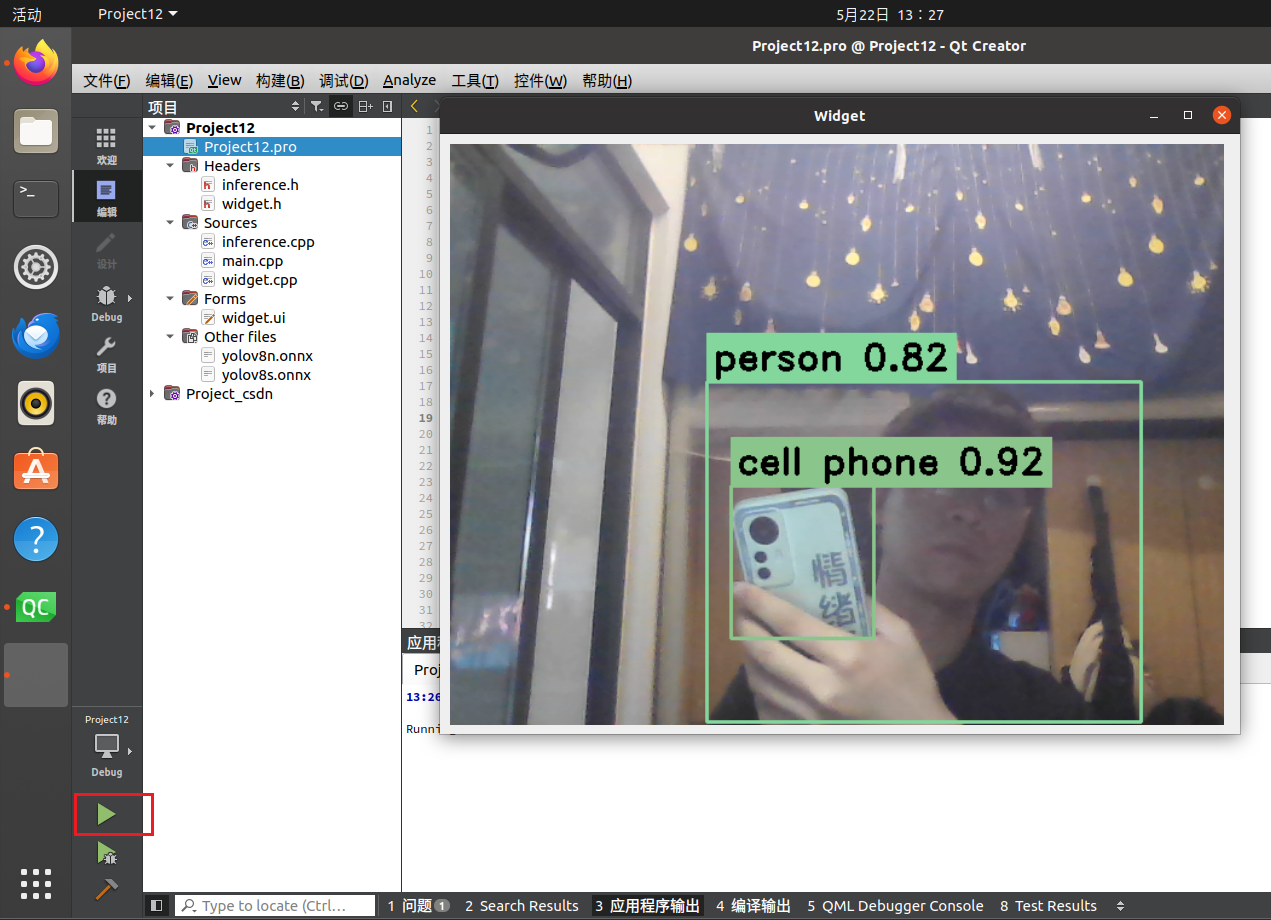

This setup uses OpenCV 4.6.0 and works on both Linux and Windows; the steps below use Linux. Note: running the code requires the ONNX model exported above.

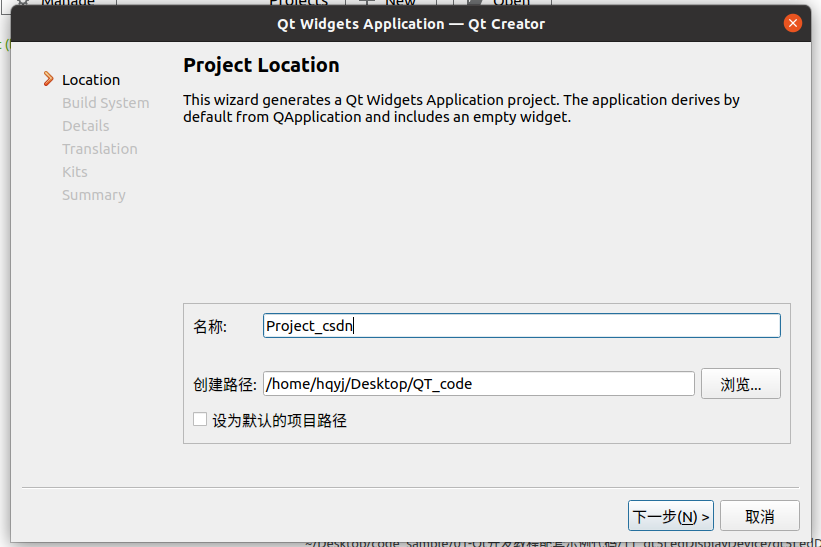

Open Qt Creator in the VM and create a new project.

Any project name will do.

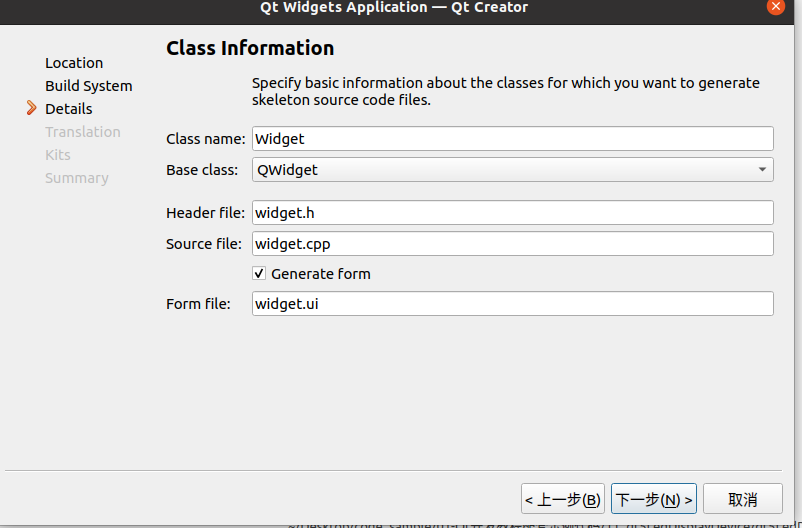

For Base class, I chose QWidget.

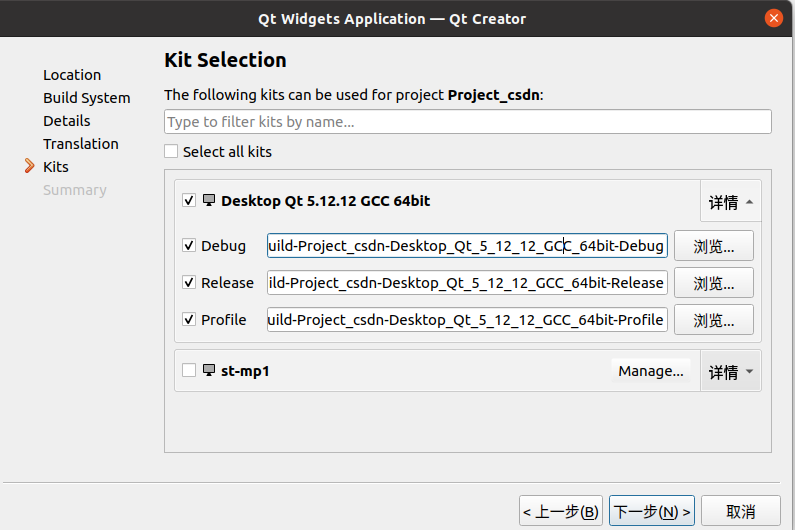

Select a kit. Many online tutorials cover kit configuration, so it is not described here.

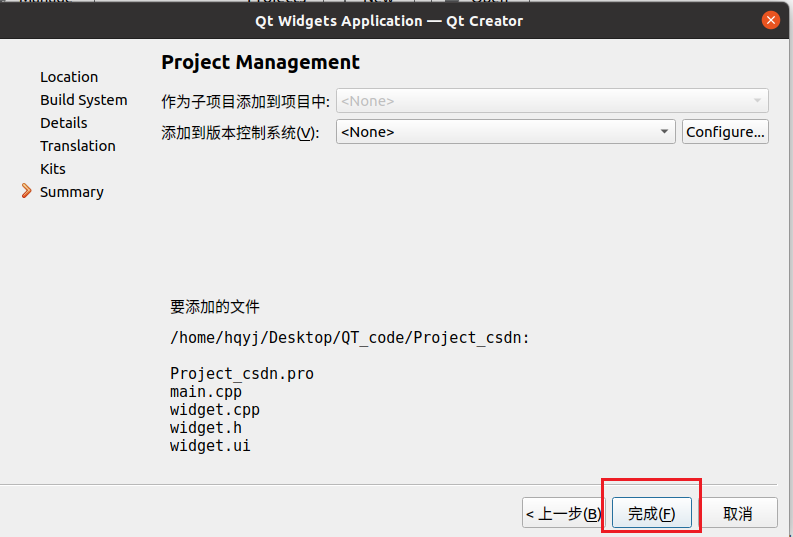

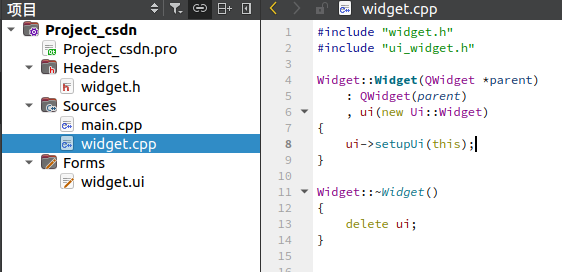

Finish creating the project.

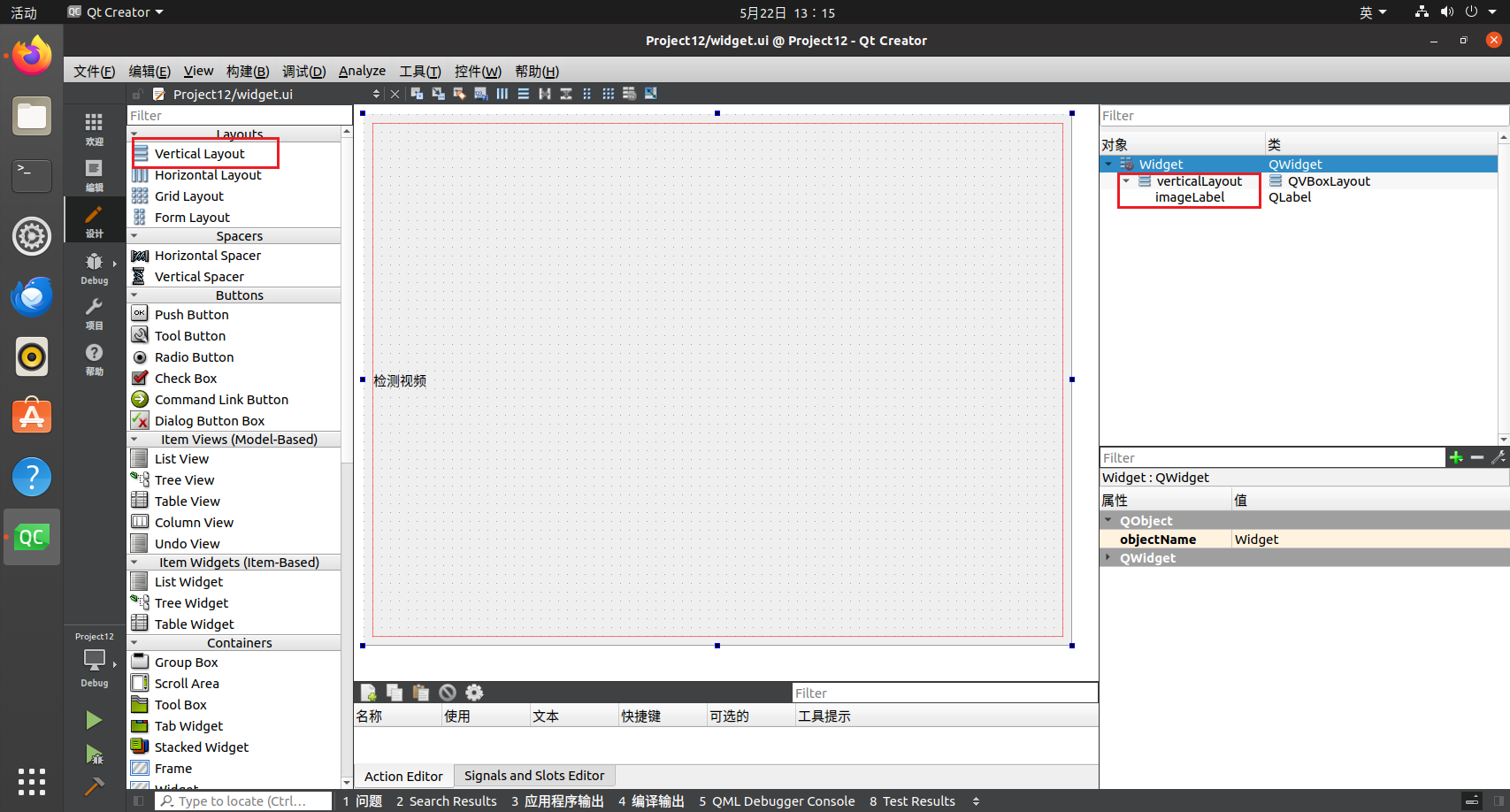

Open widget.ui, add a layout and a label, and rename the label to imageLabel (the code below accesses it as ui->imageLabel).

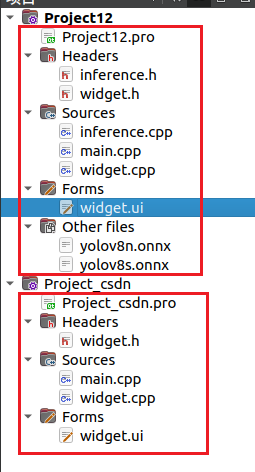

Add or modify the following files in the new project.

The code is as follows.

//inference.h

```cpp
#ifndef INFERENCE_H
#define INFERENCE_H

// Cpp native
#include <fstream>
#include <iostream>
#include <random>
#include <string>
#include <vector>

// OpenCV / DNN / Inference
#include <opencv2/imgproc.hpp>
#include <opencv2/opencv.hpp>
#include <opencv2/dnn.hpp>

struct Detection
{
    int class_id{0};
    std::string className{};
    float confidence{0.0};
    cv::Scalar color{};
    cv::Rect box{};
};

class Inference
{
public:
    Inference(const std::string &onnxModelPath, const cv::Size &modelInputShape = {640, 640},
              const std::string &classesTxtFile = "", const bool &runWithCuda = true);
    std::vector<Detection> runInference(const cv::Mat &input);

private:
    void loadClassesFromFile();
    void loadOnnxNetwork();
    cv::Mat formatToSquare(const cv::Mat &source);

    std::string modelPath{};
    std::string classesPath{};
    bool cudaEnabled{};

    // COCO class names; replace them if you use your own model (e.g. best.pt)
    std::vector<std::string> classes{"person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
        "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
        "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle",
        "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange",
        "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch", "potted plant", "bed",
        "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave", "oven",
        "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush"};

    cv::Size2f modelShape{};

    float modelConfidenceThreshold {0.25};
    float modelScoreThreshold {0.45};
    float modelNMSThreshold {0.50};

    bool letterBoxForSquare = true;

    cv::dnn::Net net;
};

#endif // INFERENCE_H
```

//inference.cpp

```cpp
#include "inference.h"

// Initialize the YOLO model's inference environment
Inference::Inference(const std::string &onnxModelPath, const cv::Size &modelInputShape,
                     const std::string &classesTxtFile, const bool &runWithCuda)
{
    modelPath = onnxModelPath;
    modelShape = modelInputShape;
    classesPath = classesTxtFile;
    cudaEnabled = runWithCuda;

    loadOnnxNetwork();
    // loadClassesFromFile(); The classes are hard-coded for this example
}

// Run YOLO inference on the input image and return the detections
std::vector<Detection> Inference::runInference(const cv::Mat &input)
{
    cv::Mat modelInput = input;
    if (letterBoxForSquare && modelShape.width == modelShape.height)
        modelInput = formatToSquare(modelInput);

    // Scale pixels to [0, 1], resize to the model input size, swap BGR -> RGB
    cv::Mat blob;
    cv::dnn::blobFromImage(modelInput, blob, 1.0 / 255.0, modelShape, cv::Scalar(), true, false);
    net.setInput(blob);

    std::vector<cv::Mat> outputs;
    net.forward(outputs, net.getUnconnectedOutLayersNames());

    int rows = outputs[0].size[1];
    int dimensions = outputs[0].size[2];

    bool yolov8 = false;
    // yolov5 has an output of shape (batchSize, 25200, 85) (Num classes + box[x,y,w,h] + confidence[c])
    // yolov8 has an output of shape (batchSize, 84, 8400) (Num classes + box[x,y,w,h])
    if (dimensions > rows) // Check if the shape[2] is more than shape[1] (yolov8)
    {
        yolov8 = true;
        rows = outputs[0].size[2];
        dimensions = outputs[0].size[1];

        outputs[0] = outputs[0].reshape(1, dimensions);
        cv::transpose(outputs[0], outputs[0]); // now one row per candidate box
    }
    float *data = (float *)outputs[0].data;

    // Factors that map model-space coordinates back to the input frame
    float x_factor = modelInput.cols / modelShape.width;
    float y_factor = modelInput.rows / modelShape.height;

    std::vector<int> class_ids;
    std::vector<float> confidences;
    std::vector<cv::Rect> boxes;

    for (int i = 0; i < rows; ++i)
    {
        if (yolov8)
        {
            float *classes_scores = data + 4;

            cv::Mat scores(1, static_cast<int>(classes.size()), CV_32FC1, classes_scores);
            cv::Point class_id;
            double maxClassScore;
            minMaxLoc(scores, 0, &maxClassScore, 0, &class_id);

            if (maxClassScore > modelScoreThreshold)
            {
                confidences.push_back(maxClassScore);
                class_ids.push_back(class_id.x);

                float x = data[0];
                float y = data[1];
                float w = data[2];
                float h = data[3];

                int left = int((x - 0.5 * w) * x_factor);
                int top = int((y - 0.5 * h) * y_factor);
                int width = int(w * x_factor);
                int height = int(h * y_factor);

                boxes.push_back(cv::Rect(left, top, width, height));
            }
        }
        else // yolov5
        {
            float confidence = data[4];
            if (confidence >= modelConfidenceThreshold)
            {
                float *classes_scores = data + 5;

                cv::Mat scores(1, static_cast<int>(classes.size()), CV_32FC1, classes_scores);
                cv::Point class_id;
                double max_class_score;
                minMaxLoc(scores, 0, &max_class_score, 0, &class_id);

                if (max_class_score > modelScoreThreshold)
                {
                    confidences.push_back(confidence);
                    class_ids.push_back(class_id.x);

                    float x = data[0];
                    float y = data[1];
                    float w = data[2];
                    float h = data[3];

                    int left = int((x - 0.5 * w) * x_factor);
                    int top = int((y - 0.5 * h) * y_factor);
                    int width = int(w * x_factor);
                    int height = int(h * y_factor);

                    boxes.push_back(cv::Rect(left, top, width, height));
                }
            }
        }
        data += dimensions;
    }

    // Non-maximum suppression removes overlapping boxes
    std::vector<int> nms_result;
    cv::dnn::NMSBoxes(boxes, confidences, modelScoreThreshold, modelNMSThreshold, nms_result);

    // Random generator for the box colors, created once per call
    std::random_device rd;
    std::mt19937 gen(rd());
    std::uniform_int_distribution<int> dis(100, 255);

    std::vector<Detection> detections{};
    for (size_t i = 0; i < nms_result.size(); ++i)
    {
        int idx = nms_result[i];

        Detection result;
        result.class_id = class_ids[idx];
        result.confidence = confidences[idx];
        result.color = cv::Scalar(dis(gen), dis(gen), dis(gen));
        result.className = classes[result.class_id];
        result.box = boxes[idx];

        detections.push_back(result);
    }

    return detections;
}

// Load class names (one per line) from the text file classesTxtFile
void Inference::loadClassesFromFile()
{
    std::ifstream inputFile(classesPath);
    if (inputFile.is_open())
    {
        classes.clear(); // replace the built-in COCO list instead of appending to it
        std::string classLine;
        while (std::getline(inputFile, classLine))
            classes.push_back(classLine);
        inputFile.close();
    }
}

// Load the YOLO ONNX model and select the CUDA or CPU backend
void Inference::loadOnnxNetwork()
{
    net = cv::dnn::readNetFromONNX(modelPath);
    if (cudaEnabled)
    {
        std::cout << "\nRunning on CUDA" << std::endl;
        net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
        net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);
    }
    else
    {
        std::cout << "\nRunning on CPU" << std::endl;
        net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);
        net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);
    }
}

// Pad the input image to a square (image at the top-left, black padding)
cv::Mat Inference::formatToSquare(const cv::Mat &source)
{
    int col = source.cols;
    int row = source.rows;
    int _max = MAX(col, row);
    cv::Mat result = cv::Mat::zeros(_max, _max, CV_8UC3);
    source.copyTo(result(cv::Rect(0, 0, col, row)));
    return result;
}
```

//widget.h

```cpp
#ifndef WIDGET_H
#define WIDGET_H

#include <QWidget>
#include <QTimer>
#include <opencv2/opencv.hpp>
#include "inference.h"

QT_BEGIN_NAMESPACE
namespace Ui { class Widget; }
QT_END_NAMESPACE

class Widget : public QWidget
{
    Q_OBJECT

public:
    Widget(QWidget *parent = nullptr);
    ~Widget();

private slots:
    void processFrame();

private:
    Ui::Widget *ui;
    Inference *inference = nullptr;
    QTimer *timer = nullptr;      // stays nullptr if the camera fails to open
    cv::VideoCapture capture;     // camera capture object
    cv::Mat currentFrame;

    void updateImageDisplay(const cv::Mat &mat);
};

#endif // WIDGET_H
```

//widget.cpp

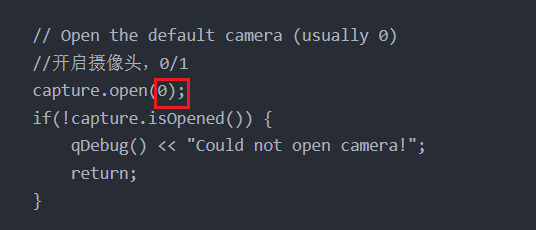

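```cpp
#include "widget.h"
#include "ui_widget.h"
#include <QDebug>
#include <QImage>
#include <QPixmap>
#include <string>

Widget::Widget(QWidget *parent)
    : QWidget(parent)
    , ui(new Ui::Widget)
{
    ui->setupUi(this);

    // Initialize the inference engine
    bool runOnGPU = false;

    // Weights: yolov8n.onnx, yolov8s.onnx or yolov8m.onnx
    // cv::Size(640, 480) is width x height and matches imgsz=[480, 640] used at export
    inference = new Inference("/home/hqyj/Desktop/QT_code/Project12/yolov8m.onnx",
                              cv::Size(640, 480), "classes.txt", runOnGPU);

    // Open the default camera (usually 0; try 1 if that fails)
    capture.open(0);
    if (!capture.isOpened())
    {
        qDebug() << "Cannot open camera";
        return;
    }

    // Grab and process a frame every 33 ms
    timer = new QTimer(this);
    connect(timer, &QTimer::timeout, this, &Widget::processFrame);
    timer->start(33); // ~30 fps
}

Widget::~Widget()
{
    delete ui;
    delete inference;
    delete timer;       // safe even if it is nullptr
    capture.release();  // release the camera
}

//================================ functions ================================

// Frame processing, called by the timer
void Widget::processFrame()
{
    // Capture a new frame from the camera
    capture >> currentFrame;
    if (currentFrame.empty())
        return;

    // Run inference
    std::vector<Detection> output = inference->runInference(currentFrame);

    // Draw detections on a copy of the frame
    cv::Mat displayFrame = currentFrame.clone();
    int detections = static_cast<int>(output.size());
    for (int i = 0; i < detections; ++i)
    {
        const Detection &detection = output[i];
        const cv::Rect &box = detection.box;
        const cv::Scalar &color = detection.color;

        // Detection box
        cv::rectangle(displayFrame, box, color, 2);

        // Label with class name and confidence
        std::string classString = detection.className + ' ' + std::to_string(detection.confidence).substr(0, 4);
        cv::Size textSize = cv::getTextSize(classString, cv::FONT_HERSHEY_DUPLEX, 1, 2, nullptr);
        cv::Rect textBox(box.x, box.y - 40, textSize.width + 10, textSize.height + 20);
        cv::rectangle(displayFrame, textBox, color, cv::FILLED);
        cv::putText(displayFrame, classString, cv::Point(box.x + 5, box.y - 10),
                    cv::FONT_HERSHEY_DUPLEX, 1, cv::Scalar(0, 0, 0), 2, cv::LINE_AA);
    }

    updateImageDisplay(displayFrame);
}

// Convert a BGR cv::Mat to a QPixmap and show it in imageLabel
void Widget::updateImageDisplay(const cv::Mat &mat)
{
    cv::Mat rgb;
    cv::cvtColor(mat, rgb, cv::COLOR_BGR2RGB); // OpenCV is BGR, QImage expects RGB
    QImage qimg(rgb.data, rgb.cols, rgb.rows, static_cast<int>(rgb.step), QImage::Format_RGB888);
    // fromImage copies the pixels, so the local rgb buffer may be released afterwards
    QPixmap pixmap = QPixmap::fromImage(qimg).scaled(ui->imageLabel->size(), Qt::KeepAspectRatio, Qt::SmoothTransformation);
    ui->imageLabel->setPixmap(pixmap);
}
```

Change the model path to your own.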

//main.cpp

```cpp
#include "widget.h"
#include <QApplication>

int main(int argc, char *argv[])
{
    QApplication a(argc, argv);
    Widget w;
    w.show();
    return a.exec();
}
```

//.pro

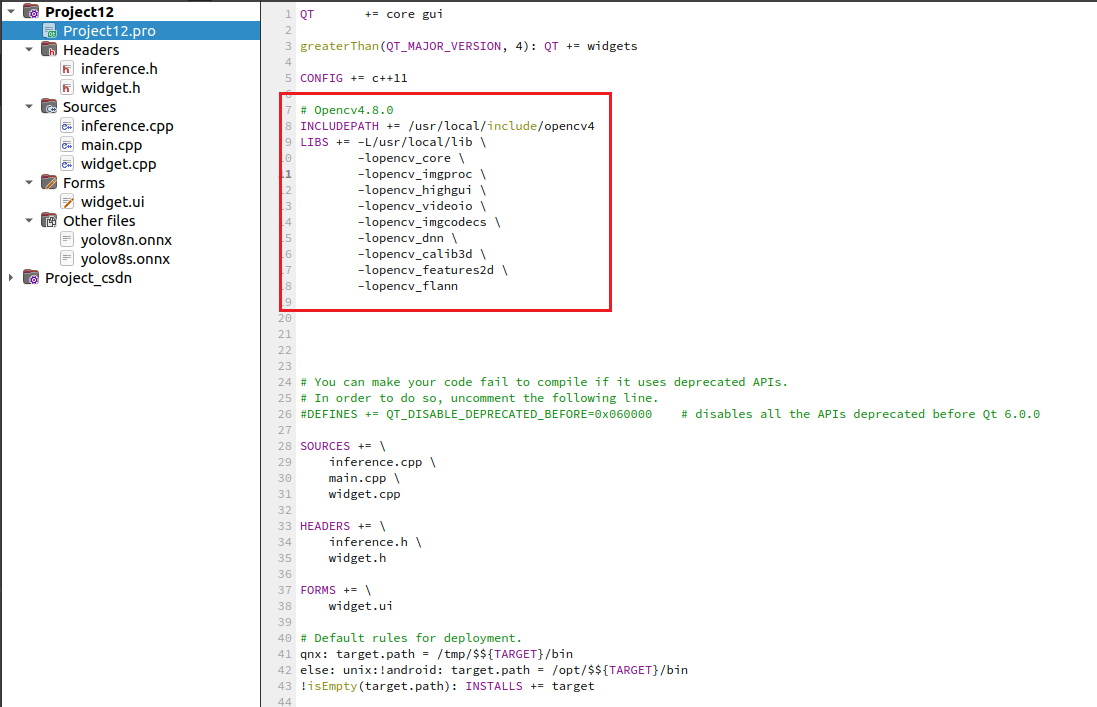

Add the OpenCV paths to the .pro file, where include/opencv4 and lib are the locations of your OpenCV installation.

Check that these directories actually contain the opencv4 headers and the OpenCV library files.

```
# OpenCV 4.6.0
INCLUDEPATH += /usr/local/include/opencv4
LIBS += -L/usr/local/lib \
        -lopencv_core \
        -lopencv_imgproc \
        -lopencv_highgui \
        -lopencv_videoio \
        -lopencv_imgcodecs \
        -lopencv_dnn \
        -lopencv_calib3d \
        -lopencv_features2d \
        -lopencv_flann
```

Once this is configured, click Run.

That's it!

//================================================================//

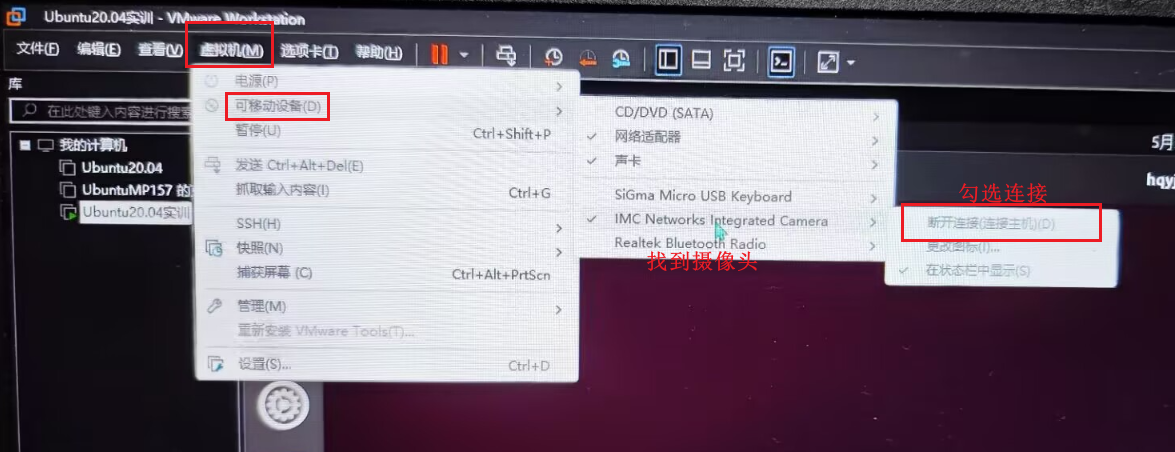

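If the camera does not open:

1. The device index may be wrong. In widget.cpp, try changing it to 1.

2. The camera may not be connected to the virtual machine (its Connect option is not checked in the VM's device menu).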