Kafka KRaft + SSL + SASL/PLAIN Deployment Guide

This guide describes how to deploy Kafka 4.x on Windows using KRaft mode, SSL encryption, and SASL/PLAIN authentication. Related repository: stevensu1/test-kafka.

1. Prerequisites

- JDK 17 or later

- Kafka 4.x (this guide uses kafka_2.13-4.0.0)

2. Directory Layout

D:\kafka_2.13-4.0.0\
├── bin\windows\
├── config\
│   ├── server.properties
│   ├── ssl.properties
│   ├── jaas.conf
│   ├── log4j2.properties
│   └── ssl\
│       ├── kafka.server.keystore.jks
│       └── kafka.server.truststore.jks
└── logs\

3. Configuration Files

3.1 server.properties

# Node roles
process.roles=broker,controller
node.id=1
# Listeners
listeners=PLAINTEXT://:9092,CONTROLLER://:9093,SSL://:9094,SASL_SSL://:9095
advertised.listeners=SSL://localhost:9094,SASL_SSL://localhost:9095
controller.listener.names=CONTROLLER
inter.broker.listener.name=SSL
listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_SSL:SASL_SSL
# Controller quorum
controller.quorum.voters=1@localhost:9093
# Log (data) directory
log.dirs=D:/kafka_2.13-4.0.0/logs
# Security
sasl.enabled.mechanisms=PLAIN
sasl.mechanism.inter.broker.protocol=PLAIN
authorizer.class.name=org.apache.kafka.metadata.authorizer.StandardAuthorizer
allow.everyone.if.no.acl.found=true
# SSL
ssl.keystore.location=config/ssl/kafka.server.keystore.jks
ssl.keystore.password=kafka123
ssl.key.password=kafka123
ssl.truststore.location=config/ssl/kafka.server.truststore.jks
ssl.truststore.password=kafka123
ssl.client.auth=required
ssl.enabled.protocols=TLSv1.2,TLSv1.3
ssl.endpoint.identification.algorithm=HTTPS
ssl.secure.random.implementation=SHA1PRNG
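The listener settings above have to agree with one another: every name in listeners needs an entry in listener.security.protocol.map, advertised.listeners may only use names declared in listeners, every name in controller.listener.names must be declared in listeners, and inter.broker.listener.name must be one of the advertised listeners. The Python sketch below checks those four rules before you start the broker. It is a stdlib-only helper, not part of Kafka; the function names are hypothetical and the properties parser is deliberately minimal (no line continuations, no `:` separators, no escapes), so it does not replace Kafka's own startup validation.

```python
"""Minimal consistency check for the listener settings of a KRaft server.properties."""


def parse_properties(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and '#'/'!' comments.

    Deliberately minimal: no line continuations, no ':' separators, no escapes.
    """
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def names(value: str) -> list[str]:
    """'SSL://localhost:9094,SASL_SSL://:9095' -> ['SSL', 'SASL_SSL'] (plain 'A,B' lists work too)."""
    return [item.split("://", 1)[0].strip() for item in value.split(",") if item.strip()]


def check_listeners(props: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the four rules hold."""
    listeners = names(props.get("listeners", ""))
    advertised = names(props.get("advertised.listeners", ""))
    controllers = names(props.get("controller.listener.names", ""))
    protocol_map: dict[str, str] = {}
    for pair in props.get("listener.security.protocol.map", "").split(","):
        name, sep, protocol = pair.partition(":")
        if sep:
            protocol_map[name.strip()] = protocol.strip()
    inter_broker = props.get("inter.broker.listener.name", "").strip()

    problems = []
    for name in listeners:
        if name not in protocol_map:
            problems.append(f"{name} has no entry in listener.security.protocol.map")
    for name in advertised:
        if name not in listeners:
            problems.append(f"advertised listener {name} is not declared in listeners")
    for name in controllers:
        if name not in listeners:
            problems.append(f"controller listener {name} is not declared in listeners")
    if inter_broker and inter_broker not in advertised:
        problems.append(f"inter.broker.listener.name={inter_broker} is not in advertised.listeners")
    return problems
```

Usage: `check_listeners(parse_properties(Path(r"config\server.properties").read_text(encoding="utf-8")))` (with `from pathlib import Path`) should return an empty list for the configuration above.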

3.2 ssl.properties

# SSL settings
ssl.keystore.location=config/ssl/kafka.server.keystore.jks
ssl.keystore.password=kafka123
ssl.key.password=kafka123
ssl.truststore.location=config/ssl/kafka.server.truststore.jks
ssl.truststore.password=kafka123
ssl.client.auth=required
ssl.enabled.protocols=TLSv1.2,TLSv1.3
ssl.endpoint.identification.algorithm=HTTPS
ssl.secure.random.implementation=SHA1PRNG

3.3 jaas.conf

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="admin-secret"
    user_admin="admin-secret"
    user_alice="alice-secret";
};
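In the KafkaServer section, each `user_<name>="<password>"` option defines one SASL/PLAIN account that clients can log in with, while `username`/`password` are the credentials the broker itself would use for SASL inter-broker connections (in this setup inter-broker traffic goes over the SSL listener, so those two are not used for authentication). The sketch below generates the section from a dictionary of users; `kafka_server_jaas` is a hypothetical helper, and it assumes plain values without double quotes or backslashes rather than implementing JAAS escaping.

```python
"""Generate the KafkaServer section of jaas.conf for SASL/PLAIN."""


def kafka_server_jaas(broker_user: str, broker_password: str, users: dict[str, str]) -> str:
    """users maps SASL/PLAIN user names to passwords; each becomes a user_<name> option."""
    options = [("username", broker_user), ("password", broker_password)]
    options += [(f"user_{name}", password) for name, password in users.items()]
    for key, value in options:
        # Keep the sketch simple: refuse values that would need escaping inside "..."
        if '"' in value or "\\" in value:
            raise ValueError(f"{key}: quotes and backslashes are not supported here")
    body = "\n".join(f'    {key}="{value}"' for key, value in options)
    # The last option is terminated with ';', and the section itself with '};'
    return (
        "KafkaServer {\n"
        "    org.apache.kafka.common.security.plain.PlainLoginModule required\n"
        f"{body};\n"
        "};\n"
    )
```

Calling `kafka_server_jaas("admin", "admin-secret", {"admin": "admin-secret", "alice": "alice-secret"})` produces the same entries as the jaas.conf above; write the result to config\jaas.conf.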

3.4 log4j2.properties

status = INFO
name = KafkaConfig
# Console output
appender.console.type = Console
appender.console.name = console
appender.console.layout.type = PatternLayout
appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n
# File output
appender.kafka.type = RollingFile
appender.kafka.name = kafka
appender.kafka.fileName = ${sys:kafka.logs.dir}/server.log
appender.kafka.filePattern = ${sys:kafka.logs.dir}/server-%d{yyyy-MM-dd}-%i.log.gz
appender.kafka.layout.type = PatternLayout
appender.kafka.layout.pattern = %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n
appender.kafka.policies.type = Policies
appender.kafka.policies.time.type = TimeBasedTriggeringPolicy
appender.kafka.policies.size.type = SizeBasedTriggeringPolicy
appender.kafka.policies.size.size = 100MB
appender.kafka.strategy.type = DefaultRolloverStrategy
appender.kafka.strategy.max = 10
# Root logger
rootLogger.level = INFO
rootLogger.appenderRefs = console, kafka
rootLogger.appenderRef.console.ref = console
rootLogger.appenderRef.kafka.ref = kafka
# Kafka loggers
logger.kafka.name = kafka
logger.kafka.level = INFO
logger.kafka.additivity = false
logger.kafka.appenderRefs = console, kafka
logger.kafka.appenderRef.console.ref = console
logger.kafka.appenderRef.kafka.ref = kafka

4. Deployment Steps

4.1 Initial Setup

1. Download Kafka 4.x (this guide uses kafka_2.13-4.0.0).

2. Extract it to D:\kafka_2.13-4.0.0.

3. Create the required directories:

mkdir D:\kafka_2.13-4.0.0\logs
mkdir D:\kafka_2.13-4.0.0\config\ssl

4. Create the configuration files (server.properties, ssl.properties, jaas.conf, log4j2.properties).
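A quick way to confirm that steps 3 and 4 are complete is to check for the directories and configuration files from the directory layout. The sketch below is a hypothetical stdlib helper (`missing_paths` is not part of Kafka); it assumes the paths are relative to the Kafka home directory, for example D:\kafka_2.13-4.0.0, and deliberately leaves out the keystore files, which are only created in 4.2.

```python
"""Check that the directories and config files from steps 3 and 4 exist."""
from pathlib import Path

REQUIRED_DIRS = ["logs", "config/ssl"]
REQUIRED_FILES = [
    "config/server.properties",
    "config/ssl.properties",
    "config/jaas.conf",
    "config/log4j2.properties",
]


def missing_paths(kafka_home: str) -> list[str]:
    """Return the required paths (relative to kafka_home) that do not exist yet."""
    home = Path(kafka_home)
    missing = [d for d in REQUIRED_DIRS if not (home / d).is_dir()]
    missing += [f for f in REQUIRED_FILES if not (home / f).is_file()]
    return missing
```

For example, `missing_paths(r"D:\kafka_2.13-4.0.0")` returns an empty list once both steps are done.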

4.2 Generate SSL Certificates

Run the following commands from D:\kafka_2.13-4.0.0 to generate the SSL certificates:

# 1. Generate the CA private key and self-signed CA certificate
openssl req -new -x509 -keyout ca-key -out ca-cert -days 365 -nodes -subj "/CN=kafka-ca"
# 2. Generate the server private key
openssl genrsa -out kafka.server.key 2048
# 3. Generate the server certificate signing request (CSR)
openssl req -new -key kafka.server.key -out kafka.server.csr -subj "/CN=localhost"
# 4. Sign the server certificate with the CA
openssl x509 -req -CA ca-cert -CAkey ca-key -in kafka.server.csr -out kafka.server.cert -days 365 -CAcreateserial
# 5. Create the JKS truststore containing the CA certificate
keytool -import -alias ca -file ca-cert -keystore kafka.server.truststore.jks -storepass kafka123 -noprompt
# 6. Import the server private key and certificate into the JKS keystore via PKCS12
#    Do not import kafka.server.cert into the keystore separately beforehand: a trusted-certificate
#    entry under the alias "server" would clash with the key entry imported here.
openssl pkcs12 -export -in kafka.server.cert -inkey kafka.server.key -out kafka.server.p12 -name server -password pass:kafka123
keytool -importkeystore -srckeystore kafka.server.p12 -srcstoretype PKCS12 -destkeystore kafka.server.keystore.jks -deststoretype JKS -srcstorepass kafka123 -deststorepass kafka123
# 7. Move the keystore and truststore into the config directory
move kafka.server.keystore.jks config\ssl\
move kafka.server.truststore.jks config\ssl\
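The same sequence can also be driven from Python, which passes each argument straight to openssl and keytool instead of going through cmd quoting (for example around `-subj "/CN=..."`). This is a sketch, assuming openssl and keytool are on PATH, the file names and password used above, and a fresh directory (re-running it would hit the existing "ca" alias in the truststore). `cert_commands` and `generate_certs` are hypothetical helpers; separating the command list from its execution makes it easy to review before anything runs.

```python
"""Sketch: run the certificate steps 1-7 from Python (openssl and keytool must be on PATH)."""
import shutil
import subprocess
from pathlib import Path


def cert_commands(password: str = "kafka123", cn: str = "localhost", days: int = 365) -> list[list[str]]:
    """Return steps 1-6 as argument lists, without running anything."""
    return [
        ["openssl", "req", "-new", "-x509", "-keyout", "ca-key", "-out", "ca-cert",
         "-days", str(days), "-nodes", "-subj", "/CN=kafka-ca"],
        ["openssl", "genrsa", "-out", "kafka.server.key", "2048"],
        ["openssl", "req", "-new", "-key", "kafka.server.key", "-out", "kafka.server.csr",
         "-subj", f"/CN={cn}"],
        ["openssl", "x509", "-req", "-CA", "ca-cert", "-CAkey", "ca-key", "-in", "kafka.server.csr",
         "-out", "kafka.server.cert", "-days", str(days), "-CAcreateserial"],
        ["keytool", "-import", "-alias", "ca", "-file", "ca-cert",
         "-keystore", "kafka.server.truststore.jks", "-storepass", password, "-noprompt"],
        ["openssl", "pkcs12", "-export", "-in", "kafka.server.cert", "-inkey", "kafka.server.key",
         "-out", "kafka.server.p12", "-name", "server", "-password", f"pass:{password}"],
        # The keystore is written only here, so it holds a single PrivateKeyEntry named "server"
        ["keytool", "-importkeystore", "-srckeystore", "kafka.server.p12", "-srcstoretype", "PKCS12",
         "-destkeystore", "kafka.server.keystore.jks", "-deststoretype", "JKS",
         "-srcstorepass", password, "-deststorepass", password],
    ]


def generate_certs(kafka_home: str, password: str = "kafka123") -> None:
    """Run steps 1-6 in kafka_home, then move both stores into config/ssl (step 7)."""
    for cmd in cert_commands(password):
        subprocess.run(cmd, cwd=kafka_home, check=True)  # stop at the first failing step
    ssl_dir = Path(kafka_home, "config", "ssl")
    ssl_dir.mkdir(parents=True, exist_ok=True)
    for name in ("kafka.server.keystore.jks", "kafka.server.truststore.jks"):
        shutil.move(str(Path(kafka_home, name)), str(ssl_dir / name))
```

Usage: `generate_certs(r"D:\kafka_2.13-4.0.0")`.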

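4.3 Format the Storage Directory

Before starting Kafka for the first time, format the storage directory so that meta.properties is generated. The first command prints a random cluster ID; pass that ID to -t in the second command:

bin\windows\kafka-storage.bat random-uuid
bin\windows\kafka-storage.bat format -t <cluster-id> -c config\server.properties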
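Because the cluster ID has to be copied from the first command into the second, the two steps are easy to script. A minimal sketch, assuming it runs with the Kafka home directory as working directory and that random-uuid prints the ID on its last output line; `extract_cluster_id` and `format_storage` are hypothetical helpers. The ID check relies on Kafka printing cluster IDs as 22-character URL-safe Base64 strings.

```python
"""Sketch: run kafka-storage.bat random-uuid, then format with the generated cluster ID."""
import re
import subprocess
from pathlib import Path

# 16 random bytes, URL-safe Base64 without padding -> 22 characters
CLUSTER_ID_RE = re.compile(r"[A-Za-z0-9_-]{22}")


def extract_cluster_id(stdout: str) -> str:
    """Take the last non-empty output line and make sure it looks like a cluster ID."""
    lines = [line.strip() for line in stdout.splitlines() if line.strip()]
    if not lines or not CLUSTER_ID_RE.fullmatch(lines[-1]):
        raise ValueError(f"unexpected random-uuid output: {stdout!r}")
    return lines[-1]


def format_storage(kafka_home: str, config: str = r"config\server.properties") -> str:
    """Generate a cluster ID, format log.dirs with it, and return the ID."""
    script = str(Path(kafka_home, "bin", "windows", "kafka-storage.bat"))
    result = subprocess.run([script, "random-uuid"], cwd=kafka_home,
                            capture_output=True, text=True, check=True)
    cluster_id = extract_cluster_id(result.stdout)
    # check=True raises CalledProcessError if formatting fails,
    # e.g. when the directory already contains meta.properties
    subprocess.run([script, "format", "-t", cluster_id, "-c", config],
                   cwd=kafka_home, check=True)
    return cluster_id
```

Keep the returned ID: every node of the same cluster must be formatted with it.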

4.4 Start Kafka

Start Kafka with the following script:

start-kafka-kraft.bat
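start-kafka-kraft.bat is a custom script, not part of the Kafka distribution, and its contents are not shown here. A broker with this configuration needs at least two things from its launcher: the JVM must be pointed at jaas.conf (`-Djava.security.auth.login.config`, passed through KAFKA_OPTS) and at the Log4j2 file (`-Dlog4j2.configurationFile`, passed through KAFKA_LOG4J_OPTS). The Python sketch below is an assumed equivalent under those two assumptions, not the script itself; `kafka_env` and `start_kafka` are hypothetical names.

```python
"""Sketch: launch the broker with JAAS and Log4j2 settings passed through environment variables."""
import os
import subprocess
from pathlib import Path
from typing import Optional


def kafka_env(kafka_home: str, base_env: Optional[dict[str, str]] = None) -> dict[str, str]:
    """Copy the environment and add the JAAS and Log4j2 system properties."""
    env = dict(os.environ if base_env is None else base_env)
    config = Path(kafka_home) / "config"
    jaas = f"-Djava.security.auth.login.config={(config / 'jaas.conf').as_posix()}"
    # Append to any existing KAFKA_OPTS instead of overwriting it
    env["KAFKA_OPTS"] = f"{env.get('KAFKA_OPTS', '')} {jaas}".strip()
    env["KAFKA_LOG4J_OPTS"] = f"-Dlog4j2.configurationFile={(config / 'log4j2.properties').as_posix()}"
    return env


def start_kafka(kafka_home: str) -> subprocess.Popen:
    """Start kafka-server-start.bat with server.properties from the Kafka home directory."""
    script = str(Path(kafka_home, "bin", "windows", "kafka-server-start.bat"))
    return subprocess.Popen([script, r"config\server.properties"],
                            cwd=kafka_home, env=kafka_env(kafka_home))
```

Usage: `start_kafka(r"D:\kafka_2.13-4.0.0").wait()`. Forward slashes are used in the paths because the JVM on Windows accepts them and they avoid backslash-escaping surprises.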

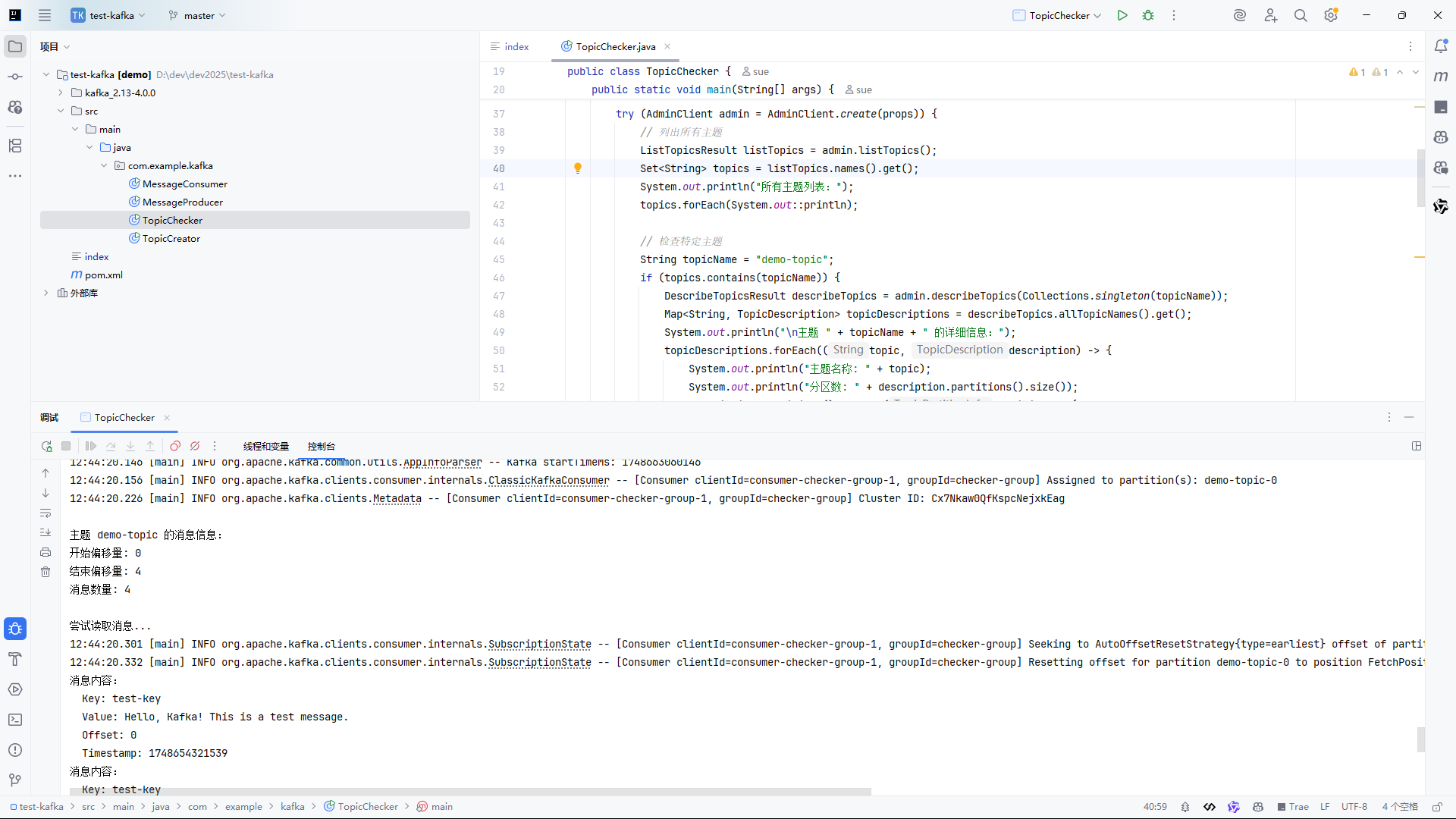

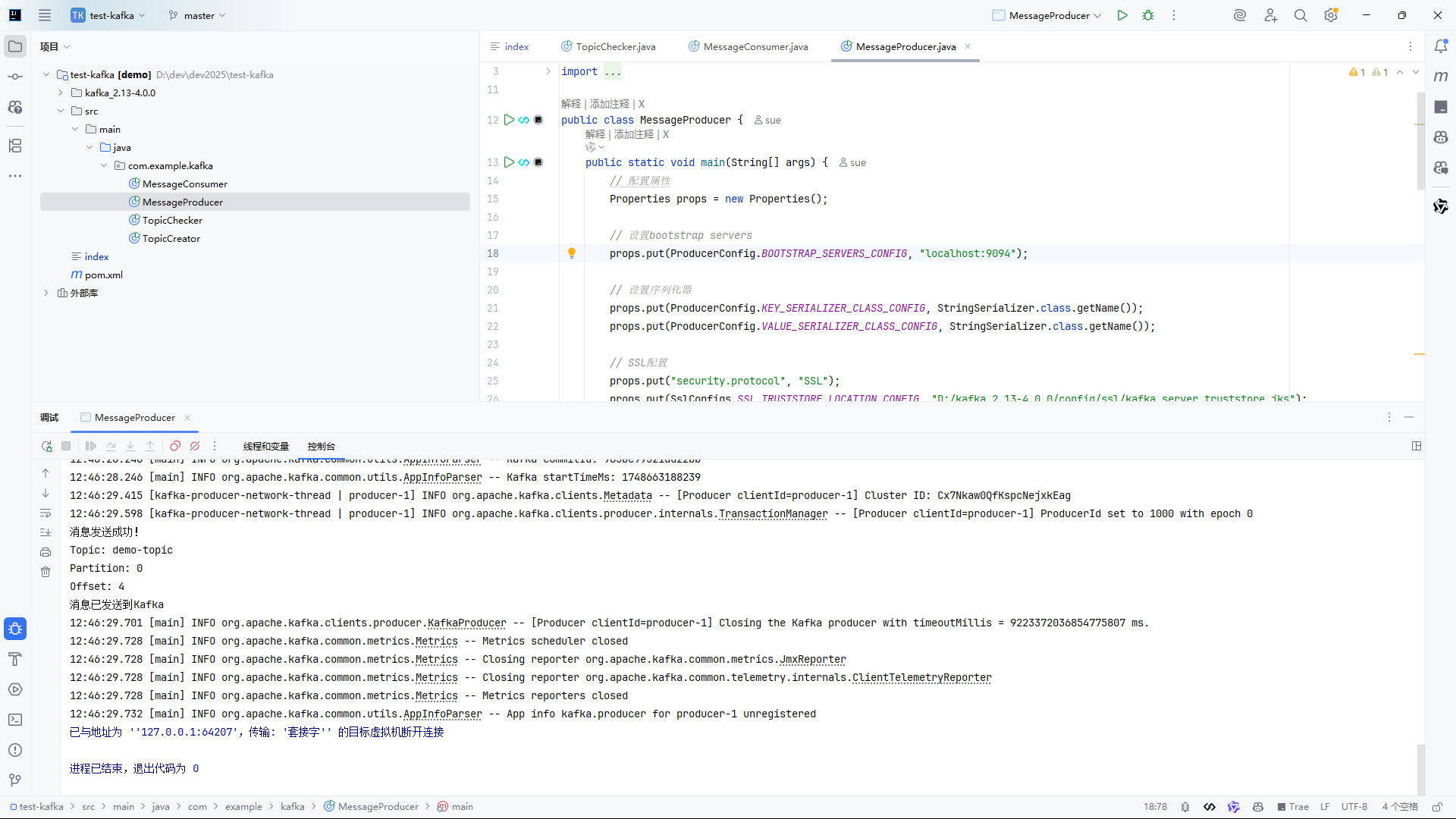

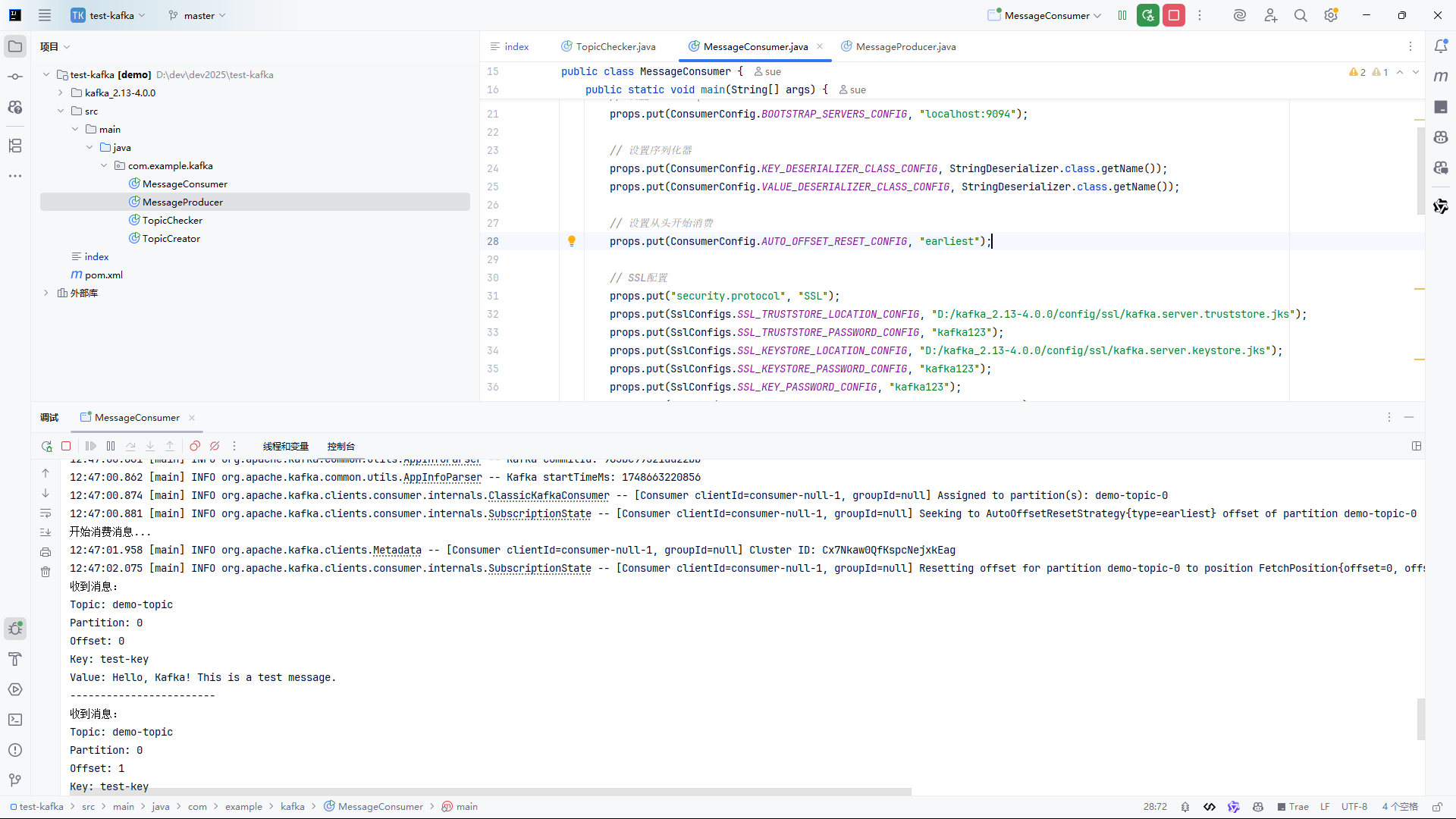

5. Client Connection Examples

5.1 Java Client Configuration

bootstrap.servers=localhost:9095
security.protocol=SASL_SSL
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="admin-secret";
ssl.truststore.location=<path-to-your-truststore>
ssl.truststore.password=kafka123
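The command-line tools read the same settings from a properties file (config\client.properties in 5.2). One Windows pitfall when writing that file by hand: the backslash is an escape character in Java .properties files, so a path such as D:\kafka_2.13-4.0.0\config\ssl\... is misread unless it is written with forward slashes or doubled backslashes. A minimal sketch that builds the file content from the settings above, using the server truststore (it contains the CA certificate); `client_properties` is a hypothetical helper.

```python
"""Sketch: build the client properties for the SASL_SSL listener (settings from 5.1)."""


def client_properties(truststore: str, username: str, password: str,
                      truststore_password: str = "kafka123",
                      bootstrap: str = "localhost:9095") -> str:
    # '\' is an escape character in Java .properties files, so write the path with '/'
    location = truststore.replace("\\", "/")
    jaas = ("org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="{username}" password="{password}";')
    lines = [
        f"bootstrap.servers={bootstrap}",
        "security.protocol=SASL_SSL",
        "sasl.mechanism=PLAIN",
        f"sasl.jaas.config={jaas}",
        f"ssl.truststore.location={location}",
        f"ssl.truststore.password={truststore_password}",
    ]
    return "\n".join(lines) + "\n"
```

Usage: `Path(r"config\client.properties").write_text(client_properties(r"D:\kafka_2.13-4.0.0\config\ssl\kafka.server.truststore.jks", "admin", "admin-secret"))` (with `from pathlib import Path`).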

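5.2 Command-Line Tools

Create a topic with the following command, where config\client.properties contains the client settings from 5.1:

bin\windows\kafka-topics.bat --create --topic test-topic --bootstrap-server localhost:9095 --command-config config\client.properties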

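6. Common Issues

- Problem: No `meta.properties` found in logs directory

  Solution: Run the kafka-storage.bat format command to format the storage directory.

- Problem: ClassNotFoundException: kafka.security.authorizer.AclAuthorizer

  Solution: Set authorizer.class.name to org.apache.kafka.metadata.authorizer.StandardAuthorizer; AclAuthorizer was ZooKeeper-based and is no longer shipped in Kafka 4.x.

- Problem: Log4j configuration errors

  Solution: Use a Log4j2 configuration file and set KAFKA_LOG4J_OPTS correctly in the startup script.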
