Implementing Speech-to-Text in Unity AR

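Contents

I. Choose a platform (they are all good, according to ChatGPT's evaluation)

II. Register and create an app on the iFlytek (科大讯飞) platform

III. Integrate the API in Unity

IV. Further configuration in the Unity project: wiring up the UI button

V. Testing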

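In broad strokes, the complete logic of AI voice interaction in Unity is:

Microphone recording -> speech recognition (ASR) -> natural language understanding (NLU) -> speech synthesis (TTS) -> audio playback

This post implements the first part: speech recognition (ASR).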
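To make the pipeline above concrete, here is a minimal sketch of how its stages could be kept separate in plain C#. The interfaces and classes (ISpeechRecognizer, ILanguageUnderstanding, ISpeechSynthesizer, VoicePipeline and the stand-in stages) are hypothetical names of my own, not part of Unity or any vendor SDK. Microphone capture and playback stay on the Unity side, and this post only fills in the ASR stage.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stage interfaces for: microphone audio -> ASR -> NLU -> TTS -> playback.
// Audio travels as raw bytes (for example 16 kHz, 16-bit mono PCM).
public interface ISpeechRecognizer { Task<string> RecognizeAsync(byte[] pcm); }
public interface ILanguageUnderstanding { Task<string> ReplyAsync(string userText); }
public interface ISpeechSynthesizer { Task<byte[]> SynthesizeAsync(string replyText); }

public sealed class VoicePipeline
{
    private readonly ISpeechRecognizer _asr;
    private readonly ILanguageUnderstanding _nlu;
    private readonly ISpeechSynthesizer _tts;

    public VoicePipeline(ISpeechRecognizer asr, ILanguageUnderstanding nlu, ISpeechSynthesizer tts)
    {
        _asr = asr ?? throw new ArgumentNullException(nameof(asr));
        _nlu = nlu ?? throw new ArgumentNullException(nameof(nlu));
        _tts = tts ?? throw new ArgumentNullException(nameof(tts));
    }

    // One conversational turn: recorded audio in, synthesized reply audio out.
    // Playing the result (in Unity, through an AudioSource) is left to the caller.
    public async Task<byte[]> RunTurnAsync(byte[] recordedPcm)
    {
        string text = await _asr.RecognizeAsync(recordedPcm);
        if (string.IsNullOrEmpty(text)) return Array.Empty<byte>(); // nothing recognized, nothing to say
        string reply = await _nlu.ReplyAsync(text);
        return await _tts.SynthesizeAsync(reply);
    }
}

// Stand-in stages so the wiring can be exercised without any cloud service.
public sealed class FixedRecognizer : ISpeechRecognizer
{
    private readonly string _text;
    public FixedRecognizer(string text) { _text = text; }
    public Task<string> RecognizeAsync(byte[] pcm) => Task.FromResult(_text);
}

public sealed class EchoUnderstanding : ILanguageUnderstanding
{
    public Task<string> ReplyAsync(string userText) => Task.FromResult("You said: " + userText);
}

public sealed class Utf8Synthesizer : ISpeechSynthesizer
{
    // Not real TTS: encodes the reply text as UTF-8 bytes so the output can be checked.
    public Task<byte[]> SynthesizeAsync(string replyText) => Task.FromResult(Encoding.UTF8.GetBytes(replyText));
}
```

Keeping each stage behind an interface means that swapping iFlytek for another ASR provider later only touches one class.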

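I. Choose a platform (they are all good, according to ChatGPT's evaluation)

Based on the latest 2025 Chinese market, ChatGPT put together a list of the most practical, mainstream, and cost-effective speech-to-text (ASR) and text-to-speech (TTS) APIs.

I chose iFlytek (讯飞). I tested it on both a PC and an Android phone, and it worked well on both.

(I also came across a platform called 欧拉蜜 (OLAMI), which lets you set up the full ASR -> NLU -> TTS pipeline fairly easily. However, its current website only offers login and has no way to register, so I couldn't use it.)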

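II. Register and create an app on the iFlytek (科大讯飞) platform

iFlytek Open Platform (讯飞开放平台): an AI open platform built around voice interaction

1. First, register and log in on the official website.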

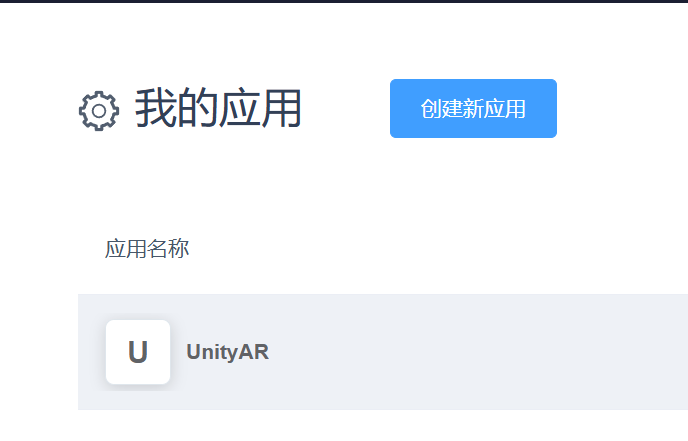

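2. Open the console and create a new application.

Fill in these fields; I entered "unity ar".

3. Click the application you just created, then in the left-hand menu choose 语音听写 (speech dictation) under 语音识别 (speech recognition).

4. On the right, find the authentication information for the WebSocket service: APPID, APISecret, and APIKey.

You will enter these three strings in Unity later.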
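To show what each of the three strings is for, here is a sketch that follows the signing steps used in GetUrl in the script: APISecret is the HMAC-SHA256 key, APIKey is written into the authorization string, and APPID does not appear in the URL at all but is sent in the common block of the first data frame. XfyunAuth and BuildAuthUrl are hypothetical names. This version URL-encodes the query values (the date contains spaces and commas) and takes the time as a parameter so it can be checked.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Sketch: how the three console credentials feed the iFlytek dictation WebSocket URL.
//   APISecret -> HMAC-SHA256 key for the signature
//   APIKey    -> named inside the Base64 "authorization" value
//   APPID     -> not used here; it goes into "common.app_id" of the first data frame
public static class XfyunAuth
{
    public static string BuildAuthUrl(string apiKey, string apiSecret, DateTimeOffset now,
                                      string host = "iat-api.xfyun.cn", string path = "/v2/iat")
    {
        // RFC 1123 date in GMT, e.g. "Thu, 02 Jan 2025 03:04:05 GMT"
        string date = now.UtcDateTime.ToString("r");

        // The string being signed: host, date and request line, separated by '\n'
        string signatureOrigin = $"host: {host}\ndate: {date}\nGET {path} HTTP/1.1";
        string signature;
        using (var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(apiSecret)))
        {
            signature = Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(signatureOrigin)));
        }

        string authorizationOrigin =
            $"api_key=\"{apiKey}\",algorithm=\"hmac-sha256\",headers=\"host date request-line\",signature=\"{signature}\"";
        string authorization = Convert.ToBase64String(Encoding.UTF8.GetBytes(authorizationOrigin));

        // Escape query values: the date contains spaces and commas, Base64 may contain '+', '/', '='
        return $"wss://{host}{path}?authorization={Uri.EscapeDataString(authorization)}"
             + $"&date={Uri.EscapeDataString(date)}&host={Uri.EscapeDataString(host)}";
    }
}
```

Calling XfyunAuth.BuildAuthUrl(apiKey, apiSecret, DateTimeOffset.UtcNow) gives a URL that can be passed to ClientWebSocket.ConnectAsync. Because the date is part of the signature, the server may reject the request if the device clock is far off, so a wrong system time is worth ruling out when authentication fails.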

III. Integrate the API in Unity
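The script declares its audio as format audio/L16;rate=16000 with encoding raw, that is, 16-bit signed PCM, mono, 16 kHz, and converts Unity's float samples to bytes in ConvertClipToBytes. It also sends the whole recording as a single frame with status 2. The sketch below shows that conversion on its own, plus a streaming alternative based on my reading of the iFlytek dictation docs: status 0 for the first frame (which carries common and business), 1 for middle frames, and 2 for the last one, with 1280 bytes (40 ms of 16 kHz, 16-bit mono audio) per frame. XfyunAudio, FloatsToPcm16 and BuildFrames are hypothetical names, and the JSON is built by hand only to keep the sketch dependency-free.

```csharp
using System;
using System.Buffers.Binary;
using System.Collections.Generic;

public static class XfyunAudio
{
    // AudioClip.GetData yields floats in [-1, 1]; convert them to 16-bit little-endian PCM.
    public static byte[] FloatsToPcm16(float[] samples)
    {
        var bytes = new byte[samples.Length * 2];
        for (int i = 0; i < samples.Length; i++)
        {
            float clamped = Math.Clamp(samples[i], -1f, 1f); // avoid overflow on clipped input
            short value = (short)(clamped * 32767);
            BinaryPrimitives.WriteInt16LittleEndian(bytes.AsSpan(i * 2), value);
        }
        return bytes;
    }

    // Splits PCM into frames: status 0 (first, with common/business), 1 (middle), then a
    // closing status-2 frame with empty audio. Assumes appId needs no JSON escaping.
    public static List<string> BuildFrames(byte[] pcm, string appId, int frameBytes = 1280)
    {
        if (pcm.Length == 0) throw new ArgumentException("no audio to send", nameof(pcm));
        var frames = new List<string>();
        for (int offset = 0; offset < pcm.Length; offset += frameBytes)
        {
            int count = Math.Min(frameBytes, pcm.Length - offset);
            string audio = Convert.ToBase64String(pcm, offset, count);
            bool first = offset == 0;
            int status = first ? 0 : 1;
            string data = $"\"data\":{{\"status\":{status},\"format\":\"audio/L16;rate=16000\",\"encoding\":\"raw\",\"audio\":\"{audio}\"}}";
            frames.Add(first
                ? $"{{\"common\":{{\"app_id\":\"{appId}\"}},\"business\":{{\"language\":\"zh_cn\",\"domain\":\"iat\",\"accent\":\"mandarin\"}},{data}}}"
                : $"{{{data}}}");
        }
        // The last frame (status 2) tells the server the audio stream has ended.
        frames.Add("{\"data\":{\"status\":2,\"format\":\"audio/L16;rate=16000\",\"encoding\":\"raw\",\"audio\":\"\"}}");
        return frames;
    }
}
```

If you actually stream frames this way, the docs suggest sending them roughly 40 ms apart; that pacing is not shown here. For the short clips in this post, the single-frame approach the script uses is simpler.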

Write a script and attach it to an empty GameObject.

Script contents:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Net.WebSockets;
using System.Security.Cryptography;
using System.Text;
using System.Threading;
using System.Threading.Tasks;
using TMPro;
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.UI;

public class XunfeiSpeechToText : MonoBehaviour
{
    #region Parameters
    /// <summary>Host address</summary>
    [SerializeField] private string m_HostUrl = "iat-api.xfyun.cn";
    /// <summary>Language</summary>
    [SerializeField] private string m_Language = "zh_cn";
    /// <summary>Application domain</summary>
    [SerializeField] private string m_Domain = "iat";
    /// <summary>Accent: mandarin = Standard Chinese; other values select other dialects/languages</summary>
    [SerializeField] private string m_Accent = "mandarin";
    /// <summary>Audio format and sample rate</summary>
    [SerializeField] private string m_Format = "audio/L16;rate=16000";
    /// <summary>Audio data encoding</summary>
    [SerializeField] private string m_Encoding = "raw";
    /// <summary>WebSocket client</summary>
    private ClientWebSocket m_WebSocket;
    /// <summary>Cancellation token for the transfer</summary>
    private CancellationToken m_CancellationToken;
    /// <summary>Speech recognition API address</summary>
    [SerializeField] [Header("Speech recognition API address")] private string m_SpeechRecognizeURL;
    /// <summary>iFlytek AppID</summary>
    [Header("Enter APP ID")] [SerializeField] private string m_AppID = "iFlytek AppID";
    /// <summary>iFlytek APIKey</summary>
    [Header("Enter API Key")] [SerializeField] private string m_APIKey = "iFlytek APIKey";
    /// <summary>iFlytek APISecret</summary>
    [Header("Enter Secret Key")] [SerializeField] private string m_APISecret = "iFlytek APISecret";
    /// <summary>Measures how long recognition takes</summary>
    protected System.Diagnostics.Stopwatch stopwatch = new System.Diagnostics.Stopwatch();
    #endregion

    private void Awake()
    {
        // Register the button events
        RegistButtonEvent();
        // The WebSocket API address shown on the iFlytek platform
        m_SpeechRecognizeURL = "wss://iat-api.xfyun.cn/v2/iat";
    }

    #region Voice input
    /// <summary>Hold-to-talk button</summary>
    [SerializeField] private Button m_VoiceInputBotton;
    /// <summary>Label on the record button</summary>
    [SerializeField] private TextMeshProUGUI m_VoiceBottonText;
    /// <summary>Recording status / recognition result</summary>
    [SerializeField] private TextMeshProUGUI m_RecordTips;
    /// <summary>Recording length in seconds</summary>
    public int m_RecordingLength = 5;
    /// <summary>Clip that temporarily receives the microphone audio</summary>
    private AudioClip recording;

    /// <summary>Register press/release events on the button</summary>
    private void RegistButtonEvent()
    {
        if (m_VoiceInputBotton == null || m_VoiceInputBotton.GetComponent<EventTrigger>()) return;
        EventTrigger _trigger = m_VoiceInputBotton.gameObject.AddComponent<EventTrigger>();
        // Pointer down: start recording
        var _pointDown_entry = new EventTrigger.Entry { eventID = EventTriggerType.PointerDown, callback = new EventTrigger.TriggerEvent() };
        _pointDown_entry.callback.AddListener(_ => StartRecord());
        // Pointer up: stop recording and recognize
        var _pointUp_entry = new EventTrigger.Entry { eventID = EventTriggerType.PointerUp, callback = new EventTrigger.TriggerEvent() };
        _pointUp_entry.callback.AddListener(_ => StopRecord());
        _trigger.triggers.Add(_pointDown_entry);
        _trigger.triggers.Add(_pointUp_entry);
    }

    /// <summary>Start recording</summary>
    public void StartRecord()
    {
        m_VoiceBottonText.text = "Recording...";
        StartRecordAudio();
    }

    /// <summary>Stop recording</summary>
    public void StopRecord()
    {
        m_VoiceBottonText.text = "Hold the button to record";
        m_RecordTips.text = "Recording finished, recognizing...";
        StopRecordAudio(AcceptClip);
    }

    /// <summary>Start capturing from the default microphone at 16 kHz</summary>
    public void StartRecordAudio()
    {
        recording = Microphone.Start(null, true, m_RecordingLength, 16000);
    }

    /// <summary>Stop capturing and hand the AudioClip to the callback</summary>
    public void StopRecordAudio(Action<AudioClip> _callback)
    {
        Microphone.End(null);
        _callback(recording);
    }

    /// <summary>Process the recorded audio</summary>
    public void AcceptClip(AudioClip _audioClip)
    {
        m_RecordTips.text = "Recognizing speech...";
        SpeechToText(_audioClip, DealingTextCallback);
    }

    /// <summary>Handle the recognized text</summary>
    private void DealingTextCallback(string _msg)
    {
        // Handle the result here: send it to an LLM, or just print it for testing (more in later posts)
        m_RecordTips.text = _msg;
        Debug.Log(_msg);
    }
    #endregion

    #region Authentication URL
    /// <summary>Build the signed WebSocket URL</summary>
    private string GetUrl()
    {
        // RFC 1123 timestamp; "r" does not convert local time to GMT, so start from UTC
        string date = DateTime.UtcNow.ToString("r");
        // String to sign
        string signature_origin = "host: " + m_HostUrl + "\ndate: " + date + "\nGET /v2/iat HTTP/1.1";
        // HMAC-SHA256 with APISecret as the key, then Base64
        string signature;
        using (var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(m_APISecret)))
            signature = Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(signature_origin)));
        string authorization_origin = string.Format(
            "api_key=\"{0}\",algorithm=\"hmac-sha256\",headers=\"host date request-line\",signature=\"{1}\"",
            m_APIKey, signature);
        string authorization = Convert.ToBase64String(Encoding.UTF8.GetBytes(authorization_origin));
        // URL-encode the query values (date has spaces/commas, Base64 may contain + / =)
        return string.Format("{0}?authorization={1}&date={2}&host={3}", m_SpeechRecognizeURL,
            Uri.EscapeDataString(authorization), Uri.EscapeDataString(date), m_HostUrl);
    }
    #endregion

    #region Speech recognition
    /// <summary>Convert the clip and start recognition</summary>
    public void SpeechToText(AudioClip _clip, Action<string> _callback)
    {
        byte[] _audioData = ConvertClipToBytes(_clip);
        StartCoroutine(SendAudioData(_audioData, _callback));
    }

    /// <summary>Recognize a short utterance</summary>
    public IEnumerator SendAudioData(byte[] _audioData, Action<string> _callback)
    {
        yield return null;
        ConnetHostAndRecognize(_audioData, _callback);
    }

    /// <summary>Connect to the service and run recognition</summary>
    private async void ConnetHostAndRecognize(byte[] _audioData, Action<string> _callback)
    {
        try
        {
            stopwatch.Restart();
            // Open the WebSocket connection
            m_WebSocket = new ClientWebSocket();
            m_CancellationToken = new CancellationToken();
            await m_WebSocket.ConnectAsync(new Uri(GetUrl()), m_CancellationToken);
            // Send the audio
            await SendVoiceData(_audioData, m_WebSocket);

            StringBuilder stringBuilder = new StringBuilder();
            var buffer = new byte[4096];
            while (m_WebSocket.State == WebSocketState.Open)
            {
                // Read one complete message; it may span several receive calls
                var message = new List<byte>();
                WebSocketReceiveResult result;
                do
                {
                    result = await m_WebSocket.ReceiveAsync(new ArraySegment<byte>(buffer), m_CancellationToken);
                    message.AddRange(new ArraySegment<byte>(buffer, 0, result.Count));
                } while (!result.EndOfMessage);
                if (result.MessageType == WebSocketMessageType.Close) break;

                // Parse the returned JSON
                ResponseData _responseData = JsonUtility.FromJson<ResponseData>(Encoding.UTF8.GetString(message.ToArray()));
                if (_responseData.code != 0) { PrintErrorLog(_responseData.code); break; }
                stringBuilder.Append(GetWords(_responseData));
                // data.status == 2 marks the final result
                if (_responseData.data != null && _responseData.data.status == 2) break;
            }

            string _resultMsg = stringBuilder.ToString();
            stopwatch.Stop();
            // Recognition finished: invoke the callback
            _callback(_resultMsg);
            if (string.IsNullOrEmpty(_resultMsg))
            {
                Debug.Log("Recognition returned an empty string");
            }
            else
            {
                // Non-empty result: add your own handling here
            }
            Debug.Log("iFlytek recognition time: " + stopwatch.Elapsed.TotalSeconds);
        }
        catch (Exception ex)
        {
            Debug.LogError("Error: " + ex.Message);
        }
        finally
        {
            m_WebSocket?.Dispose();
        }
    }

    /// <summary>Concatenate the recognized words</summary>
    private string GetWords(ResponseData _responseData)
    {
        StringBuilder stringBuilder = new StringBuilder();
        if (_responseData.data?.result?.ws == null) return string.Empty;
        foreach (var item in _responseData.data.result.ws)
            foreach (var _cw in item.cw)
                stringBuilder.Append(_cw.w);
        return stringBuilder.ToString();
    }

    /// <summary>Send the whole clip as one final frame (status 2)</summary>
    private async Task SendVoiceData(byte[] audio, ClientWebSocket socket)
    {
        if (socket.State != WebSocketState.Open) return;
        PostData _postData = new PostData()
        {
            common = new CommonTag(m_AppID),
            business = new BusinessTag(m_Language, m_Domain, m_Accent),
            data = new DataTag(2, m_Format, m_Encoding, Convert.ToBase64String(audio))
        };
        string _jsonData = JsonUtility.ToJson(_postData);
        // The request body is JSON, so send it as a text frame
        await socket.SendAsync(new ArraySegment<byte>(Encoding.UTF8.GetBytes(_jsonData)),
            WebSocketMessageType.Text, true, m_CancellationToken);
    }
    #endregion

    #region Utilities
    /// <summary>Convert an AudioClip to 16-bit PCM bytes</summary>
    public byte[] ConvertClipToBytes(AudioClip audioClip)
    {
        float[] samples = new float[audioClip.samples * audioClip.channels];
        audioClip.GetData(samples, 0);
        byte[] bytesData = new byte[samples.Length * 2];
        const int rescaleFactor = 32767;
        for (int i = 0; i < samples.Length; i++)
        {
            // Clamp to [-1, 1], scale to short, write 2 bytes
            short value = (short)(Mathf.Clamp(samples[i], -1f, 1f) * rescaleFactor);
            BitConverter.GetBytes(value).CopyTo(bytesData, i * 2);
        }
        return bytesData;
    }

    /// <summary>Log an iFlytek error code</summary>
    private void PrintErrorLog(int status)
    {
        string message = status switch
        {
            10005 => "appid authorization failed",
            10006 => "request is missing a required parameter",
            10007 => "invalid parameter value",
            10010 => "insufficient engine authorization",
            10019 => "session timed out",
            10043 => "audio decoding failed",
            10101 => "engine session has ended",
            10313 => "appid must not be empty",
            10317 => "invalid version",
            11200 => "no permission",
            11201 => "daily quota exceeded",
            10160 => "invalid request data format",
            10161 => "Base64 decoding failed",
            10163 => "missing or invalid required parameter, see the detailed description",
            10200 => "timed out reading data",
            10222 => "network error",
            _ => "unknown error"
        };
        Debug.LogError("iFlytek error " + status + ": " + message);
    }
    #endregion

    #region Data definitions
    /// <summary>Request body</summary>
    [Serializable] public class PostData
    {
        public CommonTag common;
        public BusinessTag business;
        public DataTag data;
    }
    [Serializable] public class CommonTag
    {
        public string app_id = string.Empty;
        public CommonTag(string app_id) { this.app_id = app_id; }
    }
    [Serializable] public class BusinessTag
    {
        public string language = "zh_cn";
        public string domain = "iat";
        public string accent = "mandarin";
        public BusinessTag(string language, string domain, string accent)
        { this.language = language; this.domain = domain; this.accent = accent; }
    }
    [Serializable] public class DataTag
    {
        public int status = 2;
        public string format = "audio/L16;rate=16000";
        public string encoding = "raw";
        public string audio = string.Empty;
        public DataTag(int status, string format, string encoding, string audio)
        { this.status = status; this.format = format; this.encoding = encoding; this.audio = audio; }
    }
    /// <summary>Response body</summary>
    [Serializable] public class ResponseData
    {
        public int code = 0;
        public string message = string.Empty;
        public string sid = string.Empty;
        public ResponcsedataTag data;
    }
    [Serializable] public class ResponcsedataTag { public Results result; public int status = 2; }
    [Serializable] public class Results { public List<WsTag> ws; }
    [Serializable] public class WsTag { public List<CwTag> cw; }
    [Serializable] public class CwTag { public int sc = 0; public string w = string.Empty; }
    #endregion
}
```

My Unity version is 2022.3.53, and the UI text uses Text - TextMeshPro.

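That is, the TextMeshProUGUI component, not the legacy Text component.

So these two fields in the script must be of type TextMeshProUGUI:

```csharp
[SerializeField] private TextMeshProUGUI m_VoiceBottonText;
[SerializeField] private TextMeshProUGUI m_RecordTips;
```

If you use the legacy UI system instead of TextMeshPro, use the Text type (from UnityEngine.UI).

The code is as follows:

```csharp
[SerializeField] private Text m_VoiceBottonText;
[SerializeField] private Text m_RecordTips;
```

IV. Further configuration in the Unity project: wiring up the UI button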

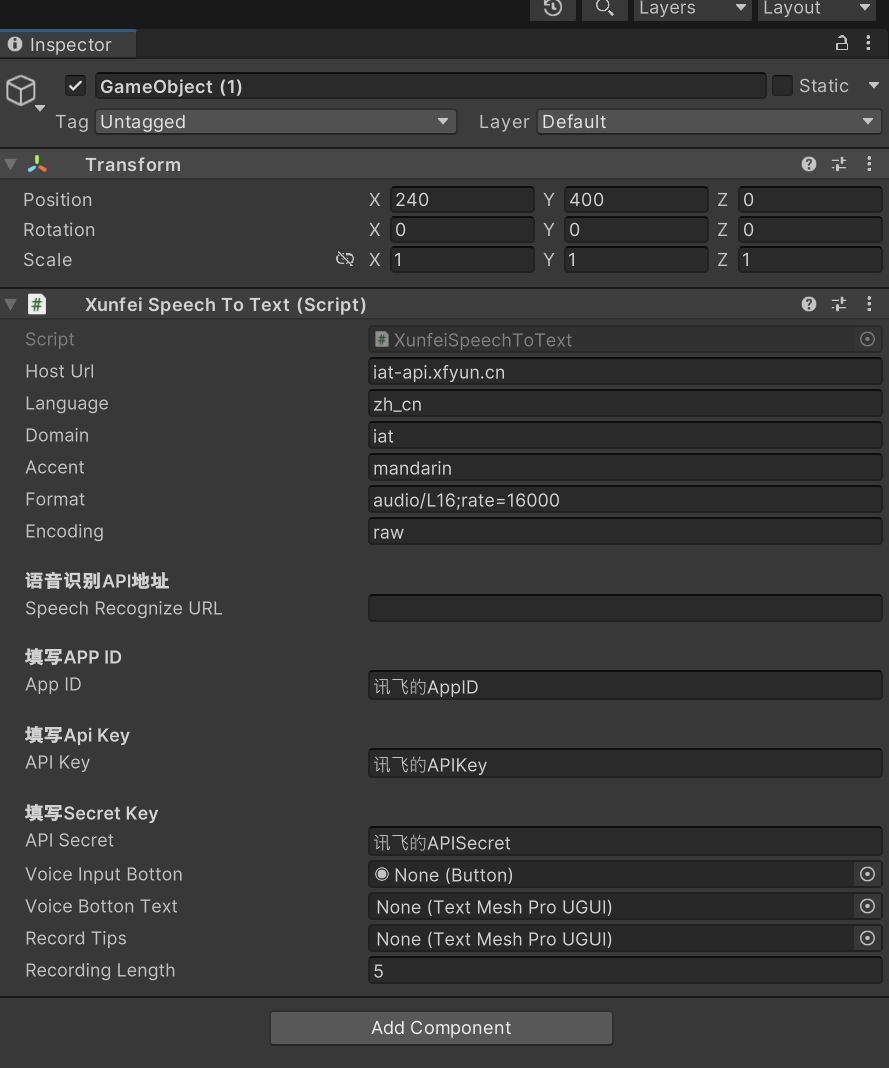

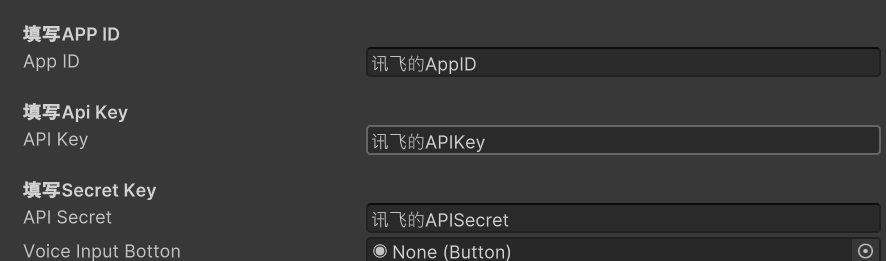

将脚本挂到物体上以后是这个效果。

Unity的UI里需要有一个按钮,一个文本text

1.把这三个字段填入自己创建的讯飞应用的Websocket服务接口认证信息中的字串。

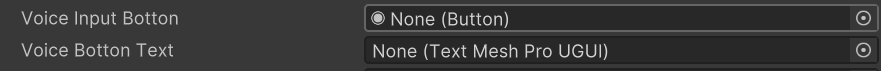

2.拖入需要长按开始讲话的按钮。以及按钮里的文字。

3.在UI里创建一个Text,用来显示语音识别的内容。

将这个组件拖入


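V. Testing

Tested on the PC, it works correctly.

After exporting an APK to the phone, I didn't adjust the font size, but everything works there too.

(I'll cover Chinese fonts in the next post. With the default font, Chinese text shows up as a row of boxes (□), because the default font only covers English characters.)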

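The prompt on the button and the prompt in the scene are set by these lines in the code; change them as you like.

```csharp
/// <summary>Start recording</summary>
public void StartRecord()
{
    m_VoiceBottonText.text = "Recording...";
    StartRecordAudio();
}

/// <summary>Stop recording</summary>
public void StopRecord()
{
    m_VoiceBottonText.text = "Hold the button to record";
    m_RecordTips.text = "Recording finished, recognizing...";
    StopRecordAudio(AcceptClip);
}
```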
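One thing to keep in mind when editing StopRecord: the clip is always m_RecordingLength seconds long (5 by default), so a short press still sends trailing silence. Also, because Microphone.Start is called with loop set to true, holding the button longer than that makes the recording wrap around and overwrite its beginning. A possible refinement, not part of the original project, is to read Microphone.GetPosition(null) just before calling Microphone.End(null) and keep only the samples that were actually written. RecordingTrim, TrimToPosition and Unwrap below are hypothetical helpers sketching that step in plain C#, assuming a mono clip.

```csharp
using System;

public static class RecordingTrim
{
    // Keep only the first `position` samples, i.e. what the microphone actually wrote.
    // position <= 0 or >= length: return the clip unchanged (same as the original behavior).
    public static float[] TrimToPosition(float[] clipSamples, int position)
    {
        if (position <= 0 || position >= clipSamples.Length) return clipSamples;
        var trimmed = new float[position];
        Array.Copy(clipSamples, trimmed, position);
        return trimmed;
    }

    // If a looping clip wrapped around, the oldest audio starts at `position`;
    // rotate the buffer so the samples are in chronological order.
    public static float[] Unwrap(float[] clipSamples, int position)
    {
        if (position < 0 || position > clipSamples.Length)
            throw new ArgumentOutOfRangeException(nameof(position));
        var ordered = new float[clipSamples.Length];
        int tail = clipSamples.Length - position;
        Array.Copy(clipSamples, position, ordered, 0, tail);
        Array.Copy(clipSamples, 0, ordered, tail, position);
        return ordered;
    }
}
```

In Unity, the samples would come from recording.GetData(samples, 0). After trimming, they can be converted to PCM directly, or copied into a new clip made with AudioClip.Create(name, trimmed.Length, 1, 16000, false) followed by SetData(trimmed, 0). Detecting whether a wrap actually happened (for example by timing the press) is left out of this sketch.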