K8S-Ingress Resource Object

Contents

一、核心概念

二、核心组件关系图

三、Ingress 工作流程(7步详解)

四、Ingress 核心配置要素

五、Ingress Controller 类型对比

六、常见问题与优化

验证-NodePort模式

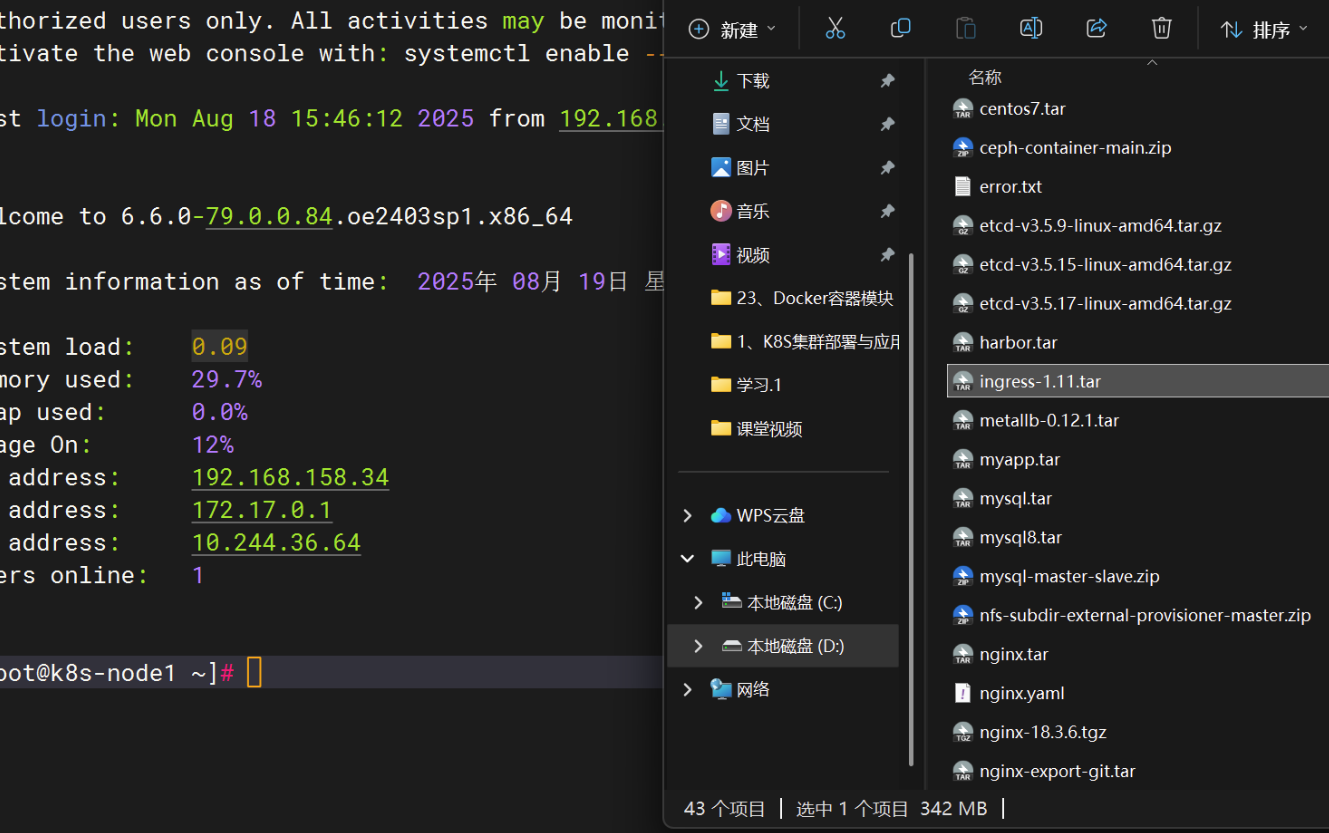

#将ingress-1.11.tar镜像包拷贝到每个node节点

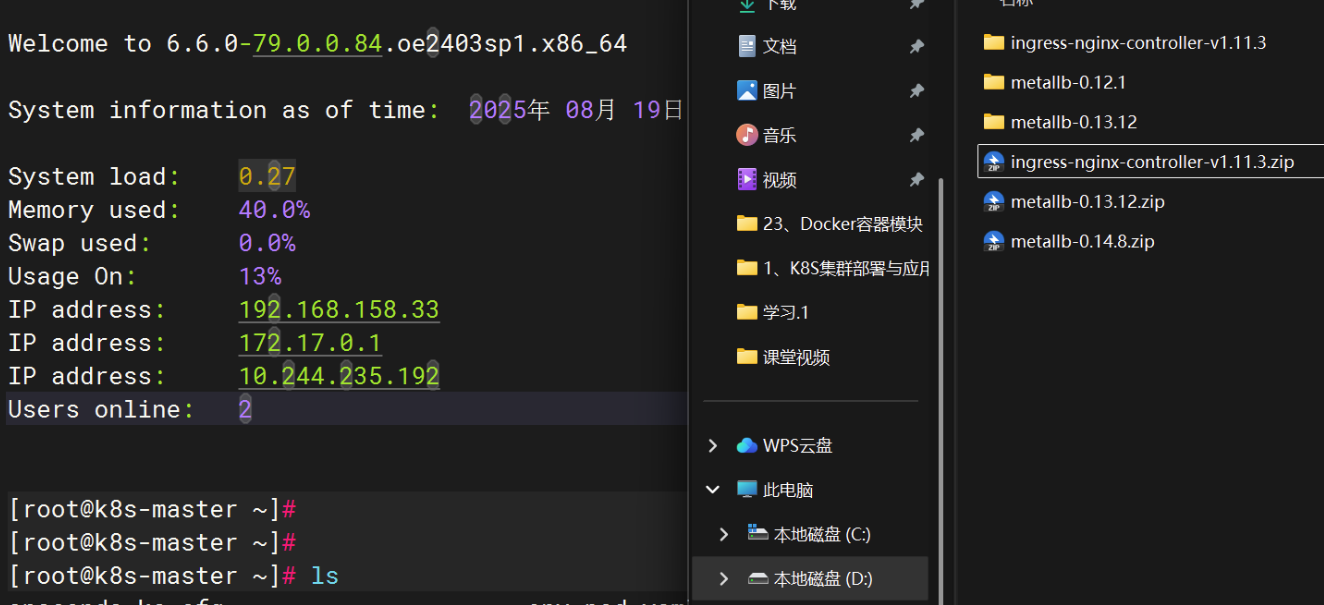

将ingress-nginx-controller-v1.11.3.zip拷贝到master主节点,这个是资源清单文件

验证-NodePort模式

将配置文件改为1个副本

验证-LoadBalancer模式

修改ARP模式,启用严格ARP模式

搭建metallb支持LoadBalancer

普通的service测试

ingress访问测试:

用测试机进行测试

故障排查

1. Core Concepts

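- Ingress
  - Definition: a Kubernetes API object (`networking.k8s.io/v1`) that declares how external HTTP/HTTPS requests are routed to Services inside the cluster, based on host name and URL path. An Ingress is only a set of rules and has no effect until an Ingress Controller implements it.
- Ingress Controller
  - Definition: the component that enforces Ingress rules (for example Nginx, Traefik, or HAProxy).
  - Core responsibility: watch the API Server for Ingress changes → translate them into concrete routing rules → apply those rules to the reverse proxy it runs.
- Key related components
  - Service: the in-cluster abstraction of an application (ClusterIP/DNS). An Ingress ultimately forwards traffic to a Service.
  - Endpoint: the list of actual Pod IPs behind a Service. It is maintained as Endpoints/EndpointSlice objects by the endpoints controller in kube-controller-manager. kube-proxy does not maintain this list; it watches it in order to program its forwarding rules.
  - Load balancer (LB): the entry point provided by a cloud vendor (e.g., AWS ALB, GCP LB). It is optional, because some Ingress Controllers ship their own LB.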

2. Core Component Relationship Diagram

```mermaid
graph LR
    Client --> LB["Load Balancer"]
    LB --> IC["Ingress Controller (reverse proxy)"]
    IC -->|matches Ingress rules| Svc["Service (in-cluster)"]
    Svc --> EP["Endpoints (Pod IP list)"]
    EP --> Pod["Pod (containers)"]
```

3. Ingress Workflow (7 Steps in Detail)

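1. The user sends a request: a client (such as a browser) requests `http://app.example.com/path`.
2. Traffic reaches the load balancer
   - A cloud LB (such as AWS ALB) or a bare-metal LB (such as an external Nginx, or MetalLB as used later in this article) receives the request.
   - The LB forwards the request to the Ingress Controller Pods, which are exposed through NodePort or HostNetwork.
3. The Ingress Controller watches the rules
   - The Ingress Controller continuously watches the Kubernetes API Server for changes to `ingresses` resources.
   - When an Ingress is added or modified, the controller parses its rules and updates its reverse-proxy configuration (for example, Nginx's `nginx.conf`). This happens asynchronously, before any request for the new rule arrives.
4. Ingress rules are matched: the Ingress Controller matches the request's Host (domain name) and Path against the Ingress rules.
   - Example rule:

     ```yaml
     spec:
       rules:
         - host: app.example.com      # match the domain name
           http:
             paths:
               - path: /api           # match the path
                 pathType: Prefix     # prefix match
                 backend:
                   service:
                     name: api-svc    # forward to this Service
                     port:
                       number: 80
     ```
5. The request is forwarded to the matching Service: once a rule matches, the Ingress Controller sends the request to the target Service's backends. By default, ingress-nginx reads the Service's endpoints and proxies directly to the Pod IPs instead of going through the ClusterIP. It uses the ClusterIP only when the `nginx.ingress.kubernetes.io/service-upstream: "true"` annotation is set.
6. The Service routes to a Pod
   - For traffic that does pass through the Service, the iptables/IPVS rules maintained by kube-proxy load-balance it across the backend endpoints (Pod IPs).
   - The request finally reaches the target Pod for processing.
7. The response is returned to the client: after the Pod finishes processing, the response travels back along the original path (Pod → Service → Ingress Controller → LB → client).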
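Steps 4 and 5 can be illustrated with a small bash sketch of how a controller picks a backend for a (Host, Path) pair. It follows the precedence rules in the Kubernetes Ingress documentation: the longest matching path wins, and on a tie `Exact` beats `Prefix`. The rule table, the `route`/`path_matches` function names and the `default-backend` label are hypothetical. Wildcard hosts are deliberately not handled.

```bash
# Hypothetical rule table: "host pathType path service", one rule per line.
RULES='app.example.com Prefix /api api-svc
app.example.com Prefix / web-svc
app.example.com Exact /api/health health-svc'

# path_matches TYPE RULE_PATH REQUEST_PATH -> exit status 0 on a match
path_matches() {
  local type="$1" rule="$2" req="${3%%\?*}"   # the query string is not part of the path
  if [[ "$type" == Exact ]]; then
    [[ "$req" == "$rule" ]]
  else
    local r="${rule%/}"                       # Prefix ignores a trailing '/'
    # element-wise: /api matches /api and /api/..., but not /apix
    [[ -z "$r" || "$req" == "$r" || "$req" == "$r/"* ]]
  fi
}

# route HOST PATH -> prints the chosen Service (exact host match only)
route() {
  local host="$1" path="$2" best="" best_len=-1 best_exact=0
  local h t p svc len exact
  while read -r h t p svc; do
    [[ "$h" == "$host" ]] || continue
    path_matches "$t" "$p" "$path" || continue
    len=${#p}; exact=0
    [[ "$t" == Exact ]] && exact=1
    # longest path wins; on equal length, Exact wins over Prefix
    if (( len > best_len || (len == best_len && exact > best_exact) )); then
      best="$svc"; best_len=$len; best_exact=$exact
    fi
  done <<< "$RULES"
  echo "${best:-default-backend}"   # no rule matched: the controller's default backend (404)
}
```

For example, `route app.example.com /api/users` selects `api-svc`, while `/apix` falls through to the `/` rule (`web-svc`), because Prefix matching compares whole path elements rather than raw characters.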

4. Core Ingress Configuration Elements

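- Basic configuration
  - `apiVersion`: `networking.k8s.io/v1` (the stable version, GA since Kubernetes 1.19).
  - `kind`: `Ingress`.
  - `metadata.name`: the name of the Ingress resource (e.g., `app-ingress`).
- Rule definitions (`rules`)
  - `host`: the domain name to match. A leading `*` wildcard is supported (e.g., `*.example.com`). It matches exactly one DNS label, so `foo.example.com` matches, but `example.com` and `a.b.example.com` do not.
  - `http`: the HTTP routing rules, containing a `paths` array.
  - `path`: the URL path to match. `pathType` is required and must be `Prefix`, `Exact`, or `ImplementationSpecific`.
  - `backend`: the backend Service (`service.name` + `service.port.number`).
- TLS configuration (`tls`)
  - Used for HTTPS encryption:

    ```yaml
    tls:
      - hosts:
          - app.example.com          # must match rules[].host
        secretName: app-tls-secret   # Kubernetes Secret that stores the certificate
    ```
- Annotations: they extend functionality, and different Ingress Controllers support different annotations.
  - Nginx-specific (ingress-nginx)
    - `nginx.ingress.kubernetes.io/rewrite-target`: rewrites the request path (e.g., `/old-path` → `/new-path`).
    - `nginx.ingress.kubernetes.io/ssl-redirect`: redirects HTTP to HTTPS.
    - `nginx.ingress.kubernetes.io/limit-rps`: rate limiting, as requests per second accepted from each client IP (e.g., `"10"`).
  - Generic annotation
    - `kubernetes.io/ingress.class`: selects the Ingress Controller (e.g., `nginx`/`traefik`). This annotation is deprecated. With `networking.k8s.io/v1`, use the `spec.ingressClassName` field instead, as the examples later in this article do.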
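The following sketch puts the elements above together in one manifest: basic fields, `ingressClassName`, a rule, TLS, and two ingress-nginx annotations. It is written through a heredoc, as this article does later for MetalLB. The names `app-ingress`, `api-svc`, `app-tls-secret` and `app.example.com` are illustrative assumptions, and `write_app_ingress` is a hypothetical helper name.

```bash
# Hypothetical helper: writes an example Ingress manifest to the given file.
write_app_ingress() {
  local out="${1:-app-ingress.yaml}"
  cat > "$out" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"   # redirect HTTP to HTTPS
    nginx.ingress.kubernetes.io/limit-rps: "10"        # requests/second per client IP
spec:
  ingressClassName: nginx          # replaces the deprecated kubernetes.io/ingress.class
  tls:
    - hosts:
        - app.example.com          # must match a rules[].host below
      secretName: app-tls-secret   # Secret of type kubernetes.io/tls, same namespace
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-svc
                port:
                  number: 80
EOF
}
```

Usage, assuming you already have a certificate and key (`tls.crt`/`tls.key` are placeholder file names): `kubectl create secret tls app-tls-secret --cert=tls.crt --key=tls.key`, then `write_app_ingress && kubectl apply -f app-ingress.yaml`.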

5. Comparison of Ingress Controller Types

6. Common Problems and Optimizations

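- Common problems
  - Rules do not take effect: check that the Ingress Controller is running, that the rule syntax is correct, and that `ingressClassName` (or the legacy `ingress.class` annotation) matches the controller.
  - Performance bottlenecks: increase the number of Ingress Controller replicas, enable HTTP/2, and tune TLS session reuse.
  - Cross-origin requests (CORS): configure them with annotations (e.g., `nginx.ingress.kubernetes.io/enable-cors: "true"`).
- Optimization suggestions
  - Health checks: the Ingress spec itself has no `healthCheck` field. Controllers such as ingress-nginx only send traffic to ready endpoints, so define `readinessProbe`s on the backend Pods. Some controllers offer additional health-check settings through their own annotations or CRDs.
  - Logging and monitoring: collect the Ingress Controller's access logs (e.g., Nginx's `access.log`) and metrics (e.g., request count and latency).
  - Canary releases: combine the Ingress with a service mesh (such as Istio) to split traffic by weight or by header.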
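To make the CORS item concrete, here is a minimal sketch of an Ingress that enables CORS with ingress-nginx annotations. It assumes an API at `api.example.com` that is called from a front end at `https://app.example.com`. These names, and the helper name `write_cors_ingress`, are hypothetical.

```bash
# Hypothetical helper: writes an Ingress with ingress-nginx CORS annotations.
write_cors_ingress() {
  local out="${1:-cors-ingress.yaml}"
  cat > "$out" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: api-cors
  annotations:
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/cors-allow-origin: "https://app.example.com"
    nginx.ingress.kubernetes.io/cors-allow-methods: "GET, POST, OPTIONS"
spec:
  ingressClassName: nginx
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api-svc
                port:
                  number: 80
EOF
}
```

Restricting `cors-allow-origin` to the known front-end origin is usually safer than leaving it open.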

Verification: NodePort Mode

Copy the ingress-1.11.tar image archive to every node and load it there:

```shell
# Copy the image archive to each node, then load it
[root@k8s-node1 ~]# docker load -i ingress-1.11.tar
[root@k8s-node2 ~]# docker load -i ingress-1.11.tar
```
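With more nodes, the copy-and-load step can be scripted. The sketch below assumes password-less SSH as root from the master to each node and Docker as the nodes' container runtime; neither assumption is covered by the original article. `load_image_on_nodes` is a hypothetical helper, and setting `DRY_RUN=1` makes it print the commands instead of running them.

```bash
# Hypothetical helper: copy an image archive to each node and load it there.
# Usage: load_image_on_nodes ARCHIVE NODE...
load_image_on_nodes() {
  local archive="$1"; shift
  local node
  for node in "$@"; do
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is set and non-empty
    ${DRY_RUN:+echo} scp "$archive" "root@${node}:/root/" || return 1
    ${DRY_RUN:+echo} ssh "root@${node}" docker load -i "/root/$(basename "$archive")" || return 1
  done
}
```

Example: `load_image_on_nodes ingress-1.11.tar k8s-node1 k8s-node2`.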

Copy ingress-nginx-controller-v1.11.3.zip to the master node. It contains the resource manifests.

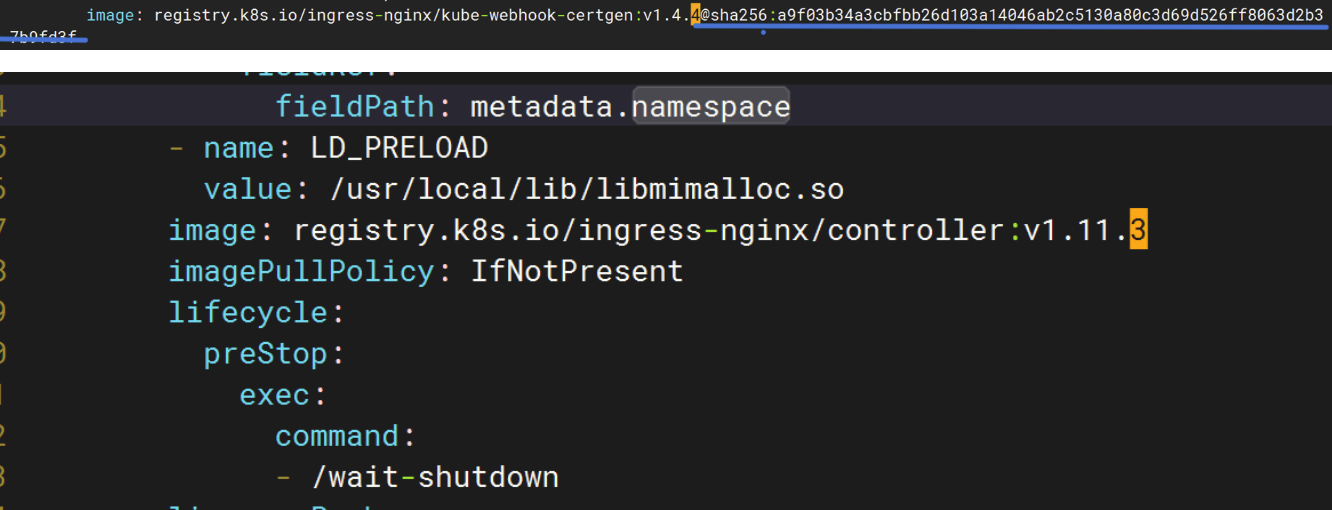

```shell
[root@k8s-master cloud]# vim deploy.yaml
```

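In every line that references an image, delete everything from the `@` (the `@sha256:...` digest) to the end of the line, so that each image is referenced by tag only and matches the archives loaded above. There are three such lines in total.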

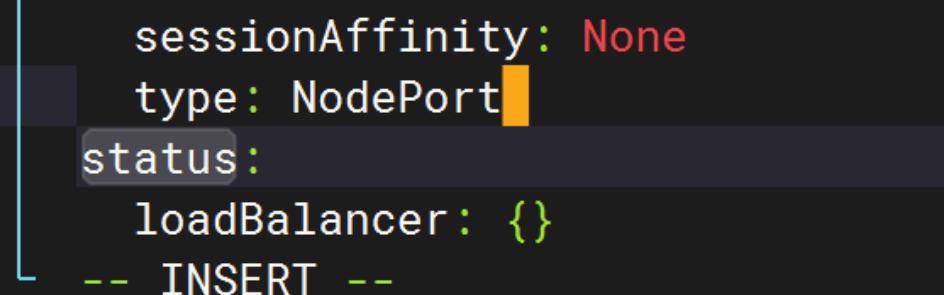

Change the Service `type` to `NodePort`.

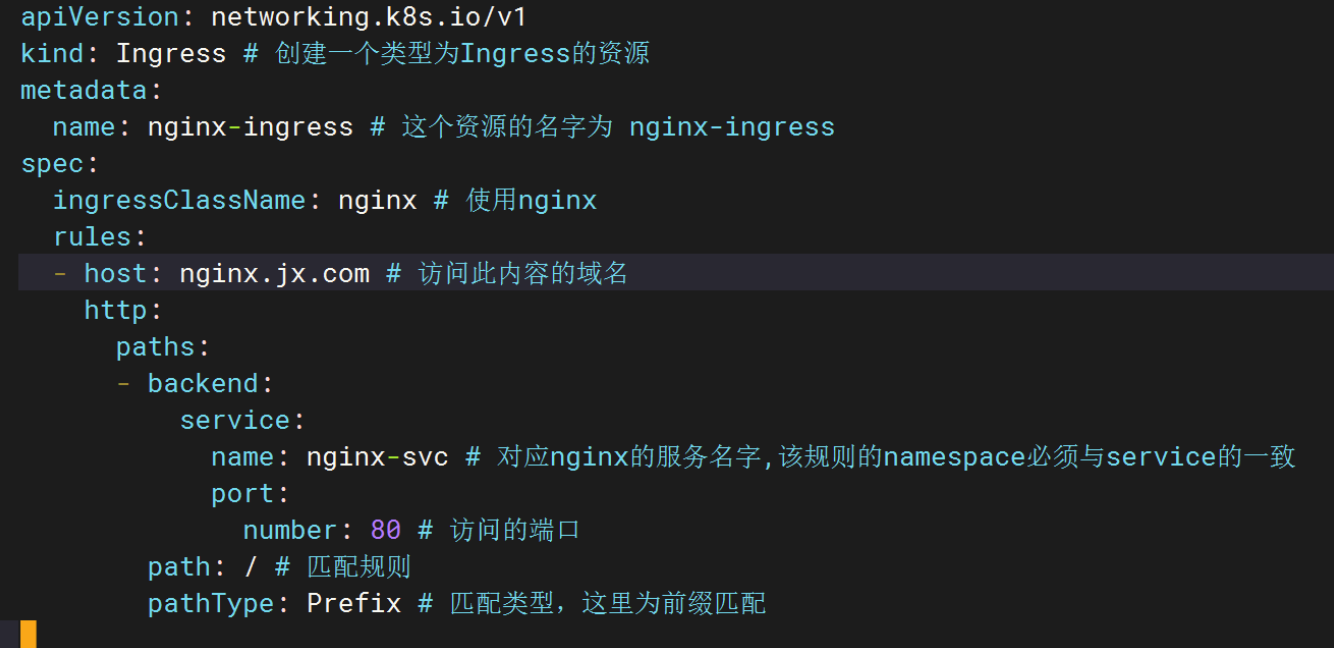
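The two manual `deploy.yaml` edits above (removing the digests and switching the Service type) can also be done with `sed`. This is a sketch that assumes the digests have the usual `@sha256:<64 hex characters>` form and that `type: LoadBalancer` appears only in the ingress-nginx-controller Service. `prepare_deploy_yaml` is a hypothetical name, and `sed -i` is the GNU sed syntax found on CentOS-style hosts. The hands-on walkthrough below makes the type change with `kubectl edit` after creation instead; either route works.

```bash
# Hypothetical helper: apply both manual edits to deploy.yaml in place.
prepare_deploy_yaml() {
  local file="${1:-deploy.yaml}"
  # 1) drop the "@sha256:<digest>" suffix so images are referenced by tag only
  # 2) switch the controller Service from LoadBalancer to NodePort
  sed -i -e 's/@sha256:[0-9a-f]\{64\}//' \
         -e 's/type: LoadBalancer/type: NodePort/' "$file"
}
```

Running `grep -n 'image:' deploy.yaml` afterwards is a quick way to confirm that all three image lines end in a plain tag.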

Then edit the Ingress rule file:

```shell
vim ingress-http.yaml
```

Hands-on walkthrough:

Verification: NodePort Mode

```shell
# Copy the image archive to each node, then load it
[root@k8s-node1 ~]# docker load -i ingress-1.11.tar
[root@k8s-node2 ~]# docker load -i ingress-1.11.tar
[root@k8s-master ~]# rz
rz waiting to receive.**
[root@k8s-master ~]# unzip ingress-nginx-controller-v1.11.3.zip
Archive:  ingress-nginx-controller-v1.11.3.zip
[root@k8s-master ~]# cd ingress-nginx-controller-v1.11.3/
[root@k8s-master ingress-nginx-controller-v1.11.3]# ls
build  changelog  Changelog.md  charts  cloudbuild.yaml  cmd  code-of-conduct.md  CONTRIBUTING.md
deploy  docs  ginkgo_upgrade.md  GOLANG_VERSION  go.mod  go.sum  go.work  go.work.sum
hack  images  internal  ISSUE_TRIAGE.md  LICENSE  magefiles  Makefile  MANUAL_RELEASE.md
mkdocs.yml  netlify.toml  NEW_CONTRIBUTOR.md  NEW_RELEASE_PROCESS.md  NGINX_BASE  OWNERS  OWNERS_ALIASES  pkg
README.md  rootfs  SECURITY_CONTACTS  SECURITY.md  TAG  test  version
# Manifest path: /root/ingress-nginx-controller-v1.11.3/deploy/static/provider/cloud/
[root@k8s-master ingress-nginx-controller-v1.11.3]# cd deploy/
[root@k8s-master deploy]# ls
grafana  prometheus  README.md  static
[root@k8s-master deploy]# cd static/
[root@k8s-master static]# ls
provider
[root@k8s-master static]# cd provider/
[root@k8s-master provider]# ls
aws  baremetal  cloud  do  exoscale  kind  oracle  scw
[root@k8s-master provider]# cd cloud/
[root@k8s-master cloud]# ls
deploy.yaml  kustomization.yaml
# Edit the manifest: in the three image references, delete everything from "@" to the end of the line
[root@k8s-master cloud]# vim deploy.yaml
# Create the resources
[root@k8s-master cloud]# kubectl create -f deploy.yaml
[root@k8s-master cloud]# kubectl -n ingress-nginx get pod
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-qkm2p        0/1     Completed   0          45s
ingress-nginx-admission-patch-n27t5         0/1     Completed   0          45s
ingress-nginx-controller-7d7455dcf8-84grm   1/1     Running     0          45s
# Check which node each Pod runs on
[root@k8s-master cloud]# kubectl -n ingress-nginx get pod -o wide
NAME                                        READY   STATUS      RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-qkm2p        0/1     Completed   0          71s   10.244.36.104   k8s-node1   <none>           <none>
ingress-nginx-admission-patch-n27t5         0/1     Completed   0          71s   10.244.36.105   k8s-node1   <none>           <none>
ingress-nginx-controller-7d7455dcf8-84grm   1/1     Running     0          71s   10.244.36.106   k8s-node1   <none>           <none>
# EXTERNAL-IP stays <pending> because no load balancer is available yet, so the LoadBalancer type has to be changed
[root@k8s-master cloud]# kubectl -n ingress-nginx get svc
NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.99.187.201   <pending>     80:30237/TCP,443:30538/TCP   106s
ingress-nginx-controller-admission   ClusterIP      10.101.75.87    <none>        443/TCP                      106s
[root@k8s-master cloud]# kubectl -n ingress-nginx edit svc ingress-nginx-controller
  type: NodePort      # change LoadBalancer to NodePort here, then save and quit
# Check the Service again: the change has taken effect
[root@k8s-master cloud]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.99.187.201   <none>        80:30237/TCP,443:30538/TCP   7m25s
ingress-nginx-controller-admission   ClusterIP   10.101.75.87    <none>        443/TCP                      7m25s
```

The type of the Service created next does not matter.

```shell
[root@k8s-master cloud]# vim nginx-ingress.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy
  name: nginx-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
        - image: nginx:latest
          imagePullPolicy: IfNotPresent
          name: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-deploy
  name: nginx-svc        # remember this Service name; the Ingress rule refers to it
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: nginx-deploy
  type: ClusterIP        # any type works
```

```shell
# Submit
[root@k8s-master cloud]# kubectl create -f nginx-ingress.yaml
deployment.apps/nginx-deploy created
service/nginx-svc created
# The three replica Pods are running
[root@k8s-master cloud]# kubectl get pod
NAME                            READY   STATUS    RESTARTS      AGE
nginx-89f8c6894-jdx6b           1/1     Running   1 (19h ago)   5d18h
nginx-deploy-7bd594f975-lh24s   1/1     Running   0             5m13s
nginx-deploy-7bd594f975-ns2x7   1/1     Running   0             5m13s
nginx-deploy-7bd594f975-z5whx   1/1     Running   0             5m13s
```

Step 2: edit the Ingress rule file.

```shell
[root@k8s-master cloud]# vim ingress-http.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress                 # create a resource of type Ingress
metadata:
  name: nginx-ingress         # name of this resource
spec:
  ingressClassName: nginx     # handled by the nginx Ingress Controller
  rules:
    - host: nginx.jx.com      # domain name used to reach this content
      http:
        paths:
          - backend:
              service:
                name: nginx-svc   # the nginx Service; this Ingress must be in the same namespace as the Service
                port:
                  number: 80      # Service port
            path: /               # path to match
            pathType: Prefix      # match type: prefix match
```

About `pathType`:

- Exact: the rule matches only when the request path is exactly the configured path (case-sensitive). For example, with path `/api/v1/resource` and `pathType: Exact`, only `/api/v1/resource` matches. `/api/v1/resource/` (trailing slash) and `/api/v1/resource/1` do not match. The query string is not part of the path, so `/api/v1/resource?id=1` does match.
- Prefix: the rule matches requests whose path starts with the configured path, compared element by element on `/`-separated segments. For example, with path `/api/v1` and `pathType: Prefix`, the paths `/api/v1`, `/api/v1/`, `/api/v1/resource`, and `/api/v1/resource/1` all match. `/api/v1x` does not match, because `v1x` is a different path element.

```shell
[root@k8s-master cloud]# kubectl apply -f ingress-http.yaml
ingress.networking.k8s.io/nginx-ingress created
[root@k8s-master cloud]# kubectl get ingress
NAME            CLASS   HOSTS          ADDRESS         PORTS   AGE
nginx-ingress   nginx   nginx.jx.com   10.99.187.201   80      54s
[root@k8s-master cloud]# kubectl describe ingress
Name:             nginx-ingress
Labels:           <none>
Namespace:        default
Address:          10.99.187.201
Ingress Class:    nginx
Default backend:
Rules:
  Host          Path  Backends
  ----          ----  --------
  nginx.jx.com        # focus here: requests are forwarded to the backends through the Service
                /     nginx-svc:80 (10.244.169.162:80,10.244.36.107:80,10.244.36.108:80)
Annotations:      <none>
Events:
  Type    Reason  Age                From                      Message
  ----    ------  ----               ----                      -------
  Normal  Sync    23s (x2 over 63s)  nginx-ingress-controller  Scheduled for sync
# Take a look at the Lua module
[root@k8s-master cloud]# kubectl -n ingress-nginx get pod
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-qkm2p        0/1     Completed   0          39m
ingress-nginx-admission-patch-n27t5         0/1     Completed   0          39m
ingress-nginx-controller-7d7455dcf8-84grm   1/1     Running     0          39m
[root@k8s-master cloud]# cd
[root@k8s-master ~]# kubectl -n ingress-nginx exec -it ingress-nginx-controller-7d7455dcf8-84grm -- bash
ingress-nginx-controller-7d7455dcf8-84grm:/etc/nginx$ ls
ingress-nginx-controller-7d7455dcf8-84grm:/etc/nginx$ vi nginx.conf
```

```nginx
# Under the hood, routing works by calling Lua modules
server {
    server_name nginx.jx.com ;
    http2 on;
    # listening ports
    listen 80 ;
    listen [::]:80 ;
    listen 443 ssl;
    listen [::]:443 ssl;
    set $proxy_upstream_name "-";
    ssl_certificate_by_lua_block {
        certificate.call()
    }
    location / {
        # variables
        set $namespace      "default";
        set $ingress_name   "nginx-ingress";
        set $service_name   "nginx-svc";
        set $service_port   "80";
        set $location_path  "/";
        set $global_rate_limit_exceeding n;
```

Now the service can be accessed.

```shell
# First check the NodePort with ipvsadm; install and configure ipvsadm
yum install ipvsadm -y
# Verify the installation
ipvsadm --version
# Load the core IPVS kernel module
modprobe ip_vs
# Check the loaded modules
lsmod | grep ip_vs
# IPVS mode requires the ipvs kernel modules (already installed when the cluster was deployed); otherwise kube-proxy falls back to iptables
# Enable IPVS (cm = ConfigMap): in the opened configuration, set mode: "ipvs"
[root@k8s-master01 ~]# kubectl edit cm kube-proxy -n kube-system
# Restart the kube-proxy Pods
[root@k8s-master01 ~]# kubectl delete pod -l k8s-app=kube-proxy -n kube-system
[root@k8s-master ~]# ipvsadm -Ln | grep 30237
TCP  172.17.0.1:30237 rr
TCP  192.168.158.33:30237 rr
TCP  10.244.235.192:30237 rr
# The access port is 30237
[root@k8s-master ~]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.99.187.201   <none>        80:30237/TCP,443:30538/TCP   74m
ingress-nginx-controller-admission   ClusterIP   10.101.75.87    <none>        443/TCP                      74m
# Add a hosts record on the machine used for access. The ingress controller runs on k8s-node1
# (see the "get pod -o wide" output above), so map the domain to node1's IP, 192.168.158.34
[root@k8s-master ~]# vim /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.158.33 k8s-master
192.168.158.34 k8s-node1
192.168.158.35 k8s-node2
####
192.168.158.34 nginx.jx.com
# Test: only access by domain name works, because the Ingress rule matches the request's Host header (nginx.jx.com)
[root@k8s-master ~]# curl nginx.jx.com:30237
Welcome to nginx! html { color-scheme: light dark; } body { width: 35em; margin: 0 auto; font-family: Tahoma, Verdana, Arial, sans-serif; } Welcome to nginx!
If you see this page, the nginx web server is successfully installed and working. Further configuration is required.
For online documentation and support please refer to nginx.org.
Commercial support is available at nginx.com.
Thank you for using nginx.
```
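Every machine that should resolve `nginx.jx.com` needs the same hosts entry. Here is a minimal sketch of an idempotent helper that adds an `IP NAME` mapping only if it is not already present, so that rerunning it does not create duplicate lines. `add_host_entry` is a hypothetical name, and the optional third argument (defaulting to `/etc/hosts`) exists mainly so the helper can be tried on a scratch file. Writing to `/etc/hosts` needs root.

```bash
# Hypothetical helper: add_host_entry IP NAME [FILE]
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if some line already maps IP to NAME (NAME may be any alias field)
  if ! awk -v ip="$ip" -v name="$name" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Example, run on each machine: `add_host_entry 192.168.158.34 nginx.jx.com`.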

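Problem: the domain could be reached from the master node but not from the other nodes, because those nodes had no hosts entry for nginx.jx.com.

Fix: on the master and on every node, add the node IPs and the domain name to /etc/hosts:

```
####
192.168.158.34 nginx.jx.com
192.168.158.35 nginx.jx.com
```

After that, every node can access the service normally.

Change the configuration to 1 replica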

```shell
[root@k8s-master cloud]# vim nginx-ingress.yaml      # set replicas: 1
[root@k8s-master cloud]# kubectl apply -f nginx-ingress.yaml
service/nginx-svc configured
[root@k8s-master cloud]# kubectl get po
nginx-deploy-7bd594f975-lh24s   1/1   Running   0   97m
[root@k8s-master cloud]# kubectl -n ingress-nginx get svc
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.99.187.201   <none>        80:30237/TCP,443:30538/TCP   114m
ingress-nginx-controller-admission   ClusterIP   10.101.75.87    <none>        443/TCP                      114m
[root@k8s-master cloud]# kubectl -n ingress-nginx get pod -o wide
NAME                                        READY   STATUS      RESTARTS   AGE    IP              NODE        NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-qkm2p        0/1     Completed   0          114m   10.244.36.104   k8s-node1   <none>           <none>
ingress-nginx-admission-patch-n27t5         0/1     Completed   0          114m   10.244.36.105   k8s-node1   <none>           <none>
ingress-nginx-controller-7d7455dcf8-84grm   1/1     Running     0          114m   10.244.36.106   k8s-node1   <none>           <none>
[root@k8s-master cloud]# kubectl get po -o wide
NAME                            READY   STATUS    RESTARTS      AGE     IP               NODE        NOMINATED NODE   READINESS GATES
nginx-89f8c6894-jdx6b           1/1     Running   1 (21h ago)   5d19h   10.244.36.96     k8s-node1   <none>           <none>
nginx-deploy-647c54f577-hqkmf   1/1     Running   0             13s     10.244.36.109    k8s-node1   <none>           <none>
pod-controller-7nlxv            1/1     Running   1 (21h ago)   5d7h    10.244.36.98     k8s-node1   <none>           <none>
pod-controller-zn82f            1/1     Running   1 (31h ago)   5d7h    10.244.169.161   k8s-node2   <none>           <none>
```

Verification: LoadBalancer Mode

Change the ARP mode: enable strict ARP
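The article gives no commands for this step. MetalLB's installation documentation states that when kube-proxy runs in IPVS mode (as configured in the NodePort section), `strictARP` must be enabled. The documented approach pipes the kube-proxy ConfigMap through `sed`. The sketch below wraps that `sed` expression in a hypothetical function, `enable_strict_arp`, so it can be checked on sample text before touching the cluster.

```bash
# Hypothetical helper: reads the kube-proxy ConfigMap YAML on stdin and
# writes it to stdout with strictARP switched from false to true.
enable_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}
```

Usage against the cluster:

`kubectl get configmap kube-proxy -n kube-system -o yaml | enable_strict_arp | kubectl apply -f - -n kube-system`

You may then need to restart the kube-proxy Pods for the setting to take effect, as was done for the IPVS change earlier.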

Set up MetalLB to support LoadBalancer
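The transcript that follows writes an `IPAddressPool.yaml`, but its content was lost from the original. Below is a minimal sketch that writes both MetalLB resources. The pool name `planip-pool` comes from the article. The address range `192.168.158.40-192.168.158.50` is only an assumption: it was chosen to include the addresses that show up later (.40 and .41) and to sit in the nodes' 192.168.158.x segment. Replace it with free addresses from your own network. `write_metallb_config` is a hypothetical helper name.

```bash
# Hypothetical helper: write_metallb_config [RANGE] [OUTPUT_DIR]
write_metallb_config() {
  local range="${1:-192.168.158.40-192.168.158.50}"   # ASSUMED range; adjust to your network
  local dir="${2:-.}"
  cat > "$dir/IPAddressPool.yaml" <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: planip-pool
  namespace: metallb-system
spec:
  addresses:
    - ${range}
EOF
  cat > "$dir/L2Advertisement.yaml" <<'EOF'
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: planip-pool
  namespace: metallb-system
spec:
  ipAddressPools:
    - planip-pool   # must match the IPAddressPool name above
EOF
}
```

Apply both files only after the MetalLB Pods from `metallb-native.yaml` are running, because the `metallb.io` resource types do not exist until then.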

```shell
[root@k8s-master ~]# unzip metallb-0.14.8.zip
[root@k8s-master ~]# cd /root/metallb-0.14.8/config/manifests
[root@k8s-master manifests]# ls
metallb-frr-k8s-prometheus.yaml  metallb-frr-prometheus.yaml  metallb-native-prometheus.yaml
metallb-frr-k8s.yaml             metallb-frr.yaml             metallb-native.yaml
[root@k8s-master manifests]# kubectl apply -f metallb-native.yaml
[root@k8s-master manifests]# cat > IPAddressPool.yaml <<EOF
# ... (the IPAddressPool manifest is missing from the original transcript)
[root@k8s-master manifests]# cat > L2Advertisement.yaml <<EOF
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: planip-pool
  namespace: metallb-system
spec:
  ipAddressPools:
  - planip-pool        # must match the name of the IP address pool above
EOF
[root@k8s-master manifests]# vim L2Advertisement.yaml
[root@k8s-master manifests]# kubectl apply -f IPAddressPool.yaml
ipaddresspool.metallb.io/planip-pool created
[root@k8s-master manifests]# kubectl apply -f L2Advertisement.yaml
l2advertisement.metallb.io/planip-pool created
[root@k8s-master manifests]# kubectl -n metallb-system get pod
NAME                          READY   STATUS    RESTARTS   AGE
controller-77676c78d9-495lv   1/1     Running   0          2m34s
speaker-5pc9l                 1/1     Running   0          2m34s
speaker-gtdxh                 1/1     Running   0          2m34s
speaker-nw2dp                 1/1     Running   0          2m34s
[root@k8s-master manifests]# ls
IPAddressPool.yaml               metallb-frr-k8s.yaml         metallb-native-prometheus.yaml
L2Advertisement.yaml             metallb-frr-prometheus.yaml  metallb-native.yaml
metallb-frr-k8s-prometheus.yaml  metallb-frr.yaml
```

Testing a plain Service

```shell
[root@k8s-master cloud]# kubectl -n ingress-nginx get svc
NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.99.187.201   192.168.158.41   80:30237/TCP,443:30538/TCP   4h20m
ingress-nginx-controller-admission   ClusterIP      10.101.75.87    <none>           443/TCP                      4h20m
# The 192.168.158.40 of nginx-svc1 is an external (virtual) IP that the load balancer (MetalLB) assigned to it
[root@k8s-master cloud]# kubectl get svc
NAME                  TYPE           CLUSTER-IP       EXTERNAL-IP       PORT(S)         AGE
kubernetes            ClusterIP      10.96.0.1        <none>            443/TCP         7d17h
my-external-service   ExternalName   <none>           api.example.com   <none>          5d4h
nginx-svc             ClusterIP      10.98.123.137    <none>            80/TCP          4h7m
nginx-svc-nodeport    NodePort       10.105.189.102   <none>            80:30080/TCP    5d22h
nginx-svc1            LoadBalancer   10.110.71.147    192.168.158.40    80:31607/TCP    42m
svc-test              NodePort       10.110.238.126   <none>            808:31807/TCP   19h
[root@k8s-master01 ingress-controller]# cat nginx.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy1
  name: nginx-deploy1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deploy1
  template:
    metadata:
      labels:
        app: nginx-deploy1
    spec:
      containers:
        - image: nginx
          imagePullPolicy: IfNotPresent
          name: nginx1
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-deploy1
  name: nginx-svc1
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: nginx-deploy1
  type: LoadBalancer   # any type works behind an Ingress; LoadBalancer lets MetalLB give it an external IP for the direct test
```

```shell
# Submit
[root@k8s-master01 ingress-controller]# kubectl apply -f nginx.yaml
[root@k8s-master cloud]# kubectl get pod
NAME                             READY   STATUS    RESTARTS      AGE
nginx-89f8c6894-jdx6b            1/1     Running   1 (34h ago)   6d9h
nginx-deploy-647c54f577-hqkmf    1/1     Running   0             13h     # Pod created from nginx-ingress.yaml
nginx-deploy1-75c4474d6d-7nbsp   1/1     Running   0             12h     # Pods created from nginx.yaml
nginx-deploy1-75c4474d6d-7vpql   1/1     Running   0             12h
nginx-deploy1-75c4474d6d-qjq4m   1/1     Running   0             12h
pod-controller-7nlxv             1/1     Running   1 (34h ago)   5d21h
pod-controller-zn82f             1/1     Running   1 (45h ago)   5d21h
# Check the Services: the IP address pool assigned 192.168.158.40
[root@k8s-master cloud]# kubectl get svc
NAME                  TYPE           CLUSTER-IP       EXTERNAL-IP       PORT(S)        AGE
kubernetes            ClusterIP      10.96.0.1        <none>            443/TCP        8d
my-external-service   ExternalName   <none>           api.example.com   <none>         5d16h
nginx-svc             ClusterIP      10.98.123.137    <none>            80/TCP         15h
nginx-svc-nodeport    NodePort       10.105.189.102   <none>            80:30080/TCP   6d9h
nginx-svc1            LoadBalancer   10.110.71.147    192.168.158.40    80:31607/TCP   12h
# Test access
[root@k8s-master01 ingress-controller]# curl 192.168.158.40
Welcome to nginx! html { color-scheme: light dark; } body { width: 35em; margin: 0 auto; font-family: Tahoma, Verdana, Arial, sans-serif; } Welcome to nginx!
If you see this page, the nginx web server is successfully installed and working. Further configuration is required.
For online documentation and support please refer to nginx.org.
Commercial support is available at nginx.com.
Thank you for using nginx.
```
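MetalLB may take a moment to assign the address. Instead of rerunning `kubectl get svc`, a small polling helper can read the assigned address from the Service status with a jsonpath query. This is a sketch: `wait_for_external_ip` and its default 60-second timeout are hypothetical choices.

```bash
# Hypothetical helper: wait_for_external_ip NAMESPACE SERVICE [TIMEOUT_SECONDS]
# Prints the first LoadBalancer ingress IP, or fails after the timeout.
wait_for_external_ip() {
  local ns="$1" svc="$2" timeout="${3:-60}" ip=""
  local deadline=$(( SECONDS + timeout ))
  while (( SECONDS < deadline )); do
    ip=$(kubectl -n "$ns" get svc "$svc" \
         -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [[ -n "$ip" ]]; then
      echo "$ip"
      return 0
    fi
    sleep 2
  done
  echo "timed out waiting for an EXTERNAL-IP on $ns/$svc" >&2
  return 1
}
```

Example: `curl "http://$(wait_for_external_ip default nginx-svc1)/"`.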

Add a second domain to the earlier Ingress rule file:

```shell
[root@k8s-master manifests]# cd /root/ingress-nginx-controller-v1.11.3/deploy/static/provider/cloud/
[root@k8s-master cloud]# vim ingress-http.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress                 # create a resource of type Ingress
metadata:
  name: nginx-ingress         # name of this resource
spec:
  ingressClassName: nginx     # use nginx
  rules:
    - host: nginx.jx.com      # domain name used to reach this content
      http:
        paths:
          - backend:
              service:
                name: nginx-svc    # the first nginx Service
                port:
                  number: 80       # Service port
            path: /                # path to match
            pathType: Prefix       # prefix match
    - host: nginx2.jx.com     # the second domain name
      http:
        paths:
          - backend:
              service:
                name: nginx-svc1   # the second nginx Service
                port:
                  number: 80       # Service port
            path: /                # path to match
            pathType: Prefix       # prefix match
```

After editing, apply the file (`kubectl apply -f ingress-http.yaml`) and check the result. Both domain names are shown:

```shell
[root@k8s-master cloud]# kubectl get ingress
NAME            CLASS   HOSTS                        ADDRESS          PORTS   AGE
nginx-ingress   nginx   nginx.jx.com,nginx2.jx.com   192.168.158.41   80      3h10m
# Check the Pods behind svc1: three replicas and their nodes are shown as expected
[root@k8s-master cloud]# kubectl get pod -o wide
NAME                             READY   STATUS    RESTARTS      AGE     IP               NODE        NOMINATED NODE   READINESS GATES
nginx-89f8c6894-jdx6b            1/1     Running   1 (34h ago)   6d9h    10.244.36.96     k8s-node1   <none>           <none>
nginx-deploy-647c54f577-hqkmf    1/1     Running   0             13h     10.244.36.109    k8s-node1   <none>           <none>
nginx-deploy1-75c4474d6d-7nbsp   1/1     Running   0             12h     10.244.36.114    k8s-node1   <none>           <none>
nginx-deploy1-75c4474d6d-7vpql   1/1     Running   0             12h     10.244.169.163   k8s-node2   <none>           <none>
nginx-deploy1-75c4474d6d-qjq4m   1/1     Running   0             12h     10.244.36.115    k8s-node1   <none>           <none>
pod-controller-7nlxv             1/1     Running   1 (34h ago)   5d21h   10.244.36.98     k8s-node1   <none>           <none>
pod-controller-zn82f             1/1     Running   1 (45h ago)   5d21h   10.244.169.161   k8s-node2   <none>           <none>
# Show the details of the Ingress domain names
[root@k8s-master manifests]# kubectl describe ingress nginx-ingress
Name:             nginx-ingress
Labels:           <none>
Namespace:        default
Address:          192.168.158.41
Ingress Class:    nginx
Default backend:
Rules:
  Host           Path  Backends
  ----           ----  --------
  nginx.jx.com
                 /     nginx-svc:80 (10.244.36.109:80)
  nginx2.jx.com
                 /     nginx-svc1:80 (10.244.169.163:80,10.244.36.114:80,10.244.36.115:80)
Annotations:      <none>
Events:
  Type    Reason  Age                    From                      Message
  ----    ------  ----                   ----                      -------
  Normal  Sync    2m49s (x4 over 3h12m)  nginx-ingress-controller  Scheduled for sync
```

Change the Ingress Controller Service type:

```shell
[root@k8s-master01 ~]# kubectl -n ingress-nginx edit svc ingress-nginx-controller
  type: LoadBalancer      # change to LoadBalancer mode
status:
  loadBalancer: {}
```

Ingress access test:

On the node where the Ingress Controller runs, access the services directly by domain name:

```shell
curl nginx.jx.com
curl nginx2.jx.com
```

Check which node the Ingress Controller runs on:

```shell
# The Ingress Controller runs on node1
[root@k8s-master cloud]# kubectl -n ingress-nginx get pod -o wide
NAME                                        READY   STATUS      RESTARTS   AGE   IP              NODE        NOMINATED NODE   READINESS GATES
ingress-nginx-admission-create-qkm2p        0/1     Completed   0          15h   10.244.36.104   k8s-node1   <none>           <none>
ingress-nginx-admission-patch-n27t5         0/1     Completed   0          15h   10.244.36.105   k8s-node1   <none>           <none>
ingress-nginx-controller-7d7455dcf8-84grm   1/1     Running     0          15h   10.244.36.106   k8s-node1   <none>           <none>
```

Check which virtual IP the load balancer assigned to the Ingress Controller's Service:

```shell
# Here it is 192.168.158.41. The goal is to reach the nginx services by domain name through 192.168.158.41;
# different domain names lead to different services
[root@k8s-master cloud]# kubectl -n ingress-nginx get svc
NAME                                 TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.99.187.201   192.168.158.41   80:30237/TCP,443:30538/TCP   15h
ingress-nginx-controller-admission   ClusterIP      10.101.75.87    <none>           443/TCP                      15h
```

Check svc1:

```shell
# The virtual IP assigned to svc1 is 192.168.158.40
# Test: opening this IP in a web browser works at this point
[root@k8s-master cloud]# kubectl get svc
NAME                  TYPE           CLUSTER-IP       EXTERNAL-IP       PORT(S)        AGE
kubernetes            ClusterIP      10.96.0.1        <none>            443/TCP        8d
my-external-service   ExternalName   <none>           api.example.com   <none>         5d16h
nginx-svc             ClusterIP      10.98.123.137    <none>            80/TCP         15h
nginx-svc-nodeport    NodePort       10.105.189.102   <none>            80:30080/TCP   6d9h
nginx-svc1            LoadBalancer   10.110.71.147    192.168.158.40    80:31607/TCP   12h
```

Testing from a client machine

On the client machine, add the Ingress Controller Service's virtual IP (192.168.158.41) and the domain names to /etc/hosts:

```shell
# Add these entries
192.168.158.41 nginx.jx.com
192.168.158.41 nginx2.jx.com
# Access from the client machine: the two domain names reach two different nginx services
[root@luo ~]# curl nginx.jx.com
12345
[root@luo ~]# curl nginx2.jx.com
Welcome to nginx! html { color-scheme: light dark; } body { width: 35em; margin: 0 auto; font-family: Tahoma, Verdana, Arial, sans-serif; } Welcome to nginx!
If you see this page, the nginx web server is successfully installed and working. Further configuration is required.
For online documentation and support please refer to nginx.org.
Commercial support is available at nginx.com.
Thank you for using nginx.
```
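If you cannot edit `/etc/hosts` on the client, curl's `--resolve HOST:PORT:ADDR` option pins a host name to an address for a single request, and it still sends the correct Host header. Here is a minimal sketch that probes several Ingress hosts through the virtual IP and prints each host with its HTTP status code. `probe_ingress_hosts` is a hypothetical name, and port 80 is assumed.

```bash
# Hypothetical helper: probe_ingress_hosts VIP HOST...
# Prints "HOST STATUS" per host; STATUS is 000 if the connection fails.
probe_ingress_hosts() {
  local vip="$1"; shift
  local host code
  for host in "$@"; do
    # --resolve maps HOST:80 to the VIP without touching /etc/hosts
    code=$(curl -s -o /dev/null -w '%{http_code}' \
           --resolve "${host}:80:${vip}" "http://${host}/")
    echo "${host} ${code}"
  done
}
```

Example: `probe_ingress_hosts 192.168.158.41 nginx.jx.com nginx2.jx.com`. A `404` usually means the request reached the controller but matched no rule (check the host name), while `000` points to a network or VIP problem.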

Troubleshooting

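When the services cannot be reached:

```shell
# Check that the Ingress is active
kubectl get ingress
kubectl describe ingress nginx-ingress
# Check which node the Ingress Controller Pod runs on
kubectl -n ingress-nginx get pod -o wide
# Check the Ingress Controller's Service and its virtual IP
kubectl -n ingress-nginx get svc
# Check the virtual IP of svc1
kubectl get svc
# Also check the IP address pool configuration; the assigned address range may be wrong
```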
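For the last check, a quick sanity test is to confirm that an assigned virtual IP falls inside the configured pool range, and that the range sits in the nodes' subnet. MetalLB's L2 mode answers ARP for these addresses on the node network, so the range normally has to be in the same segment. The sketch below uses plain bash arithmetic. The range `192.168.158.40-192.168.158.50` and the subnet `192.168.158.0/24` are assumptions inferred from the node and VIP addresses in this article, and the function names are hypothetical.

```bash
# Hypothetical helpers for checking MetalLB address settings.
ip_to_int() {  # dotted quad -> 32-bit integer
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

ip_in_range() {  # IP START-END (MetalLB range syntax)
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "${2%-*}")
  end=$(ip_to_int "${2#*-}")
  (( ip >= start && ip <= end ))
}

in_cidr() {  # IP NET/PREFIX
  local ip net len mask
  ip=$(ip_to_int "$1"); net=$(ip_to_int "${2%/*}"); len="${2#*/}"
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

Example: `ip_in_range 192.168.158.41 192.168.158.40-192.168.158.50 && in_cidr 192.168.158.41 192.168.158.0/24 && echo OK`.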