Alibaba Open-Sources LHM: A Powerful Open-Source Tool for 3D Digital Humans

Project Overview

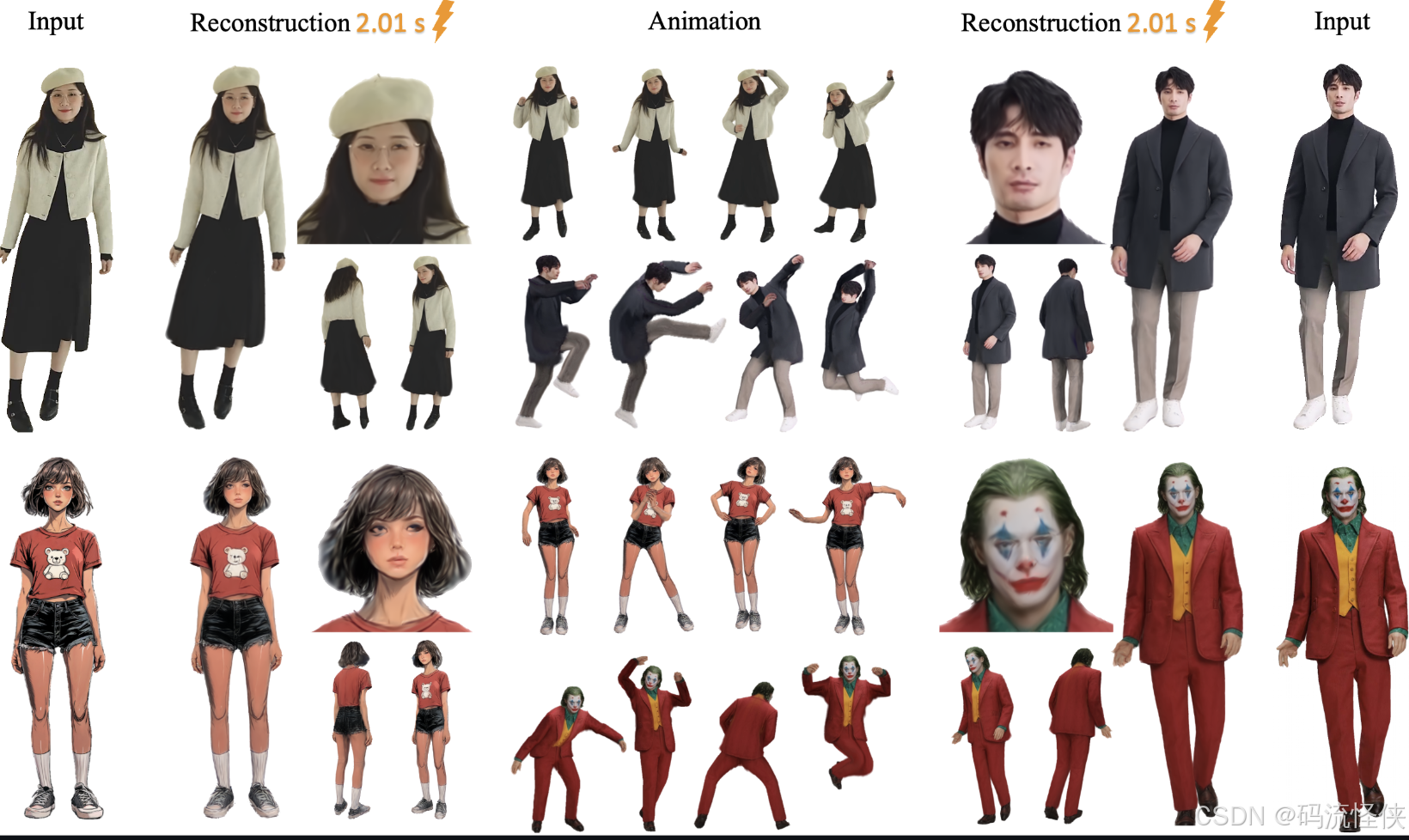

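- LHM (Large Animatable Human Reconstruction Model), open-sourced by Alibaba's Tongyi Lab, reconstructs a high-quality, animatable 3D human model from a single image. Given one input image, it produces an animatable 3D model within 5 seconds and needs no complex post-processing. It also supports real-time rendering (30 FPS) and pose control, so the model's motion can be adjusted on the fly. By combining fast single-image reconstruction with real-time animation control, LHM substantially lowers the barrier to 3D content creation and is especially well suited to rapid prototyping. Because the project is open source, developers can build on it to explore further applications.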

- GitHub: https://github.com/aigc3d/LHM

- Project page: https://lingtengqiu.github.io/LHM/

- Paper: https://arxiv.org/pdf/2503.10625

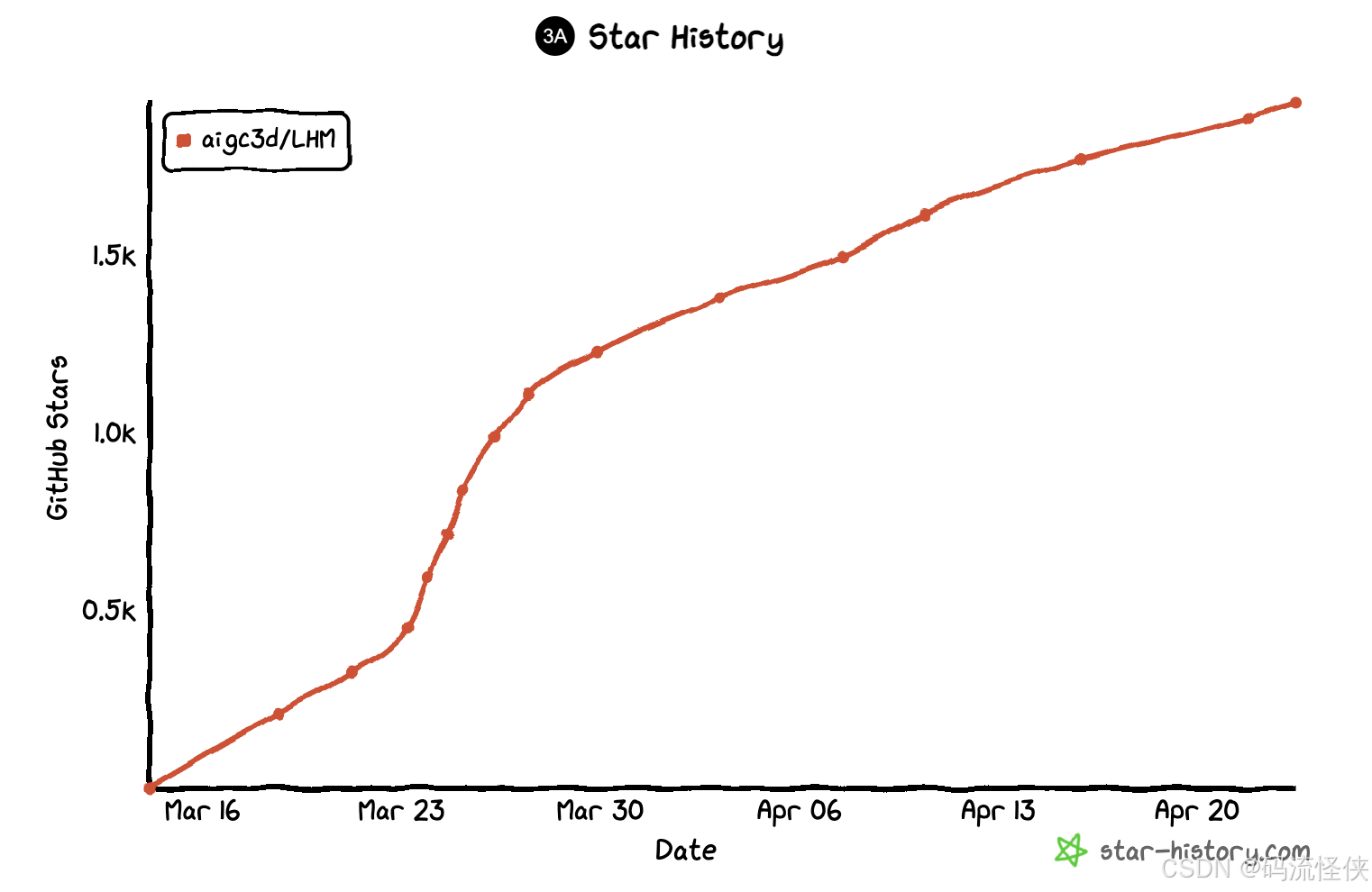

- Stars: 1.9k


Tutorial

Quick start: quick-start video tutorials are available on Bilibili.

- LHM tutorial: https://www.bilibili.com/video/BV18So4YCESk/

- LHM-ComfyUI tutorial: https://www.bilibili.com/video/BV1J9Z1Y2EiJ/

Build the environment from Docker:

    # CUDA 121
    # step0. download docker images
    wget -P ./lhm_cuda_dockers https://virutalbuy-public.oss-cn-hangzhou.aliyuncs.com/share/aigc3d/data/for_lingteng/LHM/LHM_Docker/lhm_cuda121.tar
    # step1. build from docker file
    sudo docker load -i ./lhm_cuda_dockers/lhm_cuda121.tar
    # step2. run docker_file and open the communication port 7860
    sudo docker run -p 7860:7860 -v PATH/FOLDER:DOCKER_WORKSPACES -it lhm:cuda_121 /bin/bash
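The `docker run` command above publishes port 7860 on the host, which fails if something else is already listening there. As a minimal sketch (the helper name `port_is_free` is hypothetical and not part of LHM), the check below uses only Python's standard `socket` module to test the port before starting the container.

```python
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if nothing accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex returns 0 only when a listener accepted the connection.
        return sock.connect_ex((host, port)) != 0


# Hypothetical usage: check the port that `docker run -p 7860:7860` will bind.
gradio_port_free = port_is_free(7860)
```

If the port is taken, mapping a different host port (for example `-p 7861:7860`) is one way around it.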

Environment setup:

    # clone the repository
    git clone git@github.com:aigc3d/LHM.git
    cd LHM
    # install dependencies
    # cuda 11.8
    sh ./install_cu118.sh
    pip install rembg
    # cuda 12.1
    sh ./install_cu121.sh
    pip install rembg
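Only one of the two install scripts should be run, depending on the local CUDA version. The sketch below shows one way to pick it; the function name `install_script_for` is hypothetical, and the mapping covers only the two scripts listed in the setup commands.

```python
def install_script_for(cuda_version: str) -> str:
    """Map a CUDA version string such as '12.1' to the matching install script."""
    scripts = {
        "11.8": "./install_cu118.sh",
        "12.1": "./install_cu121.sh",
    }
    # Keep only major.minor so that '11.8.0' is treated like '11.8'.
    major_minor = ".".join(cuda_version.strip().split(".")[:2])
    if major_minor not in scripts:
        raise ValueError(f"no install script listed for CUDA {cuda_version!r}")
    return scripts[major_minor]
```

For example, `install_script_for("12.1")` yields the path to pass to `sh`; any other version raises an error rather than guessing.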

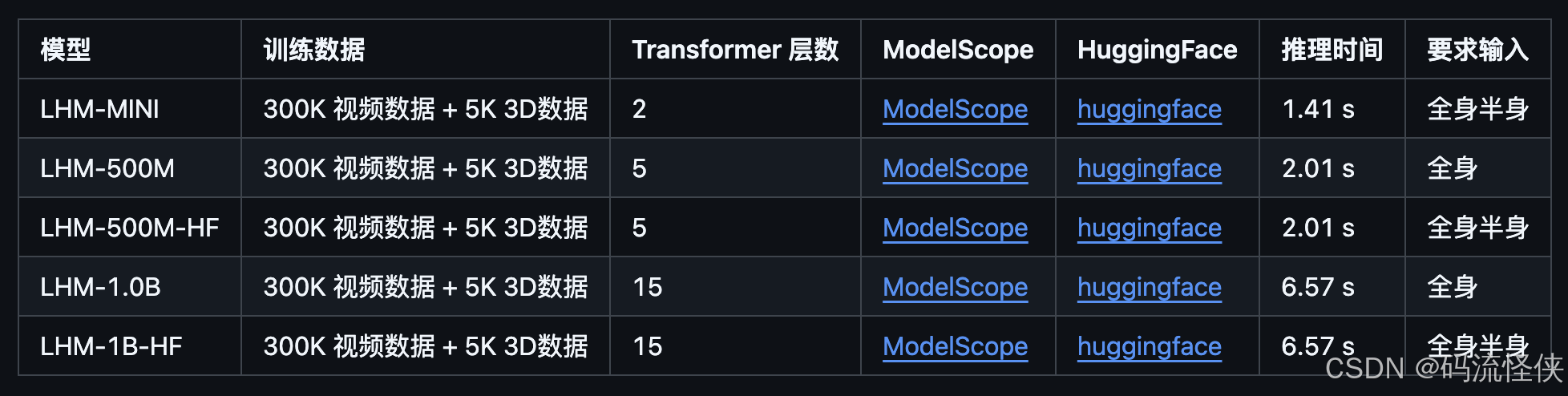

Model weights: if you have not downloaded a model, it is downloaded automatically.

- Download from HuggingFace:

      from huggingface_hub import snapshot_download
      # MINI Model
      model_dir = snapshot_download(repo_id='3DAIGC/LHM-MINI', cache_dir='./pretrained_models/huggingface')
      # 500M-HF Model
      model_dir = snapshot_download(repo_id='3DAIGC/LHM-500M-HF', cache_dir='./pretrained_models/huggingface')
      # 1B-HF Model
      model_dir = snapshot_download(repo_id='3DAIGC/LHM-1B-HF', cache_dir='./pretrained_models/huggingface')

- Download from ModelScope:

      from modelscope import snapshot_download
      # MINI Model
      model_dir = snapshot_download(model_id='Damo_XR_Lab/LHM-MINI', cache_dir='./pretrained_models')
      # 500M-HF Model
      model_dir = snapshot_download(model_id='Damo_XR_Lab/LHM-500M-HF', cache_dir='./pretrained_models')
      # 1B-HF Model
      model_dir = snapshot_download(model_id='Damo_XR_Lab/LHM-1B-HF', cache_dir='./pretrained_models')
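The two download snippets differ only in the hub, the organization prefix, and the cache directory. As a rough sketch, they can be folded into one helper. The names `repo_id_for` and `download_model` are hypothetical; the repository IDs, cache directories, and `snapshot_download` calls are the ones shown above.

```python
MODEL_NAMES = ("LHM-MINI", "LHM-500M-HF", "LHM-1B-HF")
HUB_ORGS = {"huggingface": "3DAIGC", "modelscope": "Damo_XR_Lab"}


def repo_id_for(model_name: str, hub: str = "huggingface") -> str:
    """Build the repository ID for a listed model on the chosen hub."""
    if model_name not in MODEL_NAMES:
        raise ValueError(f"unknown model: {model_name!r}")
    if hub not in HUB_ORGS:
        raise ValueError(f"unknown hub: {hub!r}")
    return f"{HUB_ORGS[hub]}/{model_name}"


def download_model(model_name: str, hub: str = "huggingface") -> str:
    """Download a model snapshot and return its local directory."""
    repo = repo_id_for(model_name, hub)
    if hub == "huggingface":
        # Imported lazily so only the hub actually used must be installed.
        from huggingface_hub import snapshot_download
        return snapshot_download(repo_id=repo, cache_dir="./pretrained_models/huggingface")
    from modelscope import snapshot_download
    return snapshot_download(model_id=repo, cache_dir="./pretrained_models")
```

Calling `download_model("LHM-MINI", hub="modelscope")` would then fetch the smallest model from ModelScope, which may be the faster route from networks where HuggingFace is slow.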

Load the prior model weights:

    # download the prior model weights
    wget https://virutalbuy-public.oss-cn-hangzhou.aliyuncs.com/share/aigc3d/data/LHM/LHM_prior_model.tar
    tar -xvf LHM_prior_model.tar

Full directory layout after downloading:

    ├── configs
    │   ├── inference
    │   ├── accelerate-train-1gpu.yaml
    │   ├── accelerate-train-deepspeed.yaml
    │   ├── accelerate-train.yaml
    │   └── infer-gradio.yaml
    ├── engine
    │   ├── BiRefNet
    │   ├── pose_estimation
    │   ├── SegmentAPI
    ├── example_data
    │   └── test_data
    ├── exps
    │   ├── releases
    ├── LHM
    │   ├── datasets
    │   ├── losses
    │   ├── models
    │   ├── outputs
    │   ├── runners
    │   ├── utils
    │   ├── launch.py
    ├── pretrained_models
    │   ├── dense_sample_points
    │   ├── gagatracker
    │   ├── human_model_files
    │   ├── sam2
    │   ├── sapiens
    │   ├── voxel_grid
    │   ├── arcface_resnet18.pth
    │   ├── BiRefNet-general-epoch_244.pth
    ├── scripts
    │   ├── exp
    │   ├── convert_hf.py
    │   └── upload_hub.py
    ├── tools
    │   ├── metrics
    ├── train_data
    │   ├── example_imgs
    │   ├── motion_video
    ├── inference.sh
    ├── README.md
    ├── requirements.txt
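A quick way to confirm that the downloads landed where the layout above expects them is to check for a few of the listed paths. This is only a sketch: the helper `missing_paths` is hypothetical, and the default list is a subset of the tree picked for illustration.

```python
from pathlib import Path

# A few entries taken from the directory layout; extend as needed.
EXPECTED_PATHS = [
    "configs",
    "engine/pose_estimation",
    "pretrained_models/human_model_files",
    "pretrained_models/sam2",
    "pretrained_models/sapiens",
    "pretrained_models/arcface_resnet18.pth",
    "train_data/example_imgs",
    "train_data/motion_video",
    "inference.sh",
]


def missing_paths(root, expected=EXPECTED_PATHS):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]
```

Running `missing_paths(".")` from the repository root returns an empty list when every listed path is present.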

Local deployment: user-defined motion input is now supported. Because the pose estimator also takes up GPU memory, running LHM-500M in the custom-motion Gradio app needs about 22 GB of GPU memory. Alternatively, you can preprocess the motion sequence in advance and use the project's earlier interface.

    # Memory-saving version; it takes more time to run.
    # The maximum supported length for 720P video is 20s.
    python ./app_motion_ms.py
    python ./app_motion_ms.py --model_name LHM-1B-HF
    # Support user motion sequence input. As the pose estimator requires some GPU memory, this Gradio application requires at least 24 GB of GPU memory to run LHM-500M.
    python ./app_motion.py
    python ./app_motion.py --model_name LHM-1B-HF
    # preprocessing video sequence
    python ./app.py
    python ./app.py --model_name LHM-1B
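Choosing between the full and memory-saving Gradio apps comes down to available GPU memory. The sketch below builds the launch command from that one number. The function `gradio_launch_command` is hypothetical; the 24 GB default mirrors the figure in the command comment and is exposed as a parameter, since the exact requirement is an assumption that may differ per model and setup.

```python
def gradio_launch_command(gpu_mem_gb, model_name=None, full_app_min_gb=24.0):
    """Pick app_motion.py or its memory-saving variant and build the command."""
    if gpu_mem_gb >= full_app_min_gb:
        script = "./app_motion.py"
    else:
        # Fall back to the memory-saving app on smaller GPUs.
        script = "./app_motion_ms.py"
    command = ["python", script]
    if model_name is not None:
        command += ["--model_name", model_name]
    return command
```

The returned list can be passed to `subprocess.run(command, check=True)` from the repository root.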

Inference: half-body images are now supported.

    # MODEL_NAME={LHM-500M, LHM-500M-HF, LHM-1B, LHM-1B-HF}
    # bash ./inference.sh LHM-500M ./train_data/example_imgs/ ./train_data/motion_video/mimo1/smplx_params
    # bash ./inference.sh LHM-1B ./train_data/example_imgs/ ./train_data/motion_video/mimo1/smplx_params
    # bash ./inference.sh LHM-500M-HF ./train_data/example_imgs/ ./train_data/motion_video/mimo1/smplx_params
    # bash ./inference.sh LHM-1B-HF ./train_data/example_imgs/ ./train_data/motion_video/mimo1/smplx_params
    # export animation video
    bash inference.sh ${MODEL_NAME} ${IMAGE_PATH_OR_FOLDER} ${MOTION_SEQ}
    # export mesh
    bash ./inference_mesh.sh ${MODEL_NAME}
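When inference is driven from a script, it helps to validate the model name before invoking the shell script. A minimal sketch follows, assuming the four model names listed in the comment above are the only valid ones; `inference_command` is a hypothetical wrapper, not part of LHM.

```python
MODEL_NAMES = {"LHM-500M", "LHM-500M-HF", "LHM-1B", "LHM-1B-HF"}


def inference_command(model_name, image_path_or_folder, motion_seq):
    """Build the argument list for exporting an animation video."""
    if model_name not in MODEL_NAMES:
        raise ValueError(f"model_name must be one of {sorted(MODEL_NAMES)}")
    # Same positional order as: bash inference.sh MODEL IMAGE MOTION_SEQ
    return ["bash", "./inference.sh", model_name, image_path_or_folder, motion_seq]
```

Passing the result to `subprocess.run(..., check=True)` from the repository root runs the same command as the shell line, and a misspelled model name fails fast in Python instead of inside the script.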

Processing video motion data:

- Download the pretrained weights used for motion extraction:

      wget -P ./pretrained_models/human_model_files/pose_estimate https://virutalbuy-public.oss-cn-hangzhou.aliyuncs.com/share/aigc3d/data/LHM/yolov8x.pt
      wget -P ./pretrained_models/human_model_files/pose_estimate https://virutalbuy-public.oss-cn-hangzhou.aliyuncs.com/share/aigc3d/data/LHM/vitpose-h-wholebody.pth

- Install the extra dependencies:

      cd ./engine/pose_estimation
      pip install mmcv==1.3.9
      pip install -v -e third-party/ViTPose
      pip install ultralytics

- Run the following command to extract motion data from a video:

      # python ./engine/pose_estimation/video2motion.py --video_path ./train_data/demo.mp4 --output_path ./train_data/custom_motion
      python ./engine/pose_estimation/video2motion.py --video_path ${VIDEO_PATH} --output_path ${OUTPUT_PATH}
      # For half-body videos, such as ./train_data/xiaoming.mp4, we recommend the following command:
      python ./engine/pose_estimation/video2motion.py --video_path ${VIDEO_PATH} --output_path ${OUTPUT_PATH} --fitting_steps 100 0

- Drive the digital human with the extracted motion data:

      # bash ./inference.sh LHM-500M-HF ./train_data/example_imgs/ ./train_data/custom_motion/demo/smplx_params
      bash inference.sh ${MODEL_NAME} ${IMAGE_PATH_OR_FOLDER} ${OUTPUT_PATH}/${VIDEO_NAME}/smplx_params
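The last step needs the path `${OUTPUT_PATH}/${VIDEO_NAME}/smplx_params`. Judging from the commented example (`demo.mp4` extracted into `./train_data/custom_motion` gives `custom_motion/demo/smplx_params`), `VIDEO_NAME` appears to be the video file name without its extension. The sketch below derives the path under that assumption; `motion_params_dir` is a hypothetical helper.

```python
from pathlib import Path


def motion_params_dir(video_path, output_path):
    """Return OUTPUT_PATH/VIDEO_NAME/smplx_params for an extracted video.

    Assumes VIDEO_NAME is the video file name without its extension,
    as suggested by the demo.mp4 example.
    """
    return Path(output_path) / Path(video_path).stem / "smplx_params"
```

The result can be passed as the third argument to `inference.sh` once `video2motion.py` has finished.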

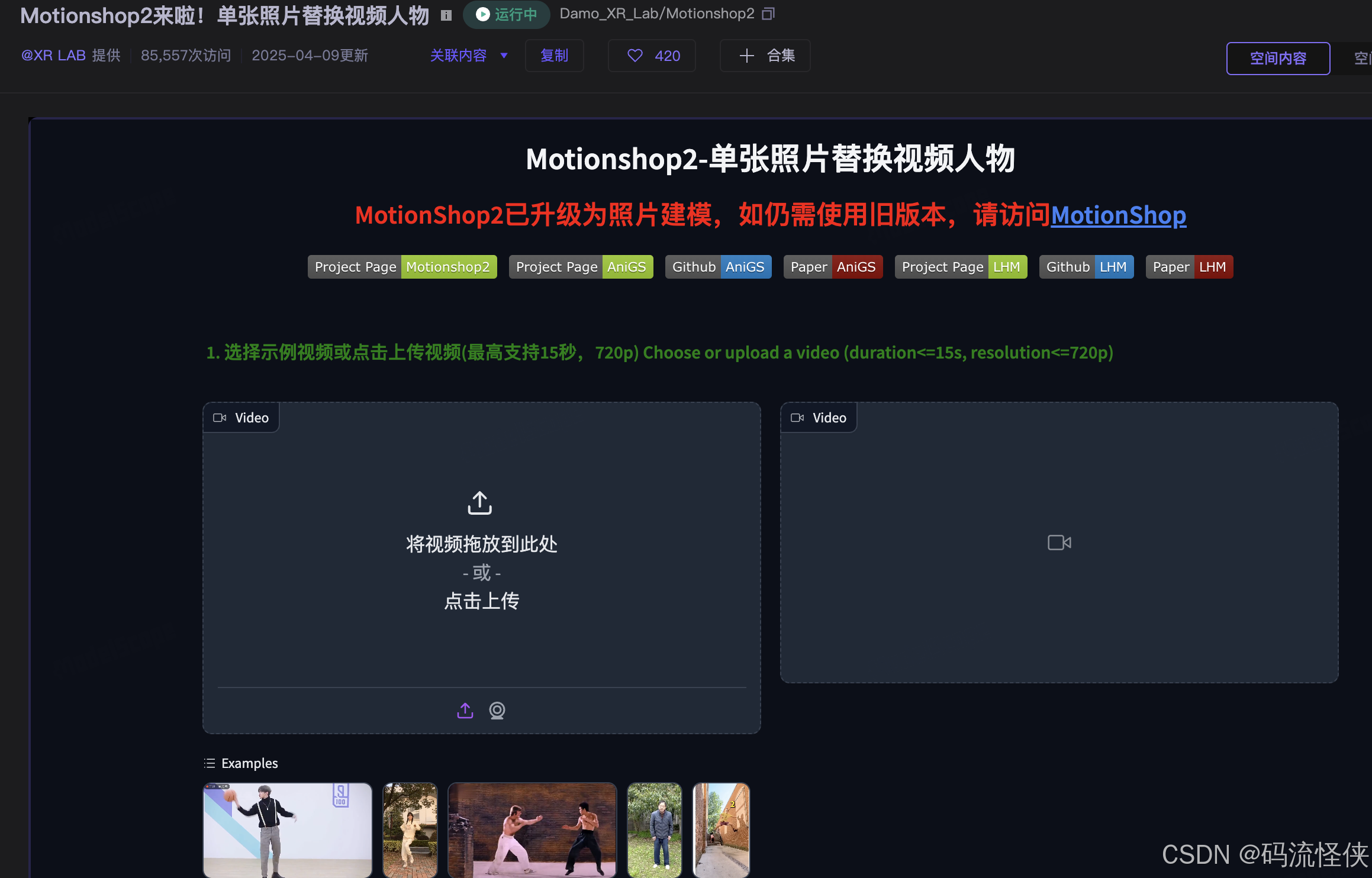

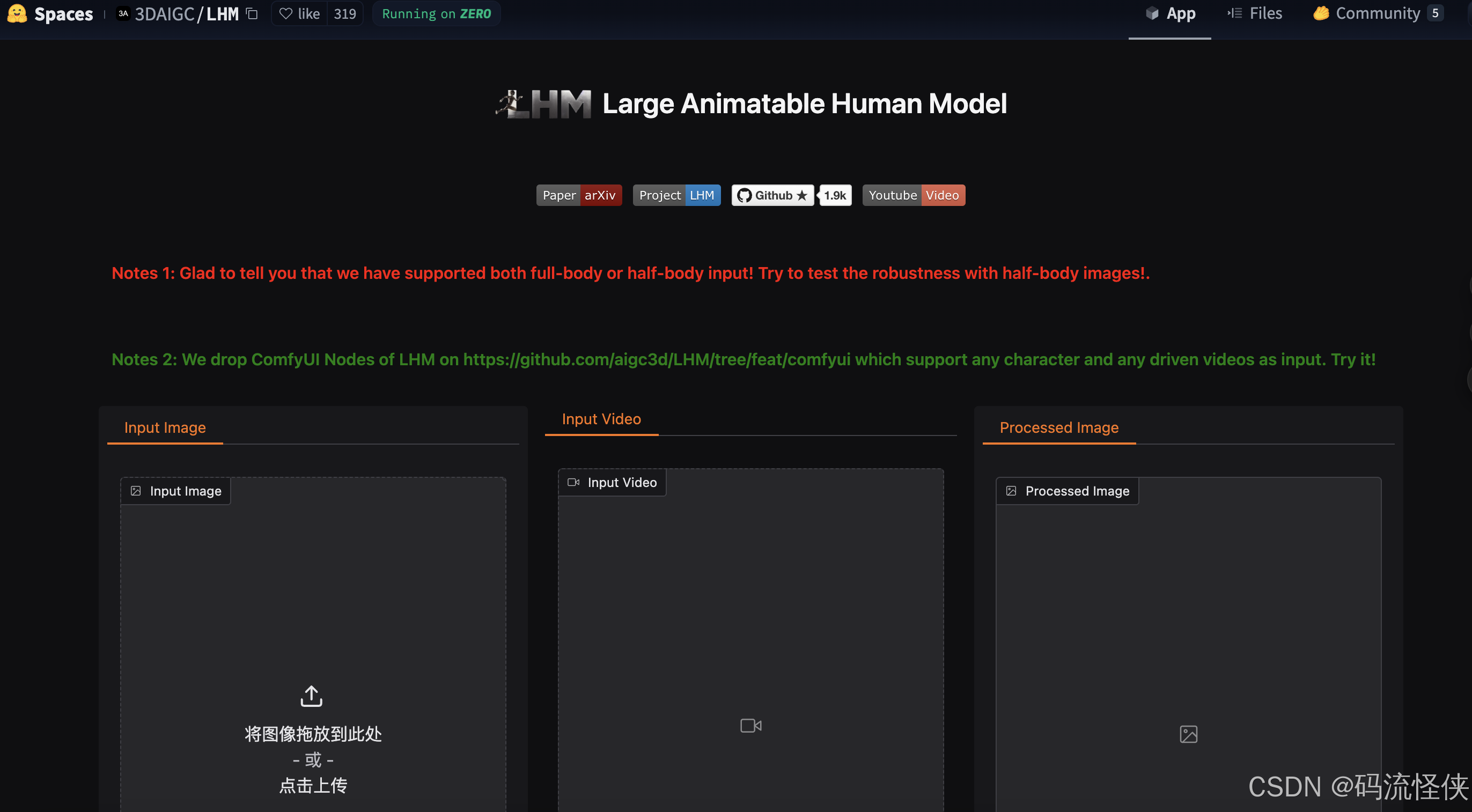

Online Demo

- Option 1 (Motionshop2): https://modelscope.cn/studios/Damo_XR_Lab/Motionshop2/summary

- Option 2 (HuggingFace): https://huggingface.co/spaces/3DAIGC/LHM

Walkthrough

-

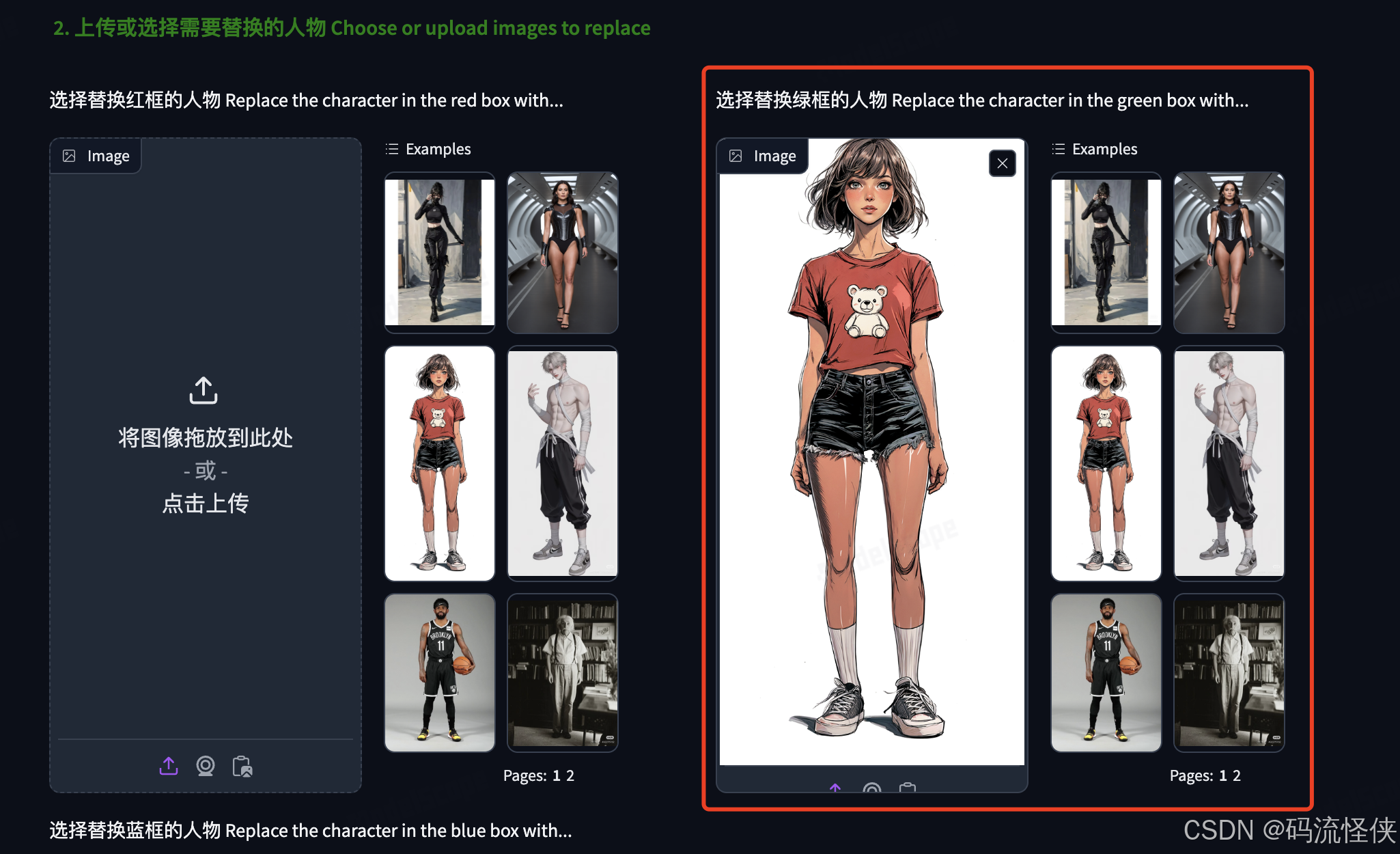

以Motionshop2为例,具体的操作过程也很简单,小白都可以直接上手。

-

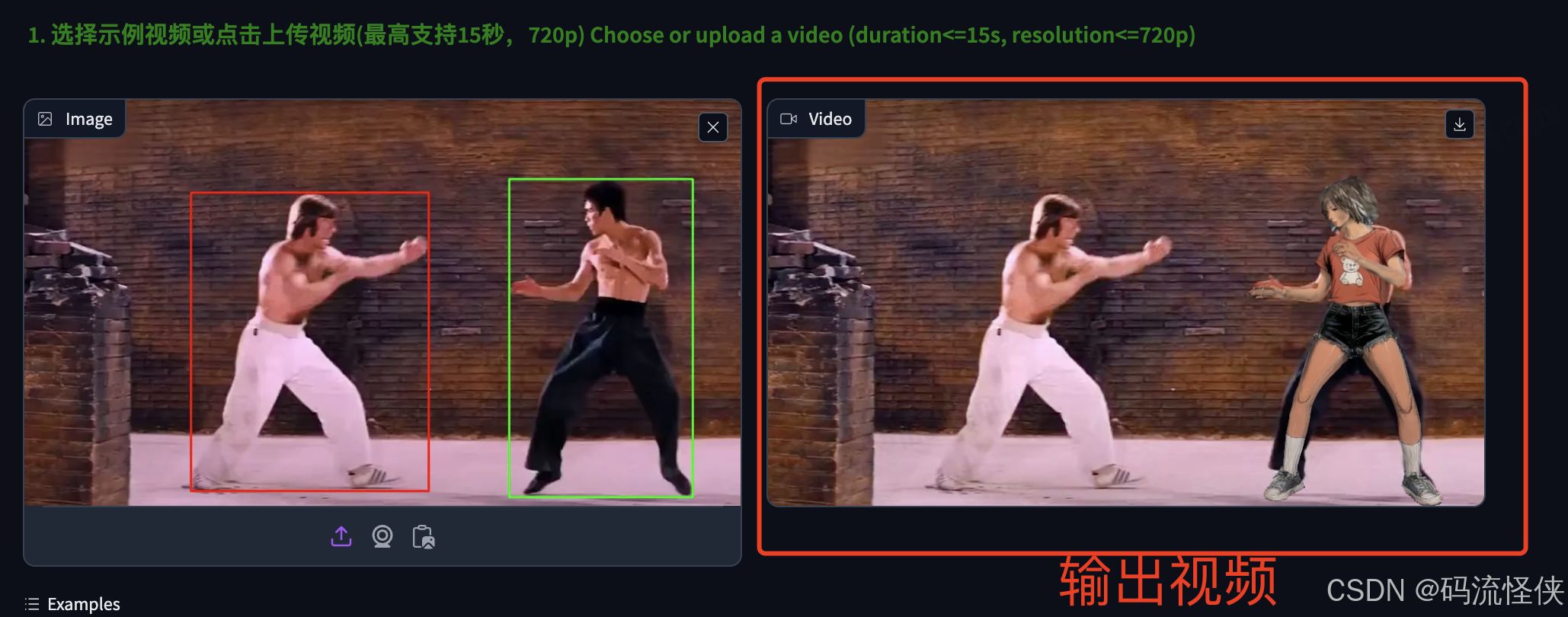

上传输入视频分析,直接用官方提供的示例视频

- Replace the person in the green box with the sample image.

-

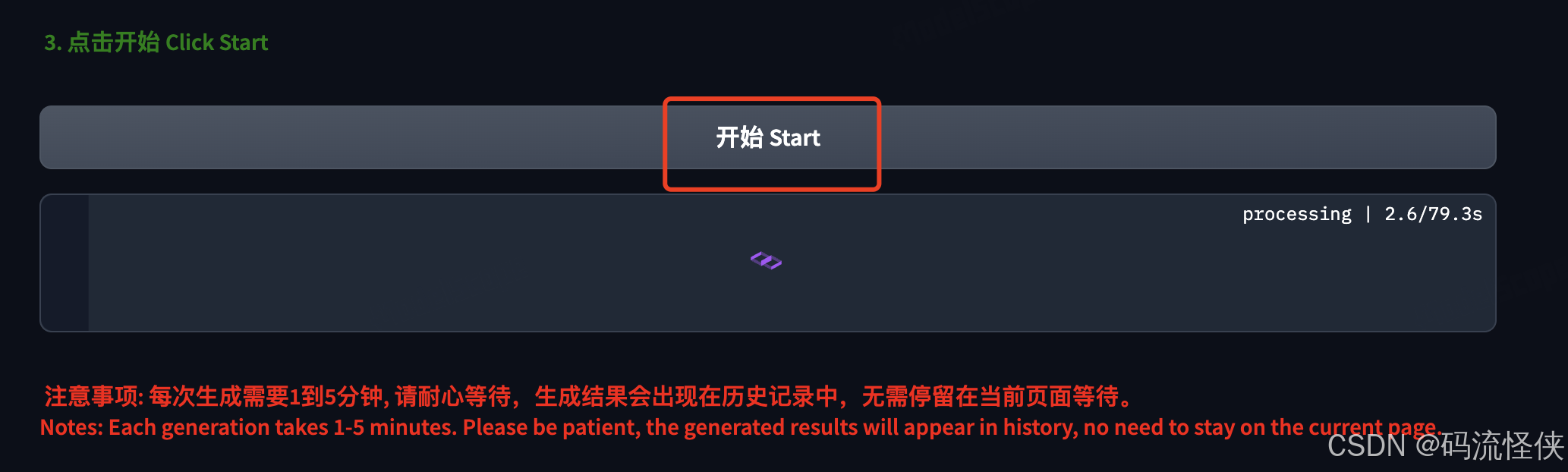

点击“开始”,等待结果

- Result: the motion matches fairly well, but the figure of Bruce Lee is still visible behind the replaced character.

- Result video: Alibaba open-source LHM 3D digital human demo