Neural Network Learning: Optimizers

1. Official documentation:

    for input, target in dataset:
        # Zero the gradients left over from the previous iteration
        optimizer.zero_grad()
        output = model(input)
        loss = loss_fn(output, target)
        loss.backward()
        optimizer.step()

Hands-on code:

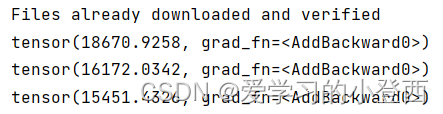

    import torch
    import torchvision
    from torch import nn
    from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
    from torch.utils.data import DataLoader

    dataset = torchvision.datasets.CIFAR10("data", train=False, download=True,
                                           transform=torchvision.transforms.ToTensor())
    # Load the dataset with a DataLoader
    dataloader = DataLoader(dataset, batch_size=1)


    # Define the network
    class Peipei(nn.Module):
        def __init__(self) -> None:
            super(Peipei, self).__init__()
            self.model1 = Sequential(
                Conv2d(3, 32, 5, padding=2, stride=1),
                MaxPool2d(2),
                Conv2d(32, 32, 5, padding=2),
                MaxPool2d(2),
                Conv2d(32, 64, 5, padding=2),
                MaxPool2d(2),
                Flatten(),
                Linear(1024, 64),
                Linear(64, 10)
            )

        def forward(self, x):
            x = self.model1(x)
            return x


    # Instantiate the network
    peipei = Peipei()
    # Define the loss function
    loss = nn.CrossEntropyLoss()
    # Choose the optimizer
    optim = torch.optim.SGD(peipei.parameters(), lr=0.01)

    for epoch in range(20):
        running_loss = 0.00
        for data in dataloader:
            imgs, targets = data
            outputs = peipei(imgs)
            result_loss = loss(outputs, targets)
            # Zero the gradients held by the optimizer's parameters
            optim.zero_grad()
            # Backpropagate to compute the gradient of every parameter;
            # the optimizer uses these gradients in step()
            result_loss.backward()
            # Update the parameters
            optim.step()
            # .item() accumulates a plain float instead of a tensor
            running_loss = running_loss + result_loss.item()
        # Total loss over this epoch
        print(running_loss)

Result: the printed loss decreases as training proceeds.
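To make the roles of `zero_grad()` and `step()` in the training loop more concrete, here is a minimal sketch. It makes two assumptions: the optimizer is plain `torch.optim.SGD` with no momentum or weight decay (as in the code above), and the names `model_a`, `model_b`, `x`, `y` and `w` are illustrative only and not part of the original code. Under those assumptions, one `step()` should match the hand-written update `p = p - lr * grad`, and calling `backward()` twice without zeroing should add the gradients together.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Two identical tiny models: one updated by the optimizer, one by hand.
model_a = nn.Linear(4, 2)
model_b = nn.Linear(4, 2)
model_b.load_state_dict(model_a.state_dict())

lr = 0.01
optim = torch.optim.SGD(model_a.parameters(), lr=lr)
loss_fn = nn.CrossEntropyLoss()

# A small random batch (hypothetical data, only for illustration).
x = torch.randn(8, 4)
y = torch.randint(0, 2, (8,))

# Update model_a with the optimizer.
optim.zero_grad()
loss_fn(model_a(x), y).backward()
optim.step()

# Update model_b manually: p <- p - lr * grad.
model_b.zero_grad()
loss_fn(model_b(x), y).backward()
with torch.no_grad():
    for p in model_b.parameters():
        p -= lr * p.grad

# Both models should now hold (numerically) the same parameters.
same = all(
    torch.allclose(pa, pb)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
print("optimizer step matches manual update:", same)

# Why zero_grad() matters: backward() adds to .grad instead of overwriting it.
w = torch.tensor(1.0, requires_grad=True)
(2 * w).backward()
first = w.grad.item()        # gradient of 2*w with respect to w
(2 * w).backward()
accumulated = w.grad.item()  # second backward is added on top of the first
print(first, accumulated)
```

Without the `zero_grad()` call, each `step()` in the training loop would act on the sum of the gradients from all previous batches rather than on the current batch alone.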