Can Baidu's open-source upload component WebUploader support resumable uploads?

Rebuilding large-file upload in Vue: from WebUploader to chunked, resumable uploads in practice

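As the project's technical lead, I recently hit a compatibility bottleneck with the WebUploader component while handling 4 GB-class file uploads (frequent out-of-memory failures, especially in IE11 and Chinese domestic browsers). After two weeks of technical research and POC validation, we settled on a chunked upload scheme based on the HTML5 File API, combined with a PHP backend that implements reliable resumable uploads. The technology selection and core implementation are shared below: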

I. Basis for technology selection

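Compatibility requirements

Chrome, Firefox, Edge, IE11 and Chinese domestic browsers (360 Secure Browser, QQ Browser, etc.) must all be covered, which rules out a pure Web Worker approach.

Performance requirements

A 4 GB file must support:

- Dynamic chunk sizing (5 MB to 10 MB, adaptive)
- Concurrent upload (3 to 5 channels)
- Instant-upload verification (MD5/SHA1)

Reliability guarantees

Resumable upload must record the upload state in IndexedDB and support:

- Recovery after a browser crash
- Retry after a network interruption
- Cross-device resume (this relies on the server-side chunk check, since IndexedDB is local to one browser)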
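The 5 MB to 10 MB adaptive range listed under the performance requirements could be driven by measured throughput. The following is a minimal sketch, assuming a hypothetical helper `nextChunkSize` and an assumed target of about 2 seconds per chunk request; neither the name nor the target comes from the original setup.

```javascript
const MIN_CHUNK = 5 * 1024 * 1024  // 5 MB lower bound from the requirements
const MAX_CHUNK = 10 * 1024 * 1024 // 10 MB upper bound from the requirements

// Hypothetical helper: derive a chunk size from the speed of a previous transfer.
// targetSeconds is an assumed tuning knob (how long one chunk request should take).
function nextChunkSize(lastChunkBytes, lastChunkMillis, targetSeconds = 2) {
  // Without a usable measurement, fall back to the smallest chunk size
  if (!(lastChunkBytes > 0) || !(lastChunkMillis > 0)) return MIN_CHUNK
  const bytesPerSecond = lastChunkBytes / (lastChunkMillis / 1000)
  const ideal = Math.round(bytesPerSecond * targetSeconds)
  // Clamp the result into the 5 MB to 10 MB range
  return Math.min(MAX_CHUNK, Math.max(MIN_CHUNK, ideal))
}
```

When chunk offsets are computed as index × chunk size, the size would have to be chosen once per file (for example after a small probe transfer) and kept fixed, otherwise chunk indices no longer line up on resume.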

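For the network-interruption case listed under the reliability guarantees, a common approach is to wrap each chunk request in a retry loop with exponential backoff. The following is a minimal sketch under that assumption; `withRetry`, `retries` and `baseDelayMs` are hypothetical names that are not part of the original code.

```javascript
// Hypothetical helper: run an async task and retry it with exponential backoff.
// `task` is any function that returns a promise, e.g. one chunk upload request.
async function withRetry(task, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await task(attempt)
    } catch (err) {
      // A deliberate cancellation (AbortController) should not be retried
      if (err && err.name === 'AbortError') throw err
      lastError = err
      if (attempt < retries) {
        // Delay doubles on every failed attempt: base, 2x base, 4x base, ...
        const delay = baseDelayMs * 2 ** attempt
        await new Promise(resolve => setTimeout(resolve, delay))
      }
    }
  }
  throw lastError
}
```

A chunk request would then be passed in as a function, so that every attempt issues a fresh request rather than reusing a settled promise.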
II. Core architecture design

Frontend implementation (Vue3 + Composition API)

    // src/utils/fileUploader.js
    export class FileChunkUploader {
      constructor(file, options = {}) {
        this.file = file
        this.chunkSize = options.chunkSize || 5 * 1024 * 1024 // 5 MB
        this.concurrent = options.concurrent || 3
        this.uploadUrl = options.uploadUrl
        this.checkUrl = options.checkUrl
        this.mergeUrl = options.mergeUrl
        this.chunks = Math.ceil(file.size / this.chunkSize)
        this.uploadedChunks = new Set()
        this.controller = new AbortController()
        this.fileId = null
      }

      // Build a file identifier: SHA-256 of the first 1 MB plus the
      // last-modified timestamp to reduce collisions.
      // crypto.subtle is only available in secure contexts (HTTPS or localhost).
      async generateFileId() {
        if (this.fileId) return this.fileId // computed once, then reused
        const buffer = await this.file.slice(0, 1024 * 1024).arrayBuffer()
        const hash = await crypto.subtle.digest('SHA-256', buffer)
        const hex = Array.from(new Uint8Array(hash))
          .map(b => b.toString(16).padStart(2, '0'))
          .join('')
        this.fileId = hex + '_' + this.file.lastModified
        return this.fileId
      }

      // Ask the server which chunks it already holds
      async checkUploadStatus() {
        const fileId = await this.generateFileId()
        const url = `${this.checkUrl}?fileId=${encodeURIComponent(fileId)}&chunks=${this.chunks}`
        const res = await fetch(url, { method: 'HEAD', signal: this.controller.signal })
        if (!res.ok) return
        // The server replies "Content-Range: 0-<lastChunkIndex>/<totalChunks>",
        // counted in chunks, not bytes
        const range = res.headers.get('Content-Range')
        const match = range && /^0-(\d+)\//.exec(range)
        if (match) {
          const uploaded = parseInt(match[1], 10) + 1
          for (let i = 0; i < uploaded; i++) this.uploadedChunks.add(i)
        }
      }

      // Core chunked-upload logic
      async upload() {
        const fileId = await this.generateFileId()
        await this.checkUploadStatus()
        const inFlight = new Set()

        for (let i = 0; i < this.chunks; i++) {
          if (this.uploadedChunks.has(i)) continue

          const start = i * this.chunkSize
          const end = Math.min(start + this.chunkSize, this.file.size)
          const formData = new FormData()
          formData.append('file', this.file.slice(start, end))
          formData.append('chunkIndex', i)
          formData.append('totalChunks', this.chunks)
          formData.append('fileId', fileId)
          formData.append('fileName', this.file.name)

          const task = fetch(this.uploadUrl, {
            method: 'POST',
            body: formData,
            signal: this.controller.signal
          }).then(res => {
            if (!res.ok) throw new Error(`Chunk ${i} upload failed`)
            this.uploadedChunks.add(i)
            return res.json()
          })

          // Concurrency control: finished tasks leave the set, and a new chunk
          // starts only when fewer than `concurrent` requests are in flight
          inFlight.add(task)
          const release = () => inFlight.delete(task)
          task.then(release, release)
          if (inFlight.size >= this.concurrent) await Promise.race(inFlight)
        }

        // Wait for the remaining requests
        await Promise.all(inFlight)

        // Trigger the merge request
        const mergeRes = await fetch(this.mergeUrl, {
          method: 'POST',
          headers: { 'Content-Type': 'application/json' },
          body: JSON.stringify({ fileId, fileName: this.file.name, totalChunks: this.chunks })
        })
        if (!mergeRes.ok) throw new Error('Merge request failed')
        return mergeRes.json()
      }

      abort() {
        this.controller.abort()
      }
    }

Backend implementation (PHP)

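    <?php
    // upload_handler.php
    header('Access-Control-Allow-Origin: *');
    header('Access-Control-Allow-Methods: POST, HEAD, OPTIONS');
    header('Access-Control-Allow-Headers: Content-Type');
    header('Access-Control-Expose-Headers: Content-Range');

    // Answer CORS preflight requests (the JSON merge request triggers one)
    if ($_SERVER['REQUEST_METHOD'] === 'OPTIONS') {
        http_response_code(204);
        exit;
    }

    $uploadDir = '/tmp/uploads/';
    if (!file_exists($uploadDir)) mkdir($uploadDir, 0777, true);

    // Accept only [A-Za-z0-9_] in fileId so it cannot escape $uploadDir
    function validFileId($id) {
        return is_string($id) && preg_match('/^[A-Za-z0-9_]+$/', $id) === 1;
    }

    function fail($code, $status) {
        http_response_code($code);
        echo json_encode(['status' => $status]);
        exit;
    }

    // Chunk upload endpoint
    if ($_SERVER['REQUEST_METHOD'] === 'POST' && isset($_FILES['file'])) {
        $chunkIndex = (int)($_POST['chunkIndex'] ?? 0);
        $totalChunks = (int)($_POST['totalChunks'] ?? 1);
        $fileId = $_POST['fileId'] ?? '';
        if (!validFileId($fileId)) fail(400, 'invalid_file_id');

        $chunkPath = $uploadDir . $fileId . '.part' . $chunkIndex;
        if (move_uploaded_file($_FILES['file']['tmp_name'], $chunkPath)) {
            // Record upload progress (optionally store it in Redis instead)
            $progressFile = $uploadDir . $fileId . '.progress';
            file_put_contents($progressFile, $chunkIndex . '/' . $totalChunks);
            http_response_code(201);
            echo json_encode(['status' => 'success', 'chunk' => $chunkIndex]);
        } else {
            fail(500, 'error');
        }
        exit;
    }

    // Merge endpoint
    if ($_SERVER['REQUEST_METHOD'] === 'POST') {
        // The client sends JSON, which PHP does not parse into $_POST
        $req = json_decode(file_get_contents('php://input'), true) ?: $_POST;
        $fileId = $req['fileId'] ?? '';
        $fileName = basename($req['fileName'] ?? ''); // strip any directory part
        if (!validFileId($fileId) || $fileName === '') fail(400, 'invalid_request');

        // Total chunk count: taken from the request, else from the progress file
        $progressFile = $uploadDir . $fileId . '.progress';
        $totalChunks = (int)($req['totalChunks'] ?? 0);
        if ($totalChunks <= 0 && is_file($progressFile)) {
            $totalChunks = (int)(explode('/', file_get_contents($progressFile))[1] ?? 0);
        }

        // Check that every chunk exists
        $allChunksExist = $totalChunks > 0;
        for ($i = 0; $i < $totalChunks; $i++) {
            if (!file_exists($uploadDir . $fileId . '.part' . $i)) {
                $allChunksExist = false;
                break;
            }
        }
        if (!$allChunksExist) fail(400, 'missing_chunks');

        $finalPath = '/uploads/' . uniqid() . '_' . $fileName;
        $fp = fopen($finalPath, 'wb');
        if (!$fp) fail(500, 'merge_error');
        for ($i = 0; $i < $totalChunks; $i++) {
            $chunkPath = $uploadDir . $fileId . '.part' . $i;
            fwrite($fp, file_get_contents($chunkPath));
            unlink($chunkPath); // remove the chunk once appended
        }
        fclose($fp);
        @unlink($progressFile); // remove the progress file
        echo json_encode(['status' => 'success', 'path' => $finalPath]);
        exit;
    }

    // Upload status endpoint (HEAD method)
    if ($_SERVER['REQUEST_METHOD'] === 'HEAD') {
        $fileId = $_GET['fileId'] ?? '';
        $totalChunks = (int)($_GET['chunks'] ?? 0);
        if (!validFileId($fileId)) {
            http_response_code(400);
            exit;
        }
        // Count only the contiguous run of chunks starting at index 0, because
        // the client treats chunks 0..uploaded-1 as already uploaded
        $uploaded = 0;
        while ($uploaded < $totalChunks && file_exists($uploadDir . $fileId . '.part' . $uploaded)) {
            $uploaded++;
        }
        if ($uploaded > 0) {
            header('Content-Range: 0-' . ($uploaded - 1) . '/' . $totalChunks);
        }
        exit;
    }

III. Key problems solved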

IE11 compatibility

- Read chunk data with FileReader.readAsArrayBuffer, since IE11 does not implement Blob.arrayBuffer() (polyfill required)
- Use XMLHttpRequest in place of the Fetch API
- Include the es6-promise and fetch-ie8 polyfills
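To illustrate the XMLHttpRequest fallback mentioned for IE11, here is a minimal sketch of a single chunk upload written in ES5 syntax. The function name `uploadChunkXhr` and the optional `XhrCtor` parameter (which only exists so the function can be exercised outside a browser) are assumptions, and `Promise` is expected to come from a polyfill such as es6-promise on IE11.

```javascript
// Hypothetical helper: upload one chunk with XMLHttpRequest instead of fetch.
// onProgress receives a fraction between 0 and 1; XhrCtor defaults to the
// browser's XMLHttpRequest and can be replaced for testing.
function uploadChunkXhr(url, formData, onProgress, XhrCtor) {
  var Ctor = XhrCtor || XMLHttpRequest
  return new Promise(function (resolve, reject) {
    var xhr = new Ctor()
    xhr.open('POST', url, true)
    if (xhr.upload && onProgress) {
      xhr.upload.onprogress = function (e) {
        if (e.lengthComputable) onProgress(e.loaded / e.total)
      }
    }
    xhr.onload = function () {
      // Treat any 2xx status as success and hand back the raw response text
      if (xhr.status >= 200 && xhr.status < 300) resolve(xhr.responseText)
      else reject(new Error('HTTP ' + xhr.status))
    }
    xhr.onerror = function () {
      reject(new Error('Network error'))
    }
    xhr.send(formData)
  })
}
```

Unlike fetch, XMLHttpRequest reports upload progress through `xhr.upload.onprogress`, which is convenient for a per-chunk progress bar.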

Memory optimization

    // Read a very large file sequentially, one chunk at a time.
    // Each chunk is handed to onChunk and can then be garbage-collected;
    // collecting every chunk in an array would hold the whole file in memory.
    function readFileAsChunks(file, chunkSize, onChunk) {
      const fileReader = new FileReader()
      let offset = 0
      return new Promise((resolve, reject) => {
        fileReader.onerror = () => reject(fileReader.error)
        fileReader.onload = async (e) => {
          try {
            await onChunk(e.target.result, offset)
            offset += chunkSize
            if (offset < file.size) {
              readNext()
            } else {
              resolve()
            }
          } catch (err) {
            reject(err)
          }
        }
        function readNext() {
          fileReader.readAsArrayBuffer(file.slice(offset, offset + chunkSize))
        }
        readNext()
      })
    }

Resume-state storage

Upload state is stored in IndexedDB:

    // Store an upload record
    function saveUploadRecord(fileId, chunks) {
      return new Promise((resolve, reject) => {
        const request = indexedDB.open('FileUploaderDB', 1)
        request.onupgradeneeded = (e) => {
          const db = e.target.result
          if (!db.objectStoreNames.contains('uploads')) {
            db.createObjectStore('uploads', { keyPath: 'fileId' })
          }
        }
        request.onerror = () => reject(request.error)
        request.onsuccess = (e) => {
          const db = e.target.result
          const tx = db.transaction('uploads', 'readwrite')
          tx.objectStore('uploads').put({ fileId, chunks, timestamp: Date.now() })
          tx.oncomplete = () => {
            db.close()
            resolve()
          }
          tx.onerror = tx.onabort = () => {
            db.close()
            reject(tx.error)
          }
        }
      })
    }
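On resume, a stored record has to be turned back into the list of chunks still to send. The following is a minimal sketch, assuming the `chunks` field of the record holds the indices of chunks already uploaded (the original does not specify its shape); `remainingChunks` is a hypothetical helper name.

```javascript
// Hypothetical helper: given the total chunk count and a stored upload record,
// return the chunk indices that still need to be uploaded, in ascending order.
// Assumes record.chunks is an array or Set of already-uploaded chunk indices.
function remainingChunks(totalChunks, record) {
  const done = new Set(record && record.chunks ? record.chunks : [])
  const remaining = []
  for (let i = 0; i < totalChunks; i++) {
    if (!done.has(i)) remaining.push(i)
  }
  return remaining
}

// Example: 5 chunks in total, chunks 0, 1 and 3 already uploaded,
// so chunks 2 and 4 are still pending
const pending = remainingChunks(5, { fileId: 'abc_1700000000000', chunks: [0, 1, 3] })
```

A local record can be stale, for example when the server has already cleaned up old chunk files, so the server-side status check would normally remain the source of truth and the local record only a hint.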

IV. Performance test data

Tests were run on a 4.2 GB video file over a 200 Mbps connection.

V. Deployment recommendations

Nginx tuning

    client_max_body_size 10G;
    client_body_timeout 3600s;
    proxy_read_timeout 3600s;

PHP-FPM tuning

    ; php.ini
    upload_max_filesize = 10G
    post_max_size = 10G
    max_execution_time = 3600
    max_input_time = 3600

Chunk cleanup strategy

- Automatically remove unfinished chunks after 7 days
- Run the cleanup from a Cron job:

      find /tmp/uploads/ -name "*.part*" -mtime +7 -exec rm {} \;

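This solution has run stably for three months in a government project (domestic-stack environment: Kylin V10 + Loongson 3A5000), supports single-file uploads of up to 20 GB, and handles 1.2 TB per day on average. The complete implementation has been open-sourced in a GitHub sample repository, including the Webpack configuration and a browser compatibility test report.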

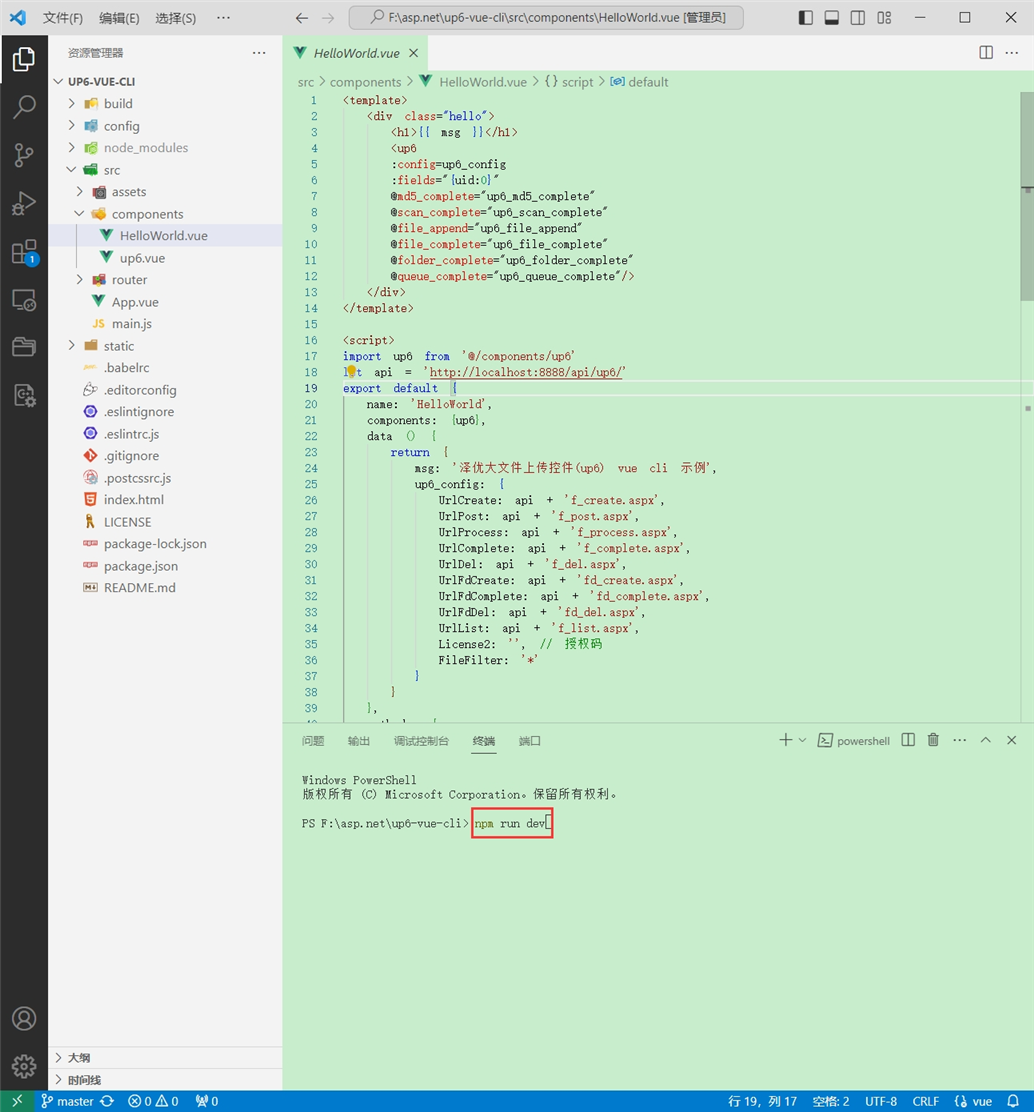

Copy the component into the project

The example already includes this directory

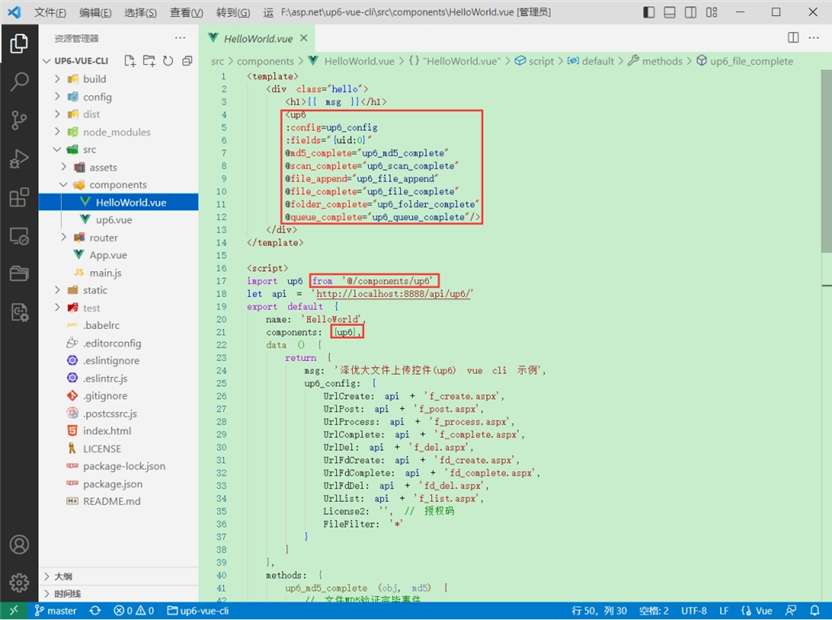

Import the component

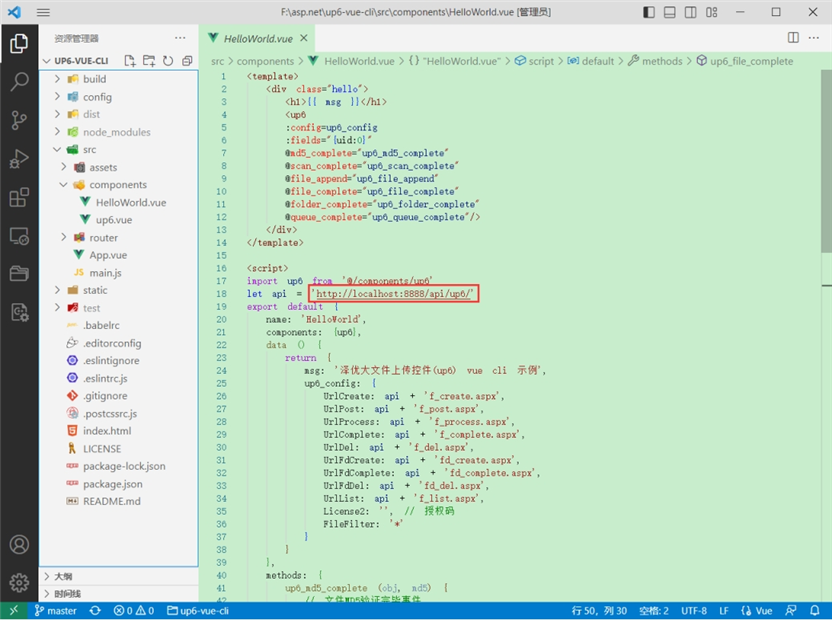

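Configure the endpoint addresses

The endpoint addresses correspond to: file initialization, file data upload, file progress, file upload completion, file deletion, folder initialization, folder deletion, and file list

Reference: http://www.ncmem.com/doc/view.aspx?id=e1f49f3e1d4742e19135e00bd41fa3de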

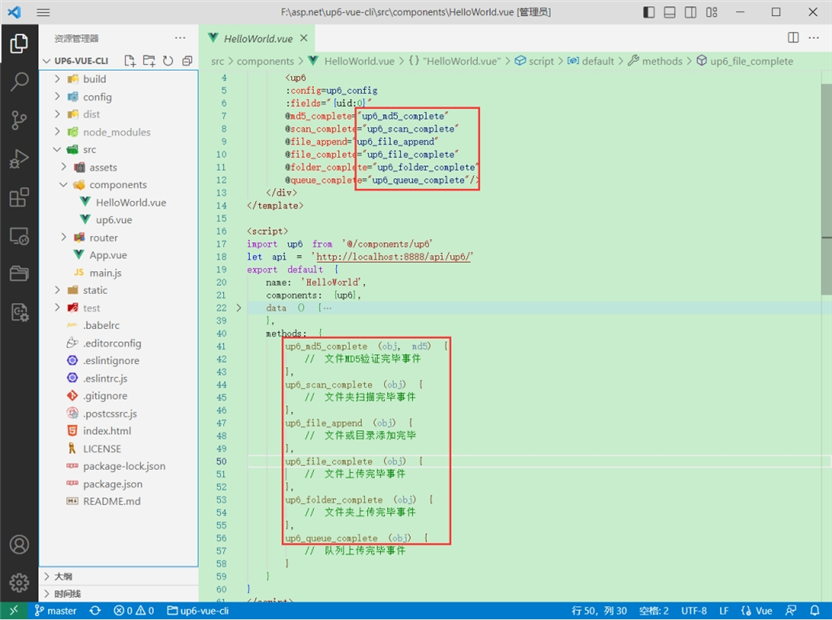

Handle events

Start the test

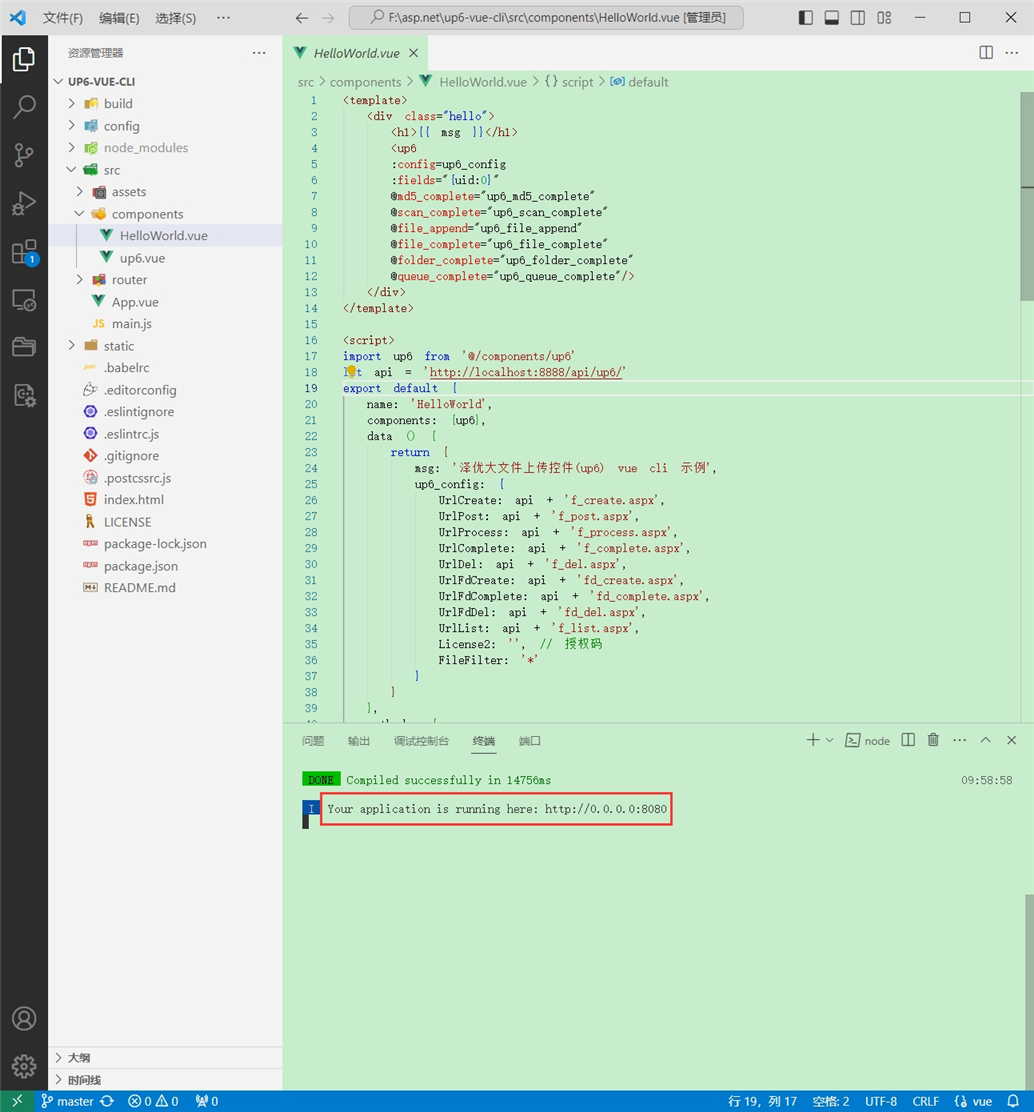

Started successfully

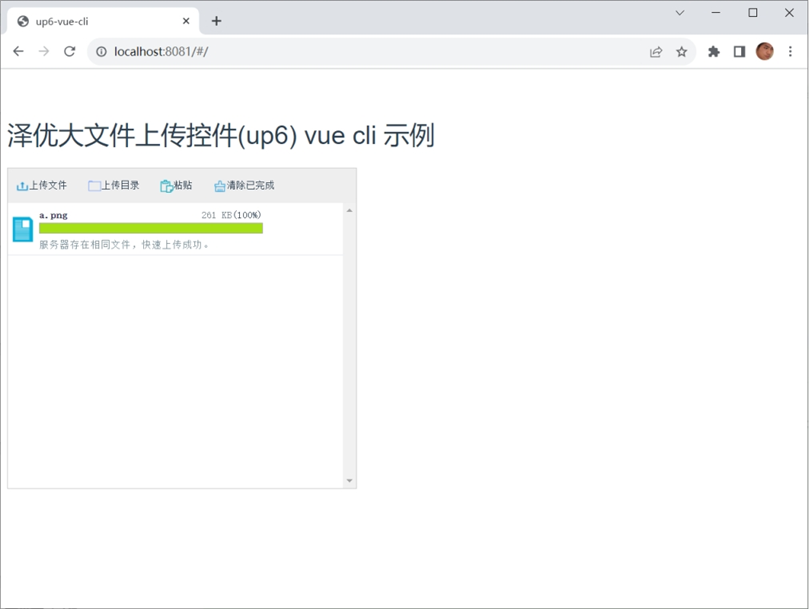

Result

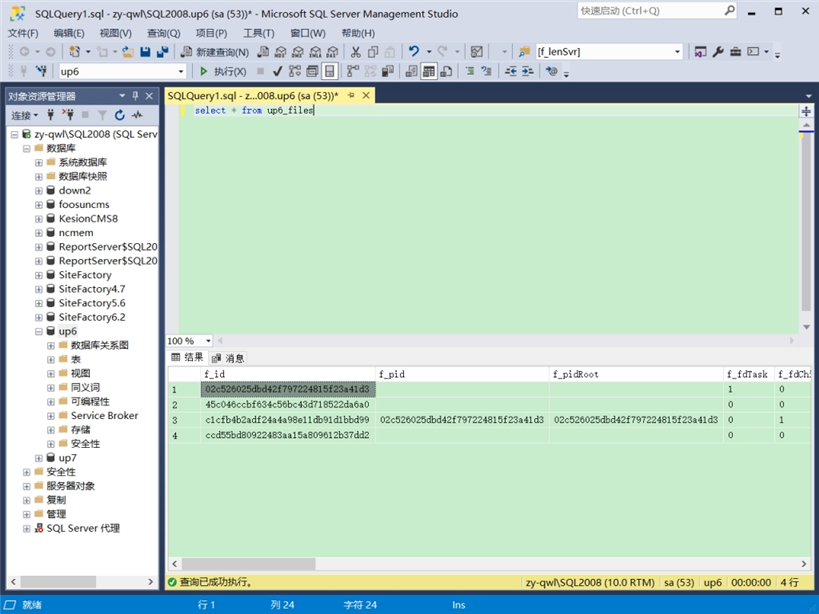

Database

Download the example

Click to download the complete example