Code example: calling an MCP service from Python with an LLM

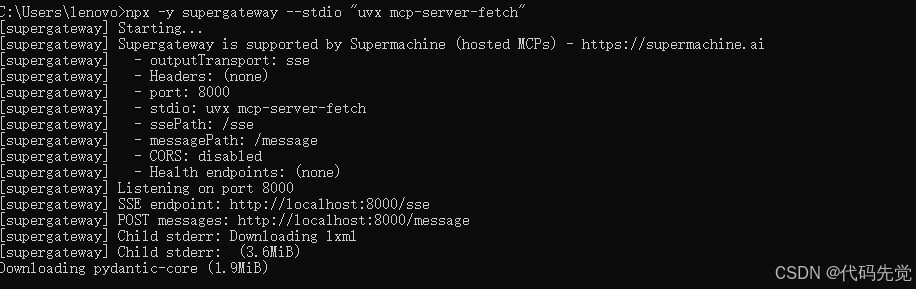

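Start the MCP service. Web page fetching is used as the example here: supergateway runs the stdio-based `mcp-server-fetch` server and exposes it over SSE, by default at `http://localhost:8000/sse`.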

```bash
npx -y supergateway --stdio "uvx mcp-server-fetch"
```
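What the client actually talks to: in MCP's HTTP+SSE transport, the client opens a long-lived GET on the SSE URL, and the server's first event, named `endpoint`, carries the URL the client must POST its JSON-RPC messages to. The stdlib-only sketch below parses such a stream and resolves that URL. `parse_sse_events` and `find_message_endpoint` are hypothetical helpers (the `mcp` SDK's `sse_client` does this for you), and the sample payload and session id are made up for illustration.

```python
from typing import Iterator, Tuple
from urllib.parse import urljoin


def parse_sse_events(stream: str) -> Iterator[Tuple[str, str]]:
    """Yield (event, data) pairs from raw Server-Sent Events text."""
    event, data_lines = "message", []
    for line in stream.splitlines():
        if line == "":
            # A blank line dispatches the event collected so far
            if data_lines:
                yield event, "\n".join(data_lines)
            event, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment / keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one leading space after the colon is dropped
            if field == "event":
                event = value
            elif field == "data":
                data_lines.append(value)
    # An event without a terminating blank line is discarded, as in the SSE format


def find_message_endpoint(sse_url: str, stream: str) -> str:
    """Return the absolute POST URL announced by the MCP `endpoint` event."""
    for event, data in parse_sse_events(stream):
        if event == "endpoint":
            return urljoin(sse_url, data)
    raise ValueError("no endpoint event found")


if __name__ == "__main__":
    # Illustrative first bytes of an SSE response; the session id is made up
    sample = "event: endpoint\ndata: /message?sessionId=abc123\n\n"
    print(find_message_endpoint("http://localhost:8000/sse", sample))
```

Running it prints `http://localhost:8000/message?sessionId=abc123`: the relative path from the event is resolved against the SSE URL the client connected to.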

Python code (requires the `mcp` and `openai` packages):

```python
import asyncio
import json
from contextlib import AsyncExitStack
from typing import Optional

from mcp import ClientSession
from mcp.client.sse import sse_client
from openai import AsyncOpenAI


class MCPAgent:
    def __init__(self):
        # Owns the SSE transport and the MCP session so both are closed together
        self.exit_stack = AsyncExitStack()
        self.session: Optional[ClientSession] = None
        # SiliconFlow exposes an OpenAI-compatible API; replace "sk-xxx" with your own key
        self.llm = AsyncOpenAI(
            api_key="sk-xxx",
            base_url="https://api.siliconflow.cn/v1/",
        )

    async def connect_server(self, sse_endpoint: str):
        # sse_client yields a (read_stream, write_stream) pair
        transport = await self.exit_stack.enter_async_context(sse_client(sse_endpoint))
        self.session = await self.exit_stack.enter_async_context(ClientSession(*transport))
        await self.session.initialize()
        print("Available tools:", [t.name for t in (await self.session.list_tools()).tools])

    async def execute_workflow(self, query: str) -> str:
        # Step 1: convert the MCP tool descriptions into OpenAI function-calling format
        tools = [{
            "type": "function",
            "function": {
                "name": t.name,
                "description": t.description or "",
                "parameters": t.inputSchema,
            },
        } for t in (await self.session.list_tools()).tools]

        # Step 2: let the LLM decide whether (and which) tools to call
        chat_completion = await self.llm.chat.completions.create(
            model="deepseek-ai/DeepSeek-V3",  # alternatives: deepseek-ai/DeepSeek-R1, deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
            messages=[{"role": "user", "content": query}],
            tools=tools,
            tool_choice="auto",
        )

        # Step 3: execute the requested tool calls through the MCP session
        if tool_calls := chat_completion.choices[0].message.tool_calls:
            results = []
            for tool_call in tool_calls:
                args = json.loads(tool_call.function.arguments)
                result = await self.session.call_tool(tool_call.function.name, args)
                # Keep only the text parts of the tool result
                text = "\n".join(c.text for c in result.content if c.type == "text")
                print(text)
                print("---------")
                results.append(text)

            # Step 4: send the question plus the tool output back to the LLM for the final answer
            final_response = await self.llm.chat.completions.create(
                model="deepseek-ai/DeepSeek-R1-Distill-Qwen-7B",
                messages=[{
                    "role": "user",
                    # ensure_ascii=False keeps non-ASCII page text readable for the model
                    "content": f"Original question: {query}\nTool results: {json.dumps(results, ensure_ascii=False)}",
                }],
            )
            return final_response.choices[0].message.content

        # No tool call requested: return the model's direct answer
        return chat_completion.choices[0].message.content


async def main():
    agent = MCPAgent()
    try:
        # Connect to the local SSE endpoint exposed by supergateway
        await agent.connect_server("http://localhost:8000/sse")
        # Example query
        response = await agent.execute_workflow(
            "Take a look at this page: https://blog.csdn.net/u010479989/article/details/147422815 and summarize three key points"
        )
        print(f"\nFinal response: {response}")
    finally:
        await agent.exit_stack.aclose()


if __name__ == "__main__":
    asyncio.run(main())
```
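Step 4 hands the tool output back to the model as one concatenated user message. A common alternative with OpenAI-compatible chat APIs is to replay the assistant turn that requested the tools and then add one `role: "tool"` message per call, linked by `tool_call_id`, so the model can tell which result belongs to which call. The stdlib-only sketch below builds that history from plain dicts. `build_followup_messages` is a hypothetical helper, the `fetch` call and its id are illustrative, and whether a given SiliconFlow model accepts this message shape is an assumption to check against its documentation.

```python
import json
from typing import Any, Dict, List


def build_followup_messages(
    query: str,
    tool_calls: List[Dict[str, Any]],
    tool_results: List[str],
) -> List[Dict[str, Any]]:
    """Build a chat history pairing each tool call with its result.

    tool_calls are plain dicts shaped like OpenAI's
    {"id": ..., "type": "function", "function": {"name": ..., "arguments": ...}}.
    """
    if len(tool_calls) != len(tool_results):
        raise ValueError("each tool call needs exactly one result")
    messages: List[Dict[str, Any]] = [
        {"role": "user", "content": query},
        # Replay the assistant turn that requested the tools
        {"role": "assistant", "content": None, "tool_calls": tool_calls},
    ]
    for call, result in zip(tool_calls, tool_results):
        # One "tool" message per call, linked back by tool_call_id
        messages.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    return messages


if __name__ == "__main__":
    calls = [{
        "id": "call_1",  # hypothetical id; real ids come from the LLM response
        "type": "function",
        "function": {"name": "fetch", "arguments": json.dumps({"url": "https://example.com"})},
    }]
    msgs = build_followup_messages("Summarize this page", calls, ["<page text>"])
    print(json.dumps(msgs, indent=2))
```

Inside `execute_workflow`, the SDK tool-call objects could be converted with `tool_call.model_dump()` before being passed in, and the returned list would replace the single user message in the second `chat.completions.create` call.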

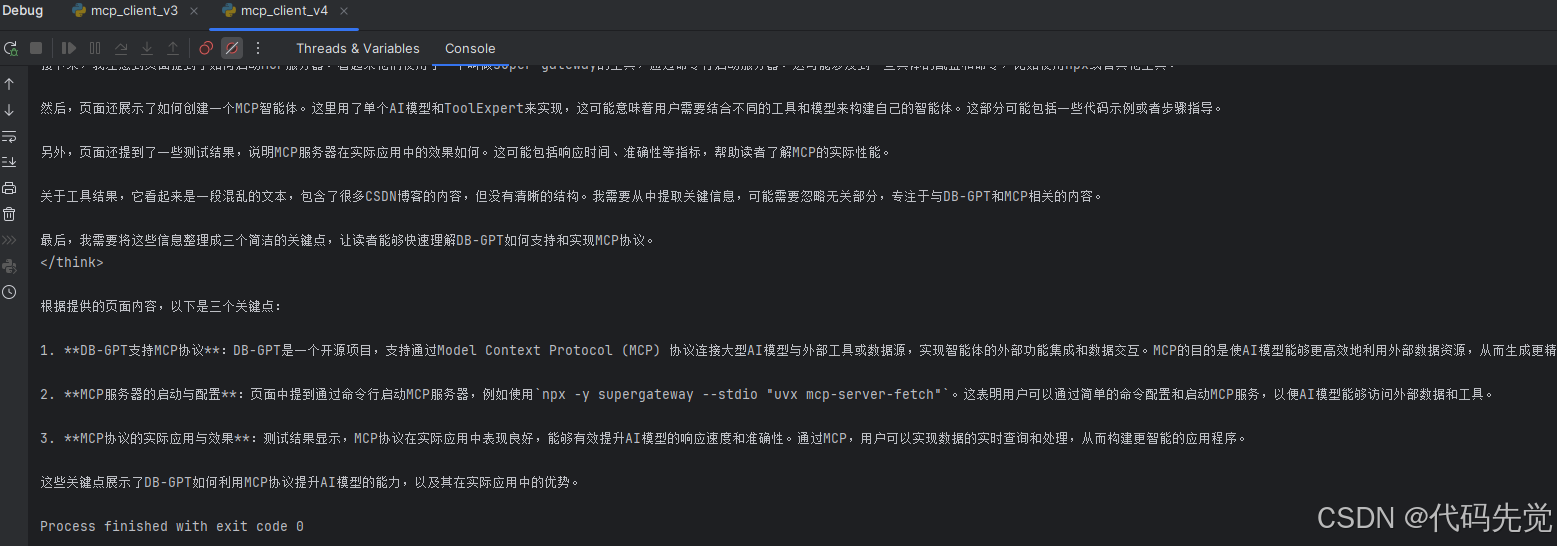

Execution result